AI and Cybersecurity in Finance: A Modern Defense Guide

Discover how AI and cybersecurity in finance are transforming threat detection. Our guide explains how to build a smarter, more secure financial future.

Financial institutions are in a constant battle with cyber threats, and the rulebook is changing. The old-school, reactive approach to security just can't keep up with the smart, fast, and often AI-powered attacks we're seeing today. It's time to stop just responding to breaches and start getting ahead of them. This is precisely where AI and cybersecurity in finance come together.

Why Traditional Security Is No Longer Enough

For years, the financial sector built its defences like a fortress. The strategy was to create a strong perimeter using firewalls, antivirus software, and intrusion detection systems that worked off a set of predefined rules. This worked well enough when the threats were predictable and followed familiar patterns.

Think of it like a security guard with a list of known troublemakers. As long as the threat is on the list, you're safe.

The problem is, today's cybercriminals are far more creative and move at lightning speed. They often use AI themselves to produce entirely new strains of malware and to exploit previously undisclosed flaws, the so-called "zero-day" attacks for which no fix or signature exists yet. These slip right past traditional defences because they don't match any known signature. This leaves security teams perpetually playing catch-up, only fixing a hole after someone has already broken in.

The Speed and Scale Mismatch

The sheer volume of data is another huge problem. A major bank might handle millions of transactions and log trillions of network events every single day. No human team, no matter how skilled, can possibly sift through that ocean of information in real time to spot a threat.

This creates a dangerous gap where sophisticated, quiet attacks can go unnoticed for weeks, or even months.

The core failure of traditional security lies in its inability to match the speed and scale of modern cyber threats. It's like trying to catch raindrops with a thimble during a hurricane—the tools are simply not designed for the volume of the problem.

This table breaks down how legacy security stacks up against a modern, AI-driven approach.

Traditional vs AI-Driven Cybersecurity in Finance

| Capability | Traditional Cybersecurity | AI-Driven Cybersecurity |
|---|---|---|
| Threat Detection | Based on known signatures and predefined rules. Struggles with new or "zero-day" attacks. | Uses behavioural analysis and anomaly detection to identify novel and unknown threats. |
| Response Time | Slow and manual. Often requires human intervention, leading to delays. | Automated and instant. Can isolate threats and initiate countermeasures in real time. |
| Data Analysis | Limited capacity. Human analysts are easily overwhelmed by the volume of alerts and data. | Can process and analyse massive datasets (petabytes) in seconds to find subtle patterns. |
| Adaptability | Static. Rules must be manually updated to address new threats. | Self-learning and dynamic. Continuously adapts its understanding of "normal" to improve accuracy. |
| False Positives | High. Rigid rules often flag legitimate activities, creating "alert fatigue" for security teams. | Significantly lower. Differentiates between genuine threats and benign anomalies more effectively. |

It's clear that while traditional methods laid the groundwork, they are simply outmatched by the complexity and pace of today's threats.

For a deeper dive into how these approaches differ, especially when it comes to testing your defences, it's worth understanding the differences between vulnerability scanning, AI pentesting, and manual pentesting. The comparison really drives home why a strategic shift is necessary.

From Reaction to Prediction

This is where AI completely flips the script. Instead of just looking for known bad guys, AI systems learn what normal, everyday activity looks like across your entire network. They sift through mountains of data to establish a baseline, and then they flag any deviation, no matter how tiny.

This move from a reactive posture to a predictive one is the key. It's about spotting the trouble before it starts.

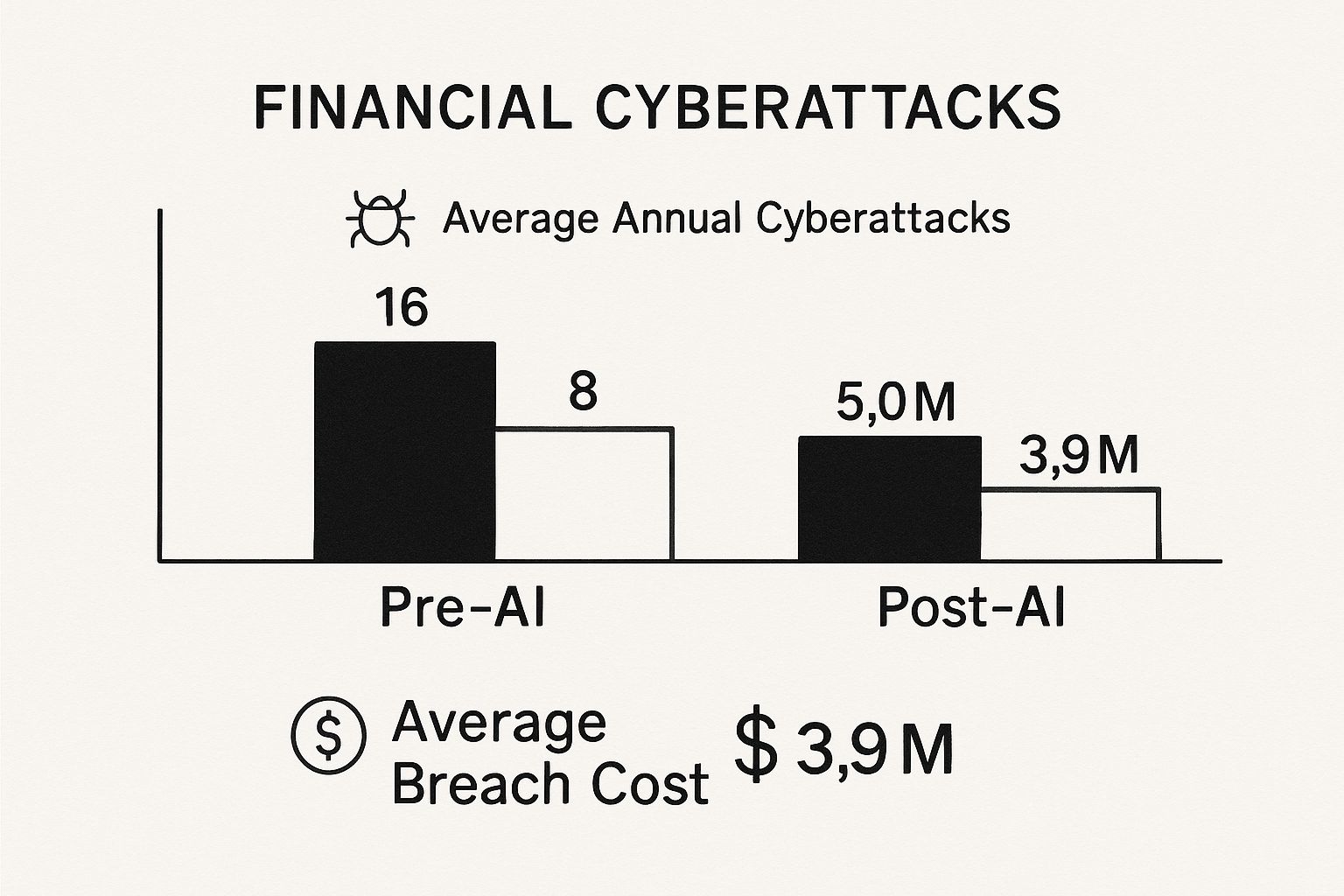

The image above gives a stark visual of how attack frequency and breach costs can change after bringing AI-driven security into the fold.

The numbers speak for themselves. Adopting AI defences doesn't just block more attacks; it dramatically reduces the financial fallout when a breach does manage to get through. It’s a powerful argument for leaving outdated security models behind.

How AI Is Reshaping Threat Detection

Artificial intelligence is completely changing how financial institutions spot threats. Think of it as a tireless digital detective, but one that operates on a scale no human team ever could. The real magic lies in its ability to learn what’s normal, which makes anything out of the ordinary stick out like a sore thumb.

This is where machine learning (ML) algorithms shine. These models sift through mountains of data from across a bank's network—every transaction, login, file transfer, and server request. From this constant stream of information, they build a dynamic, high-fidelity baseline of "normal" activity. It's like an AI that has memorised the unique rhythm and pulse of your entire digital operation.

The moment something deviates from this established pattern, even slightly, the system flags it. This could be a user logging in from an unfamiliar country at 3 a.m. or a server trying to connect to a strange external address. These are the subtle breadcrumbs a human analyst, drowning in millions of daily alerts, could easily miss. But for an AI, they’re glaring red flags.
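To make the baseline-and-deviation idea concrete, here is a minimal sketch that assumes a single numeric signal (outbound connections per hour from one server) and a simple z-score test. Real systems model many signals at once; the sample data, the function name `is_anomalous`, and the threshold of three standard deviations are all illustrative assumptions.

```python
from statistics import mean, stdev

def is_anomalous(history, value, threshold=3.0):
    """Flag `value` if it sits more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical baseline: outbound connections per hour for one server.
baseline = [40, 42, 38, 41, 39, 43, 40, 37, 41, 39]

print(is_anomalous(baseline, 44))   # False: within normal variation
print(is_anomalous(baseline, 400))  # True: far outside the baseline
```

The point of the sketch is that nothing here lists "known bad" values. The flag comes purely from how far the new observation sits from what has been seen before.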

Moving Beyond Rules with Behavioural Analytics

This approach takes security from a static, rule-based checklist to a dynamic, context-aware defence. AI-powered behavioural analytics create a profile for the typical actions of every user and device. By deeply understanding how an employee usually interacts with systems and data, the AI can spot insider threats or compromised accounts with incredible accuracy.

For example, imagine an accountant who typically views customer reports between 9 a.m. and 5 p.m. suddenly starts downloading massive volumes of sensitive client files in the middle of the night. The AI immediately recognises this as a critical anomaly. This kind of proactive alert happens long before any real damage can be done, adding a vital layer of internal protection.
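The accountant scenario can be sketched as a check against a per-user profile. This is a simplified illustration, not a production design: the `UserProfile` fields, the `deviations` function, and the "ten times the usual volume" factor are hypothetical, and a real system would learn the profile from historical activity rather than hard-code it.

```python
from dataclasses import dataclass

@dataclass
class UserProfile:
    """Hypothetical per-user baseline learned from past activity."""
    usual_start_hour: int     # earliest typical hour of activity (24h clock)
    usual_end_hour: int       # hour at which typical activity ends
    typical_daily_mb: float   # typical volume of data downloaded per day

def deviations(profile, hour, downloaded_mb, volume_factor=10.0):
    """Return the ways in which an event departs from the user's profile."""
    reasons = []
    if not (profile.usual_start_hour <= hour < profile.usual_end_hour):
        reasons.append("outside usual hours")
    if downloaded_mb > volume_factor * profile.typical_daily_mb:
        reasons.append("unusually large download")
    return reasons

accountant = UserProfile(usual_start_hour=9, usual_end_hour=17, typical_daily_mb=50.0)

print(deviations(accountant, hour=14, downloaded_mb=30.0))    # []
print(deviations(accountant, hour=2, downloaded_mb=4000.0))   # both reasons listed
```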

Natural Language Processing (NLP) is another powerful tool in the arsenal, especially for dissecting text-based threats. NLP algorithms can analyse the language, tone, and structure of emails to identify sophisticated phishing attempts far more effectively than simple keyword filters. For a deeper dive into this, it's worth exploring the pivotal role of Artificial Intelligence in enhancing cybersecurity. This automated vetting creates a powerful shield against social engineering.
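As a rough illustration of how a model can learn from language rather than rely on a fixed keyword list, the sketch below trains a tiny bag-of-words naive Bayes classifier. It is a toy under stated assumptions: the six training emails are invented, the labels `phish` and `ham` are arbitrary, and production phishing filters use far richer models and features than word counts.

```python
import math
from collections import Counter

def train(examples):
    """Count words per label from (text, label) pairs."""
    word_counts = {"phish": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        doc_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, doc_counts

def classify(text, word_counts, doc_counts):
    """Return the label with the highest log-probability under naive Bayes."""
    vocab = set(word_counts["phish"]) | set(word_counts["ham"])
    best_label, best_score = None, -math.inf
    for label in word_counts:
        total_words = sum(word_counts[label].values())
        score = math.log(doc_counts[label] / sum(doc_counts.values()))
        for word in text.lower().split():
            # Laplace smoothing so an unseen word does not zero out the probability.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented training examples, for illustration only.
examples = [
    ("urgent verify your account now", "phish"),
    ("your account is suspended click here", "phish"),
    ("confirm your password immediately", "phish"),
    ("meeting agenda for monday", "ham"),
    ("quarterly report attached for review", "ham"),
    ("lunch on friday", "ham"),
]
word_counts, doc_counts = train(examples)

print(classify("please verify your password now", word_counts, doc_counts))  # phish
print(classify("agenda for friday lunch", word_counts, doc_counts))          # ham
```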

The Power of Automation at Scale

When you combine these AI capabilities, you get a level of defence that is both incredibly smart and massively scalable. Instead of relying on human teams to manually connect the dots between disparate alerts, financial institutions can now deploy systems that work around the clock, correlating every potential clue simultaneously.

AI in cybersecurity is like having a team of a million analysts working 24/7. It doesn’t just find the needle in the haystack; it watches every single piece of straw in real time.

This shift is especially urgent in the UK, a major global financial centre. The financial services sector here is a prime target for cybercriminals, with around 75% of UK organisations reporting at least one cyber-attack in the past year. This has rightly prompted a surge in government investment to support the integration of AI for better threat intelligence and faster response times.

Strengthening identity verification is a key part of this defence. Advanced AI tools for business like Verifai are built to tackle this challenge head-on, using AI to automate and secure document and identity checks. By locking down these fundamental processes, financial institutions can build a much safer and more trustworthy foundation for everything they do.

AI in Action: Real-World Financial Security Examples

It's one thing to talk about theory, but it’s on the front lines where you really see how AI and cybersecurity in finance come together. These aren't just abstract ideas; they're active, tangible solutions defending institutions against real threats, every single second. Seeing AI in action truly brings its value into focus.

Think about a major bank processing millions of online transactions every hour. A skilled team of human analysts, no matter how dedicated, could only ever review a tiny fraction of that volume. This is where AI steps in, acting as a super-powered fraud detection engine that never sleeps.

An AI system can analyse every single transaction in real time. It cross-references thousands of data points—transaction amount, location, time, recipient history, and the user's typical behaviour. It might spot a £5,000 payment to a new recipient in a different country at 2 a.m. from an account that normally only makes small, local purchases during business hours. Instantly, the AI flags the transaction, blocks the payment, and alerts the account holder, stopping fraud before a single penny is lost.
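Here is one way the scenario above could be scored, purely as a sketch. The weights, the `BLOCK_THRESHOLD` of 70, and the class and field names are assumptions chosen for readability. A real fraud engine would learn these relationships from labelled data rather than use hand-picked points.

```python
from dataclasses import dataclass

@dataclass
class Transaction:
    amount: float
    recipient: str
    country: str
    hour: int

@dataclass
class AccountHistory:
    typical_amount: float
    known_recipients: set
    home_country: str
    active_hours: range

BLOCK_THRESHOLD = 70  # hypothetical cut-off for blocking a payment

def risk_score(tx, history):
    """Add up points for each way the transaction departs from the account's history."""
    score = 0
    if tx.amount > 10 * history.typical_amount:
        score += 40
    if tx.recipient not in history.known_recipients:
        score += 25
    if tx.country != history.home_country:
        score += 20
    if tx.hour not in history.active_hours:
        score += 15
    return score

history = AccountHistory(
    typical_amount=30.0,
    known_recipients={"grocer", "landlord"},
    home_country="GB",
    active_hours=range(8, 20),
)

routine = Transaction(amount=25.0, recipient="grocer", country="GB", hour=13)
suspicious = Transaction(amount=5000.0, recipient="new-payee", country="FR", hour=2)

print(risk_score(routine, history))                         # 0
print(risk_score(suspicious, history) >= BLOCK_THRESHOLD)   # True
```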

Unravelling Complex AML Schemes

Anti-Money Laundering (AML) is another area where AI is a complete game-changer. Criminals often try to hide illicit funds by funnelling them through a complex web of shell companies and multiple bank accounts across different countries. Manually tracing these connections is a painstaking process that can take a team of investigators months, if not years.

AI, on the other hand, can digest and connect vast, unstructured datasets from global sources. It sifts through news articles, sanctions lists, corporate ownership records, and transaction logs to identify hidden relationships and suspicious patterns that are practically invisible to the human eye.

Imagine an AI model detecting a network of seemingly unrelated small businesses making regular, coordinated payments to an offshore account. By connecting these subtle dots, the system could unravel a sophisticated money laundering ring that would have otherwise gone completely unnoticed.
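The pattern described above, many unrelated payers repeatedly sending money to one destination, can be sketched as a simple fan-in count over a payment list. The business names, the `coordinated_payers` function, and both thresholds are hypothetical. Genuine AML systems work on far larger graphs and weigh amounts, timing, and ownership data as well.

```python
from collections import defaultdict

def coordinated_payers(payments, min_payers=3, min_payments_each=3):
    """Find destinations that receive repeated payments from several distinct payers."""
    counts = defaultdict(lambda: defaultdict(int))  # destination -> payer -> payment count
    for payer, destination in payments:
        counts[destination][payer] += 1
    flagged = {}
    for destination, payers in counts.items():
        regular = sorted(p for p, n in payers.items() if n >= min_payments_each)
        if len(regular) >= min_payers:
            flagged[destination] = regular
    return flagged

# Invented payment records: (payer, destination account).
payments = (
    [(p, "offshore-1") for p in ("cafe", "florist", "garage") for _ in range(3)]
    + [("cafe", "supplier-a")] * 3
    + [("florist", "supplier-b")]
)

print(coordinated_payers(payments))
# {'offshore-1': ['cafe', 'florist', 'garage']}
```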

This kind of proactive discovery is a huge leap forward from the traditional, reactive approach to AML compliance. Exploring other real-world use cases shows just how versatile these applications are across different financial operations. Integrating these advanced systems often requires specialised internal tooling to securely connect the AI models with existing banking infrastructure.

Predictive Threat Intelligence: Forecasting Attacks

Perhaps one of AI's most powerful applications is in predictive threat intelligence. Instead of just reacting to attacks as they happen, AI can act as an early warning system, forecasting future threats before they even materialise.

AI models analyse chatter on dark web forums, monitor new malware signatures emerging globally, and track the tactics used by known hacking groups. By piecing together these fragments of information, the AI can predict which attack vectors are likely to be aimed at the financial sector next.

For example, an AI might detect a surge in discussions about a specific vulnerability in a widely used piece of banking software. It can then alert security teams to patch their systems before criminals have a chance to exploit it. This predictive capability turns the tables on attackers, allowing institutions to reinforce their defences proactively. For many organisations, developing this foresight starts with a clear plan, which is where effective AI strategy consulting becomes invaluable.
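A surge in discussion can be approximated by comparing mention counts across two time windows. The sketch below assumes the counts have already been collected. The term names, the `surging_terms` function, and the thresholds (at least 20 mentions and roughly threefold growth) are illustrative assumptions rather than an established method.

```python
def surging_terms(previous_counts, current_counts, min_mentions=20, growth_factor=3.0):
    """Return terms whose mention count is both high and sharply up on the previous window."""
    surging = []
    for term, now in current_counts.items():
        before = previous_counts.get(term, 0)
        # Adding 1 avoids division by zero for terms not seen in the previous window.
        if now >= min_mentions and now / (before + 1) >= growth_factor:
            surging.append(term)
    return sorted(surging)

# Hypothetical weekly mention counts for three vulnerabilities.
last_week = {"vuln-A": 4, "vuln-B": 30, "vuln-C": 2}
this_week = {"vuln-A": 45, "vuln-B": 33, "vuln-C": 5}

print(surging_terms(last_week, this_week))  # ['vuln-A']
```

Only `vuln-A` is flagged: `vuln-B` is widely discussed but flat, and `vuln-C` has grown but is still too quiet to matter.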

Ultimately, these examples show a fundamental shift in thinking. AI isn't just another tool; it’s a strategic partner that gives financial institutions the power to operate with greater security, efficiency, and confidence. To see how these opportunities apply to your own organisation, you can explore what's possible with a powerful AI Strategy consulting tool.

Navigating Compliance and the Regulatory Landscape

Rolling out powerful AI security systems isn't just a technical challenge; it throws you into a complex web of regulatory duties. For any UK financial institution, this means walking a tightrope between deploying state-of-the-art defences and staying on the right side of bodies like the Financial Conduct Authority (FCA). It’s not about ticking boxes. It’s about maintaining trust.

The real headache often comes from the very nature of advanced AI. Some models work in ways that are difficult for humans to interpret, leading to the infamous "black box" problem. Regulators, auditors, and even your own team need to know why an AI system flagged a transaction or blocked a customer's account. If the model can't explain itself, you’re looking at a serious compliance risk.

This is precisely why Explainable AI (XAI) has gone from a niche academic concept to a must-have. Your models need to be transparent and auditable, ensuring every decision can be traced back and justified to anyone who needs to know—be it a regulator, a customer, or a board member.
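One simple way to make a decision traceable is to use a model whose score breaks down into per-feature contributions. The sketch below assumes a linear scoring model with hand-set weights. The feature names, weights, and the `explain` function are hypothetical, and more complex models need dedicated explanation techniques that this example does not cover.

```python
def explain(weights, features, top_n=2):
    """Return the overall score and the features that contributed most to it."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = sum(contributions.values())
    top = sorted(contributions, key=lambda n: abs(contributions[n]), reverse=True)[:top_n]
    return score, top

# Hypothetical weights and one flagged transaction's feature values.
weights = {"new_recipient": 2.0, "foreign_country": 1.5, "night_time": 1.0, "amount_ratio": 0.5}
features = {"new_recipient": 1, "foreign_country": 1, "night_time": 1, "amount_ratio": 20}

score, reasons = explain(weights, features)
print(score)    # 14.5
print(reasons)  # ['amount_ratio', 'new_recipient']
```

The `reasons` list is the kind of record an auditor or customer-facing team can use to answer the question "why was this flagged?".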

The UK's Pioneering AI Cybersecurity Standard

The UK has stepped up to this challenge with a groundbreaking AI cybersecurity standard, a world first designed to secure the digital economy. This voluntary code of practice lays out 13 core principles to guide organisations in protecting their AI systems from sophisticated attacks and manipulation. Given that nearly 75% of UK organisations report facing cyber-attacks and that the AI sector pulled in £14.2 billion in revenue, this standard is a critical piece of the puzzle. It offers a framework for innovating responsibly.

For financial firms, this guidance is invaluable. It provides a roadmap for adopting AI with confidence, ensuring systems are not just effective but also built on a solid foundation of security and accountability.

Data Privacy in the Age of AI

AI security systems are data-hungry, but their diet is strictly controlled by regulations like the General Data Protection Regulation (GDPR). Financial institutions have to be incredibly careful that the huge datasets used to train and run their AI models are handled in full compliance with these laws.

A few key principles are non-negotiable:

- Data Minimisation: Only collect and use the absolute minimum data required for the security task.

- Purpose Limitation: Data gathered for fraud detection can't suddenly be repurposed for a marketing campaign unless there is a valid legal basis for the new purpose, such as explicit consent.

- Anonymisation and Pseudonymisation: Whenever possible, strip out or obscure personal details to protect individual identities.

Getting this wrong can result in eye-watering fines and a catastrophic loss of customer trust. Data handling protocols must be woven into the very fabric of your AI security strategy, a principle we outline in our own privacy policy.
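The minimisation and pseudonymisation principles listed above can be sketched in a few lines. This is an illustration only: the field names, the `ALLOWED_FIELDS` set, and the demo key are assumptions, and in practice the key would come from a managed secret store and the approach would be reviewed against your own legal advice.

```python
import hashlib
import hmac

# Hypothetical list of fields the fraud model actually needs.
ALLOWED_FIELDS = {"amount", "country", "hour"}

def pseudonymise(value, key):
    """Replace an identifier with a keyed hash so records stay linkable but not readable."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def minimise(record, key):
    """Keep only the allowed fields and swap the customer ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["customer_ref"] = pseudonymise(record["customer_id"], key)
    return reduced

demo_key = b"demo-key-only"  # assumption: a real key would never be hard-coded

record = {
    "customer_id": "C-1001",
    "name": "A. Customer",
    "email": "a.customer@example.com",
    "amount": 125.0,
    "country": "GB",
    "hour": 14,
}

safe_record = minimise(record, demo_key)
print(sorted(safe_record))  # ['amount', 'country', 'customer_ref', 'hour']
```

Using a keyed hash rather than a plain hash means someone without the key cannot rebuild the mapping simply by hashing guessed customer IDs.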

Building Compliance in from Day One

Trying to bolt on compliance after the fact is a recipe for disaster. To navigate this landscape successfully, you have to bake regulatory requirements into the AI development process right from the start. This makes your security solutions compliant by design, not by frantic, last-minute fixes.

Adopting a 'compliance-by-design' mindset means treating regulatory adherence as a core feature of your AI security system, not as an afterthought. It shifts the focus from fixing problems to preventing them entirely.

This journey begins with a detailed AI requirements analysis that maps out not just technical specifications but all legal and regulatory duties. By embedding compliance into the initial design—and checking in at every stage of development—financial institutions can build AI security systems that are powerful, effective, and ready to stand up to any scrutiny.

Building Your AI-Powered Cybersecurity Roadmap

Moving to an AI-driven security model isn't about buying a single piece of software; it's a strategic journey. It requires a clear, step-by-step framework that builds momentum and shows its worth along the way. A solid roadmap is what turns the abstract idea of AI and cybersecurity in finance into a concrete plan for a more secure future.

The first step, always, is to take an honest look at where you stand right now. You can't plan the route if you don't know your starting point. This means identifying your most critical assets, mapping out how attackers might get in, and pinpointing the vulnerabilities that pose the biggest threat to your organisation. This foundational analysis will tell you exactly where AI can make the most immediate and meaningful impact.

Phase 1: Laying the Groundwork

Before you can even think about deploying sophisticated algorithms, you have to get your data in order. AI models are only ever as good as the data they learn from, so setting up clean, accessible, and well-managed data pipelines is non-negotiable. This phase is all about breaking down data silos and making sure your security information and event management (SIEM) systems are collecting high-quality logs.

Once the data is flowing, you need to pick the right tools for the job. Not all AI solutions are created equal. The market is full of options designed for specific tasks like anomaly detection, phishing prevention, or behavioural analytics. The trick is to match the tool to the specific weaknesses you uncovered in your initial assessment.

Phase 2: Proving Value with Pilot Projects

Instead of attempting a massive, company-wide overhaul from the get-go, smart leaders start small with targeted pilot projects. These are small-scale initiatives designed to prove the value of AI quickly and with minimal risk. A pilot might focus on a single, high-impact area, like automating the initial review of security alerts to give your analysts a break from the noise.

The goal here is to get a measurable win. A successful pilot builds crucial momentum, helps get key stakeholders on board, and teaches you valuable lessons you can apply when you're ready to expand.
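An alert-triage pilot of the kind described above might start with something as modest as collapsing duplicates and ranking what remains. The sketch below assumes alerts arrive as dictionaries with `rule`, `host`, and `severity` keys. Those keys, the host names, and the criticality scores are hypothetical.

```python
def triage(alerts, asset_criticality):
    """Collapse duplicate alerts and rank what is left by severity times asset criticality."""
    grouped = {}
    for alert in alerts:
        key = (alert["rule"], alert["host"])
        entry = grouped.setdefault(
            key, {"rule": alert["rule"], "host": alert["host"], "count": 0, "severity": 0}
        )
        entry["count"] += 1
        entry["severity"] = max(entry["severity"], alert["severity"])
    for entry in grouped.values():
        # Hosts without a criticality rating default to 1.
        entry["priority"] = entry["severity"] * asset_criticality.get(entry["host"], 1)
    return sorted(grouped.values(), key=lambda e: e["priority"], reverse=True)

# Invented alerts for illustration.
alerts = [
    {"rule": "port-scan", "host": "laptop-17", "severity": 2},
    {"rule": "port-scan", "host": "laptop-17", "severity": 2},
    {"rule": "port-scan", "host": "laptop-17", "severity": 2},
    {"rule": "unusual-login", "host": "payments-db", "severity": 4},
    {"rule": "malware-signature", "host": "laptop-17", "severity": 5},
]
criticality = {"payments-db": 5, "laptop-17": 1}

queue = triage(alerts, criticality)
print([(e["rule"], e["priority"]) for e in queue])
# [('unusual-login', 20), ('malware-signature', 5), ('port-scan', 2)]
```

Five raw alerts become three ranked items, which is the sort of measurable reduction in noise a pilot can report back to stakeholders.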

Phase 3: Integration and Scaling

With a successful pilot or two under your belt, the next step is to weave these new AI capabilities into your core security operations. This means connecting your new AI tools with existing platforms like your SIEM and Security Orchestration, Automation, and Response (SOAR) systems. This integration is what unlocks genuine automation, allowing the AI to not just detect threats but also trigger an immediate, automated response.
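The detect-then-respond hand-off can be pictured as a lookup from detection type to playbook, with anything uncertain sent to a person. This sketch does not use any real SOAR product's API: the `PLAYBOOKS` mapping, the function names, and the 0.9 confidence floor are all assumptions, and the playbook functions only return a description of the action they would take.

```python
def isolate_host(detection):
    # Placeholder: a real playbook would call the endpoint tool's API here.
    return f"isolated {detection['host']}"

def disable_account(detection):
    # Placeholder: a real playbook would call the identity provider here.
    return f"disabled {detection['user']}"

PLAYBOOKS = {"malware": isolate_host, "credential_theft": disable_account}

def respond(detection, min_confidence=0.9):
    """Run the matching playbook, or hand over to a human if unsure."""
    action = PLAYBOOKS.get(detection["type"])
    if action is None or detection["confidence"] < min_confidence:
        return "escalated to analyst"
    return action(detection)

print(respond({"type": "malware", "host": "atm-gw-02", "confidence": 0.97}))
# isolated atm-gw-02
print(respond({"type": "malware", "host": "atm-gw-02", "confidence": 0.60}))
# escalated to analyst
```

Keeping an explicit escalation path means automation handles the clear-cut cases while ambiguous ones still reach an analyst.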

This is also the point where you start to scale the solution across other departments and use cases. The insights you gained from your pilot projects will guide a broader rollout, making the expansion much smoother and more effective.

A successful AI security roadmap isn't static; it's a living document. It must be designed to adapt as your organisation evolves and as attackers develop new tactics. Continuous learning and model refinement are the keys to long-term resilience.

This commitment to strengthening digital defences isn't just happening within individual firms. The UK government has significantly increased its investment in this area, allocating an additional £1.2 billion to bolster national digital priorities. Much of this funding is aimed at enhancing the capabilities of intelligence agencies and the National Cyber Security Centre (NCSC) to fight threats against critical infrastructure, including the financial sector.

Phase 4: Continuous Improvement

Finally, the journey doesn't end once everything is implemented. Cyber threats are constantly changing, and your AI models must change with them. This final phase is a continuous loop of monitoring, training, and refining your AI systems. Regularly retraining your models with fresh data ensures your defences stay sharp and effective against the latest attack methods.
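One common way to decide when retraining is due is to measure how far live data has drifted from the data the model was trained on. The sketch below uses the Population Stability Index (PSI) over pre-binned proportions. The bin values are invented, and the 0.25 cut-off is a widely quoted rule of thumb rather than a fixed standard, so treat it as an assumption to tune.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions (proportions per bin)."""
    total = 0.0
    for e, a in zip(expected, actual):
        # Clamp to a small value so empty bins do not cause log(0) or division by zero.
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

RETRAIN_THRESHOLD = 0.25  # rule-of-thumb value, an assumption

# Hypothetical share of transactions falling into four amount bands.
training = [0.25, 0.25, 0.25, 0.25]
stable = [0.25, 0.25, 0.25, 0.25]
shifted = [0.10, 0.15, 0.25, 0.50]

print(psi(training, stable) > RETRAIN_THRESHOLD)   # False: no drift
print(psi(training, shifted) > RETRAIN_THRESHOLD)  # True: retraining is worth considering
```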

This entire process, from the first assessment to ongoing improvement, is best guided by a clear plan. A Custom AI Strategy report can provide the clarity and direction needed to navigate this complex but essential transformation, making sure every step you take is aligned with your security and business goals.

The Future of Finance: Secure and Intelligent

So, what's the bottom line? AI isn't just some far-off idea anymore; it's become a cornerstone of cybersecurity in finance. The conversation has moved past if firms should bring AI on board and is now squarely focused on how to do it smartly and responsibly.

By shifting from a reactive, "wait-and-see" defence to actively hunting for threats and automating responses, financial institutions aren't just protecting data and money. They're building something far more valuable: deep, lasting trust with their customers. In the world of finance, that's the real gold standard.

Getting Ready for What's Around the Corner

Of course, getting there isn't as simple as flipping a switch. It takes thoughtful planning around the right technology, finding people with the right skills, and navigating the regulatory maze. But let's be clear—the cost of doing nothing is a much steeper price to pay than the investment in modernising your defences. New trends are already on the horizon that will shake things up all over again.

Take quantum computing, for example. It's a double-edged sword. On one hand, it threatens to shatter today's encryption methods. On the other, it promises the possibility of security that's truly unbreakable. Staying ahead of game-changing shifts like this means you have to be committed to constantly moving forward.

The future of financial security won’t be won with a single piece of software or a one-off project. It will be secured by a culture of constant adaptation, where intelligent systems and human experts collaborate to stay one step ahead of the bad guys.

This journey begins with a solid commitment to innovation, often through partnerships like AI co-creation. As we've seen, having a clear roadmap is essential, and the right partner can make all the difference. To see the kind of experts who can guide you through this complex but rewarding journey, get to know our expert team and discover how we can help you build a more secure and intelligent future.

Frequently Asked Questions

Got questions about bringing AI into your financial cybersecurity setup? You're not alone. Here are some of the most common queries we hear, with straight-talking answers to help you navigate the topic.

What's the Single Biggest Win with AI in Financial Cybersecurity?

The biggest advantage, hands down, is the shift from playing defence to playing offence. Traditional security is all about reacting—it waits for a known threat to pop up on the radar before it acts. AI, on the other hand, can spot the quiet signals of a brand-new, zero-day attack by catching tiny irregularities in data that a human analyst would never see.

This is the real game-changer. It’s about getting ahead of the attackers and stopping threats before they can do any real damage, instead of constantly being one step behind.

Are There Any Downsides to Using AI for Security?

Absolutely, and it's crucial to manage them. The top concern is something called 'adversarial AI'. This is where attackers get smart and craft malware or inputs specifically designed to trick or bypass your AI security models. Another big hurdle is the 'black box' problem: sometimes the AI makes a decision, but you can't easily see why, which can be a nightmare for compliance and regulatory checks.

And, of course, relying too much on automation without a human in the loop can open up new weak spots. The best approach is a balanced one, using slick workflow automation to handle the heavy lifting while keeping expert human oversight for the big-picture strategy.

People often think AI is here to replace human experts. That's a myth. The most effective security teams use AI as a force multiplier. It automates the monotonous work, freeing up analysts to focus their brainpower on complex investigations and strategic threats.

How Can a Smaller Financial Firm Get Started with AI Security?

If you're a smaller firm, don't try to boil the ocean. The key is to start with high-impact solutions that are easy to get off the ground. A fantastic first move is to bring in AI-powered email security. Phishing is still a massive threat for every business, and AI is brilliant at shutting it down.

Another practical starting point is using AI tools for identity and access management (IAM). This helps ensure that only the right people get into your most sensitive systems.

Rather than building a solution from scratch, it makes more sense to partner with specialised vendors. They have proven models ready to go. The smartest way to kick things off is by defining a clear pilot project, perhaps with an AI Strategy consulting tool, to make sure you see a return on your investment sooner rather than later.

Ready to build a more secure, intelligent future for your financial institution? Ekipa provides the expertise to turn your AI vision into a reality. Our end-to-end platform helps you discover, plan, and execute your AI strategy with speed and confidence. Get your Custom AI Strategy report today.