AI Readiness Assessments: Prepare Your Business for AI Success

Discover how AI readiness assessments can evaluate your data and strategy to ensure a smooth AI integration. Get ready for AI success today!

Before you dive headfirst into AI, you need a clear picture of where your organization stands. An AI readiness assessment is exactly that—a strategic look under the hood at your people, processes, data, and tech to see if you're truly ready for artificial intelligence.

Think of it less as a test and more as a detailed roadmap for your entire AI journey. It’s the essential first step that helps you avoid expensive missteps and gets everyone on the same page, focused on opportunities that will actually deliver a return. This kind of AI co-creation process ensures that your strategy is built on a solid foundation from day one.

Why Your First Step in AI Should Be an Assessment

Jumping into AI without a solid plan is a recipe for disaster. I've seen it happen time and again: companies get swept up in the excitement, buy the latest tech, and then realize they don't have the internal capacity to make it work.

The numbers back this up. A global survey found that while 97% of companies are eager to implement AI, very few are actually prepared for it. This gap between ambition and reality is where projects stumble, money gets wasted, and golden opportunities are lost.

An AI readiness assessment is your diagnostic tool. It’s not just about your tech stack; it gives you a complete, 360-degree view of your organization. This is where you ask the hard questions before you invest. Is our data a mess? Do our teams have the skills they need? Is our culture even open to this kind of change? Getting honest answers here is the foundation of any successful strategy.

Setting the Stage for Success

A proper assessment gives you the clarity to build something that lasts. The process isn't that different from broader digital transformation consulting, where you have to know exactly where you are today before you can plan for tomorrow. By sizing up your current capabilities against your business goals, you can craft a focused plan that shores up your weak spots and plays to your strengths, as we explored in our AI adoption guide.

This initial deep-dive achieves a few critical things right away:

- It flags critical gaps. You’ll uncover potential deal-breakers early, like poor data quality or a major skills shortage, before they can derail a project.

- It aligns your stakeholders. The assessment process naturally brings leaders from IT, operations, and other business units to the table, helping forge a unified vision for how AI will be used.

- It helps prioritize spending. Instead of guessing, you can channel your budget into areas that will deliver the most significant impact, making every dollar count.

A thoughtful AI readiness assessment isn't about finding reasons to put off AI. It's about making sure that when you finally do make a move, you win. It turns a speculative gamble into a calculated, strategic play.

Ultimately, this assessment is the first and most critical piece of a solid AI strategy. It gives you the hard data you need to build a realistic roadmap, pick the right pilot projects, and actually measure what success looks like. Without it, you’re not just flying blind; you’re launching a mission-critical rocket without checking if there’s any fuel in the tank. For more on this, check out our guide here: https://www.ekipa.ai/strategy.

The Four Pillars of AI Readiness

Kicking off an AI initiative that actually delivers results depends on far more than a slick algorithm or powerful hardware. From my experience, a truly effective assessment looks at your entire organization to make sure every critical piece is ready for the change that's coming. This is a core part of our AI strategy framework, which we've broken down into four foundational pillars.

If you skip over any of these areas, you risk building your AI projects on a shaky foundation. I always tell my clients to think of them as the four legs of a table—if one is weak, the whole thing comes crashing down.

Before you can dive into an assessment, you need a solid framework. The four pillars—Strategic Alignment, Data Maturity, Technological Infrastructure, and People & Culture—give you a comprehensive map for evaluating where you stand. Each pillar has its own set of critical questions that need honest answers.

Let’s look at what this means in practice.

| Pillar | Focus Area | Key Questions to Ask |
| --- | --- | --- |
| Strategic Alignment | Connecting AI to business value | Does this project directly support our revenue goals, cost-cutting efforts, or customer experience improvements? How will we measure success with clear KPIs? |
| Data Maturity | Quality and accessibility of data | Is our data clean, complete, and reliable? Can our teams actually get to the data they need, or is it stuck in silos? Do we have strong data governance and privacy policies? |
| Technological Infrastructure | Hardware, software, and scalability | Do we have the necessary computing power (CPUs/GPUs)? Can our systems scale from a pilot to a full enterprise-wide deployment? How will new AI tools integrate with our current tech stack (e.g., CRM, ERP)? |
| People & Culture | Skills, talent, and change readiness | Does our team have the right skills in data science and ML engineering? Is our company culture open to adopting new technologies, or will there be resistance? |

This table isn't just a checklist; it's a starting point for the deep, often difficult, conversations that need to happen. By methodically working through these areas, you uncover the hidden risks and opportunities that will make or break your AI ambitions.

Pillar 1: Strategic Alignment

Before anyone writes a single line of code, you have to tie your AI goals directly to core business objectives. Let's be blunt: AI for the sake of AI is a fantastic way to waste money. The real work here is moving past the buzzwords and defining what success actually looks like in dollars and cents, or customer satisfaction points.

Start by asking some tough questions that connect the tech to real-world use cases:

- Will this project actually increase revenue, cut operational costs, or make our customers happier? Be specific.

- How does this particular AI use case support our company's three-year strategic plan?

- Can we define crystal-clear key performance indicators (KPIs) to prove this thing is working?

Answering these forces every AI project to have a clear purpose and, just as importantly, an executive champion who gets its value. This alignment is what gets you the budget, the resources, and the long-term support you'll need. It’s the difference between a cool tech demo and a tool that genuinely moves the needle.

Pillar 2: Data Maturity

Data is the fuel for any AI model. Without high-quality, accessible, and well-governed data, even the most sophisticated algorithms are useless. An AI readiness assessment has to include a brutally honest look at your organization's data situation.

This is about much more than just having big datasets. True data maturity hinges on a few key factors:

- Data Quality: Is your data accurate, complete, and consistent? Garbage in, garbage out is the oldest rule in the book for a reason. Messy data leads to AI predictions you can't trust.

- Data Accessibility: Can your data scientists and AI models actually get to the data they need? Or is it locked away in a dozen disconnected silos?

- Data Governance: Do you have clear, enforced policies for data privacy, security, and compliance? In today's world, this is non-negotiable for building trust and avoiding massive fines.
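
To make the data quality question concrete, here is a minimal Python sketch of the kind of check a team might run on a sample extract. It only covers completeness and exact duplicates, not accessibility or governance, and everything in it is an assumption for illustration: the `profile_records` helper, the field names, and the sample customer records are hypothetical.

```python
from collections import Counter


def profile_records(records, required_fields):
    """Return simple completeness and duplicate metrics for a list of dict records."""
    total = len(records)
    if total == 0:
        return {"completeness": {}, "duplicate_rate": 0.0}

    completeness = {}
    for field in required_fields:
        # A value counts as present if it is neither None nor an empty string.
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        completeness[field] = filled / total

    # Records with identical values in every required field count as duplicates.
    keys = Counter(tuple(r.get(f) for f in required_fields) for r in records)
    duplicates = sum(count - 1 for count in keys.values())
    return {"completeness": completeness, "duplicate_rate": duplicates / total}


# Hypothetical sample data, for illustration only.
customers = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "", "country": "US"},
    {"id": 2, "email": "", "country": "US"},
    {"id": 3, "email": "c@example.com", "country": None},
]
report = profile_records(customers, ["id", "email", "country"])
print(report)
```

A real audit would go further (consistency across systems, freshness, valid value ranges), but even a rough profile like this turns "is our data a mess?" into numbers you can track over time.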

A recent global survey of over 700 organizations showed a major shift from small proof-of-concept projects to full-scale enterprise AI. This trend puts a massive spotlight on the need for solid data readiness and strategic planning. The takeaway is clear: getting your data house in order is an absolute prerequisite for any serious AI ambition.

One of the biggest mistakes I see teams make is underestimating the sheer effort required to prepare data. It's not uncommon to find that 80% of an AI project is spent just on data collection, cleaning, and preparation. You have to factor this in from day one to set realistic timelines and budgets.

Pillar 3: Technological Infrastructure

Your current tech stack has to be able to handle the heavy lifting that AI and machine learning demand. This means looking at everything from your servers to your software development practices. A brilliant AI model is completely worthless if your infrastructure can't run it efficiently or scale it up.

You need to look at your tech capabilities from a few different angles:

- Computational Power: Do you have the processing muscle (CPUs, GPUs) you need, whether it's on-premise or through a cloud provider?

- Scalability: If your pilot project is a huge success, can your infrastructure handle scaling it up to serve thousands, or even millions, of users?

- Integration: How smoothly can new AI tools plug into the systems you already rely on, like your CRM or ERP?

This kind of technical audit helps you spot potential bottlenecks before they derail a project. It’s a pragmatic step to make sure your tech can actually support your vision.

Pillar 4: People and Culture

Finally, we get to what I believe is the most important piece of the puzzle: the human element. Your company's culture and your team's skills will ultimately decide whether AI is adopted with enthusiasm or met with a wall of resistance. Technology is only half the battle.

This pillar is all about talent and change management. You have to assess your team's current skills to find the gaps in critical areas like data science, machine learning engineering, and AI ethics. At the same time, you must gauge your organization’s readiness for change. Are your employees open to new ways of working, or is there a deep-seated resistance to new technology? Fostering a culture of curiosity and continuous learning is absolutely essential for this to work long-term.

Looking at these four pillars gives you the 360-degree view you need to navigate your AI journey successfully. This holistic approach is a cornerstone of effective AI strategy consulting and ensures no stone is left unturned. For hands-on guidance through this complex process, feel free to connect with our expert team.

How to Run Your Own AI Readiness Assessment

Alright, let's move from theory to action. Running an AI readiness assessment isn't just a box-ticking exercise; it's a hands-on investigation into the very core of your organization. Think of it as creating a detailed map of your starting point. A properly executed assessment gives you the data-backed clarity you need to stop just talking about AI and start building an actionable plan.

The whole process boils down to getting the right people in a room, asking some tough questions, and systematically pulling together information from all corners of the business.

First, Assemble Your "Tiger Team"

Your first move is putting together a dedicated team. This is non-negotiable. An AI assessment driven solely by the IT department is doomed from the start. You'll get a skewed, incomplete picture. For a true 360-degree view, you need a mix of minds from across the organization.

A solid assessment team usually includes:

- Executive Leadership: You absolutely need a C-suite sponsor. This person provides the authority, unlocks resources, and makes sure the findings actually get taken seriously.

- IT and Infrastructure: These are your technical experts. They’ll give you the ground truth on your current systems, data architecture, and whether your infrastructure can handle the load.

- Data Science and Analytics: If you’re lucky enough to have these folks already, their insight into data quality, access, and governance is priceless.

- Business Operations: These are the people on the front lines. They live the day-to-day workflows and can immediately point to the real-world pain points and opportunities where AI could have the biggest impact.

- Human Resources: HR brings a critical perspective on the "people" side of the equation—talent, skills gaps, and whether the company culture is even ready for this kind of change.

Bringing these different voices together from day one is the secret to a comprehensive assessment and, more importantly, to getting company-wide buy-in for whatever comes next.

Define Your Scope and Start Digging for Information

With your team in place, you need to decide where to point your flashlight. Are you going to assess the entire organization at once, or start with a single department or business unit? My advice? Start small. A focused scope for your first go-around makes the process far more manageable and delivers valuable insights much faster.

Once you know what you're looking at, the real work begins. You have to dig deep to get a clear picture of where you stand across the four readiness pillars.

The goal here isn’t just to collect stats. A technical audit tells you what systems you have. A conversation with a department head tells you how people actually use them—and what drives them crazy every day. That context is everything.

You can't rely on just one method to get the full story. A good approach combines a few different tactics:

- Stakeholder Interviews: There's no substitute for one-on-one conversations with key leaders and individual contributors. This is where you uncover the qualitative gold—the political dynamics, the cultural roadblocks, and the hidden frustrations a survey will never capture.

- Workshops: Get people together to brainstorm potential AI use cases and literally map out how things get done today. You'd be surprised how often these sessions reveal broken processes and dependencies between departments that no one was aware of.

- Technical Audits: This is where you get the hard data. A thorough review of your data infrastructure, tech stack, and security protocols will show you exactly what you're working with, warts and all.

- Surveys: For a broader pulse check, targeted surveys are great. They can help you gauge how people feel about AI, map out skill levels across different teams, and get a sense of the company's appetite for change.

This can feel like a lot to juggle, which is why we built our own AI Strategy consulting tool to help organizations streamline this exact process, making the data collection and analysis much more efficient.

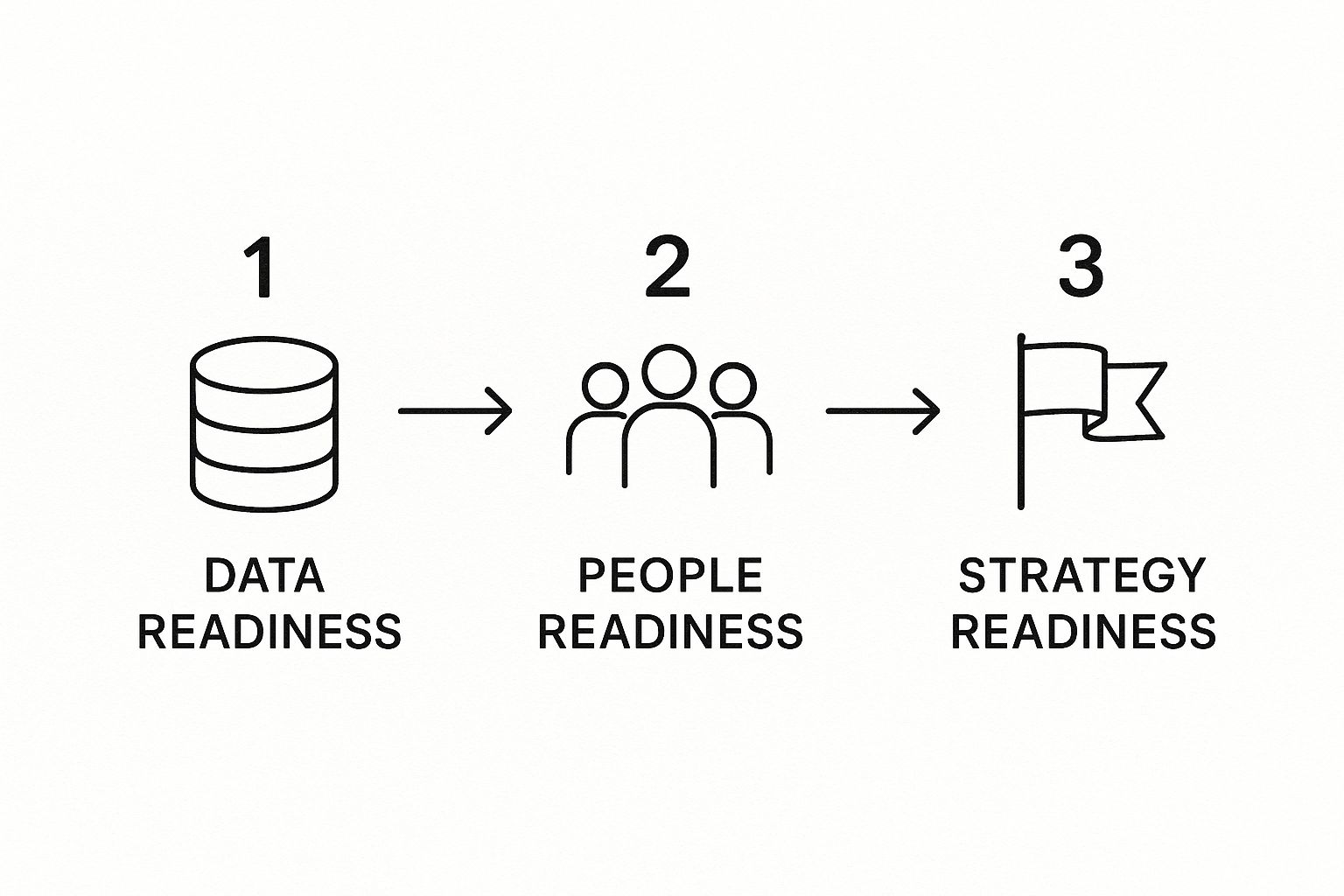

The flow of a great assessment often follows the path laid out below, focusing on how data, people, and strategy all fit together.

As you can see, it's a logical journey. Solid data readiness empowers your people, and a clear strategy guides both.

Analyze the Results and Score Your Maturity

Once you've gathered all this intel, it's time to make sense of it. This is where you connect the dots between the interviews, workshop notes, and technical reports to score your organization's maturity level for each of the four pillars. A simple 1-to-5 scale works well, where 1 is "just starting" and 5 is "fully optimized."

This scoring system isn't about passing or failing; it’s about creating an objective snapshot of your strengths and weaknesses. For example, you might discover you have a fantastic cloud platform (high score in Technology), but a serious lack of data literacy across your teams (low score in People & Culture). Part of this phase involves a detailed AI requirements analysis to nail down the specific tools, skills, and data you'll need for the projects you want to tackle first.
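
As a rough illustration of the 1-to-5 scoring described above, the sketch below averages individual ratings into one score per pillar. The simple averaging, the `score_pillars` helper, and the sample ratings are all assumptions made for the example. Your team may prefer weighted scores or a consensus workshop instead.

```python
from statistics import mean

PILLARS = [
    "Strategic Alignment",
    "Data Maturity",
    "Technological Infrastructure",
    "People & Culture",
]


def score_pillars(ratings):
    """Average the 1-to-5 ratings collected for each pillar."""
    scores = {}
    for pillar in PILLARS:
        values = ratings.get(pillar, [])
        if not values:
            raise ValueError(f"No ratings collected for {pillar}")
        if any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"Ratings for {pillar} must be between 1 and 5")
        scores[pillar] = round(mean(values), 1)
    return scores


# Hypothetical ratings gathered from interviews, workshops, audits, and surveys.
ratings = {
    "Strategic Alignment": [4, 3, 4],
    "Data Maturity": [2, 1, 2, 1],
    "Technological Infrastructure": [4, 5, 4],
    "People & Culture": [2, 2, 3],
}
pillar_scores = score_pillars(ratings)
for pillar, score in pillar_scores.items():
    print(f"{pillar}: {score}/5")
```

Requiring at least one rating per pillar is a deliberate choice here: a missing pillar should stop the analysis rather than quietly disappear from the report.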

The final deliverable from your assessment shouldn't be a dry spreadsheet. It needs to be a compelling report that tells a story, clearly highlighting:

- Your Key Strengths: What you can build on right now.

- Your Critical Gaps: The biggest risks that could derail your AI plans.

- Prioritized Recommendations: An actionable list of things to do next to close those gaps.

This report becomes your playbook. It transforms the assessment from a simple evaluation into a concrete strategic roadmap, guiding your journey from where you are today to a future where AI genuinely drives your business forward. If you need a hand building this out, you can see how we approach AI strategy consulting.

Analyzing Your Results to Find the Critical Gaps

Alright, you've done the legwork—the workshops, interviews, and audits are complete. Now comes the part where all that data gets turned into real insight. This is where we move from information gathering to genuine understanding, piecing together an honest, unfiltered picture of where your organization truly stands with AI.

More importantly, this is how you'll pinpoint the critical gaps that could sink your AI ambitions before they ever leave the harbor.

This analysis isn't about ticking boxes or just creating another report to sit on a shelf. It's about laying the foundation for your strategic roadmap. The quality of your interpretation here will directly shape the success of your entire AI initiative, informing every single decision you make from this point forward.

From Raw Data to Actionable Insights

First things first, you need a way to make sense of everything you've collected. This is where a simple maturity model or scoring system—like the 1-to-5 scale we talked about earlier—comes in handy. It helps you move past anecdotal feedback and objectively benchmark where you are across the four key pillars: Strategy, Data, Technology, and People.

For example, you might look at your findings and score a solid 4/5 on Technology because you’ve invested in modern cloud infrastructure. But then you look at your data and realize it's a mess of siloed, poor-quality sources, earning you a 1/5 on Data Maturity. Just like that, your biggest imbalance is staring you right in the face.

As you dig into your assessment, you'll almost certainly find that data quality is a major hurdle. Getting a handle on essential data quality best practices is a non-negotiable step to fix these fundamental problems.

Your most important discoveries will often pop up in the disconnects between the pillars. A sky-high score in Strategic Alignment doesn't mean much if your People & Culture score is in the basement, signaling a team that's resistant to change or just doesn't have the skills to pull it off.
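
One simple way to surface these disconnects is to compare every pair of pillar scores and flag the widest gaps. The sketch below does that under stated assumptions: the `largest_disconnects` helper and the two-point threshold are hypothetical choices, the Technology and Data scores echo the example above, and the Strategy and People scores are made up.

```python
from itertools import combinations


def largest_disconnects(scores, min_gap=2):
    """List pillar pairs whose scores differ by at least min_gap, widest first."""
    pairs = []
    for (a, score_a), (b, score_b) in combinations(scores.items(), 2):
        gap = abs(score_a - score_b)
        if gap >= min_gap:
            # Put the stronger pillar first so the output reads naturally.
            strong, weak = (a, b) if score_a > score_b else (b, a)
            pairs.append((gap, strong, weak))
    return sorted(pairs, reverse=True)


# Technology 4/5 and Data 1/5 follow the example above; the rest is hypothetical.
scores = {"Strategy": 4, "Data": 1, "Technology": 4, "People": 2}
disconnects = largest_disconnects(scores)
for gap, strong, weak in disconnects:
    print(f"{strong} outpaces {weak} by {gap} points")
```

The threshold is a judgment call. A smaller `min_gap` surfaces more pairs to discuss, while a larger one keeps the conversation on the most serious imbalances.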

Identifying and Prioritizing Your Gaps

Once you have your scores, you can start mapping out the gaps. But don't just create a long, overwhelming list of problems. The key is to categorize them to understand their root causes and, ultimately, their urgency.

I've seen these issues pop up time and time again. They usually fall into a few common buckets:

- Data Gaps: This is the big one for most companies. It can be anything from data locked away in legacy systems to a complete lack of governance, which makes building reliable AI models impossible.

- Talent Gaps: Maybe you have enthusiastic teams, but you’re missing key roles like ML engineers or experienced data scientists. This kind of gap can stop a project from ever moving past a PowerPoint slide.

- Cultural Gaps: This is the silent killer of AI projects. If your company culture fears failure or resists making decisions based on data, even the most brilliant AI tool will get rejected by the organizational immune system.

- Infrastructure Gaps: Sometimes the pipes just aren't there. Your current systems might not have the raw computing power for AI or lack the APIs needed to integrate new tools smoothly.

This kind of analysis gives you a clear, evidence-based view of where to focus your energy first. It turns that vague feeling of "we're not ready" into a concrete list of problems you can actually start solving.

Benchmarking Your AI Maturity

It’s also incredibly helpful to see how you measure up against the rest of the world. Understanding industry benchmarks provides crucial context for your own results. It helps you manage expectations and can be a powerful tool when you need to make the case for investment to your executive team.

A recent analysis by HG Insights offers a great reference point. It found that the average AI maturity score for the top 1,000 AI-ready organizations is just 24.5 out of 100.

What’s interesting is that companies with higher revenue tend to score higher; those bringing in over a billion dollars average 27.9. The biggest players, with more than 10,000 employees, have the highest average score at 28.9.

The takeaway here? Even the most advanced companies have a long way to go. It’s a comforting reality check. Your goal isn't to achieve perfection overnight but to make steady, strategic progress from wherever you're starting today.

Categorizing Gaps for Your Roadmap

The final step in your analysis is to translate your prioritized gaps into action. This is the crucial bridge between your assessment and your future AI roadmap. Every weakness you've identified needs to be reframed as an opportunity for improvement.

I recommend organizing your findings into two main buckets:

- Short-Term Fixes (The Quick Wins): These are the things you can realistically tackle in the next 3-6 months. Think about launching a data literacy program for a key team, cleaning up one specific high-value dataset, or running a small pilot project to show some immediate value.

- Long-Term Strategic Initiatives: These are the bigger, foundational changes that will take a year or more. This is where you'd put a complete overhaul of your data governance framework, a long-term hiring plan for AI talent, or a major investment in new cloud infrastructure.
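
The two buckets above can be sketched as a simple sort on estimated duration. This is a minimal illustration, assuming each action already has a rough estimate in months. The six-month cutoff comes from the quick-win window described above, while the `bucket_gaps` helper and the sample estimates are hypothetical.

```python
def bucket_gaps(gaps, quick_win_months=6):
    """Split gap-closing actions into quick wins and long-term initiatives."""
    buckets = {"quick_wins": [], "long_term": []}
    for name, months in gaps:
        # Anything that fits inside the quick-win window is a short-term fix.
        key = "quick_wins" if months <= quick_win_months else "long_term"
        buckets[key].append(name)
    return buckets


# Hypothetical actions with estimated durations in months.
gaps = [
    ("Data literacy program for one team", 3),
    ("Clean up one high-value dataset", 4),
    ("Overhaul data governance framework", 14),
    ("Long-term AI hiring plan", 18),
]
plan = bucket_gaps(gaps)
print(plan)
```

Actions estimated at seven to eleven months land in the long-term bucket in this sketch. You may want a third "medium-term" bucket if many of your findings fall in that range.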

By categorizing your findings this way, your assessment becomes a practical, living document. It's the critical link that transforms what you've learned into a tangible plan, ensuring your AI journey is built on a solid, well-understood foundation.

Turning Your Assessment into a Strategic AI Roadmap

An AI readiness assessment is only valuable if it actually leads to action. Once the deep dive is done, you're left with an honest, sometimes bracing, look at where you stand: your strengths, your weaknesses, the whole picture. This is the moment of truth where all those findings have to evolve from a static report into a living, breathing AI roadmap that will genuinely guide your next steps.

Think of the roadmap as the bridge connecting where you are now to where you want to be with AI. It’s how you take the gaps you’ve uncovered and turn them into a prioritized list of projects, translating data-backed insights into a real plan of attack. Skip this, and even the most detailed assessment is doomed to become just another file gathering digital dust on a server.

From Gaps to Actionable Initiatives

First things first, you need to reframe every gap you found as a specific, actionable project. If your assessment flagged a low score in data quality, the initiative isn't vaguely "improve data." It's something concrete, like "launch a pilot project in Q3 to clean and consolidate customer data from our top three sources."

This really is a shift in mindset. You're not looking at a list of problems; you're looking at a portfolio of opportunities.

- Talent Gaps: This isn't just a weakness. It's the impetus for a strategic hiring plan or an internal upskilling program focused on data literacy and the basics of machine learning.

- Technology Gaps: This becomes the foundation for a solid business case to migrate to a new cloud platform or invest in specific data warehousing tools that will actually get the job done.

- Cultural Gaps: This is your cue to create a real change management plan, maybe by setting up an AI ethics committee or launching informal "lunch and learns" to take the mystery out of AI for everyone.

When you frame the gaps as tangible projects, you create a clear path forward that people can actually understand and support.

Prioritizing Based on Impact and Feasibility

Let's be realistic: you can't fix everything at once. That's why prioritization is so critical. A simple but incredibly effective way to do this is to plot each of your new initiatives on an impact vs. feasibility matrix. It forces you to think strategically.

- High-Impact, High-Feasibility: These are your quick wins. They deliver visible value without a massive investment of time or money. A perfect example? Implementing an AI-powered chatbot for internal IT support to slash ticket resolution times.

- High-Impact, Low-Feasibility: These are your big, strategic bets. They’ll require serious investment but have the potential to truly change the game for your business. Think about developing a proprietary predictive maintenance model for your manufacturing line.

- Low-Impact, High-Feasibility: These are the "nice-to-haves" that you can put on the back burner or delegate to a team when they have downtime.

- Low-Impact, Low-Feasibility: Steer clear of these. They’re a drain on resources with little to no payoff.
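
The four quadrants above can be sketched as a small classifier. This assumes each initiative has been scored from 1 to 5 on impact and feasibility and that 3 or above counts as "high". The scale, the threshold, the `classify` helper, and the sample scores are all hypothetical choices for illustration.

```python
def classify(impact, feasibility, threshold=3):
    """Map 1-to-5 impact and feasibility scores to a quadrant of the matrix."""
    high_impact = impact >= threshold
    high_feasibility = feasibility >= threshold
    if high_impact and high_feasibility:
        return "quick win"
    if high_impact:
        return "strategic bet"
    if high_feasibility:
        return "nice-to-have"
    return "avoid"


# Hypothetical (impact, feasibility) scores for candidate initiatives.
initiatives = {
    "Internal IT support chatbot": (4, 5),
    "Predictive maintenance model": (5, 2),
    "Auto-tagging meeting notes": (2, 4),
    "Custom model for a niche report": (1, 1),
}
quadrants = {name: classify(*scores) for name, scores in initiatives.items()}
for name, quadrant in quadrants.items():
    print(f"{name}: {quadrant}")
```

The scores themselves are the hard part. The value of the exercise is the debate over why an initiative deserves a 4 rather than a 2, not the arithmetic.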

This framework forces the tough conversations and strategic trade-offs, making sure you put your energy where it will count the most, especially early on.

Building a Phased Plan with Clear Milestones

With your priorities locked in, you can start building a phased roadmap with a clear timeline. This is where your assessment really comes to life as a guide for the future. Your plan might look something like this:

- Phase 1 (Months 1-6): Foundational Fixes. This is all about tackling the quick wins and getting your house in order. Think data cleanup projects, launching an initial training program, and maybe selecting a vendor for a small, contained pilot project.

- Phase 2 (Months 7-18): Scale and Expand. Time to build on those early successes. This phase could involve scaling up that successful pilot, hiring key AI talent you’ve identified, and tackling more complex system integrations.

- Phase 3 (Months 19+): Enterprise-Wide Adoption. The goal here is to embed AI across multiple parts of the business, fostering a culture where data-driven innovation is just how you operate.
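
To show how the phases above translate into a working plan, here is a minimal sketch that groups initiatives by planned start month. The phase boundaries follow the example timeline above. The `phase_for_month` helper, the initiative names, and the start months are hypothetical.

```python
# Last month of each bounded phase, in order; anything later is the final phase.
PHASES = [
    (6, "Phase 1: Foundational Fixes"),
    (18, "Phase 2: Scale and Expand"),
]
FINAL_PHASE = "Phase 3: Enterprise-Wide Adoption"


def phase_for_month(start_month):
    """Return the roadmap phase that a given start month falls into."""
    if start_month < 1:
        raise ValueError("start_month must be 1 or greater")
    for last_month, phase in PHASES:
        if start_month <= last_month:
            return phase
    return FINAL_PHASE


# Hypothetical initiatives with planned start months.
initiatives = {
    "Customer data cleanup": 2,
    "Initial training program": 4,
    "Scale the successful pilot": 9,
    "Embed AI across business units": 20,
}
roadmap = {}
for name, month in initiatives.items():
    roadmap.setdefault(phase_for_month(month), []).append(name)

for phase, names in roadmap.items():
    print(phase, "->", ", ".join(names))
```

Keeping the boundaries in one small table makes it easy to adjust the timeline if your organization moves faster or slower than this example.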

As you build out this roadmap, think about how AI can be applied to specific functions. For example, you can leverage practical guidance on using AI in recruiting to sharpen your talent acquisition strategy.

A great roadmap tells a compelling story. It shows executives not just what you're going to do, but why it matters and how it all connects back to the company's biggest goals. This narrative is absolutely crucial for securing long-term buy-in and resources.

This structured approach turns what feels like a massive challenge into a sequence of manageable, achievable steps. If your team needs a hand during this critical planning stage, our implementation support services can bring the expertise needed to build a roadmap that’s both robust and realistic.

Setting KPIs to Measure Success

Finally, every single initiative on your roadmap needs its own set of clearly defined Key Performance Indicators (KPIs). You can't improve what you don't measure. These metrics are what you'll use to track progress, demonstrate ROI, and keep the entire organization pulling in the same direction.
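
A KPI only helps if it has a baseline, a target, and a current reading. The sketch below shows one simple way to express progress as the share of the baseline-to-target distance covered so far. The `KPI` class and the ticket resolution figures are hypothetical and used purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    name: str
    baseline: float  # value measured before the initiative started
    target: float    # value the initiative is meant to reach
    current: float   # latest measured value

    def progress(self):
        """Fraction of the baseline-to-target distance covered so far."""
        span = self.target - self.baseline
        if span == 0:
            raise ValueError("target must differ from baseline")
        return (self.current - self.baseline) / span


# Hypothetical KPI for an internal IT support chatbot pilot.
ticket_time = KPI(
    name="Average ticket resolution time (hours)",
    baseline=10.0,
    target=6.0,
    current=8.0,
)
print(f"{ticket_time.name}: {ticket_time.progress():.0%} of the way to target")
```

Because progress is measured relative to the baseline, the same formula works whether the goal is to push a number down (resolution time) or up (adoption rate).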

The 2025 Global AI Readiness Index drives this point home, showing how the leading nations excel because they focus on strong public sector deployment and workforce upskilling—both areas where clear metrics are absolutely vital for tracking progress.

By turning your assessment into a well-defined roadmap with clear priorities and measurable goals, you create the blueprint for sustainable AI success. You’re not just hoping for the best; you're planning for it.

Common Questions About AI Readiness

Even with the best-laid plans, embarking on an AI readiness assessment always brings up questions. It's only natural. Tackling these common queries head-on can demystify the process and give your team the confidence to dive in.

Let’s walk through some of the most frequent questions we hear from organizations taking their first steps. Think of this as a quick-start guide to clear those final hurdles.

Where Do We Even Begin?

This is easily the most common question, and the answer isn't technical. Before you touch a single line of code or analyze a dataset, your absolute first step is to secure executive sponsorship.

Getting leadership buy-in is non-negotiable. It’s what gives you the authority, budget, and organizational muscle to make the assessment a success. Without it, even the best intentions will stall out. Once you have that top-level support, your next move is to assemble a cross-functional team. You’ll want people from IT, key business units, data science, and even HR to get a complete picture of your company's real-world capabilities.

What Does a Successful Assessment Look Like?

So, how do you know if you've succeeded? A successful assessment doesn't just produce a long report that sits on a shelf. It delivers a clear, actionable roadmap with prioritized initiatives, concrete KPIs, and a timeline that actually makes sense for your business.

Success really boils down to three things:

- It aligns stakeholders around a unified vision for AI.

- It pinpoints critical gaps in your strategy or operations—the ones you didn't even know you had.

- It provides a clear path forward that the organization immediately starts to follow.

It’s the tool that turns vague ideas into a data-driven strategy.

The ultimate sign of a successful AI readiness assessment is when it stops being a "project" and becomes the foundation of how your company makes strategic decisions about technology. It should spark action, not just conversation.

How Often Should We Revisit the Assessment?

It's tempting to finish a big assessment, check the box, and move on. But the world of AI moves incredibly fast. What was cutting-edge last year might be standard practice today.

We recommend doing a lighter "health check" annually or biennially. Your business goals will evolve, and new technologies will open up new possibilities. Regular check-ins ensure your roadmap doesn't become a relic built on outdated assumptions.

For more detailed answers, you can explore our full frequently asked questions page. Need more personalized guidance? Reach out to our team of experts to see how we can help you build and execute a winning AI strategy.