Mastering AI Requirement Analysis for Project Success

Unlock project success with our guide to AI requirement analysis. Learn a battle-tested framework for defining objectives, assessing data, and mitigating risks.

Before anyone writes a single line of code, the fate of an AI project is often already sealed. It all comes down to the quality of the initial blueprint, a process we call AI requirement analysis. This isn't just some technical checklist to tick off; it's a strategic business exercise that gets everyone on the same page and helps you sidestep classic project killers like scope creep and spiralling budgets.

Why AI Requirement Analysis Is Your Project's Foundation

Jumping into an AI project without a solid plan is like setting sail without a map. You might have a vague idea of your destination, but you’re almost guaranteed a rough, unpredictable journey. The analysis phase is that map. It’s the foundational work that translates business goals into something technical teams can actually build.

At its core, it's about asking the tough questions right at the start to make sure the project solves a real problem and delivers value you can actually measure.

This initial planning is the cornerstone of any successful AI strategy framework and dictates everything that follows, from finding the right data to deploying the final model. I’ve seen it happen time and again: without this step, teams build something technically brilliant that nobody needs or uses. A proper analysis is what turns a fuzzy idea into a concrete, achievable plan.

Connecting Your Strategy to the Real World

One of the biggest mistakes I see is when companies treat AI as a pure tech problem. It’s not. It's a strategic business initiative, first and foremost. The analysis process forces a conversation between the business side and the tech side, linking high-level goals to what AI can realistically do. This is where collaborative methods like AI co-creation really prove their worth, ensuring the final product is directly tied to a business outcome.

Without this alignment, you risk teams burning through months and budget developing a model that misses the point entirely. For instance, a vague goal like "improve customer service" is a recipe for failure. A good analysis drills this down into something specific: "reduce customer query resolution time by 30% using an AI-powered chatbot that handles common questions."

Suddenly, everyone has clarity. The data scientists know what to build, and the executives know what they're paying for. This specific objective becomes the guiding star for every decision made down the line.

A solid analysis prevents the most common project killers—scope creep, budget overruns, and delivering a solution that nobody actually needs. It’s about asking the right questions upfront.

The Growing Need for a Structured Approach

The race to adopt AI is on, and it’s accelerating. In Germany, for example, the artificial intelligence market is booming and expected to soar past €10 billion by 2025. This growth is fuelled by massive investments in AI-driven automation and predictive maintenance, particularly in powerhouse sectors like automotive and engineering. With this much money on the line, meticulous planning is no longer a "nice-to-have"—it's essential for seeing a return on that investment. You can read more about these latest AI market trends and statistics to get a sense of the competitive pressures.

This market expansion makes a structured approach, often guided by AI strategy consulting, more critical than ever. We find that companies with a formal analysis process are far better equipped to:

Pinpoint high-impact opportunities that fit their unique market.

Get stakeholders on board with a clear, data-supported business case.

Address risks around data privacy, bias, and regulations from the outset.

Allocate resources smartly, focusing on projects with the highest chance of success.

To give you a clearer picture, here’s a breakdown of the core components we look at during this phase.

Core Components of AI Requirement Analysis

This table offers a quick overview of the foundational elements that ensure a comprehensive and effective AI requirement analysis. Getting these right sets the stage for a successful project.

| Component | Core Focus | Key Outcome |
|---|---|---|
| Stakeholder Mapping | Identifying everyone impacted by the project, from end-users to executives. | A clear understanding of needs, expectations, and potential resistance. |
| Data Readiness | Assessing the quality, quantity, and accessibility of available data. | A realistic view of what’s possible with the data you have. |
| Use-Case Prioritisation | Evaluating potential AI applications based on value and feasibility. | A focused project with a strong business case. |
| Feasibility Assessment | Analysing technical, operational, and financial viability. | A go/no-go decision based on a 360-degree view of the project. |
| Success Metrics | Defining clear, measurable KPIs to track performance. | A shared definition of what "success" looks like. |

Each component builds on the last, creating a holistic blueprint for your initiative.

In the end, AI requirement analysis isn’t just a box-ticking exercise you do at the start. It’s a continuous discipline that keeps your AI initiatives on track, delivering real value and helping you stay competitive. It’s what separates successful AI implementations from expensive science experiments.

Turning Business Goals into AI Objectives

Here’s a hard truth: a technically brilliant AI model that doesn’t solve a genuine business problem is nothing more than an expensive science experiment. The single most important part of any AI requirement analysis is translating broad business ambitions into tangible, technical objectives. Success isn't measured by the sophistication of the algorithm; it's measured by the real-world impact it has on your bottom line.

Too many AI projects get stuck right at the start. They begin with vague, boardroom-friendly goals like “improving efficiency” or “enhancing the customer experience.” While these sound impressive, they give a development team absolutely nothing to work with. The real task is to get from these fuzzy aspirations to crystal-clear, measurable targets.

This translation process is where the real work happens. It’s about having deep, honest conversations with stakeholders to figure out what they actually need, which is often different from what they first say they want.

From Vague Ideas to Specific Targets

So, how do you get past the surface-level requests? You need to guide the conversation with structured workshops and probing interviews. The whole point is to dig deeper and put a number on the desired outcome.

When someone says they want to "improve operational efficiency," don't just nod and write it down. Start asking the right questions:

Which specific operation are we talking about? Is it invoicing? Customer onboarding?

How are you measuring its inefficiency today? Is it time spent, cost per transaction, or error rates?

What would a 10% or 20% improvement actually look like? Fewer manual corrections? Faster turnaround times?

Whose daily work is most affected by this problem?

Suddenly, a wishy-washy goal transforms into a concrete objective. "Improve operational efficiency" becomes "Reduce manual invoice processing time by 40% by automating data extraction and validation." Now the technical team has a clear mission, and the leadership team has a measurable ROI to track.

A project’s success is defined by its ability to hit specific, pre-defined business targets. If you can't measure it, you can't manage it, and you certainly can't prove its value to the organisation.

Documenting these objectives is just as crucial as defining them. A solid requirements document becomes the single source of truth, aligning everyone and turning the project into a collaborative effort between business and tech.

Identifying and Aligning Key Stakeholders

A classic mistake I see all the time is overlooking a key group of stakeholders. An AI initiative can send ripples across the entire organisation, affecting everyone from the C-suite to the people on the front lines. That's why building a comprehensive stakeholder map is non-negotiable.

Start by listing everyone who will be touched by the project or who holds influence over it. This typically includes:

Executive Sponsors: The champions who hold the purse strings and advocate for the project.

Business Unit Leaders: The people who own the problem, like the Head of Sales or the Operations Manager.

End-Users: The team members who will use the AI system every day. Their buy-in is absolutely critical for adoption.

IT and Data Teams: The guardians of the infrastructure and the data you'll need.

Legal and Compliance Officers: The experts who make sure you’re not crossing any regulatory lines.

Once you know who they are, you need to talk to them. Each group has its own perspective and priorities. An operations manager might be focused on cutting costs, while an end-user will care far more about how intuitive the new tool is. Acknowledging and balancing these different needs early on prevents expensive rework and ensures the final solution actually gets used.

I've personally seen technically flawless AI models get shelved because the very people they were designed to help rejected them. Why? Because the initial analysis missed the realities of their daily workflow or failed to address their fears. When you engage stakeholders properly, you turn potential critics into your biggest supporters, clearing the path for a solution that isn't just built right, but is also the right solution for the business.

Gauging Your Data Readiness and Technical Feasibility

Let's be blunt: an AI system without high-quality, relevant data is like an engine without fuel. It’s just not going to work. A crucial part of any genuine AI requirement analysis is moving past a simple "do we have data?" checkbox. This is where you have to get brutally honest about your data's quality, how accessible it is, and whether it truly relates to the business problem you're trying to solve. Think of it as the ultimate reality check for your project.

This means putting your data assets under a microscope. You need to understand not just what information you collect, but its actual condition. Is it clean, structured, and ready to go? Or is it a messy, inconsistent jumble of records spread across different systems? Knowing where your data lives and how easily your team can get to it for model training is fundamental. Answering these questions early on saves you from chasing projects that are destined to fail because of data roadblocks.

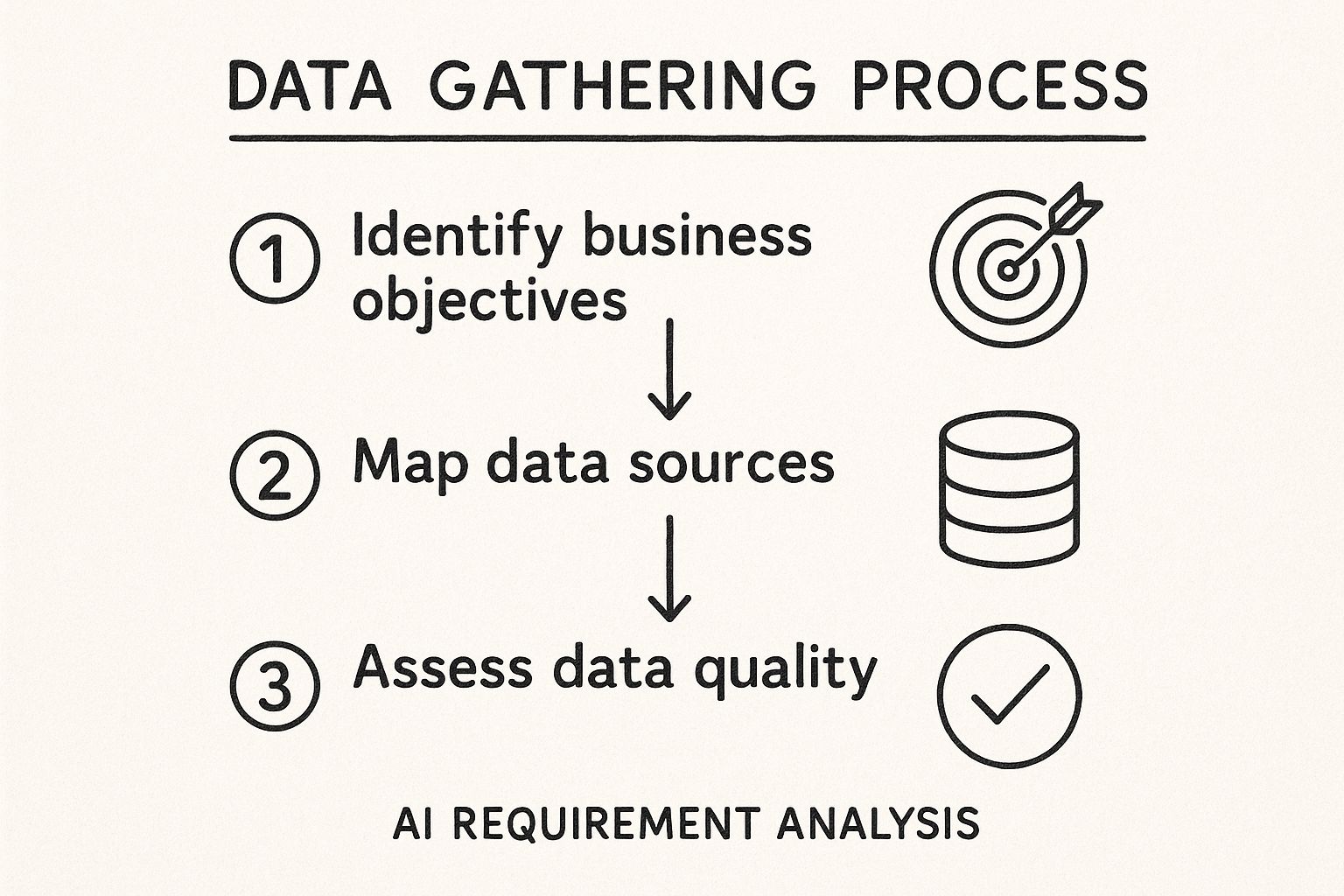

This initial data gathering process isn't just a technical exercise; it's a strategic one.

As you can see, the whole process is anchored in business objectives. This flow really drives home the point that technical data work must always be guided by strategy. The big takeaway here is that successful AI projects treat data sourcing and quality checks not as an IT task, but as a direct extension of the business strategy itself.

Is Your Data Fit for AI?

Once you’ve mapped out where your data is, the real scrutiny begins. A classic mistake I’ve seen many times is assuming that a huge volume of data automatically makes it suitable for AI. In practice, quality trumps quantity every single time. Biased, incomplete, or irrelevant data will only produce a biased and ineffective AI model, no matter how much of it you feed into the system.

You have to ask some tough questions about your datasets:

Relevance: Does this data actually relate to the problem we're trying to solve?

Completeness: Are there significant gaps or missing values that could throw off the results?

Accuracy: How reliable is this information? Is it riddled with human error or outdated?

Accessibility: Is the data stored where the development team can easily and securely access it, or is it locked away in siloed legacy systems?

Bias: Does the data reflect historical biases? For instance, if past hiring data favoured a certain demographic, an AI model trained on it will only learn to repeat that bias.

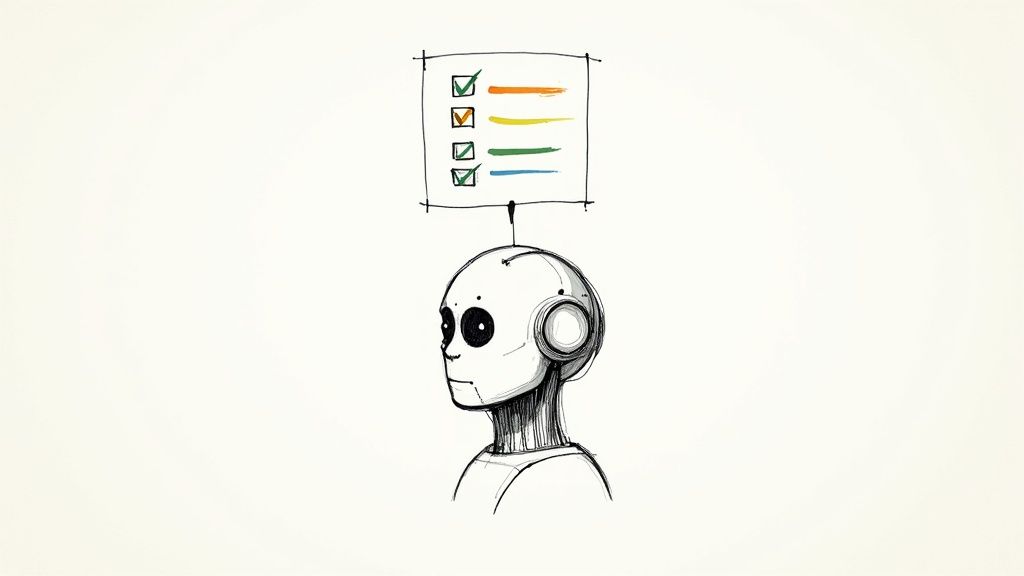

To give you a practical tool for this, I've put together a checklist to help you gauge where you stand. It’s a simple way to start the conversation and spot potential red flags before they become major problems.

Data Readiness Assessment Checklist

| Assessment Area | Key Question to Ask | Green Flag |
| :--- | :--- | :--- |
| Data Volume | Do we have enough historical data to train a meaningful model? | You have at least 12-24 months of consistent data relevant to the use case. |
| Data Quality | Is our data clean, with minimal missing values or errors? | Data is largely complete, and you have processes for data cleansing. |
| Data Accessibility | Can our data science team easily query and extract the data they need? | Data is in a centralised repository (e.g., data warehouse) with clear access protocols. |
| Relevance | Does the data contain the specific features needed to predict the target outcome? | Your subject matter experts confirm the available data directly influences the problem. |
| Bias & Ethics | Have we reviewed the data for historical biases that could lead to unfair outcomes? | You have a documented process for bias detection and mitigation. |

Working through this checklist will give you a much clearer picture of your starting point. Identifying these issues early is what separates successful projects from failed ones. It gives you time to build a plan to cleanse, augment, or even acquire new data before you commit serious resources to development. To fast-track this evaluation, a specialised AI requirements analysis tool can provide a structured framework to ensure you don't miss a thing.
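For the data quality question in particular, a quick completeness check can put a number on "minimal missing values". The Python sketch below is only a starting point: the field names, the sample records, and the 5% threshold are assumptions made up for illustration, not recommended limits.

```python
def missing_rate(records, field):
    """Share of records where `field` is absent, None, or an empty string."""
    if not records:
        raise ValueError("no records to assess")
    missing = sum(1 for r in records if r.get(field) in (None, ""))
    return missing / len(records)


def completeness_report(records, fields, max_missing=0.05):
    """Map each field to (missing rate, whether it is within the threshold).

    max_missing is a hypothetical tolerance; pick one that suits your use case.
    """
    report = {}
    for field in fields:
        rate = missing_rate(records, field)
        report[field] = (rate, rate <= max_missing)
    return report


# Made-up sample data: one of four invoices has no amount recorded.
invoices = [
    {"customer_id": "C1", "amount": 120.0},
    {"customer_id": "C2", "amount": None},
    {"customer_id": "C3", "amount": 75.5},
    {"customer_id": "C4", "amount": 0.0},  # zero is a real value, not a gap
]

report = completeness_report(invoices, ["customer_id", "amount"])
for field, (rate, ok) in report.items():
    status = "green flag" if ok else "needs attention"
    print(f"{field}: {rate:.0%} missing -> {status}")
```

A check like this only covers completeness. Accuracy, relevance, and bias still need a review by people who know the data.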

Checking Your Technical and Team Feasibility

Beyond the data itself, a successful AI project leans heavily on having the right technical infrastructure and human expertise. This part of the analysis is all about looking at your organisation's current capabilities to see if you're truly set up to build, deploy, and maintain an AI solution.

A project can be perfectly planned and have flawless data, but it will still fail if the technical foundation is shaky or the team lacks the necessary skills to execute.

This feasibility check isn't about having the absolute latest, most expensive technology; it's about having the right technology and skills for the job at hand. You’ll need to evaluate your current tech stack, including cloud services, processing power, and development tools.

Even more importantly, you must be honest about your team's skillset. Do you have data scientists, machine learning engineers, and data analysts who know how to translate business goals into a functional model? As we explored in our AI adoption guide, having the right people is just as critical as having the right tech. If you spot gaps in expertise, you need a plan to fill them—whether that’s through hiring, upskilling your current team, or partnering with an external group like our expert team. This candid assessment prevents you from starting a project that’s logistically or technically doomed from the get-go.

Prioritising Use Cases for Maximum Impact

So, you've aligned your business goals and given your data a clean bill of health. Now comes the exciting, and often overwhelming, part: you're staring at a long list of potential AI projects. It's a great problem to have, but you can't do everything at once.

The real skill here is moving from a scattered brainstorm to a focused, strategic roadmap. This stage of your AI requirement analysis is all about making shrewd decisions to ensure your first few projects create a serious splash. From my experience, trying to boil the ocean is a classic recipe for disaster. A focused approach, on the other hand, helps you nail those early wins, build momentum, and show tangible value to the people holding the purse strings. This isn't about guesswork; it's about a structured way to sift through your ideas and find the true gems.

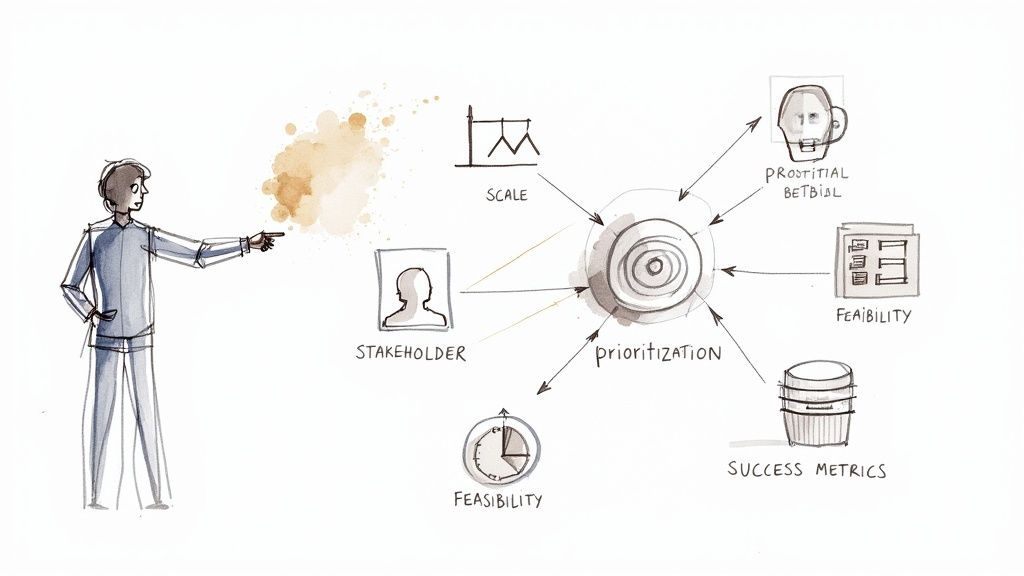

Creating a Scoring Framework

A good scoring framework injects much-needed objectivity into what can easily become a debate over pet projects. It forces everyone to evaluate each idea against the same set of criteria—criteria that matter to the business. Suddenly, the conversation shifts from "Which idea is coolest?" to "Which idea makes the most sense for us right now?"

To get this right, you need to score each potential project against four fundamental pillars:

Business Value: How much will this move the needle? We're talking hard numbers: direct revenue, cost savings, risk reduction, or even a measurable lift in customer satisfaction.

Technical Feasibility: Let's be realistic. Based on your current data and team expertise, how tough will this be? Is the tech mature enough for this specific problem, or are you venturing into uncharted territory?

Implementation Cost: What's the real price tag? Think beyond just the initial development. Factor in infrastructure, maintenance, and the time your team will be tied up.

Strategic Alignment: Does this project actually support the company's long-term vision? Will it give you an edge over the competition or open up a new market?

By giving each category a score (a simple 1-5 scale works well), you can calculate a total for every use case. This gives you a clear, data-informed ranking of your opportunities.
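To make the totalling step concrete, here is a minimal Python sketch. The use cases and their scores are invented for illustration. I've also assumed that Implementation Cost is scored inversely (5 means cheapest), so that a higher total is always better; if you score cost the other way round, subtract it instead.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    business_value: int         # 1-5, higher = more value
    technical_feasibility: int  # 1-5, higher = easier to deliver
    implementation_cost: int    # 1-5, higher = cheaper (assumed inverse scale)
    strategic_alignment: int    # 1-5, higher = closer to long-term vision

    def total(self) -> int:
        """Sum the four pillar scores after checking they are on the 1-5 scale."""
        scores = (
            self.business_value,
            self.technical_feasibility,
            self.implementation_cost,
            self.strategic_alignment,
        )
        for score in scores:
            if not 1 <= score <= 5:
                raise ValueError(f"{self.name}: scores must be between 1 and 5")
        return sum(scores)


def rank(use_cases):
    """Return use cases ordered from highest to lowest total score."""
    return sorted(use_cases, key=lambda u: u.total(), reverse=True)


# Hypothetical candidates and scores.
candidates = [
    UseCase("Factory predictive maintenance", 5, 2, 1, 5),
    UseCase("Invoice data extraction", 4, 4, 3, 4),
    UseCase("Support chatbot", 3, 4, 4, 3),
]

for use_case in rank(candidates):
    print(f"{use_case.total():>2}  {use_case.name}")
```

An unweighted sum treats all four pillars as equally important. If your business cares more about one of them, multiplying each score by a weight before summing is a natural extension.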

The goal isn't just to find any AI project, but to find the right one to start with. Prioritisation is the bridge between a good idea and a successful, high-impact implementation.

From a Long List to a Shortlist

With your scoring system ready, filtering that initial list of ideas becomes much more straightforward. The projects that float to the top are your prime candidates.

These are your sweet spots—the opportunities that offer a brilliant balance of high value and realistic achievability. They represent the quick wins that can deliver noticeable results without locking you into a massive, multi-year saga.

If your initial list is looking a bit thin, don't worry. A great way to get the creative juices flowing is to explore a library of real-world use cases. Seeing what others have already accomplished can often spark brilliant ideas for your own organisation.

Building Your Phased AI Roadmap

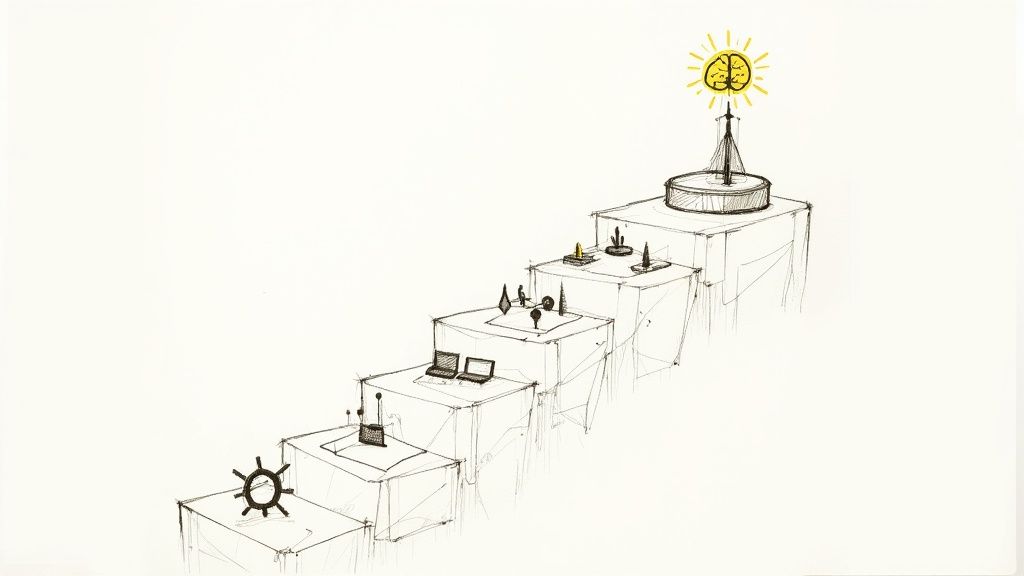

Once you have your shortlist, the final piece of the puzzle is to arrange these projects into a phased roadmap. This isn't a rigid plan set in stone. Treat it as a living document, a sequence of projects designed to deliver growing value over time.

A smart AI roadmap usually unfolds in a few logical phases:

Phase 1 (The Pilot): Kick things off with one or two high-value, low-complexity projects from your shortlist. The goal here is simple: prove the concept, learn some invaluable lessons, and get key people excited.

Phase 2 (The Expansion): Armed with the success and momentum from your pilot, you can start tackling slightly bigger challenges. This is where you scale your efforts and apply what you've learned to other parts of the business.

Phase 3 (The Transformation): With a solid track record, you've earned the right to go after the big, ambitious projects—the ones that become deeply embedded in your core operations.

This phased approach is a cornerstone of any sound AI strategy framework. It minimises risk, shows constant progress, and makes sure every step you take is built on a foundation of proven success. This is how you turn ambitious AI goals into a real, executable plan.

Setting Your Sights: Defining Success and Ethical Boundaries

So, how will you actually know if your AI project is a win? It's a simple question, but the answer often gets lost in the technical weeds. Let's be clear: a model with near-perfect accuracy means nothing if it doesn't move the needle on your business goals. True success is measured by real-world impact, which means you need to define your business-centric key performance indicators (KPIs) right from the start.

This isn't just a box-ticking exercise; it’s a crucial part of your AI requirement analysis. This is the moment you tie all the technical effort back to the "why" – the business objectives you hammered out earlier. Vague ambitions won't cut it. You need hard, trackable numbers that everyone on the project, from the data scientists to the C-suite, can understand and agree upon. These are the metrics that will ultimately prove the project's worth.

Looking Beyond Model Accuracy to Business Value

It’s incredibly common for tech teams to get laser-focused on optimising for metrics like precision and recall. And while these are important for building a robust model, they don't paint the full picture. I’ve seen projects boast about 99% accuracy yet completely fail to solve the actual business problem.

Your success metrics need to be a direct reflection of the goals you’ve already identified. Let's make this tangible with a few examples I've seen in the field:

For a sales forecasting model: Forget just focusing on prediction accuracy. The real goal is something like a 15% reduction in costly excess inventory or a 10% lift in sales for specific products you're pushing.

For a predictive maintenance system: Success isn't a flawless algorithm; it's a 20% drop in unplanned operational downtime. It’s a real, measurable decrease in what you spend on maintenance each year.

For a customer service chatbot: The true win is a 30% reduction in the number of simple queries that need a human agent's touch, or a clear improvement in customer lifetime value (CLV).

When you define these KPIs upfront, success stops being a matter of opinion and becomes an objective reality. It gives your team a clear finish line and ensures everyone is pulling in the same direction.
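Checking a reduction-style KPI against its target is simple arithmetic, and it helps to agree on the formula before the project starts. The Python sketch below uses made-up baseline and current figures; only the 20% and 30% targets come from the examples above.

```python
def percent_reduction(baseline, current):
    """Percentage drop from baseline to current (negative if the value rose)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline * 100


def kpi_met(baseline, current, target_reduction_pct):
    """True when the measured reduction reaches the agreed target."""
    return percent_reduction(baseline, current) >= target_reduction_pct


# Hypothetical measurements for two of the examples above.
downtime_hours = {"baseline": 120, "current": 90, "target": 20}      # predictive maintenance
human_queries = {"baseline": 1000, "current": 800, "target": 30}     # chatbot

for label, kpi in (("Unplanned downtime", downtime_hours),
                   ("Queries needing an agent", human_queries)):
    achieved = percent_reduction(kpi["baseline"], kpi["current"])
    met = kpi_met(kpi["baseline"], kpi["current"], kpi["target"])
    print(f"{label}: {achieved:.1f}% reduction, "
          f"target {kpi['target']}% -> {'met' if met else 'not met'}")
```

The important design choice is the baseline: it has to be measured before the AI system goes live, over a period long enough to be representative.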

Success isn't just about what the AI can do; it's about what it achieves for the business. The only way to prove a genuine return on investment is by linking your metrics directly to financial or operational outcomes.

Weaving Ethical Guardrails into Your Project DNA

In the excitement of launching a new AI initiative, it's dangerously easy for ethical considerations to become an afterthought. But let's be realistic: a project that boosts profits but sinks your company's reputation is a spectacular failure. That’s precisely why building ethical guardrails directly into your requirements from day one isn't optional—it's essential.

This is about proactively thinking through the tough questions and planning for them. It means asking:

Fairness: How do we make sure our AI doesn't just copy, or even worsen, existing societal biases? This requires a hard look at your training data and rigorous testing to check for biased outcomes across different groups of people.

Transparency: Can we actually explain why the AI made a particular decision? In high-stakes situations, a "black box" model is a massive liability. You have to decide on the level of explainability you need before you start building.

Accountability: When the AI gets it wrong—and it will—who is responsible? You need to establish crystal-clear lines of ownership and have processes in place for monitoring the model and fixing issues as they appear.
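The fairness question, for example, can be given a first, rough test by comparing how often the model returns a positive decision for each group. The Python sketch below computes that gap on invented decisions. It is one simple measure among many (often called a demographic parity gap), and the group labels and data are assumptions for illustration only.

```python
from collections import defaultdict


def positive_rate_by_group(outcomes):
    """Share of positive decisions per group.

    outcomes: iterable of (group_label, decision) pairs, decision being True/False.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in outcomes:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group rate (0 = identical rates)."""
    return max(rates.values()) - min(rates.values())


# Invented model decisions for two hypothetical applicant groups.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = positive_rate_by_group(decisions)
print(rates)
print(f"parity gap: {parity_gap(rates):.2f}")
```

A large gap is a prompt for investigation rather than proof of unfairness, and a small gap does not rule out other kinds of bias. What counts as acceptable is a requirement to agree with legal and compliance stakeholders.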

As we explored in our AI adoption guide, building this foundation of trust is fundamental to the long-term success of any AI system. By tackling these ethical questions during the requirements phase, you’re not just managing risk. You’re building a stronger, more dependable, and ultimately more valuable solution. This kind of forward-thinking, a cornerstone of effective AI strategy consulting, ensures your project succeeds on every level—financially, operationally, and ethically.

From Analysis to Action with Expert Guidance

A thorough AI requirement analysis is more than just a box-ticking exercise; it's the foundational blueprint for your entire project. It’s where you align your business strategy with the right technology and, most importantly, genuine human expertise. Getting this initial work right is what consistently separates successful AI initiatives from expensive learning experiences. For any organisation serious about getting results, partnering with seasoned professionals can make all the difference.

This is particularly true when you look at what's happening on the ground. In Germany, for instance, AI adoption has jumped significantly: 19.8% of companies were using AI in 2024, a sharp increase from 11.6% a year earlier. But here's the catch: that growth is running headfirst into a massive talent gap, with a reported 78% shortage of skilled AI professionals. This skills crunch makes expert guidance not just helpful, but often essential. You can discover more insights on the German AI market to get a clearer picture of the competitive environment.

A successful AI initiative is always a fusion of strategy, technology, and deep human expertise. A structured, upfront approach is your best defence against common project pitfalls.

Turning Your Plan into a Reality

Bringing in external knowledge from day one helps you sidestep common mistakes and keep your focus squarely on what will deliver real value. It ensures your projects are built for success right from the start. Whether you need an AI strategy consulting tool to bring structure to your process or someone in the trenches with you to navigate the complexities, the right partner is invaluable.

Our approach is hands-on. We often kick things off with a discovery session designed to solidify your findings and craft a clear, actionable plan. In fact, many of our clients find our AI discovery workshop is the perfect next step after they've done their initial analysis. It's a practical, collaborative way to pressure-test your ideas and walk away with a concrete roadmap.

When you're ready to transform your analysis into a fully-realised project, remember that success hinges on having the right people in your corner. Our expert team is here to guide your organisation toward responsible and impactful AI implementation.

Frequently Asked Questions

When you're stepping into the world of AI projects, it's natural for questions to pop up. Let's tackle some of the most common ones I hear from teams to help you navigate your AI requirement analysis with more confidence.

What Is the Biggest Mistake in AI Requirement Analysis?

I've seen it happen time and time again: teams get dazzled by the technology and dive straight into discussing algorithms and models. This is, without a doubt, the single biggest pitfall.

They get so caught up in the potential of AI that they skip the most crucial part: getting a crystal-clear, shared understanding of the business problem they're actually trying to solve. When you don't start with the "why," you end up with a technically impressive model that doesn't move the needle for the business. It’s a classic case of a solution looking for a problem.

How Is AI Analysis Different from Traditional Software Analysis?

That's a great question, and the distinction is vital. While you'll still do things like stakeholder interviews, AI requirement analysis has its own unique set of challenges that can make or break a project.

The real difference comes down to three things: an intense focus on data (its quality, volume, and hidden biases), the probabilistic nature of the outcomes (AI gives you educated guesses, not guarantees), and the need for constant monitoring and retraining long after the model goes live.

Think of it this way: in traditional software, you write the rules. In AI, you define the goal and feed the system the right data so it can learn the rules for itself.

How Long Should AI Requirement Analysis Take?

There's no magic number here. The timeline really depends on the project's complexity, how comfortable your organisation is with AI, and the state of your data. A simple chatbot project will be much quicker than, say, a predictive maintenance system for an entire factory floor.

As a rule of thumb, I advise clients to dedicate a solid 15-20% of the total project timeline to this discovery phase before any major development work begins. Trying to save time here is a false economy. A thorough upfront investment to define goals and check feasibility is what prevents costly rework and project failure down the line.
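To see what that rule of thumb means in practice, the short Python sketch below turns the 15-20% range into weeks. The 40-week project length is a made-up example.

```python
def discovery_window(total_weeks, low_pct=15, high_pct=20):
    """Return the (minimum, maximum) weeks to reserve for requirement analysis."""
    if total_weeks <= 0:
        raise ValueError("total_weeks must be positive")
    return total_weeks * low_pct / 100, total_weeks * high_pct / 100


# Hypothetical 40-week project.
low, high = discovery_window(40)
print(f"Reserve roughly {low:g} to {high:g} weeks for analysis")
```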

If you have more questions, our main FAQ page is another great resource to explore. For direct support, you can always connect with our team to discuss your specific needs.