A Guide to AI Requirements Planning

Master AI requirements planning with our expert guide. Learn to define data, model, and infrastructure needs to ensure your AI projects succeed.

Trying to shoehorn an AI project into a traditional software development plan is a recipe for disaster. It's like trying to navigate a new city with a map from ten years ago—you might get somewhere, but it won't be where you intended, and you'll be frustrated the entire way.

The fundamental disconnect is this: AI development is probabilistic, not deterministic. Success isn't just about writing flawless code. It hinges on the quality of your data, constant model experimentation, and a cycle of iterative learning. Rigid, old-school plans become obsolete the moment you start.

Why Old Planning Methods Fail AI Projects

Classic methodologies, from waterfall to even some standard agile frameworks, are built on the assumption of a clear, fixed scope and predictable outcomes. You define the features, your team builds them, quality assurance tests them, and you deploy. This works beautifully when you know precisely what the end product needs to do.

But AI projects are, by their very nature, experimental. You’re not just building a static feature; you're teaching a system how to learn and make decisions on its own. This introduces a massive amount of uncertainty that traditional project plans simply can't accommodate.

This paradigm shift is central to our philosophy of AI co creation and a core tenet of our AI strategy consulting practice.

The Problem with Predictability

Traditional planning is all about predictable timelines and resource allocation. For an AI initiative, many of the most critical variables are complete unknowns at the starting line.

- Data Availability and Quality: Do you actually have enough of the right data? Is it clean enough to train a model that works? You often won't know the real answer until your data scientists start digging in.

- Model Performance: You can set a target, but you can't simply decree that a model will achieve 95% accuracy. Whether that level of performance is reachable is discovered through rigorous experimentation, not settled upfront in a project charter.

- Iterative Learning: Some of the most valuable breakthroughs happen during the project, forcing you to pivot your strategy. A rigid plan actively works against this essential learning process, stifling innovation.

This deep-seated uncertainty is precisely why a new playbook for AI requirements planning is so critical. The numbers back this up: companies without a formal AI strategy report a success rate of only 37%. This tells us that a structured, yet highly flexible, planning framework is non-negotiable. If you want to dive deeper, you can discover more insights on AI success rates here.

Think of it this way: a traditional project is like building a bridge with a fixed blueprint. An AI project is more like exploring uncharted territory with a compass and a map that you have to draw as you go. You know the destination, but the exact path is discovered along the journey.

To drive this point home, let's look at the core differences between these two worlds. A simple copy-and-paste of old methods just won't cut it.

Traditional Software vs AI Project Planning

This table breaks down the fundamental distinctions that make AI project planning a unique discipline.

| Aspect | Traditional Software Planning | AI Requirements Planning |
| --- | --- | --- |
| Outcome | Deterministic and predictable | Probabilistic and experimental |
| Requirements | Fixed features and functions | Performance metrics and data needs |
| Process | Linear and sequential (e.g., Waterfall) | Iterative and cyclical |
| Core Asset | Code and architecture | Data and algorithms |
| Risk | Scope creep and budget overruns | Poor model performance and bad data |

Grasping these differences is the first real step toward building an AI initiative that actually delivers. It's less about rigid execution and more about embracing adaptive exploration. This mindset shift is everything.

From Business Ambition to AI Blueprint

This is where the magic—and the hard work—really begins. You've got a big-picture business goal, maybe something like "we need to improve customer retention." That’s a fantastic starting point, but you can't just hand that to a data science team. It’s an ambition, not an algorithm.

The most critical part of AI planning is translating that broad vision into a concrete, well-defined machine learning problem. This is the bridge between strategic intent and technical execution. Nailing this step saves countless hours and prevents projects from going off the rails.

Success boils down to getting crystal clear on three things: the real business problem you're solving, the data you'll need to solve it, and how you'll measure success. Without this clarity, your technical team is flying blind, trying to hit a target they can't even see.

For smaller teams, this translation can feel daunting. This is often where bringing in outside help makes sense. A guide on AI consulting for small businesses highlights how specialized support can help connect those high-level goals to specific, actionable AI requirements.

Pinpointing the Core Business Objective

First things first, you have to reframe your business goal as a specific prediction or classification task. Let's stick with the "improve customer retention" example.

- Vague Business Goal: "We need to reduce customer churn."

- Specific ML Task: "We need to predict which customers are most likely to churn in the next 30 days based on their recent activity, support ticket history, and subscription tier."

See the difference? That reframing provides immediate direction. Suddenly, you have a clear question for the AI to answer. This isn't just semantics; it's the foundation of the entire project.
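
To make that concrete, the churn task above can be written down as a labeling rule. This is only a minimal sketch under stated assumptions: the `label_churn` helper, its arguments, and the example dates are hypothetical, and the only detail taken from the task statement is the 30-day window.

```python
from datetime import date, timedelta
from typing import Optional

# From the task statement: "likely to churn in the next 30 days".
PREDICTION_WINDOW = timedelta(days=30)


def label_churn(snapshot: date, cancelled_on: Optional[date]) -> int:
    """Return 1 if the customer cancelled within the window after the snapshot, else 0.

    `snapshot` is the (hypothetical) date on which the prediction is made;
    `cancelled_on` is None for customers who have not cancelled.
    """
    if cancelled_on is None:
        return 0
    return int(snapshot < cancelled_on <= snapshot + PREDICTION_WINDOW)


snapshot = date(2024, 1, 1)
print(label_churn(snapshot, date(2024, 1, 20)))  # cancelled inside the window
print(label_churn(snapshot, date(2024, 3, 1)))   # cancelled after the window closed
print(label_churn(snapshot, None))               # still a customer
```

Writing the rule down this way forces the team to agree on details the vague goal hides, such as what counts as "churned" and which date the clock starts from.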

The same logic applies across the board:

- Goal: "Increase sales from our email campaigns."

- ML Task: "Classify which users in our database are most likely to respond to a discount offer for Product X."

- Goal: "Make our support team more efficient."

- ML Task: "Automatically categorize incoming support tickets into 'Billing,' 'Technical Issue,' or 'General Inquiry' with high accuracy."

What's Your Data Story?

With a specific task in hand, the conversation immediately turns to data. And it's not just about having data; it's about having the right data. Your requirements need to get granular about where it's coming from, its quality, how much you have, and any privacy hoops you need to jump through.

Let's go back to our churn prediction model. Your data requirements document should answer these questions:

- Sourcing: Where does this data live? Is it all in our CRM, or is it spread across a billing platform and a separate support database?

- Quality: How clean is it, really? Are there a ton of missing values in the 'last login date' field that we need to account for?

- Volume: Do we actually have enough historical data—on both customers who churned and those who stayed—to train a reliable model?

- Privacy: What PII (Personally Identifiable Information) is involved? How are we going to make sure we're compliant with regulations like GDPR?
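
The Quality and Volume questions in particular can be answered with a few lines of code long before any modeling starts. The sketch below is illustrative only: the records, the `last_login_date` and `churned` field names, and both helper functions are assumptions made for the example, not a description of any real CRM schema.

```python
from collections import Counter
from typing import Any, Dict, List


def missing_rate(rows: List[Dict[str, Any]], field: str) -> float:
    """Fraction of rows where `field` is absent or None."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) is None)
    return missing / len(rows)


def class_counts(rows: List[Dict[str, Any]], label_field: str) -> Counter:
    """How many examples exist for each label value (e.g. churned vs. stayed)."""
    return Counter(row[label_field] for row in rows)


# Hypothetical customer records standing in for a CRM export.
customers = [
    {"id": 1, "last_login_date": "2024-05-01", "churned": 0},
    {"id": 2, "last_login_date": None, "churned": 1},
    {"id": 3, "last_login_date": "2024-04-12", "churned": 0},
    {"id": 4, "churned": 0},  # field missing entirely
]

print(missing_rate(customers, "last_login_date"))
print(class_counts(customers, "churned"))
```

A high missing rate or a tiny count for one class is exactly the kind of roadblock worth finding at this stage.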

A classic mistake is just assuming the data you need is ready to go. A thorough data audit is a non-negotiable part of AI requirements planning. It helps you spot the roadblocks before you’ve sunk serious time and money into a project. A detailed plan for data is a key component of a Custom AI Strategy report.

Choosing Metrics That Actually Matter

Finally, how will you know if you've succeeded? Technical accuracy, on its own, can be a dangerous vanity metric. Think about it: a model that's 99% accurate at predicting churn sounds amazing, right? But what if your natural churn rate is only 1%? The model could just be guessing "no churn" for every single customer and still look great on paper.

You need to choose performance metrics that are directly tied to your business outcomes.

- Precision: Of all the customers we predicted would churn, how many actually did? High precision avoids wasting money and effort trying to "save" customers who were never going to leave.

- Recall: Of all the customers who actually churned, how many did our model successfully catch? High recall ensures you aren't missing the very people you're trying to identify.

- F1-Score: This is the harmonic mean of Precision and Recall, so it only scores well when both are high. It's particularly useful when the business cost of getting it wrong is similar for both false positives and false negatives.
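
The accuracy trap described above is easy to reproduce. Here is a small sketch, assuming a made-up dataset of 1,000 customers with a 1% churn rate and a "model" that always predicts "no churn"; the `evaluate` function is a hypothetical helper written for this example, and returning 0.0 for an undefined ratio is a convention chosen here, not a standard.

```python
from typing import Dict, Sequence


def evaluate(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for binary labels (1 = churned)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)

    # Convention for this sketch: report 0.0 when a ratio is undefined.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical data: 1,000 customers, 10 of whom churn.
y_true = [1] * 10 + [0] * 990
always_no_churn = [0] * 1000

print(evaluate(y_true, always_no_churn))
```

The lazy model scores 0.99 on accuracy while its recall is 0.0: it never catches a single churner, which is the whole point of the project.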

Picking the right metric from the start is what ensures the AI is truly optimized to create value, not just to look good in a report. It brings everything full circle, tying the model's technical performance directly back to the original business objective.

Mapping Your Data and Infrastructure Blueprint

An ambitious AI vision is only as good as the technical foundation it’s built on. Before anyone even thinks about writing model code, you have to get brutally honest about your data architecture and infrastructure. This is where your AI requirements planning moves from a whiteboard concept to something real—making sure you actually have the horsepower and data pipelines to pull it off.

Honestly, skipping this step is like designing a skyscraper without checking the bedrock first. The whole project is at risk of collapse. You have to start by asking some tough questions. Is your data locked away in a dozen different legacy systems? Do you have a scalable way to actually process and serve that data to a hungry AI model?

This isn't just a technical exercise for the IT department; it's a core strategic activity. It’s the moment your big ideas meet the reality of what you can do today, and it shines a bright light on the gaps you need to fill right now.

Conducting a Data Readiness Audit

First things first: you need to create a complete inventory of your data assets. And I don't just mean a list of databases. You need to know if the data is actually usable for machine learning.

I’ve seen plenty of projects get derailed because the data they thought they had was a complete mess. To avoid that, run through a simple audit. Ask yourself:

- Is it accessible? How easily can your data scientists get their hands on what they need? Are they going to be stuck waiting for permissions or battling technical hurdles for weeks?

- Is it clean? Take a hard look at the data's completeness. Are there huge gaps, inconsistent formats, or old, useless entries that will demand a massive pre-processing effort?

- Is it labeled? If you're doing supervised learning (as most projects are), is your data labeled? If not, what's the plan? You'll need to budget for the time and cost to get it labeled accurately.

- Is there enough of it? Do you have enough historical data to train a model that actually learns something useful? And can your systems keep up with the speed at which new data is flooding in?
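
The "Is it clean?" check is one you can automate early. As a rough sketch, the snippet below counts date values that are unparseable or too old to be useful. Everything specific in it is an assumption for illustration: the two accepted formats, the one-year staleness cutoff, and the sample values.

```python
from datetime import date, datetime
from typing import Dict, Iterable, Optional

# Hypothetical: the formats we expect to find across source systems.
KNOWN_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(raw: str) -> Optional[date]:
    """Try each known format in turn; return None if none of them fit."""
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(raw, fmt).date()
        except ValueError:
            continue
    return None


def audit_dates(values: Iterable[str], today: date, max_age_days: int) -> Dict[str, int]:
    """Count values that are usable, unparseable, or older than the cutoff."""
    report = {"ok": 0, "unparseable": 0, "stale": 0}
    for raw in values:
        parsed = parse_date(raw)
        if parsed is None:
            report["unparseable"] += 1
        elif (today - parsed).days > max_age_days:
            report["stale"] += 1
        else:
            report["ok"] += 1
    return report


sample = ["2024-05-01", "01/05/2024", "May 1st", "2019-01-01"]
print(audit_dates(sample, today=date(2024, 6, 1), max_age_days=365))
```

Counts like these turn "the data is messy" into a number you can put in the plan and budget against.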

Answering these questions forms the backbone of your data strategy and is a non-negotiable part of AI requirements planning. You might discover that you need to invest in a proper data warehouse or build out some new ETL pipelines before you can even get started. Better to know that now.

Choosing the Right Infrastructure Stack

Once you have a handle on your data situation, the conversation shifts to the hardware. The choices you make here will directly impact your budget, your ability to scale, and how fast you can move.

The AI infrastructure market is absolutely exploding for a reason. By 2025, it's expected to be worth somewhere between $60.23 billion and $156.45 billion worldwide. That's a staggering figure, and it's all driven by companies needing specialized, high-powered systems. You can read the full research about AI infrastructure market growth to get a sense of just how big this shift is.

Your main decisions will boil down to a few key areas:

- Cloud vs. On-Premise: This is the classic debate. Cloud platforms like AWS, Azure, and GCP offer incredible flexibility and the ability to scale up or down on a dime, which is perfect when you're experimenting. On-premise setups give you more control, especially over data security, and can be cheaper in the long run for very predictable, heavy workloads.

- CPU vs. GPU: This is less of a debate and more of a technical necessity. For any serious deep learning—think computer vision or natural language processing—GPUs (Graphics Processing Units) are essential. Their parallel processing capabilities are just on another level. For simpler machine learning models, traditional CPUs (Central Processing Units) might be perfectly fine.

A project trying to analyze thousands of customer photos to detect sentiment? That screams for a GPU-powered cloud setup. But a model that forecasts next quarter's sales based on clean, tabular data? That could probably run just fine on a CPU-based server you already have in-house.
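
The "cheaper in the long run" argument for on-premise hardware is ultimately a break-even calculation. The sketch below shows the arithmetic only; the function name and every dollar figure are hypothetical placeholders, and a real comparison would also need to account for staffing, depreciation, and utilization.

```python
from typing import Optional


def break_even_months(
    on_prem_upfront: float,
    on_prem_monthly: float,
    cloud_monthly: float,
) -> Optional[float]:
    """Months until cumulative on-premise cost drops below cumulative cloud cost.

    Returns None when the cloud is no more expensive per month, in which case
    the upfront hardware spend is never recovered.
    """
    monthly_saving = cloud_monthly - on_prem_monthly
    if monthly_saving <= 0:
        return None
    return on_prem_upfront / monthly_saving


# Hypothetical figures, for illustration only.
print(break_even_months(on_prem_upfront=120_000, on_prem_monthly=2_000, cloud_monthly=7_000))
```

If the break-even point sits beyond the period you can confidently forecast your workload, the flexibility of the cloud is usually the safer bet while you are still experimenting.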

Making these infrastructure calls early on saves a world of headaches and costly changes down the road. It ensures your technical blueprint can support your business goals from day one and grow with you.

Integrating Human Expertise and Ethical Guardrails

Technology can't succeed in a vacuum. The most brilliant algorithm is useless—or even dangerous—without human oversight and a strong ethical foundation. This is why a critical part of AI requirements planning is figuring out where people fit in and establishing clear principles right from the start.

An AI system's "intelligence" is a direct reflection of the data and human expertise it learns from. You absolutely have to get your domain experts, data scientists, and end-users involved in the development process from day one. Their knowledge isn’t an afterthought; it’s a core requirement that needs to be baked into the system. This collaborative approach is a cornerstone of our AI Product Development Workflow.

Defining the Human-in-the-Loop

The "human-in-the-loop" isn't just one job. Think of it as a series of checkpoints where human judgment is non-negotiable. During your planning, you need to pinpoint exactly where these interventions will happen.

- Domain Experts: Your subject matter experts are the ones who can validate data labels and review model outputs to see if they make sense in the real world. They set the business context for what "good performance" actually means.

- Data Scientists: Their job is to turn that expert knowledge into technical specs. They're also responsible for spotting potential biases in the dataset and explaining how a model works to people who aren’t data scientists.

- End-Users: They offer the ultimate reality check. They’ll tell you if the interface is usable and if the AI's outputs are actually helpful and trustworthy in their day-to-day work. Without their buy-in, even the most accurate model will sit on a shelf.

This constant back-and-forth ensures the AI evolves in a way that truly serves the business and its people. For complex projects, managing these roles can be tough, but our expert team has years of experience structuring these human-centric workflows.

Building Your Ethical Framework

Ethical guardrails aren't just about ticking compliance boxes—they're about building trust. A model that churns out biased or inexplicable results will be rejected by users and could put your company's reputation on the line. Your requirements must tackle these issues head-on.

Your ethical framework is a core project requirement, not a "nice-to-have." It should define how you will measure and mitigate bias, ensure transparency, and maintain fairness, creating a foundation of trust with your users and stakeholders from day one.

Start by asking some tough questions:

- Fairness and Bias: How will we audit our training data for historical biases? What metrics will we use to ensure the model performs equitably across different user groups?

- Transparency and Explainability (XAI): When the model makes a prediction, how will we explain the "why" behind it to a customer or a regulator? Can we do it in plain English?

- Accountability: If the AI messes up, who is responsible? What’s the process for someone to flag, review, and correct an error?
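
One way to turn the fairness question into something measurable is to compute the same metric separately for each user group and look at the gap. The sketch below uses recall per group, which is just one of several possible choices; the `recall_by_group` helper, the group names, and the data are all made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def recall_by_group(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[Hashable],
) -> Dict[Hashable, float]:
    """Recall computed separately for each group that has at least one positive."""
    positives: Dict[Hashable, int] = defaultdict(int)
    caught: Dict[Hashable, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {group: caught[group] / positives[group] for group in positives}


# Hypothetical predictions for two user groups, "a" and "b".
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]
groups = ["a", "a", "b", "b", "a", "b"]

per_group = recall_by_group(y_true, y_pred, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

A requirement can then state the largest gap the business is willing to accept, which gives reviewers something specific to audit.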

Defining these guardrails upfront is just responsible innovation. To make sure your AI initiatives are managed ethically and responsibly, you should also explore these essential AI governance best practices. By putting both human expertise and ethical principles at the forefront, you build AI solutions that are not only powerful but also trustworthy and sustainable.

Building a Dynamic and Iterative AI Roadmap

Let's get one thing straight: the old-school, five-year strategic plan is a death sentence for an AI project. If you try to carve your AI roadmap in stone, you're setting yourself up for failure. The field moves too fast. Instead, the most successful AI initiatives I've seen are built on a dynamic, iterative plan—one that expects and even embraces uncertainty.

Think of your roadmap less as a single, rigid path and more as a series of focused sprints. Each sprint has one job: to answer a critical question or prove (or disprove) a key assumption. This agile approach lets your team learn from real results, not just outdated projections, and pivot when necessary. It's a highly collaborative process, a core principle of AI co creation, where diverse teams work together to build a strategy that can actually react to change.

Structuring Your Roadmap for Agility

The heart of an iterative roadmap is breaking down a massive project into smaller, digestible phases. Every phase needs a crystal-clear goal, a realistic timeline, and—this is non-negotiable—a formal go/no-go decision gate at the end. This simple structure is your best defense against throwing good money after bad.

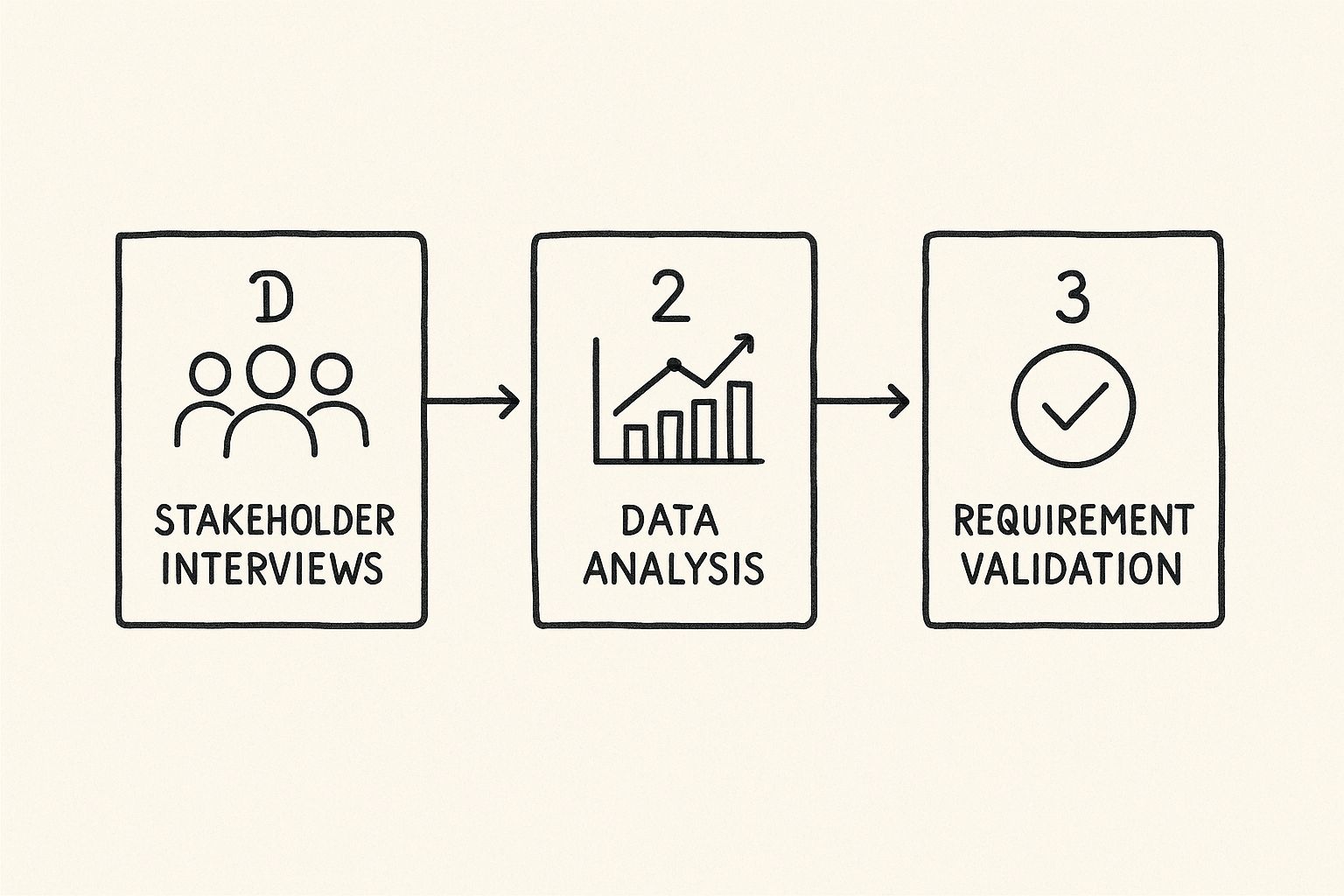

The flow usually moves from discovery and interviews right through to final validation of the requirements.

This diagram shows how you start with stakeholder insights, use those to guide your data analysis, and then formally validate everything to make sure the plan is both technically possible and actually solves the business problem.

At the end of each phase, whether it's a proof-of-concept or a pilot program, everyone needs to ask the hard questions: "Did we hit our mark? And based on what we just learned, does it still make sense to move forward?" This discipline turns AI requirements planning from a one-off task into a continuous, living process. You can see how this flexibility plays out in many real-world use cases.

To make this concept more concrete, here’s a look at how these phases typically break down in an iterative AI project.

Phases of an Iterative AI Project Roadmap

| Phase | Key Activities | Primary Goal |
| --- | --- | --- |
| Phase 0: Ideation & Feasibility | Brainstorming, high-level business case, data availability check, initial stakeholder interviews. | Validate the problem and determine if an AI solution is viable and valuable. |
| Phase 1: Proof-of-Concept (PoC) | Build a small-scale model with a limited dataset, test a core hypothesis, define key metrics. | Prove technical feasibility. Can we actually build a model that does the thing? |
| Phase 2: Pilot Program | Develop a Minimum Viable Product (MVP), deploy to a small, controlled user group, gather initial feedback. | Test the solution in a real-world (but limited) environment. Does it work and deliver value? |
| Phase 3: Scaled Deployment | Refine the model based on pilot feedback, integrate with production systems, roll out to a wider audience. | Achieve full operational deployment and start realizing business value at scale. |
| Phase 4: Ongoing Optimization | Continuous monitoring, scheduled retraining, implement user feedback loops, plan for model v2. | Maintain and improve model performance over its entire lifecycle. |

Each phase provides a natural checkpoint to assess progress and decide the next steps, ensuring the project stays aligned with business reality.
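
A go/no-go gate works best when its criteria are written down before the phase starts. As a minimal sketch, a gate can be expressed as a list of minimum metric values checked against the phase's results. The `GateCriterion` and `evaluate_gate` names and the thresholds below are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class GateCriterion:
    metric: str
    minimum: float


def evaluate_gate(
    criteria: List[GateCriterion],
    results: Dict[str, float],
) -> Tuple[bool, List[str]]:
    """Return (go, failed_metrics). A metric missing from `results` counts as a failure."""
    failures = [
        c.metric
        for c in criteria
        if results.get(c.metric, float("-inf")) < c.minimum
    ]
    return (not failures, failures)


# Hypothetical exit criteria for a proof-of-concept phase.
poc_gate = [GateCriterion("precision", 0.80), GateCriterion("recall", 0.60)]
poc_results = {"precision": 0.85, "recall": 0.50}

go, failed = evaluate_gate(poc_gate, poc_results)
print("GO" if go else f"NO-GO, failed: {failed}")
```

A "no-go" here is not necessarily the end of the project. It is a prompt to revisit the data, the task framing, or the threshold itself before more budget is committed.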

Planning for the Full AI Lifecycle

A great roadmap looks beyond the go-live date. AI models aren't "set it and forget it" assets. Their performance degrades over time as real-world data patterns change—it’s called model drift, and it's inevitable. Your plan has to account for this from day one.

Make sure your roadmap explicitly includes these post-deployment activities:

- Scheduled Model Retraining: This isn't a bug; it's a feature. Plan to retrain your model with fresh data at regular intervals to maintain its accuracy and relevance.

- Continuous Performance Monitoring: You need to know how the model is doing in the wild. Define your key metrics and set up automated alerts for when performance drops below an acceptable threshold.

- Feedback Loops: Create simple, direct ways for end-users to give feedback. This qualitative data is gold for identifying subtle issues and planning the next set of improvements.
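
The monitoring item above can start out very simple. Here is one possible sketch: track whether each recent prediction turned out to be correct in a fixed-size window and raise an alert once the rolling rate falls below a threshold. The `PerformanceMonitor` class, the window size, and the threshold are assumptions for illustration; a production setup would typically sit on top of a proper monitoring stack.

```python
from collections import deque


class PerformanceMonitor:
    """Rolling check of how often recent predictions turned out to be correct."""

    def __init__(self, threshold: float, window: int) -> None:
        self.threshold = threshold
        self.outcomes = deque(maxlen=window)  # oldest outcomes fall off automatically

    def record(self, correct: bool) -> bool:
        """Store one outcome; return True if an alert should fire."""
        self.outcomes.append(correct)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.outcomes) / len(self.outcomes)
        return rate < self.threshold


# Hypothetical settings: alert when fewer than 80% of the last 5 predictions were right.
monitor = PerformanceMonitor(threshold=0.8, window=5)
for outcome in [True, True, True, True, True, False, False]:
    if monitor.record(outcome):
        print("ALERT: rolling performance below threshold")
```

The same alert is a natural trigger for the retraining step, so the two activities reinforce each other rather than running on separate calendars.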

By baking these cycles into your plan, you transform your roadmap from a static document into a living guide for the AI's entire life. Documenting this in a Custom AI Strategy report is a fantastic way to keep everyone on the same page. This kind of forward-thinking is the hallmark of effective AI strategy consulting.

From Prototype to Production and Beyond

This is the make-or-break moment. You’ve built a promising AI prototype, and now it’s time to move it from the lab into the real world. This transition from a controlled experiment to a reliable, production-ready system is where many AI initiatives fall flat.

Successfully navigating this final hurdle is all about bridging the gap between your careful AI requirements planning and the messy reality of execution.

The secret? Think like a software company and embrace MLOps (Machine Learning Operations) right from the start. MLOps is what brings discipline, automation, and reliability to the entire AI lifecycle. We’re talking about building automated pipelines for data processing, systematically managing different model versions as they improve, and setting up dashboards for real-time performance monitoring.
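
To give a feel for what "systematically managing different model versions" means, here is a deliberately tiny in-memory sketch. It is not how any particular MLOps tool works; the `ModelRegistry` class and its methods are hypothetical, and real teams would normally use a dedicated model registry rather than roll their own.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    version: int
    metrics: Dict[str, float]


class ModelRegistry:
    """Keeps every trained version and tracks which one is serving production."""

    def __init__(self) -> None:
        self._versions: List[ModelVersion] = []
        self._production: Optional[int] = None

    def register(self, metrics: Dict[str, float]) -> ModelVersion:
        """Record a newly trained model and assign it the next version number."""
        entry = ModelVersion(version=len(self._versions) + 1, metrics=dict(metrics))
        self._versions.append(entry)
        return entry

    def promote(self, version: int) -> None:
        """Mark an existing version as the production model."""
        if not any(v.version == version for v in self._versions):
            raise KeyError(f"unknown model version: {version}")
        self._production = version

    @property
    def production(self) -> Optional[int]:
        return self._production


registry = ModelRegistry()
v1 = registry.register({"precision": 0.81})
v2 = registry.register({"precision": 0.86})
registry.promote(v2.version)
print(registry.production)
```

Because older versions are never overwritten, rolling back is just another call to `promote` with the previous version number.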

For teams looking for a structured path through this maze, our AI Product Development Workflow offers a clear roadmap from prototype to a fully operational system.

Ensuring Adoption and Long-Term Value

A technically brilliant model is useless if nobody uses it. This simple truth is why change management and user adoption have to be core components of your plan, not afterthoughts.

User training isn't just a one-time webinar. It’s an ongoing process designed to build trust and prove, day in and day out, how this new AI tool genuinely makes people's jobs easier.

The stakes for getting this right are incredibly high. The AI industry is booming, with an estimated 97 million people working in the field globally. What’s more, 83% of companies now say AI is a top priority in their business plans. You can discover more insights about AI's global impact here.

An AI solution's true value isn't measured by its accuracy in a lab, but by its adoption and impact in the field. Effective deployment and user training are what turn a technical achievement into a business asset.

For organizations that want to sidestep the complexities of deployment, scaling, and maintenance, our AI Automation as a Service offering can manage the entire operational side. This ensures your solution not only goes live smoothly but continues to deliver value long after the launch party.

Common Questions About AI Planning

As you dive into planning an AI project, a lot of questions inevitably pop up. It’s a different beast than traditional software development. Here are a few of the most common questions we get asked, with some straightforward answers to help you navigate the process.

How Are AI Requirements Different From Traditional Software Requirements?

The fundamental difference comes down to certainty versus probability.

With traditional software, you write requirements that are deterministic. They're based on specific, predictable functions. For instance, "When a user clicks the 'Export' button, the system must generate a PDF report." It either works or it doesn't.

AI requirements, on the other hand, are probabilistic and built around data. You're not defining a hard-coded outcome but rather the desired performance. You'll focus on metrics like, "The model must identify fraudulent transactions with 90% precision," and you'll set standards for data quality and ethical boundaries. The system learns the outcome instead of being explicitly programmed for it.

What Is the Most Common Mistake in AI Requirements Planning?

By far, the biggest pitfall we see is a failure to properly translate a business problem into a well-defined machine learning problem.

It's tempting to start with a broad, fuzzy goal like "let's use AI to improve efficiency." But without pinning down exactly what that means, the project is almost guaranteed to drift and ultimately fail. True clarity is the bedrock of any successful AI initiative. You have to get specific about the metrics, the data, and the exact problem you're trying to solve.

How Much Data Do I Need for an AI Project?

This is the classic "it depends" question, but for a very good reason. The amount of data you need is directly tied to two things: the complexity of the problem you're solving and the type of algorithm you're using.

For a relatively simple model, you might get by with a few thousand well-labeled data points. But if you're tackling something much more complex, like medical image analysis using deep learning, you could easily need millions of examples to train a model that performs reliably.

For more in-depth answers to these kinds of questions, you can always explore our comprehensive FAQ page. If you're ready to start defining your own AI project, our AI requirements analysis tool can help you get started. You can also learn more about the people behind our success by meeting our team.