Creating Effective AI Use Cases for Your Industry | Boost Results

Learn how creating effective AI use cases for your industry can drive business success. Discover practical strategies to stay ahead and maximize AI benefits.

I've seen it happen time and time again: a company gets excited about AI, rushes to buy a generic, off-the-shelf tool, and ends up with an expensive failure. Why? Because they started with the solution, not the problem.

Crafting AI use cases that actually deliver value for your specific industry isn't about chasing the latest tech. It's about taking a hard look at your own business—your unique challenges, your data, your workflows—and asking a fundamental question: "What do we need to do better?" This guide will give you a practical framework to make that shift, ensuring every AI project you undertake is tied to real, measurable results.

Why Your AI Strategy Needs to Be More Than Just Tech

The pressure to adopt artificial intelligence is immense. Everyone wants a competitive edge, and the promise of AI-driven efficiency is tough to ignore. The problem is, too many organizations get it backward. They grab a shiny new AI tool and then desperately look for a problem to solve with it. It’s the classic "hammer in search of a nail" scenario.

This tech-first approach almost always leads to costly experiments with little to show for it. In my experience, the most successful AI initiatives start from a completely different place: a deep, practical understanding of the business's core challenges. It’s about grounding your AI strategy in reality.

The Power of a Problem-First Mindset

Adopting a "problem-first" mindset forces you to look inward before you look outward. Forget asking what a large language model can do for a moment. Instead, ask the questions that matter to your operations:

Where are our biggest, most frustrating operational bottlenecks?

What repetitive, low-value tasks are eating up our team's time?

What untapped data are we sitting on that could predict customer churn or equipment failure?

If we could predict one thing with 95% accuracy, what would have the biggest impact on our business?

Starting with these questions ensures that any AI project you launch has a crystal-clear purpose and a defined way to measure success. You move away from abstract potential and toward concrete value.

Key Takeaway: The goal isn't just to use AI. It's to solve a specific, high-value business problem with AI. That simple distinction is what separates a fun science project from a genuine strategic investment.

This subtle but critical shift in thinking is why starting with a problem, not a pre-packaged solution, makes all the difference.

Here’s a quick comparison of the two approaches:

Problem-First vs. Solution-First AI Adoption

| Approach | Focus Area | Typical Outcome | Business Impact |
|---|---|---|---|
| Problem-First | Specific business pain points and opportunities | A custom or tailored AI solution that directly addresses the need. | High ROI, improved efficiency, competitive advantage, and strong user adoption. |
| Solution-First | A specific AI technology or platform | A struggle to find a meaningful application for the tool; "a solution looking for a problem." | Low ROI, wasted resources, poor user adoption, and potential project failure. |

Focusing on your problems first ensures you're building something people will actually use because it makes their jobs easier or the business stronger.

AI Isn't a Fad, It's a Fixture

The urgency to get this right is only growing as AI becomes woven into the fabric of modern business. This isn't just a trend for massive corporations anymore. By 2025, it's estimated that 78% of companies worldwide will have adopted AI technologies.

This adoption is happening across the board. On average, firms are deploying AI in three different business functions, and even 89% of small businesses now use AI tools for daily tasks. This widespread integration, backed by huge investment, makes having a smart, well-defined AI plan a competitive necessity, not a luxury.

Finding Your High-Impact AI Opportunities

Let’s be honest: vague brainstorming sessions are where good ideas go to die. They rarely uncover the kind of game-changing AI applications that actually produce a significant return. If you want to find genuinely effective AI use cases for your industry, you need to ditch the abstract ideation and adopt a structured discovery process. It’s about methodically mapping your business, pinpointing the real friction points, and figuring out where AI can deliver the most punch.

The first step? Get out of the boardroom and into the trenches. Your best sources of information aren't consultants; they're the department heads and frontline managers who wrestle with operational headaches every single day. The goal here is to get them talking about their biggest problems, not to pitch them on AI from the get-go.

Running Effective Discovery Workshops

Set up focused workshops with leaders from your key business units—think sales, marketing, operations, supply chain, and customer service. Your job is to listen and document, not to lead the conversation toward AI. Frame your questions around their daily reality.

Instead of asking, "How can we use AI?" try these instead:

"What are the most repetitive, soul-crushing tasks your team has to do?" This is how you find gold for automation. A finance team might confess they spend dozens of hours each month just matching invoices to purchase orders. Bingo.

"Where do the biggest delays or errors happen?" This question uncovers the bottlenecks that are costing you money. A logistics manager might point to inefficient delivery routes that are burning fuel and making customers angry.

"If you had a crystal ball for one business metric, what would it be?" This is my favorite question because it cuts straight to high-value predictive opportunities. A retailer might say, "I'd kill to know which products will be our top-sellers next quarter." That’s a direct line to an inventory forecasting project.

These workshops are more than just problem-finding missions. They are a form of AI co-creation that builds buy-in from the very beginning. By getting department leaders involved early, you ensure the ideas are grounded in real-world needs, which dramatically increases your chances of success down the road.

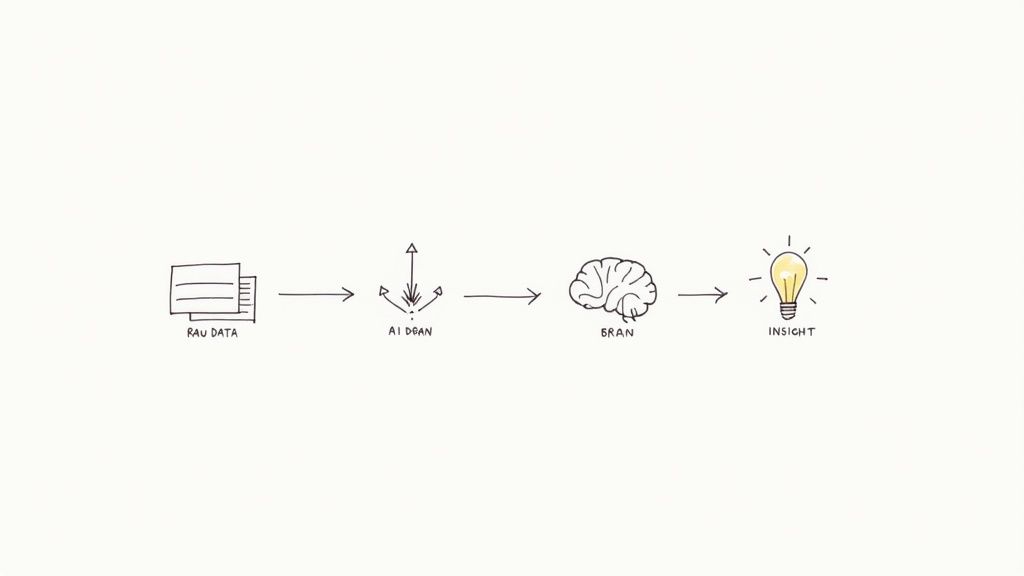

From Business Problem To Technical Blueprint

Okay, so you have a list of challenges. Now what? You have to translate those complaints into something a technical team can actually build. This is where so many AI initiatives fall flat. A business leader can describe a problem perfectly, but it needs to be framed in a way that developers and data scientists can understand and scope.

This is where a good AI requirements analysis tool can be a lifesaver. It helps you structure the problem by clearly defining:

The desired outcome (e.g., reduce shipping errors by 15%).

The data you have to work with (e.g., historical shipping manifests, driver logs).

The key stakeholders who need to be involved.

The current process and what it’s costing you.

This structured approach turns a vague complaint like "our deliveries are too slow" into a concrete project brief: "Develop a route optimization model using historical GPS and traffic data to reduce average delivery time and fuel consumption." See the difference?
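If you want briefs to look the same no matter which department they come from, the four elements above can be captured in a simple template. Here's a minimal Python sketch; the `ProjectBrief` class and its field names are hypothetical, not part of any particular requirements tool. Its only job is to flag which parts of a brief are still blank before it goes to the technical team.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectBrief:
    """Hypothetical template for turning a business complaint into a scoped AI brief."""
    problem: str                                            # the raw complaint, in the business's own words
    desired_outcome: str                                    # measurable target, e.g. "reduce shipping errors by 15%"
    data_sources: list[str] = field(default_factory=list)   # data you already have
    stakeholders: list[str] = field(default_factory=list)   # people who must be involved
    current_cost: str = ""                                  # what the current process costs today

    def missing_fields(self) -> list[str]:
        """Names of fields that are still empty, in definition order."""
        return [name for name, value in vars(self).items() if not value]

    def is_ready_for_scoping(self) -> bool:
        return not self.missing_fields()


brief = ProjectBrief(
    problem="Our deliveries are too slow",
    desired_outcome="Reduce average delivery time and fuel consumption",
    data_sources=["historical GPS data", "traffic data"],
    stakeholders=["logistics manager"],
)
print(brief.missing_fields())  # ['current_cost']
```

A brief that can't fill in every field isn't necessarily a bad idea; it just tells you which conversation to have before anyone starts building.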

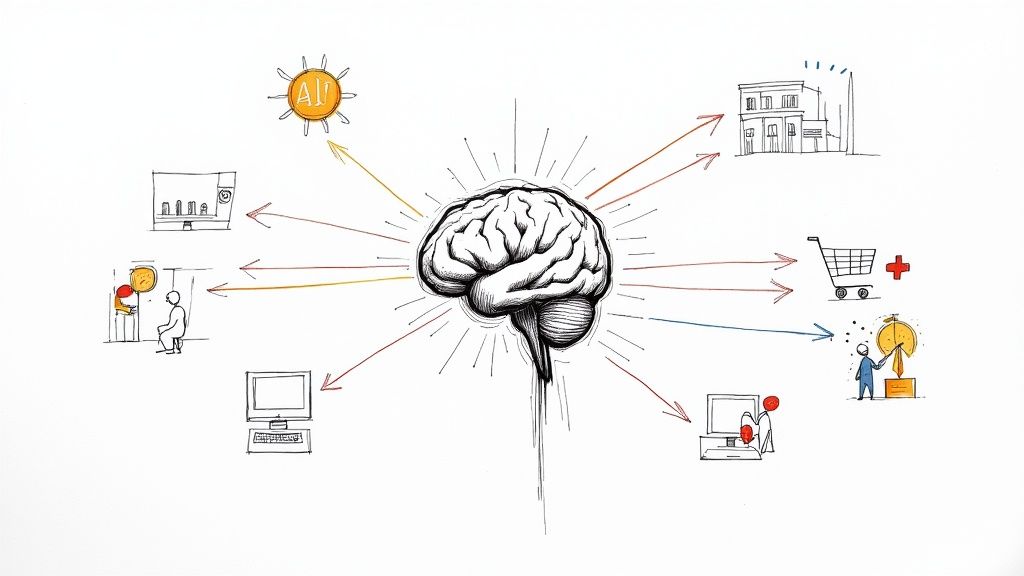

The financial incentive for getting this right is massive. Take the IT and telecom sector, where AI integration is projected to add $4.7 trillion in gross value by 2035. This huge number comes from AI's ability to optimize core functions like network planning and customer support. You can read more about these AI adoption trends and their financial impact to see how crucial data availability is for success.

Spotting the Patterns Across Industries

While every business feels unique, the high-impact opportunities often follow similar patterns. A manufacturer focusing on predictive maintenance for factory machinery is solving the same type of problem as a hospital trying to predict patient readmissions. At their core, both are using historical data to forecast a negative event and step in before it happens.

Likewise, a media company using AI to recommend content is tackling the same personalization challenge as an e-commerce store suggesting products. The underlying goal is identical: increase engagement and sales by understanding what an individual actually wants.

Thinking this way helps you see the common threads and learn from the real-world use cases already proven in other fields. This is a cornerstone of a solid AI strategy consulting approach—learning from others' wins and losses to move faster yourself.

Alright, you've run your discovery workshops and now you're sitting on a pile of promising AI ideas. That's the exciting part. Now for the hard part: which one do you tackle first?

It’s easy to get drawn to the most ambitious, game-changing concept on the list. But in my experience, jumping straight into a massive project is a surefire way to burn through your budget and demoralize your team before you see any results. A much smarter way forward is to get methodical. You need a simple, objective scoring model to cut through the noise and figure out where your resources will make the biggest splash.

This isn't just about picking a project; it's about building a defensible AI strategy. When you systematically weigh the potential payoff against the actual effort required, you transform a messy wish list into a clear, actionable roadmap.

This point is worth stressing: every AI initiative needs to be tied to concrete metrics and KPIs. Otherwise, you're just working on cool tech projects, not delivering measurable business value.

The takeaway here is simple: link every use case to a clear performance indicator. This is how you move from abstract ideas to tangible, trackable outcomes.

Using An Impact vs. Effort Matrix

The Impact vs. Effort matrix is your best friend during this phase. It’s a straightforward visual tool that helps you sort every potential project into one of four buckets:

Quick Wins (High Impact, Low Effort): These are your low-hanging fruit. They deliver noticeable value without a massive investment of time or money. You should always start here to build momentum, prove the value of AI, and get some early wins on the board.

Major Projects (High Impact, High Effort): These are the big, strategic bets that could truly reshape your business. They demand serious resources but promise a huge return. You absolutely want to plan for these, but don't lead with them.

Fill-ins (Low Impact, Low Effort): Think of these as "nice-to-haves." They're worth doing if you have spare developer cycles or a gap in the schedule, but they should never pull focus from your main priorities.

Time Sinks (Low Impact, High Effort): Avoid these like the plague. They’re black holes for resources that offer very little in return.

To make this work, you need to score each use case against a consistent set of criteria. If you're feeling stuck, working with experts in a guided AI strategy workshop can be a great way to nail down your process and get an outside perspective.

How To Score Your AI Projects

Putting together a scoring system doesn't need to be an overwrought academic exercise. You're just trying to bring some objectivity to the conversation.

The table below breaks down the essential criteria I always recommend clients use. For each potential project, run it through these questions and assign a simple score from 1 (lowest) to 5 (highest).

Use Case Prioritization Matrix Criteria

| Evaluation Criteria | Description | Key Questions to Ask | Scoring (1-5) |
|---|---|---|---|
| Business Impact | The potential value this project will create for the business. | Will this increase revenue? Reduce operational costs? Improve customer satisfaction scores? Boost team productivity? | 1 = Negligible, 5 = Massive |
| Implementation Effort | The total resources needed to bring this project to life. | How complex is the technology? Do we need new tools? How much work will our data scientists and engineers need to do? | 1 = High Effort, 5 = Low Effort |
| Strategic Alignment | How well the project fits with the company's overarching goals. | Does this support a key strategic pillar for the year? Does it solve a problem our leadership team talks about constantly? | 1 = Poor Fit, 5 = Perfect Alignment |
| Data Readiness | The availability and quality of the data required for the model. | Do we have the data we need? Is it clean and accessible? Are there any privacy or governance hurdles to overcome? | 1 = No Data/Poor Quality, 5 = Data is Ready |

After scoring each idea, plot it on your matrix. Those projects that land squarely in the "Quick Wins" quadrant are your top candidates for an initial pilot or proof-of-concept.
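The table scores four criteria, but the matrix only has two axes, so you need a rule for collapsing one into the other. The sketch below assumes one reasonable rule: average Business Impact and Strategic Alignment for the impact axis, average Implementation Effort and Data Readiness for the ease axis (remember that 5 means low effort), and treat anything at or above the midpoint of 3 as "high." The averaging rule, the midpoint, and the example scores are illustrative assumptions, not a standard; adjust them to fit your own process.

```python
from dataclasses import dataclass


@dataclass
class UseCaseScores:
    name: str
    business_impact: int        # 1 = negligible, 5 = massive
    implementation_effort: int  # 1 = high effort, 5 = low effort (inverted on purpose)
    strategic_alignment: int    # 1 = poor fit, 5 = perfect alignment
    data_readiness: int         # 1 = no data / poor quality, 5 = data is ready


def quadrant(s: UseCaseScores, midpoint: float = 3.0) -> str:
    """Map 1-5 criterion scores onto the Impact vs. Effort matrix.

    Assumption: impact = mean(Business Impact, Strategic Alignment) and
    ease = mean(Implementation Effort, Data Readiness). High ease means low effort.
    """
    impact = (s.business_impact + s.strategic_alignment) / 2
    ease = (s.implementation_effort + s.data_readiness) / 2
    high_impact = impact >= midpoint
    low_effort = ease >= midpoint
    if high_impact and low_effort:
        return "Quick Win"
    if high_impact:
        return "Major Project"
    if low_effort:
        return "Fill-in"
    return "Time Sink"


# Illustrative scores only; run your own workshop to get real ones.
candidates = [
    UseCaseScores("Invoice matching", 4, 4, 3, 5),
    UseCaseScores("Route optimization", 5, 2, 5, 3),
    UseCaseScores("Meeting-notes summaries", 2, 5, 2, 4),
    UseCaseScores("Custom vision system for a minor line", 2, 1, 2, 1),
]
for c in candidates:
    print(f"{c.name}: {quadrant(c)}")
```

With these made-up scores, invoice matching lands as the Quick Win to pilot first, while route optimization is a Major Project to plan for once the pilot has proven itself.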

It's easy to see why this matters. The global push for AI is happening fast. Forecasts put the compound annual growth rate (CAGR) of AI adoption at about 35.9% from 2025 to 2030, and PwC estimates that AI could contribute $15.7 trillion to the global economy by 2030. The financial stakes are enormous, and prioritizing correctly is how you ensure you get your slice of the pie.
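A CAGR compounds, so the headline percentage understates the total change. Assuming the 35.9% rate held every year from 2025 to 2030 (five compounding periods), the arithmetic works out like this:

```python
def compound_growth(rate: float, years: int) -> float:
    """Total growth multiple implied by a constant annual growth rate."""
    return (1 + rate) ** years


multiple = compound_growth(0.359, 2030 - 2025)
print(f"{multiple:.2f}x")  # 4.64x
```

In other words, a 35.9% annual rate implies roughly a 4.6x increase over the period, well above the 2.8x you'd get by simply adding up five years of 35.9%.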

The Power Of The Proof-of-Concept

Never, ever underestimate the power of starting small. A Proof-of-Concept (PoC) is your secret weapon. It’s a small, controlled experiment designed to test your biggest assumptions about a use case before you go all-in.

Think of it as a low-risk way to answer your most pressing questions. Does the data support our hypothesis? Can the model achieve the accuracy we need? A successful PoC builds incredible confidence across the organization and makes getting buy-in for those larger "Major Projects" a whole lot easier.
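Answering "Can the model achieve the accuracy we need?" takes two comparisons, not one: the model has to hit your target, and it has to beat a naive baseline. The minimal Python sketch below assumes a classification use case such as churn prediction, a held-out test set, and a target accuracy you've agreed on with the business. The function names and the sample data are hypothetical.

```python
from collections import Counter


def accuracy(predictions: list, labels: list) -> float:
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and the same length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def poc_verdict(predictions: list, labels: list, target_accuracy: float) -> dict:
    """Go/no-go check for a proof-of-concept on a held-out test set.

    The model must hit the agreed target AND beat a naive baseline that
    always predicts the most common label.
    """
    model_acc = accuracy(predictions, labels)
    majority_label, _ = Counter(labels).most_common(1)[0]
    baseline_acc = accuracy([majority_label] * len(labels), labels)
    go = model_acc >= target_accuracy and model_acc > baseline_acc
    return {"model": model_acc, "baseline": baseline_acc, "go": go}


# Hypothetical held-out churn labels (1 = churned) and model predictions.
labels = [0, 0, 1, 0, 1, 1, 0, 0, 1, 0]
predictions = [0, 0, 1, 0, 1, 0, 0, 0, 1, 0]
print(poc_verdict(predictions, labels, target_accuracy=0.85))
# {'model': 0.9, 'baseline': 0.6, 'go': True}
```

The baseline check matters because accuracy can flatter a model on imbalanced data: if 95% of customers never churn, a model that always predicts "no churn" scores 95% while catching no one. For that kind of problem you may also want to track precision and recall in the PoC.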

Charting Your Course: Building a Practical AI Implementation Roadmap

An idea is just an idea until you put a plan behind it. Once you've pinpointed your most promising AI use cases, the real work begins. This is where you build a practical, phased roadmap that turns a concept on a slide deck into a real-world tool that delivers value.

Without a clear execution plan, even the most brilliant AI initiatives get stuck. They fall victim to technical dead-ends, scope creep, and shifting business priorities. A well-structured roadmap is your guide, breaking the project into manageable pieces and keeping everyone moving in the same direction.

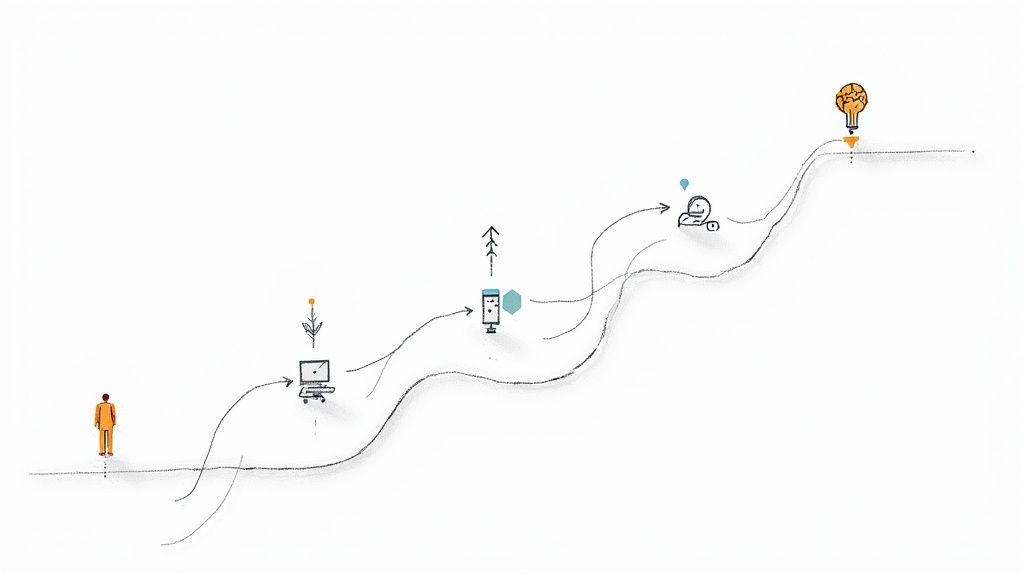

The Phased Rollout: From Data to Deployment

You wouldn't build a house without laying the foundation first, and the same logic applies to AI. A staged approach is crucial. It minimizes risk, provides natural checkpoints, and lets you learn and adapt as you go.

Here's how we typically see successful projects unfold:

Data Preparation: This is the unglamorous but non-negotiable starting point. It's all about gathering, cleaning, and labeling the data your model will learn from. An estimated 85% of Fortune 500 companies now use AI, and every one of those deployments depends on getting this foundational step right.

Model Development: Now, the data scientists and engineers get to work. They start building and training the first version of the AI model, experimenting with different algorithms to see what sticks and best solves your specific business problem.

Integration and Testing: This is where the AI meets your existing world. The model is carefully integrated into current systems and workflows. In this controlled environment, you test everything—does it talk to your other software correctly? Is the user interface intuitive?

Deployment and Monitoring: The AI goes live. But the job isn't over. You have to constantly monitor its performance against the business KPIs you set out to improve. This ongoing vigilance tells you when the model is succeeding and when it needs a tune-up.

This phased approach gives you clear go/no-go decision points at every stage, a core principle of our AI strategy consulting work.
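To make those go/no-go decision points explicit, you can attach a simple check to each phase and refuse to move forward until it passes. The sketch below is a hypothetical illustration: the phase names come from the list above, but the metrics in `state` and every threshold (5% missing values, 85% holdout accuracy) are placeholder assumptions you would replace with your own criteria.

```python
from typing import Callable

# Each phase pairs a name with a go/no-go check. The checks are placeholders;
# in practice each would inspect real artifacts (data quality reports, test results, KPIs).
Phase = tuple[str, Callable[[dict], bool]]


def run_phases(phases: list[Phase], state: dict) -> list[str]:
    """Check phases in order, stopping at the first failed gate.

    Returns the names of the phases that passed.
    """
    passed = []
    for name, gate in phases:
        if not gate(state):
            print(f"NO-GO at '{name}': fix and re-check before moving on")
            break
        passed.append(name)
    return passed


phases: list[Phase] = [
    ("Data Preparation", lambda s: s["missing_rate"] <= 0.05),
    ("Model Development", lambda s: s["holdout_accuracy"] >= 0.85),
    ("Integration and Testing", lambda s: s["integration_tests_passed"]),
    ("Deployment and Monitoring", lambda s: s["kpi_improving"]),
]

state = {"missing_rate": 0.02, "holdout_accuracy": 0.81,
         "integration_tests_passed": True, "kpi_improving": True}
print(run_phases(phases, state))  # stops at Model Development: ['Data Preparation']
```

The point of writing gates down, even this crudely, is that "are we ready for the next phase?" gets answered against criteria agreed in advance rather than by whoever argues loudest in the meeting.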

Assembling Your Cross-Functional "Dream Team"

AI is a team sport. It’s not just an IT project or a data science experiment happening in a vacuum. A siloed approach is a guaranteed recipe for failure.

To get this right, you need a mix of minds working together. Your team absolutely must include:

Domain Experts: These are the people from the business units who live and breathe the problem you’re solving. Their practical insights are what keep the project grounded in reality.

Data Scientists & Engineers: The technical experts who build, train, and deploy the models. They are the architects turning a business need into a functional solution.

IT & Operations: This team handles the integration into your tech stack, ensuring the final product is secure, stable, and runs smoothly.

True magic happens when these groups collaborate closely. This idea of AI co-creation is what separates a technical proof-of-concept from a genuine business win. The best outcomes always emerge when business and tech leaders are in the trenches together, a process our expert team is built to facilitate.

Why an Agile Approach Is Your Only Option

Forget traditional, waterfall-style project management. It simply doesn't work for AI. The field moves too fast, and you’re guaranteed to uncover new challenges and opportunities as you build.

You need an agile mindset. It gives your team the freedom to adapt on the fly—to integrate user feedback, tackle unexpected data quirks, and adjust the strategy without derailing the entire project.

Key Insight: Don't think of your AI implementation as a rigid, unchangeable plan. Treat it as a series of well-informed experiments. Each sprint and every phase should teach you something, helping you refine the solution and maximize its business impact.

This adaptive method is essential for navigating the inherent uncertainty of AI development. If you want to see what this looks like in practice, exploring real-world use cases can offer some great inspiration on how others have pivoted to achieve success.

As we've seen time and again, this flexibility is what separates successful AI projects from the ones that stall out. Building a solid AI strategy framework from the beginning provides the guardrails your team needs to innovate with confidence and purpose.

Measuring And Scaling Your AI Success

So, you’ve launched your AI solution. Congratulations! But don't pop the champagne just yet. This isn't the finish line; it’s really the starting block. The true test of any AI use case comes after deployment, when you start measuring its real-world impact and figure out how to scale it. This is where a successful pilot transforms into a genuine organizational asset.

Initially, it's easy to get caught up in technical metrics like model accuracy. But for the business, those numbers mean very little on their own. The real measure of success is found in the KPIs that actually move the needle on your bottom line.

Defining KPIs That Drive Business Value

You have to shift your focus from pure technical precision to tangible business outcomes. This is the only way to truly validate the investment and get the buy-in you'll need for future AI initiatives.

Here’s what you should be tracking:

Improved Efficiency: How much time is the AI actually saving your team? A great example is the financial services firm Hiscox. They implemented an AI tool that took the time to process a new claim from one hour all the way down to just 10 minutes.

Cost Reduction: Are you seeing a real drop in operational expenses? Acentra Health managed to save nearly $800,000 by using AI to automate their nursing documentation.

Increased Revenue: Can you draw a direct line from the AI solution to sales growth or higher customer lifetime value?

Higher Customer Satisfaction: Are your customer satisfaction (CSAT) or Net Promoter Scores (NPS) climbing in the areas touched by the AI?

Enhanced Employee Experience: Is the tool taking tedious work off your team's plate, freeing them up for more strategic thinking? Don't underestimate this one—research shows 66% of CEOs are already seeing measurable benefits from generative AI, especially when it comes to operational efficiency and happier employees.
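Each of these KPIs comes down to comparing a post-deployment number against a pre-AI baseline, so capture the baseline before launch. Here's a minimal Python sketch using the Hiscox figures above (60 minutes down to 10). The helper names are hypothetical, and `higher_is_better` is there because some KPIs (CSAT, revenue) should go up while others (processing time, cost) should go down.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change versus the pre-AI baseline, as a percentage."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


def improved(baseline: float, current: float, higher_is_better: bool) -> bool:
    """Whether the KPI moved in the direction the business wants."""
    return current > baseline if higher_is_better else current < baseline


# Hiscox example from the text: claim processing went from 60 minutes to 10 minutes.
minutes_before, minutes_after = 60, 10
print(f"{percent_change(minutes_before, minutes_after):.1f}%")             # -83.3%
print(improved(minutes_before, minutes_after, higher_is_better=False))     # True
```

An 83% cut in processing time is the kind of number a leadership team understands immediately, which is exactly why it beats quoting model accuracy.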

Establishing a System for Continuous Improvement

An AI model isn't a "set it and forget it" product. It’s a dynamic system that needs constant attention to stay effective. Market conditions change, customer behaviors evolve, and data patterns shift. This can lead to "model drift," where the AI's performance slowly degrades over time.

Key Insight: Your AI solution needs a feedback loop. Think of it as a living system that has to constantly learn and adapt to its environment. Without a plan for monitoring and retraining, even the best models will eventually become obsolete.

A robust monitoring system isn't a nice-to-have; it's non-negotiable. This system should track both your business KPIs and the model's technical performance in real time. When you spot a dip in performance, that’s your cue. It's a clear signal that the model needs to be retrained with fresh data—a core practice in MLOps (Machine Learning Operations).
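Here's one simple way to turn "spot a dip in performance" into an automatic signal: keep a rolling window of recent prediction outcomes and flag the model when its accuracy falls a set margin below where it was at launch. This is a minimal sketch, not a production MLOps setup. The `PerformanceMonitor` class, the 5-point tolerance, and the window size are assumptions, and it relies on eventually learning the true outcome for each prediction.

```python
from collections import deque
from typing import Optional


class PerformanceMonitor:
    """Flags a model for retraining when its rolling accuracy drops too far.

    Hypothetical sketch: `baseline` is the accuracy measured at launch, and
    `tolerance` is how much degradation you accept before retraining.
    """

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 100):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual) -> None:
        self.outcomes.append(int(prediction == actual))

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        acc = self.rolling_accuracy()
        # Only judge once the window is full, to avoid reacting to a handful of samples.
        if acc is None or len(self.outcomes) < self.outcomes.maxlen:
            return False
        return acc < self.baseline - self.tolerance


monitor = PerformanceMonitor(baseline=0.90, tolerance=0.05, window=10)
for pred, actual in [(1, 1)] * 8 + [(1, 0)] * 2:
    monitor.record(pred, actual)
print(monitor.rolling_accuracy(), monitor.needs_retraining())  # 0.8 True
```

In practice, true outcomes often arrive with a delay (you only learn whether a customer churned weeks later), so it's common to pair a check like this with monitoring of the input data itself, which can reveal drift before the labels catch up.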

From Pilot Project to Enterprise Scale

A successful pilot is a fantastic proof point, but the end game is to scale that success across the entire organization. Scaling is more than just a technical challenge; it's an exercise in change management. You need a smart rollout strategy.

Start by documenting everything from the pilot. What worked? What surprised you? What were the roadblocks you didn't see coming? Sharing these lessons learned is crucial for preparing other departments and building confidence for a wider rollout.

This critical phase is often where having an expert guide can make all the difference. Navigating the tricky waters of system integration and organizational change requires specialized experience. Our dedicated AI implementation support can help you navigate these complexities and ensure your solution delivers on its promise.

Ultimately, scaling is all about proving repeatable value. As you introduce the AI solution to new teams and business units, you’ll fine-tune your process, making each deployment faster and more effective than the last. The guidance of our expert team is a significant asset here, helping you build a sustainable, long-term AI program that keeps delivering results.

Your AI Use Case Questions Answered

Even with a great framework, you're going to hit some bumps and have questions when you start putting AI to work in your industry. That’s not just normal; it's part of the journey. Here are some of the most common questions we get, with straight-to-the-point answers from our experience in the field.

"How Can We Possibly Find AI Use Cases Without a Data Science Team?"

This is a big one, and it's a completely fair question. The great news is, you don’t need a room full of PhDs to get the ball rolling. The real experts are already walking your halls: they're your department heads, your operations managers, and your frontline staff.

These are the people who know exactly where the friction is. They feel the pain of repetitive tasks and costly mistakes every single day.

Your job is to get them talking—not about AI, but about problems. Host a workshop and ask them things like:

"What's the one repetitive task that eats up most of your team's week?"

"If you had a crystal ball to predict one thing in your workflow, what would it be?"

"Where do our most expensive errors happen?"

The answers you get are pure gold. These are your potential use cases. Once you have these well-defined problems, you can bring in a partner for AI strategy consulting to look at the technical side. An AI requirements analysis tool can also be a huge help here, translating those raw business needs into something a technical team can easily scope out.

"What’s the Biggest Mistake to Avoid With a First AI Project?"

Without a doubt, the single biggest mistake is going for the "moonshot" project right out of the gate. It's so tempting to chase a massive, game-changing idea that promises to reinvent your entire business. But these huge projects are notorious for long timelines, spiraling costs, and a high risk of outright failure. A public flop can kill your company's appetite for AI and make it impossible to get funding for future projects.

A much smarter approach is to stick to the prioritization framework we've discussed. Go for a genuine "Quick Win"—a project that delivers a clear, measurable result but is relatively low on the complexity scale.

A successful first pilot does way more than solve a single problem. It builds confidence across the entire organization, teaches you invaluable lessons, and proves tangible ROI. It's the key to unlocking the support you'll need for those bigger, more ambitious goals down the line.

"How Much Data Do We Really Need for an AI Project?"

There's no magic number here. The truth is, it completely depends on the problem you're trying to solve. An AI model built to predict customer churn might require thousands of historical records, while one designed for quality control on a manufacturing line could need tens of thousands of labeled product images.

But here’s the key: quality and relevance are far more important than sheer quantity.

You're always better off with a smaller, cleaner set of well-labeled data that directly addresses your business problem than a massive, messy data swamp full of noise.

This is why a data audit is a non-negotiable step during the validation phase. It helps you figure out:

What data you actually have.

The condition and cleanliness of that data.

If it's even sufficient for the use case you have in mind.

If you find out your data isn't quite ready, that's not a dealbreaker. It just means the first phase of your project should focus on building a solid data collection strategy.
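The three audit questions above map to a few simple counts. The Python sketch below assumes tabular data loaded as a list of dictionaries; the `audit` function, the field names, and the `min_rows` threshold are hypothetical, and "missing" here means a field that is absent, `None`, or an empty string.

```python
def audit(records: list[dict], required_fields: list[str], min_rows: int) -> dict:
    """Quick data audit: what you have, how clean it is, and whether it's enough."""
    def is_missing(value) -> bool:
        return value is None or value == ""

    def key(record: dict) -> tuple:
        return tuple(record.get(f) for f in required_fields)

    total = len(records)
    # Share of rows where each required field is missing.
    missing_rate = {
        f: (sum(is_missing(r.get(f)) for r in records) / total if total else 1.0)
        for f in required_fields
    }
    # Exact duplicates, compared on the required fields only.
    seen, duplicates = set(), 0
    for r in records:
        if key(r) in seen:
            duplicates += 1
        seen.add(key(r))
    # Rows with every required field present, counted once each.
    usable = {key(r) for r in records if not any(is_missing(r.get(f)) for f in required_fields)}
    return {
        "rows": total,
        "missing_rate": missing_rate,
        "duplicates": duplicates,
        "usable_rows": len(usable),
        "sufficient": len(usable) >= min_rows,
    }


# Hypothetical churn records with typical problems: a gap, a duplicate, a blank label.
records = [
    {"customer_id": 1, "tenure_months": 12, "churned": 0},
    {"customer_id": 2, "tenure_months": None, "churned": 1},
    {"customer_id": 1, "tenure_months": 12, "churned": 0},
    {"customer_id": 3, "tenure_months": 5, "churned": ""},
    {"customer_id": 4, "tenure_months": 30, "churned": 1},
]
report = audit(records, ["customer_id", "tenure_months", "churned"], min_rows=1000)
print(report["usable_rows"], report["sufficient"])  # 2 False
```

In this toy example only two of the five records survive, and that is the kind of finding that should shape your first phase before any modeling starts.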

"How Do We Keep Our AI Solution From Becoming Outdated?"

Thinking of your AI model as a "one-and-done" project is a recipe for failure. Markets shift, customer behavior changes, and supply chains get disrupted. The data patterns your model was first trained on will inevitably become stale. This is a well-known phenomenon called "model drift," and it will slowly degrade your model's accuracy and performance over time.

To get long-term value, you have to plan for this from day one by implementing a robust MLOps (Machine Learning Operations) strategy. This isn't an optional add-on; it's a core component of the project.

This means continuously monitoring the business KPIs you actually care about, not just the technical metrics. You need a set process for periodically retraining the model on fresh, relevant data. This is what turns your AI from a static, depreciating tool into a living, adapting asset. As we explored in our AI adoption guide, this lifecycle management is what separates lasting success from a failed experiment. Working with our expert team can help you build these crucial governance structures to future-proof your investment.

Ready to turn these answers into action? Ekipa AI can help you move from questions to execution. Our next-gen AI Strategy consulting tool and expert services are designed to help you uncover, validate, and implement high-impact AI use cases quickly and effectively.