Fraud Detection Using AI in Banking: The Ultimate Guide

Explore fraud detection using AI in banking and see how advanced techniques are enhancing security, detection, and prevention in financial services.

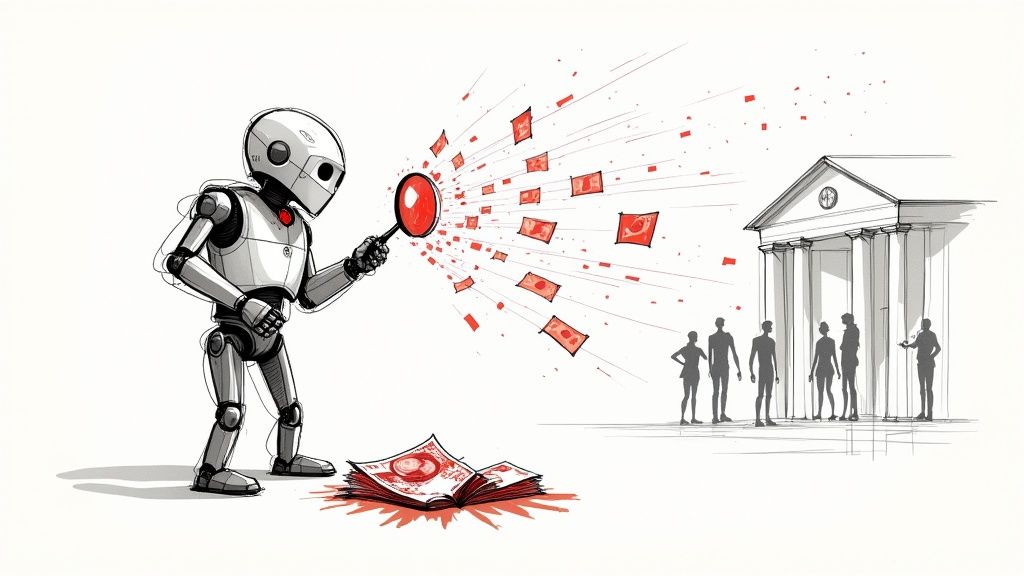

AI isn't just a nice-to-have for fraud detection in banking anymore; it’s the main line of defense. At its core, it's about using smart algorithms to sift through massive streams of transaction data in real time. These systems are designed to spot the faint, almost invisible patterns and oddities that signal a scam—something older, rule-based systems just can't do.

The New Frontline in Financial Security

The fight against financial crime has turned into a high-tech arms race. We're not just dealing with lone opportunists anymore. Fraud is now the work of sophisticated, organized rings using advanced tech to probe for any weakness they can find. This new reality has left legacy fraud detection systems dangerously outmatched. Their static, hard-coded rules (like "flag all transactions over $10,000") are too rigid and predictable to counter today's creative and fast-moving threats.

This is exactly why fraud detection using AI in banking has become so critical.

Think of it this way: a traditional system is like a security guard with a simple, fixed checklist. It can only catch what it’s explicitly told to look for. An AI-powered system, on the other hand, is like a team of seasoned detectives. They’re constantly learning, adapting their methods, and connecting seemingly unrelated clues to uncover the real story.

Shifting from Reaction to Proaction

The most significant change AI brings is the shift from a reactive to a proactive security stance. Instead of just cleaning up the mess after a fraudulent transaction occurs, AI systems are actively hunting for threats before they can do damage. They do this by analyzing millions of data points every second to build a rich, dynamic picture of what "normal" looks like for every single customer.

This deep understanding allows the AI to instantly flag tiny deviations that are often the first signs of fraud, such as:

- Behavioral Anomalies: A customer who has never once bought anything from a gaming website suddenly makes several large purchases on one.

- Geographical Impossibilities: A debit card is physically swiped in a shop in Paris, but just five minutes later, it’s used for an online purchase that's traced to an IP address in Tokyo.

- Device Irregularities: Someone tries to log into an account from a brand-new device with an unusual software configuration that doesn't match the customer’s known digital footprint.

By spotting these red flags instantly, AI doesn't just catch fraud after the fact. It often stops it cold, blocking the suspicious transaction before a single dollar is lost.
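To make the "geographical impossibility" check concrete, here is a minimal Python sketch. It assumes each event carries a latitude, longitude, and timestamp, then asks whether anyone could plausibly have travelled between the two points in the time available. The field names and the 900 km/h speed ceiling are illustrative assumptions, not values from any particular bank's system.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_SPEED_KMH = 900.0  # assumed ceiling, roughly airliner cruising speed


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two coordinates, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def is_impossible_travel(prev_event, next_event, max_speed_kmh=MAX_PLAUSIBLE_SPEED_KMH):
    """Return True if the implied travel speed between two events exceeds the ceiling."""
    distance = haversine_km(
        prev_event["lat"], prev_event["lon"], next_event["lat"], next_event["lon"]
    )
    hours = (next_event["time"] - prev_event["time"]).total_seconds() / 3600
    if hours <= 0:
        return distance > 0  # same instant, different places
    return distance / hours > max_speed_kmh


# The Paris swipe and the Tokyo online purchase from the example above
paris_swipe = {"lat": 48.8566, "lon": 2.3522, "time": datetime(2025, 1, 1, 12, 0)}
tokyo_online = {"lat": 35.6762, "lon": 139.6503, "time": datetime(2025, 1, 1, 12, 5)}
paris_later = {"lat": 48.8566, "lon": 2.3522, "time": datetime(2025, 1, 1, 13, 0)}

print(is_impossible_travel(paris_swipe, tokyo_online))
print(is_impossible_travel(paris_swipe, paris_later))
```

Keep in mind that IP-based locations are approximate and VPNs can distort them, so a check like this usually feeds a broader risk score rather than blocking a transaction by itself.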

To get a clearer picture of this shift, let's compare the old and new approaches side by side.

Traditional vs. AI-Powered Fraud Detection

The table below breaks down the fundamental differences between the rigid, rule-based systems of the past and the dynamic, intelligent systems of today.

| Feature | Traditional Systems | AI-Powered Systems |
|---|---|---|
| Method | Relies on static, pre-defined rules (e.g., transaction limits). | Uses machine learning to analyze behavior and identify anomalies. |
| Adaptability | Slow and manual. New rules must be written to counter new threats. | Learns and adapts continuously from new data in real time. |
| Accuracy | High rate of false positives, annoying legitimate customers. | Significantly lower false positives, improving customer experience. |
| Data Scope | Analyzes a limited set of structured transaction data. | Processes vast, complex datasets, including unstructured data. |
| Speed | Often works in batches, leading to detection delays. | Analyzes transactions instantly, enabling real-time intervention. |
| Threat Detection | Catches known, pre-defined fraud patterns only. | Uncovers novel and sophisticated fraud tactics it has never seen before. |

As you can see, the move to AI isn't just an upgrade—it's a complete change in philosophy, moving from a fixed set of walls to an intelligent, adaptive immune system.

The Evolving Threat Landscape

The need for AI is driven by the sheer speed at which financial crime is evolving. As of 2025, artificial intelligence has become a cornerstone of bank security, with over 90% of financial institutions worldwide using AI-driven solutions to detect fraud. What's truly alarming is that fraudsters themselves are now using generative AI. It's estimated that these tools, which create hyper-realistic deepfakes and synthetic identities, are behind more than 50% of modern fraud cases.

In response, banks have had to hit the accelerator. Roughly two-thirds have integrated these advanced technologies in just the last two years. Making this switch is no longer a choice; it's essential for survival. AI gives these institutions the speed and analytical power needed to fight back effectively.

At Ekipa, our unique AI co-creation model helps financial institutions build these next-generation defenses. We believe in working collaboratively, ensuring the final solution is perfectly matched to a bank’s specific risks and challenges. By partnering with experts, banks can confidently navigate the complexities of AI and stay one step ahead of the criminals.

Understanding the AI Technologies at Play

So, how does AI actually catch fraudsters? It’s not one single piece of technology. Think of it more like an elite security team, where each member is a specialized AI model with a unique skill set. Framing it this way helps move from abstract ideas to a practical picture of fraud detection using AI in banking.

Imagine your bank’s security department is staffed by this AI team. Each one has a specific job, and together they build a powerful defense. This is a core principle we often discuss in our AI strategy consulting work—building a robust system means having the right specialists for the right tasks.

The Veteran: Supervised Learning

First up is supervised learning, the seasoned detective on the team. This model has been trained on mountains of historical data where every transaction is already labeled as either "fraud" or "legitimate." It's like a senior investigator who has pored over thousands of old case files—they know the classic criminal patterns inside and out.

- How it Works: You essentially show it endless examples, saying, "This was fraud," and "This was perfectly fine." After seeing millions of these, it learns to recognize the tell-tale signs of a scam.

- Banking Example: It might learn that a series of small, rapid-fire purchases followed by one big one, all from a new location, is a classic stolen-card pattern. When it spots this sequence in real time, it immediately raises a red flag.

Supervised learning excels at catching known fraud types, because it has studied labeled examples of exactly those patterns. Its weakness? It can be caught off guard by brand-new scams it has never seen before.
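Here is a toy illustration of that "learn from labeled case files" idea. It is a minimal sketch, assuming just two hypothetical features (purchases in the last hour and a new-location flag) and a handful of made-up labeled rows. It fits a small logistic regression with plain gradient descent. A production system would use far more data and features, and most likely an established ML library rather than hand-rolled code.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train(rows, labels, epochs=1000, lr=0.05):
    """Fit logistic regression weights with simple stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            error = sigmoid(bias + sum(w * v for w, v in zip(weights, x))) - y
            weights = [w - lr * error * v for w, v in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def fraud_probability(x, weights, bias):
    """Model's estimated probability that a transaction pattern is fraud."""
    return sigmoid(bias + sum(w * v for w, v in zip(weights, x)))


# Hypothetical labeled history: [purchases_in_last_hour, is_new_location]
rows = [[1, 0], [2, 0], [1, 1], [0, 0], [2, 1], [6, 1], [8, 1], [5, 1], [7, 0]]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1]  # 1 = fraud, 0 = legitimate

weights, bias = train(rows, labels)
print(fraud_probability([9, 1], weights, bias))  # rapid-fire purchases, new location
print(fraud_probability([0, 0], weights, bias))  # quiet account, usual location
```

The model only knows what the labels taught it: a scam that doesn't resemble these rows would not necessarily score high.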

The Rookie: Unsupervised Learning

Next, we have unsupervised learning. This is the sharp-eyed rookie who spots things that everyone else misses. Unlike the veteran, this model isn't given any labeled data. Its sole job is to sift through all the transaction data and find the weird outliers—the activities that just don't fit in.

This makes it exceptionally good at sniffing out completely new fraud tactics.

- How it Works: It groups similar transactions together to build a baseline of what "normal" activity looks like for a customer. Anything that falls way outside those typical clusters gets flagged as suspicious.

- Banking Example: Imagine an account that has only ever received simple domestic transfers. Suddenly, it starts receiving a series of small, convoluted international payments from several different countries. Unsupervised learning flags this strange deviation, alerting analysts to a possible money laundering scheme.

Its ability to spot the unknown is what makes it a vital part of any modern fraud detection setup.
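As a rough illustration of flagging outliers without any labels, the sketch below scores a new value against an account's own history. It uses a robust "modified z-score" (median and median absolute deviation), a simpler stand-in for the clustering approach described above, not a replacement for it. The sample amounts are made up, and the 3.5 cutoff is a commonly cited rule of thumb that you would tune for your own portfolio.

```python
import statistics

ANOMALY_THRESHOLD = 3.5  # commonly cited cutoff for modified z-scores; an assumption to tune


def modified_z_score(value, history):
    """Distance of `value` from the median of `history`, scaled by the median absolute deviation."""
    median = statistics.median(history)
    mad = statistics.median([abs(v - median) for v in history])
    if mad == 0:
        # All historical values are (nearly) identical, so any difference is extreme
        return 0.0 if value == median else float("inf")
    return 0.6745 * (value - median) / mad


def is_anomalous(value, history, threshold=ANOMALY_THRESHOLD):
    """Flag values that sit far outside the account's usual range."""
    return abs(modified_z_score(value, history)) > threshold


# Hypothetical incoming-transfer amounts for one account (no fraud labels involved)
history = [120, 95, 130, 110, 105, 125, 100]

print(is_anomalous(115, history))   # in line with past behavior
print(is_anomalous(4800, history))  # far outside the usual range
```

Nothing here says what kind of fraud the outlier might be. It only says "this doesn't look like this account," which is exactly the signal an analyst needs to start investigating.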

The real magic happens when you combine these two. Supervised learning handles the threats you know, while unsupervised learning acts as a safety net for the ones you don't. This dual approach creates a much more resilient defense against constantly evolving criminal tactics.

Advanced Specialists in the AI Toolkit

Beyond these core players, the team has other specialists for highly specific jobs.

Reinforcement Learning (The Strategist): This model learns by doing. It runs countless simulations, getting "rewarded" for correctly identifying fraud and "penalized" for false alarms. It’s like a chess master that plays millions of games to perfect its strategy, making it incredibly good at adapting when fraudsters are actively trying to fool the system.

Deep Learning (The Network Analyst): Using intricate neural networks, deep learning can comb through massive, interconnected datasets to uncover highly sophisticated fraud rings. It can link seemingly random accounts and transactions across different banks to reveal a coordinated criminal operation that a simpler model would completely miss.

Natural Language Processing (The Communicator): Often called NLP, this specialist understands human language. In a bank, it can scan emails, support chats, or internal notes to detect phishing attempts, social engineering tactics, or even signs of internal fraud based on the specific words and phrases being used.

Together, these AI technologies form a layered defense system. The first critical step is understanding which tools are right for which problems. Our AI requirements analysis tool can actually help you start framing your specific needs and figuring out which of these AI specialists your organization needs most.

The Real-World Impact of AI on Banking Security

When we move past the theory, the real-world impact of AI in banking security is both immediate and easy to measure. We’re seeing financial institutions make substantial gains by taking AI models out of the lab and putting them to work on the front lines. The most common use case, fraud detection using AI in banking, boils down to one thing: analyzing immense datasets with a level of precision that was previously impossible.

These AI systems are trained to meticulously examine customer behavior, transaction histories, and even device data. Their job is to spot the subtle anomalies that scream "fraud." This leads to a huge reduction in false positives—a critical improvement that stops legitimate customers from having their cards incorrectly declined, which is a major source of frustration and a quick way to lose business.

Tangible Improvements in Detection Rates

The benefits here aren't just hypothetical. They’re backed by hard numbers from some of the biggest names in finance. For instance, American Express saw a 6% increase in fraud detection accuracy after rolling out its advanced AI models. In a similar vein, PayPal boosted its real-time fraud detection by 10% by continuously monitoring its global transactions with AI.

These wins come from the AI's ability to process multiple streams of data at once. It looks at a customer’s typical spending habits, their purchase history, device information, and recent transaction patterns to flag anything that looks out of place. It's a holistic view that older, rule-based systems simply could never match.

Expanding Security to New Frontiers

AI's role in security is also pushing beyond just credit and debit cards. It’s proving to be an essential tool for securing newer, and often more volatile, areas of finance.

- Cryptocurrency Security: AI algorithms are now being deployed to trace illicit funds across incredibly complex blockchains. They can spot the tell-tale signs of money laundering, like rapid-fire transfers designed to confuse human analysts.

- Identity Verification: AI-powered chatbots are now a common sight in customer service. These bots use sophisticated language analysis to pick up on subtle phishing cues and identity theft attempts during conversations, adding a quiet but effective layer of security.

As we explored in our AI adoption guide, showing these kinds of concrete results is the key to getting buy-in from the rest of the organization. When leaders see how these systems directly improve both security and efficiency, the case for investment becomes a lot easier to make. You can find more examples across different industries in our library of real-world use cases.

AI's growing role strengthens a bank's defenses against fraud, both online and off, but it also creates new hurdles. The real challenge is balancing these powerful capabilities with transparency, privacy, and regulatory compliance, which remains a constant focus as the technology evolves.

At the end of the day, the goal isn't just to stop fraud. It's about creating a safer, smoother banking experience for everyone. The success stories we're seeing prove that AI is more than a defensive tool; it’s a way to drive better business outcomes. For those looking to see how these technologies can solve their own unique challenges, talking with our expert team is a great first step to turn that potential into a reality.

Your Strategic Roadmap for AI Implementation

Bringing a powerful AI fraud detection system online is a significant project, but it doesn't have to be a headache. The key is to break the entire process down into a clear, manageable roadmap. By following a step-by-step plan, your institution can move methodically from a great idea to a fully functioning system that acts as a powerful shield against financial crime.

Think of it as building a house—every stage has to be done right for the final structure to be strong. For a more guided experience, our AI strategy consulting services can help you navigate each one of these phases.

Here’s a look at the six essential stages for a successful implementation.

Stage 1: Define Clear Objectives

Before anyone even thinks about code, you have to know what you're aiming for. What does "success" actually look like for your team?

Are you trying to slash false positives by a specific number, say 25%, to keep good customers happy? Or is the real mission to catch a new, tricky type of synthetic identity fraud that keeps slipping through your current nets? Vague goals won't cut it. You need to set specific, measurable, achievable, relevant, and time-bound (SMART) objectives. These goals will be your north star for every decision that follows.

Stage 2: Collect and Prepare Your Data

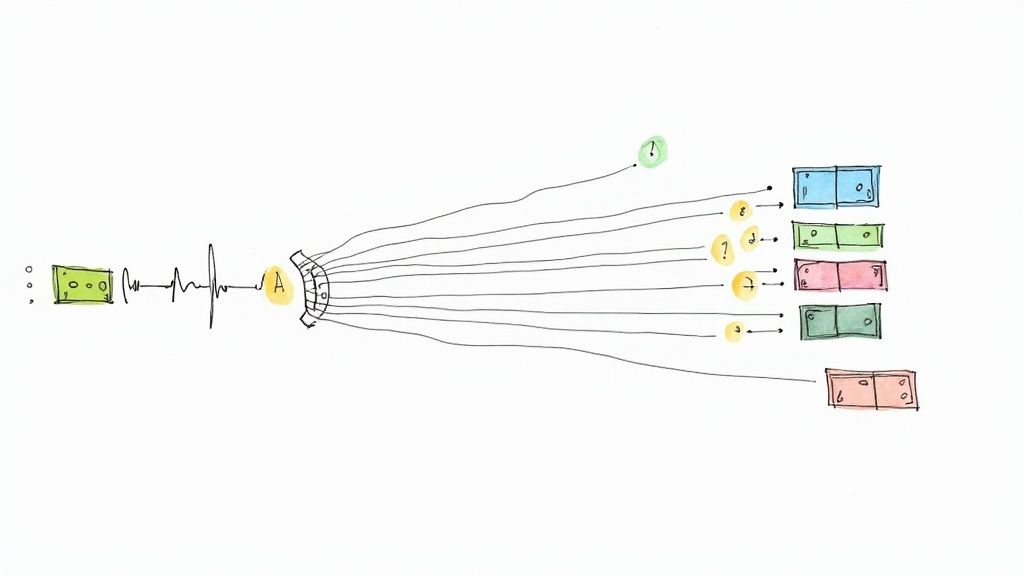

An AI model is a bit like a student—it can only be as smart as the books it studies. This stage, focused on data, is often the most time-consuming part of the whole project, but it’s absolutely critical for getting fraud detection using AI in banking right.

Your team will need to pull together massive amounts of historical transaction data. But just having the data isn't enough; it needs to be clean, organized, and properly labeled. This usually involves a few key steps:

- Data Cleansing: Getting rid of duplicates, fixing typos, and figuring out what to do with missing information.

- Feature Engineering: This is where the real expertise comes in. It's about creating new data points (features) from the raw data that help the model see the subtle clues of fraud.

- Data Labeling: Going back through historical transactions and accurately marking each one as either "fraudulent" or "legitimate." This is how the model learns to tell the difference.

This process is what turns raw, messy data into actionable intelligence.
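To show what feature engineering can look like in practice, here is a small sketch that turns a customer's raw transaction history into three model-ready signals. The field names (`time`, `amount`, `country`) and the features themselves are hypothetical examples chosen for illustration. Real pipelines typically compute many more.

```python
from datetime import datetime, timedelta


def build_features(history, txn):
    """Derive simple behavioral features for `txn` from the customer's earlier transactions."""
    one_hour = timedelta(hours=1)
    recent = [t for t in history if timedelta(0) <= txn["time"] - t["time"] <= one_hour]
    avg_amount = sum(t["amount"] for t in history) / len(history) if history else 0.0
    known_countries = {t["country"] for t in history}
    return {
        # How busy has the account been in the hour before this transaction?
        "txn_count_last_hour": len(recent),
        # How large is this transaction compared with the customer's average?
        "amount_to_avg_ratio": txn["amount"] / avg_amount if avg_amount else 0.0,
        # Has the customer ever transacted from this country before?
        "is_new_country": int(txn["country"] not in known_countries),
    }


history = [
    {"time": datetime(2025, 3, 1, 9, 0), "amount": 40.0, "country": "FR"},
    {"time": datetime(2025, 3, 1, 11, 30), "amount": 60.0, "country": "FR"},
    {"time": datetime(2025, 3, 1, 11, 50), "amount": 50.0, "country": "FR"},
]
new_txn = {"time": datetime(2025, 3, 1, 12, 0), "amount": 500.0, "country": "JP"}

features = build_features(history, new_txn)
print(features)
```

A raw amount of 500 means little by itself. "Ten times this customer's average, from a country they have never used" is the kind of clue a model can actually learn from.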

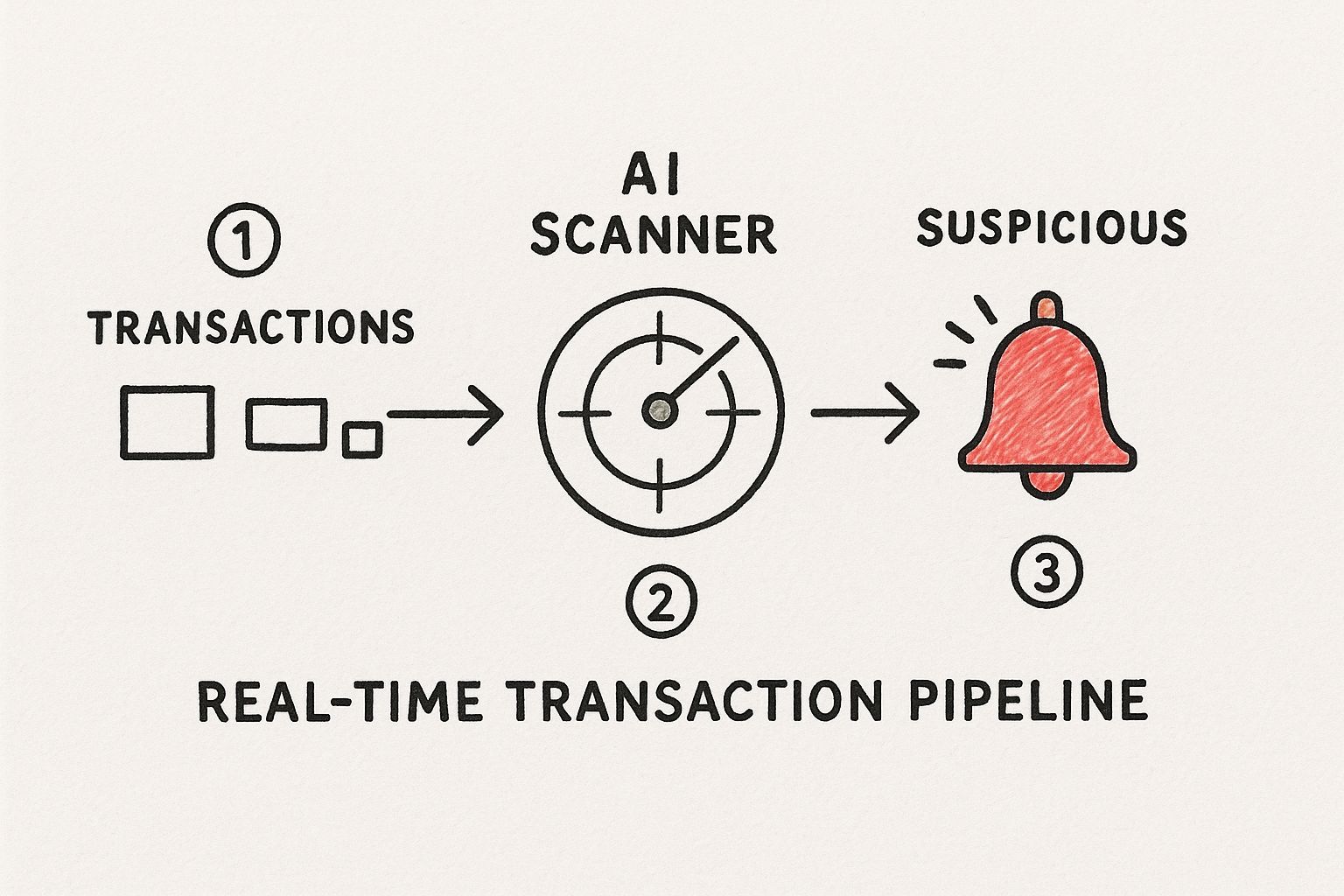

As you can see, the idea is to funnel millions of transactions through this AI pipeline in real time, allowing the system to instantly flag anything that looks out of place for a closer look.

Stage 3: Select and Develop the Model

With your pristine data ready to go, it’s time to pick the right tools for the job. As we've discussed, this isn't about finding one "perfect" algorithm. It's usually about creating a hybrid system that uses supervised learning to spot known fraud patterns while leveraging unsupervised learning to sniff out brand-new, never-before-seen threats.

This part of the process is all about experimentation. Data scientists will test different algorithms, tweak their settings, and train them over and over until they hit the performance targets you set back in Stage 1. For a detailed blueprint, a Custom AI Strategy report can provide a tailored plan for model selection.

Stage 4: Test and Validate Rigorously

A model might look great in the lab, but how will it perform in the wild? Before you let it anywhere near live customer transactions, it needs to be put through its paces. This means more than just checking for accuracy—it's about making sure the model is reliable, fair, and doesn't have any hidden biases.

We do this by testing it on a "holdout" dataset, which is a chunk of data the model has never encountered before. This gives us a true sense of how it will perform in the real world.
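A holdout evaluation can be sketched in a few lines. The example below makes two assumptions of its own: it holds out the most recent records (so the model is tested on data that comes after its training period), and it reports precision and recall rather than plain accuracy, because fraud is rare and a model that flags nothing can still look "accurate." The records and predictions are invented for illustration.

```python
def time_ordered_split(records, holdout_fraction=0.2):
    """Train on older records and hold out the most recent ones."""
    ordered = sorted(records, key=lambda r: r["time"])
    cut = len(ordered) - int(len(ordered) * holdout_fraction)
    return ordered[:cut], ordered[cut:]


def precision_recall(y_true, y_pred):
    """Precision: share of flags that were real fraud. Recall: share of fraud that was flagged."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Ten hypothetical daily records; the last two days become the holdout set
records = [{"time": day, "is_fraud": int(day in (3, 8))} for day in range(10)]
train_set, holdout_set = time_ordered_split(records)
print(len(train_set), len(holdout_set))

# Hypothetical holdout labels and model predictions (1 = fraud)
y_true = [0, 0, 1, 1, 0, 1, 0, 0]
y_pred = [0, 1, 1, 0, 0, 1, 0, 0]
precision, recall = precision_recall(y_true, y_pred)
print(precision, recall)
```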

A crucial practice here is implementing a 'human-in-the-loop' validation process. This means having your expert fraud analysts review the model's decisions, especially the close calls. Their instincts are invaluable for spotting subtle mistakes and confirming the model's logic is sound before it goes live.

Stage 5: Deploy and Integrate in Phases

Whatever you do, don't just flip a switch and turn the new system on all at once. A "big bang" deployment is a recipe for disaster. A phased rollout is much smarter and safer.

A great way to start is by running the new AI system in "shadow mode." It analyzes transactions and flags potential fraud just like it would if it were live, but it doesn't actually take any action. This lets you compare its results directly against your old system in a completely safe environment. Once you're confident it's working as expected, you can start rolling it out for real, perhaps beginning with lower-risk customer segments.
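Shadow mode is easy to picture in code. In this minimal sketch, the legacy rule keeps making the enforced decision while the candidate model's verdict is only logged for comparison. The `candidate_score` function is a hypothetical stand-in for a real model, and the 0.8 threshold is an arbitrary illustrative choice.

```python
def legacy_rule(txn):
    """The static rule currently in production: flag anything over $10,000."""
    return txn["amount"] > 10_000


def candidate_score(txn):
    """Hypothetical stand-in for the new model: spend relative to the customer's average."""
    return min(1.0, txn["amount"] / txn["avg_amount"] / 10)


def run_shadow_mode(transactions, threshold=0.8):
    """Record both decisions; only the legacy decision would actually be enforced."""
    log = []
    for txn in transactions:
        log.append({
            "id": txn["id"],
            "live_flag": legacy_rule(txn),                     # enforced today
            "shadow_flag": candidate_score(txn) >= threshold,  # logged only
        })
    return log


def summarize(log):
    """Count where the two systems agree and disagree."""
    summary = {"both": 0, "legacy_only": 0, "candidate_only": 0, "neither": 0}
    for entry in log:
        if entry["live_flag"] and entry["shadow_flag"]:
            summary["both"] += 1
        elif entry["live_flag"]:
            summary["legacy_only"] += 1
        elif entry["shadow_flag"]:
            summary["candidate_only"] += 1
        else:
            summary["neither"] += 1
    return summary


transactions = [
    {"id": 1, "amount": 12_000, "avg_amount": 11_000},  # big spender, normal for them
    {"id": 2, "amount": 900, "avg_amount": 60},         # small amount, wildly unusual
    {"id": 3, "amount": 45, "avg_amount": 50},
    {"id": 4, "amount": 15_000, "avg_amount": 200},
]
summary = summarize(run_shadow_mode(transactions))
print(summary)
```

The disagreements are the interesting part: cases flagged by only one system are the ones worth sending to your analysts before you let the new model take real action.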

Stage 6: Monitor and Refine Continuously

Getting the system launched feels like the finish line, but it's really just the beginning. Fraudsters never stop evolving their methods, so the patterns your model learned from historical data gradually stop matching what fraud looks like today, a problem known as "concept drift." To stay effective, your AI models need constant attention.

This final stage is all about creating a continuous feedback loop. You'll need to set up dashboards to monitor the model's performance, get alerts if its accuracy starts to dip, and have a plan to periodically retrain it with fresh data. This ensures your defenses are always learning and adapting, staying one step ahead of the bad guys.
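One common way to put a number on drift is the Population Stability Index (PSI), which compares how model scores (or any feature) were distributed at training time with how they are distributed now. The sketch below is a minimal version, assuming the scores have already been counted into matching buckets. The bucket counts are made up, and the 0.1 and 0.25 cutoffs are widely used rules of thumb rather than hard standards.

```python
import math


def population_stability_index(expected_counts, actual_counts, eps=1e-6):
    """PSI between a baseline distribution and a recent one, given matching bucket counts."""
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    psi = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        # Floor the shares at eps so empty buckets don't cause log(0) or division by zero
        e_pct = max(expected / expected_total, eps)
        a_pct = max(actual / actual_total, eps)
        psi += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return psi


def drift_status(psi):
    """Map PSI to a rough status using common rule-of-thumb cutoffs."""
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "watch"
    return "retrain"


# Hypothetical counts of transactions per risk-score bucket (low risk -> high risk)
training_baseline = [700, 200, 80, 20]
quiet_week = [690, 210, 80, 20]
shifted_week = [400, 300, 200, 100]

print(drift_status(population_stability_index(training_baseline, quiet_week)))
print(drift_status(population_stability_index(training_baseline, shifted_week)))
```

A check like this can run on a schedule and feed the dashboards and alerts described above, giving you an early warning before detection quality visibly drops.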

Navigating the Challenges of AI Fraud Detection

While AI offers a powerful defense against financial crime, the road to a successful fraud detection system is paved with some very real challenges. Getting it right means being honest about these hurdles from the get-go, from the technical snags to the thorny ethical questions.

One of the biggest balancing acts is dealing with false positives. This is when your AI model flags a perfectly normal transaction as fraudulent. Of course, you want to catch criminals, but a system that's too aggressive can quickly become a nightmare for your customers. Think declined cards during a holiday dinner or frozen accounts right before rent is due. It’s a fast way to damage trust and send customers looking elsewhere.
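This trade-off usually comes down to where you set the model's alert threshold. The sketch below, built on invented scores and labels, picks the lowest threshold that keeps the false positive rate within a budget you choose. That way you catch as much fraud as possible without exceeding the level of customer friction you're willing to accept. The 20% budget is purely illustrative.

```python
def rates_at_threshold(scores, labels, threshold):
    """Return (recall, false_positive_rate) when flagging every score >= threshold."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y == 1)
    fp = sum(1 for f, y in zip(flagged, labels) if f and y == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    recall = tp / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return recall, fpr


def pick_threshold(scores, labels, max_fpr):
    """Lowest observed score whose false positive rate stays within max_fpr, or None."""
    # Raising the threshold can only lower the false positive rate, so the first
    # threshold that fits the budget is also the one with the highest recall.
    for threshold in sorted(set(scores)):
        recall, fpr = rates_at_threshold(scores, labels, threshold)
        if fpr <= max_fpr:
            return threshold, recall, fpr
    return None


# Hypothetical model scores on validated transactions (1 = confirmed fraud)
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.60, 0.70, 0.80, 0.90, 0.95]
labels = [0, 0, 0, 0, 1, 0, 1, 0, 1, 1]

threshold, recall, fpr = pick_threshold(scores, labels, max_fpr=0.2)
print(threshold, recall, fpr)
```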

Then there's the growing pressure from regulators. They don't just want to know if your model works; they want to know how it works. This brings us to the challenge of Explainable AI (XAI).

The Black Box Problem

Many of the most powerful AI models, especially in deep learning, can feel like a "black box." They ingest data and spit out incredibly accurate answers, but peering inside to see their reasoning process is often next to impossible. This opacity is a massive red flag for auditors and regulators who need to verify that your system is fair, unbiased, and compliant.

For any bank serious about fraud detection using AI in banking, building models that are both accurate and understandable is non-negotiable. You have to be able to explain exactly why a transaction was flagged, not just for compliance but also to handle customer disputes effectively. This is where getting expert help can make all the difference, and our dedicated AI implementation support can bridge this critical gap between raw performance and necessary transparency.
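For a sense of what "explaining a flag" can look like, here is a minimal sketch using a simple linear scoring model, where each feature's contribution is just its weight times its value. The weights, bias, and feature names are hypothetical. Complex models such as deep neural networks need dedicated post-hoc attribution techniques instead, but the goal is the same: a ranked list of reasons an analyst or auditor can read.

```python
def explain_linear_score(features, weights, bias, top_n=2):
    """Return the overall score and the top contributing features for one transaction."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Largest positive contributions first: these are the "reason codes"
    reasons = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
    return score, reasons


# Hypothetical weights for an interpretable linear risk model
weights = {"amount_to_avg_ratio": 0.4, "is_new_country": 1.5, "txn_count_last_hour": 0.3}
bias = -4.0

flagged_txn = {"amount_to_avg_ratio": 10.0, "is_new_country": 1, "txn_count_last_hour": 2}
score, reasons = explain_linear_score(flagged_txn, weights, bias)

print(score)
for name, contribution in reasons:
    print(name, contribution)
```

Output like this gives a dispute handler something concrete to say: the transaction was flagged mainly because it was far larger than usual and came from a country the customer had never used.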

Data Privacy and Adversarial Threats

AI runs on data, and in banking, that data is intensely personal and sensitive. This immediately puts data privacy front and center. Fraud models need huge volumes of customer information to learn effectively, so banks have to walk a fine line, using techniques like federated learning and iron-clad data governance to protect privacy without starving the model.

At the same time, fraudsters aren't just sitting back and waiting to be caught. They're actively developing their own adversarial AI techniques to outsmart the very systems designed to stop them.

An adversarial attack is a deliberate attempt to fool an AI model. For instance, a criminal might subtly alter transaction data in ways that are invisible to a human but are just enough to make a fraudulent transfer look like a legitimate purchase, tricking the AI into approving it.

This dynamic creates a constant cat-and-mouse game. Security teams must continuously retrain and adapt their models to stay ahead of these evolving criminal tactics. A solid governance framework is the only way to manage these risks, ensuring you're transparent with both regulators and customers about how you're using AI.

A Global Perspective on Financial Crime

Financial crime doesn't respect borders. It operates with startling efficiency across jurisdictions, creating a complex global web. Today’s fraud schemes are rarely local; you might have criminals in one country targeting victims in another, all while using digital infrastructure scattered across a third.

This borderless reality means any fraud detection using AI in banking strategy simply has to be global. A regional mindset just won't cut it.

Of course, different parts of the world face their own unique challenges. A country experiencing a digital payment boom might see a spike in account takeover fraud. Meanwhile, another region might be battling sophisticated trade-based money laundering schemes. The key takeaway? An AI model trained exclusively on North American data is likely to miss fraud patterns common in Southeast Asia or Western Europe, because it has never seen examples of them.

The Advantage of Diverse Global Datasets

This is precisely where AI’s ability to work at scale becomes a game-changer. AI systems get smarter and more effective with more varied data. By feeding them global datasets, financial institutions can build a defense that is both incredibly robust and highly adaptive. An AI that has learned to spot fraud in dozens of countries is far better at catching new and unexpected attacks, regardless of where they come from.

A global perspective allows the AI to connect the dots and identify complex, cross-border fraud rings. It can piece together seemingly unrelated events happening thousands of miles apart—a feat impossible for a single institution with a limited view.

This international approach is fundamental to modern security. As we explored in our AI adoption guide, the breadth and scope of your data directly dictate the quality of your results.

A Hotspot Illustrates the Trend

The urgent need for a proactive, globally aware AI defense is illustrated by recent trends. The Asia-Pacific region, for instance, has become the world’s leading hotspot for banking fraud losses, which have ballooned to $221.4 billion. Of that total, $190.2 billion comes from payments fraud alone.

This staggering financial drain tells a clear story: as economies digitize, fraud evolves right alongside them, often outpacing older security measures. In response, banks in the region are making a much-needed strategic shift. They're moving away from simply reacting to fraud and are now focused on proactive prevention powered by integrated AI analytics, behavioral biometrics, and real-time transaction monitoring. This evolution is essential for safeguarding customers in the world's fastest-growing markets. You can see how your own strategy stacks up against global benchmarks with our AI Strategy consulting tool.

Ultimately, if fraud is a global problem, the solution must be too. Building an effective AI defense system demands international cooperation, shared intelligence, and a deep commitment to understanding regional nuances. This is how financial institutions can finally stop playing defense and start anticipating and neutralizing threats on a worldwide scale. The expertise behind these strategies is critical, and you can learn more by meeting our expert team.

Frequently Asked Questions About AI in Fraud Detection

It's natural to have questions when you're exploring how AI can protect your bank. Let's tackle some of the most common ones that come up when discussing fraud detection using AI in banking. We'll keep the answers straightforward, building on what we've already covered.

If you have more questions after this, our complete AI FAQ page is a great resource.

How Does AI Handle New and Unseen Types of Fraud?

This is really where AI shines and leaves older, rule-based systems in the dust. Think of it this way: traditional systems look for known threats, like a security guard with a specific list of suspects. If someone isn't on the list, they get by.

AI, particularly with unsupervised learning, acts more like a seasoned detective. It learns what "normal" activity looks like across millions of transactions. When a new fraud tactic appears, the AI doesn't need a name for it; it simply flags the behavior as an anomaly that doesn't fit the established pattern. This allows banks to spot and stop brand-new criminal strategies in real time, often before they become widespread problems.

What Is the First Step for a Small Bank to Start Using AI?

Don't try to boil the ocean. The smartest move is to start small and targeted. Pick one specific, nagging problem—maybe it's a spike in fraudulent credit card applications or a rash of account takeovers. Pinpointing a narrow use case makes it far easier to define what success looks like and measure your return on investment.

From there, you don't need a huge in-house data science team right away. Look into partnering with a third-party AI provider that specializes in your chosen problem area. This approach lets you get a powerful solution running quickly, prove its value to stakeholders, and then build momentum for broader AI initiatives.

How Can We Ensure AI Fraud Detection Is Fair and Unbiased?

This is a huge and non-negotiable part of the process. Building a fair system isn't an accident; it's a deliberate effort. It comes down to a few key practices:

- Audit Your Data: You have to comb through your historical data for hidden biases. If past practices accidentally disadvantaged certain groups, the AI will learn those same biases. This data needs to be cleaned and corrected.

- Use Explainable AI (XAI): Don't accept "black box" decisions. You need tools that can explain why the AI flagged a certain transaction. This transparency is absolutely critical for satisfying regulators and building trust with your customers.

- Keep Humans in the Loop: Always have human experts in place to review the AI's most sensitive or ambiguous decisions. This provides a crucial layer of common sense and ethical judgment that a machine simply can't replicate.

Consistent bias checks and clear governance aren't just best practices; they're essential for staying compliant and maintaining your reputation.

Does AI Replace Human Fraud Analysts?

Not at all. It makes them better. The goal is to augment your human experts, not replace them. AI is a tireless assistant that can sift through mountains of data in seconds, flagging the handful of cases that genuinely need a human eye.

This partnership frees up your talented analysts to focus on high-value work that only people can do:

- Running deep, complex investigations that require intuition and context.

- Thinking strategically about the bank's overall fraud prevention posture.

- Navigating the tricky gray areas and edge cases where pure data isn't enough.

The real power isn't in just the machine or just the person—it's in the collaboration between the two. Meet our expert team to see how we combine cutting-edge technology with deep industry experience to get the job done right.

Ready to build a smarter, more resilient defense against financial crime? At Ekipa, we turn complex AI strategies into scalable impact. Discover your organization's AI opportunities and get a tailored plan without the traditional cost or complexity. Learn more about our approach and meet our expert team.