A Guide to AI Use Case Development

Master AI use case development with our guide. Learn to identify, validate, and scale AI solutions that deliver real business impact and measurable ROI.

Diving headfirst into AI without a clear plan is a surprisingly common mistake. The real secret to getting AI right isn't about chasing the latest hype; it's about following a structured, strategic process that focuses on tangible results. This means getting everyone on the same page from day one, ensuring your tech initiatives are perfectly aligned with real-world business goals.

Building Your Foundation for AI Success

Starting with AI can feel overwhelming. Many organisations are sold on the promise of artificial intelligence but get stuck when it comes to turning that potential into applications that actually create value. The most important first step is a mental shift: stop chasing technology and start solving specific business problems.

This foundational stage isn't about writing code or training models. It’s all about strategic alignment. You need to take a hard look at your operations, find the inefficiencies, and pinpoint the growth opportunities where AI could genuinely make a difference. This kind of work is best done through a process of AI co-creation, where business leaders, domain experts, and tech teams come together to build a shared vision.

Why a Strategic Approach Matters

Thinking strategically from the very beginning helps you sidestep common traps, like building a project that’s technically cool but has no clear business case. A structured approach ensures every single initiative you consider is:

Problem-Focused: It has to solve a known pain point or help achieve a strategic objective.

Data-Informed: It must be based on the reality of your available data and its quality.

Value-Driven: It needs a clear link to a measurable outcome, like cost savings, revenue growth, or happier customers.

Feasible: It has to be realistic in scope and something your organisation can actually handle right now.

Without this discipline, it’s far too easy for teams to waste time and money building solutions that are looking for a problem.

Overcoming the Adoption Hurdle

Despite the obvious benefits, getting AI off the ground can be a slow process. For example, in 2023, AI adoption in German companies was just 12%, a rate that had barely moved over the previous two years. This kind of stagnation illustrates a common challenge: businesses see the potential but get bogged down in the practical steps of integration and prioritisation. You can dig deeper into these trends with ZEW's findings.

This is exactly where a formalised process for AI use case development becomes so critical. It provides the clarity and confidence needed to move from talking about AI to actually doing something with it.

Key Takeaway: A successful AI journey starts with strategy, not technology. Your primary goal is to build a robust business case before you even think about building an algorithm. This focus ensures your efforts are aimed at initiatives that will deliver real, measurable business impact.

The initial phase is all about asking the right questions. What are our most pressing business challenges? Where are the biggest bottlenecks in our operations? How can we create a better experience for our customers? Exploring these questions is how you start to uncover a pool of potential real-world use cases.

Ultimately, building a strong foundation means setting up a clear methodology for how your organisation will find, evaluate, and pursue AI opportunities. This might begin with an AI strategy framework to guide your thinking, or with an AI strategy consulting tool that streamlines the process. As we explored in our AI adoption guide, having these structures in place turns AI from an abstract idea into a concrete business strategy. The guidance of our expert team can be invaluable here, helping you navigate these early stages and set your organisation on a path to sustainable success.

Finding and Prioritising High-Impact AI Opportunities

Getting started with AI isn't about waiting for a single, brilliant idea to strike. From my experience, the most successful projects begin with a far more deliberate process: discovering the right opportunities. This means shifting away from vague brainstorming and instead running structured workshops to uncover specific operational bottlenecks or strategic growth areas where AI can genuinely make a difference.

The first real goal is to build an "opportunity backlog"—a dynamic, growing list of potential AI projects. Think of it less as a simple list of ideas and more as a pipeline of possibilities you can evaluate systematically. Every single idea, no matter how small it seems, is a potential starting point for creating real business value.

This methodical approach is your best defence against chasing shiny new trends. It helps you build a foundation for innovation that’s directly connected to your organisation’s most urgent needs and long-term ambitions.

Structured Ideation and Opportunity Mapping

Forget those unstructured, "what if" sessions that go nowhere. For this to work, ideation needs to be a focused activity. I always recommend starting with workshops that bring together cross-functional teams. You need department heads in the room, but you also need frontline employees and your IT specialists. Their diverse perspectives are absolutely essential for spotting the real-world challenges that are often missed from the top down.

To get the ball rolling, focus the discussion around pointed questions like these:

What manual, repetitive tasks are eating up the most staff time?

Where are we constantly hitting roadblocks with data or seeing delays in our decision-making?

What are the most common friction points our customers complain about?

Which of our current processes, if we could improve it by just 10%, would have the most significant impact on our bottom line?

This line of questioning helps unearth pain points that are often invisible at a high level. For example, your finance team might be drowning in manual invoice processing, while your marketing team is overwhelmed trying to analyse customer feedback. Both are prime candidates for your opportunity backlog.

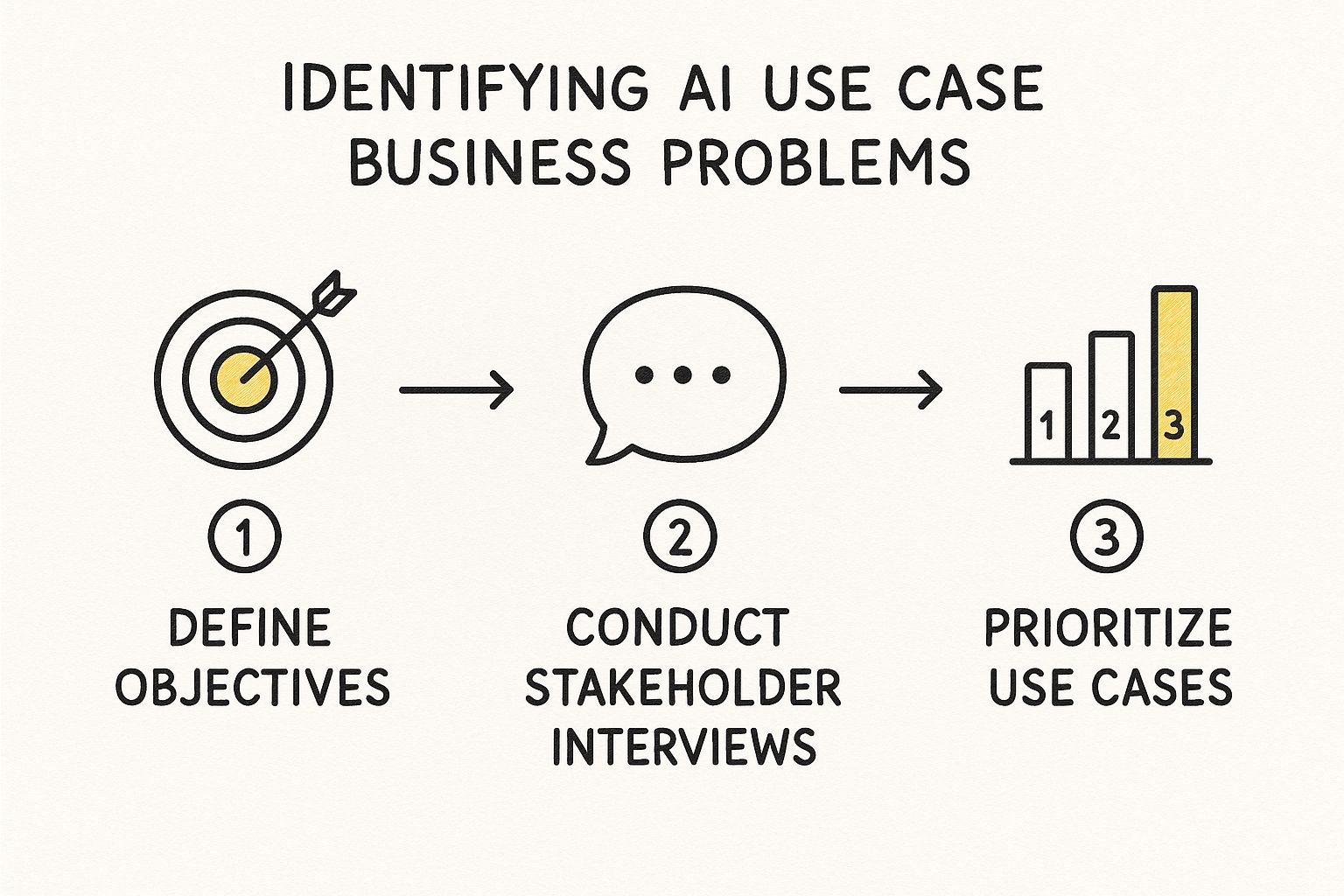

This visual gives a great overview of how to move from initial objectives to selecting the final, most promising use cases.

As the diagram shows, a structured flow is critical. It ensures that every idea you explore is properly validated against clear, measurable business goals.

From Long List to Shortlist

Okay, so you’ve built a healthy backlog of ideas. Now comes the hard part: prioritisation. It’s a simple truth that not all opportunities are created equal. This is where a scoring model becomes your best friend, helping you rank ideas based on a few core criteria. The goal is to distil that long list of possibilities into a manageable shortlist of projects with the highest potential.

This is a challenge even at a national scale. Take Germany, for instance. It has incredible AI research capabilities, but that hasn't led to widespread adoption in its crucial manufacturing sector. This paradox shows that even with a strong technical base, a lack of clear prioritisation and strategy can seriously stall progress.

Expert Tip: Create a simple prioritisation matrix. Score each potential use case (from 1 to 5) across key dimensions: business impact, technical feasibility, data availability, and strategic alignment. The ideas with the highest total scores automatically rise to the top of your list.

To make this practical, here is a simple framework you can adapt.

AI Use Case Prioritisation Matrix

A framework to evaluate and score potential AI use cases based on key business and technical criteria, helping teams make informed decisions.

| Use Case Idea | Business Impact (1-5) | Technical Feasibility (1-5) | Data Availability (1-5) | Strategic Alignment (1-5) | Total Score |
|---|---|---|---|---|---|
| Automate Invoice Processing | 4 | 5 | 5 | 4 | 18 |
| Customer Churn Prediction | 5 | 4 | 4 | 5 | 18 |
| Dynamic Product Pricing | 5 | 3 | 3 | 5 | 16 |
| Content Personalisation Engine | 4 | 4 | 3 | 4 | 15 |

Using a matrix like this removes the guesswork. It forces a data-driven conversation, making it clear which projects offer the best combination of high value and achievability.
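If your backlog lives in a notebook or script rather than a spreadsheet, the matrix can be scored in a few lines. The sketch below is a minimal illustration, assuming equal weights for all four criteria as in the table; the `UseCase` class and `rank` function are hypothetical names, not part of any particular tool.

```python
from dataclasses import dataclass

# The four criteria from the prioritisation matrix, each scored from 1 to 5.
CRITERIA = ("business_impact", "technical_feasibility",
            "data_availability", "strategic_alignment")


@dataclass
class UseCase:
    name: str
    scores: dict  # criterion name -> score from 1 to 5

    def total(self) -> int:
        """Unweighted sum of the four scores, rejecting anything outside 1-5."""
        for criterion in CRITERIA:
            value = self.scores[criterion]
            if not 1 <= value <= 5:
                raise ValueError(f"{self.name}: {criterion} must be 1-5, got {value}")
        return sum(self.scores[c] for c in CRITERIA)


def rank(backlog):
    """Return (name, total) pairs, highest total first; ties keep backlog order."""
    ordered = sorted(backlog, key=lambda uc: uc.total(), reverse=True)
    return [(uc.name, uc.total()) for uc in ordered]


# Hypothetical backlog using the example scores from the matrix.
backlog = [
    UseCase("Automate Invoice Processing", dict(zip(CRITERIA, (4, 5, 5, 4)))),
    UseCase("Customer Churn Prediction", dict(zip(CRITERIA, (5, 4, 4, 5)))),
    UseCase("Dynamic Product Pricing", dict(zip(CRITERIA, (5, 3, 3, 5)))),
    UseCase("Content Personalisation Engine", dict(zip(CRITERIA, (4, 4, 3, 4)))),
]
ranking = rank(backlog)
for name, total in ranking:
    print(f"{total:>2}  {name}")
```

Equal weighting keeps the scores easy to explain to stakeholders. If some criteria matter more to your organisation, multiplying each score by an agreed weight before summing is a straightforward extension.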

A well-defined prioritisation process like this is a central piece of any effective AI strategy framework. It helps ensure that your finite resources (your budget, your time, and your best people) are channelled into the projects most likely to succeed and deliver a meaningful return. This focused approach is truly the key to turning a simple list of ideas into a powerful engine for business growth.

Don't Commit Until You Validate Your AI Use Case

So, you’ve got a fantastic idea for an AI project, one that’s topped your priority list. That's a great start, but an idea on a spreadsheet is a long way from a viable, value-generating solution. This next part, the validation phase, is where the rubber truly meets the road.

This is the point where you mercilessly separate the practical, game-changing concepts from the expensive dead ends. I’ve seen teams get excited and rush this stage, and it almost always leads to wasted time and money. Do it right, and you’ll build the unshakeable confidence needed to go all-in.

Your first move is a rigorous AI requirements analysis. This isn't just about ticking off technical boxes. It's a deep dive to define the exact business problem you're solving and what the AI absolutely must do to be called a success. What does the system need to accomplish? How will you measure its impact? Who is actually going to use it day-to-day?

If you skip this, you risk building a technical marvel that solves nobody's real-world problem. Getting this clarity upfront aligns everyone, from the C-suite to the development team, on the same clear objectives from day one.

From Theory to Reality with a PoC or MVP

With your requirements clearly defined, it's time to test your core assumptions without betting the farm. You’ll do this by building either a Proof of Concept (PoC) or a Minimum Viable Product (MVP). People often use these terms interchangeably, but they serve very different purposes in your validation journey.

Proof of Concept (PoC): A PoC is a focused experiment designed to answer a single, critical question: "Is this technically possible with our data and our tech?" It’s all about testing the core hypothesis. For example, can an algorithm actually achieve the needed accuracy on your specific dataset? It’s an internal project, not something you’d show to an end-user.

Minimum Viable Product (MVP): An MVP takes it a step further. It's the most stripped-down version of your product that you can actually give to a small group of users to get their feedback. The question here is: "Do people find this valuable?" An MVP tests not just the technology but also whether there's a real desire for the solution.

How do you choose? It all comes down to your biggest risk. If you’re not sure the tech can even do what you want, start with a PoC. If you’re confident in the technology but uncertain if anyone will actually want to use it, an MVP is the way to go. Diving into these validation techniques in a structured setting, like our AI project planning workshop, can help your team make the right call.

Defining What Success Actually Looks Like

You can't validate what you can't measure. It’s that simple. Before a single line of code is written for your PoC or MVP, you have to define, in quantifiable terms, what a "win" looks like. These metrics need to be crystal clear and tied directly back to the business problem you’re trying to solve.

My Experience: The most successful AI projects look beyond just technical accuracy. While model performance is a piece of the puzzle, the real measures of success are business outcomes. Did you lower costs? Did you speed up a process? Did you make customers happier? That's what matters.

Let’s say you’re building a PoC to automate invoice processing. Your success metrics might look something like this:

Achieve 95% accuracy in extracting key fields like vendor name, amount, and date.

Cut the manual processing time for each invoice by at least 50%.

Successfully process a test batch of 1,000 invoices without any critical system failures.

Metrics like these create a clear finish line. They kill ambiguity and give you a solid, data-backed reason to move forward, pivot your approach, or wisely pull the plug.
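The invoice example translates naturally into an explicit go/no-go check. This is a minimal sketch, assuming your PoC logs how many extracted fields matched a manually verified value, the handling time per invoice before and during the test, and any critical failures; the class, field names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PocResults:
    fields_correct: int       # extracted fields that matched the manually verified value
    fields_total: int         # fields checked, e.g. vendor name, amount and date per invoice
    baseline_minutes: float   # manual handling time per invoice before the PoC
    poc_minutes: float        # handling time per invoice with the PoC in the loop
    invoices_processed: int
    critical_failures: int


def evaluate_poc(r: PocResults) -> dict:
    """Check the three success criteria that were agreed before the PoC started."""
    accuracy = r.fields_correct / r.fields_total
    time_cut = 1 - r.poc_minutes / r.baseline_minutes
    return {
        "field accuracy >= 95%": accuracy >= 0.95,
        "handling time cut >= 50%": time_cut >= 0.50,
        "1,000 invoices, no critical failures": (
            r.invoices_processed >= 1000 and r.critical_failures == 0
        ),
    }


# Hypothetical PoC numbers, for illustration only.
results = PocResults(fields_correct=2880, fields_total=3000,
                     baseline_minutes=6.0, poc_minutes=2.5,
                     invoices_processed=1000, critical_failures=0)
checks = evaluate_poc(results)
decision = "proceed" if all(checks.values()) else "review: pivot or stop"
for name, passed in checks.items():
    print(f"{'PASS' if passed else 'FAIL'}  {name}")
print("decision:", decision)
```

The code only tells you whether the finish line was crossed; deciding between pivoting and pulling the plug when a check fails remains a judgement call for the team.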

This early validation builds an iron-clad business case, which is absolutely essential for getting the budget and stakeholder buy-in you need for full development. It transforms a good idea into a de-risked opportunity, proving its potential and building momentum throughout the organisation. By validating first, you ensure your AI efforts are grounded in reality, not just wishful thinking.

From Bright Idea to Business Impact: Building Your AI Solution

You've done the hard work of finding and validating a promising AI use case. Now for the exciting part: actually building it. This is where your thoroughly vetted concept starts to become a real, tangible tool that can genuinely move the needle for your business. It’s the moment the AI use case development process shifts from theory into practice.

Bringing an AI solution to life isn't like typical software development. It's less about following a rigid blueprint and more about navigating a cycle of discovery. You'll move through data gathering, model training, and eventually, deployment into your daily operations. Think of it as an iterative loop of building, testing, and refining—not a straight line to a finish line.

Why an Agile Approach is Non-Negotiable for AI

Traditional, waterfall-style project management simply doesn’t work well for AI. Why? Because you’re stepping into the unknown. You're not just assembling pre-defined components; you're experimenting to find the best possible solution. This inherent uncertainty is precisely why an agile development methodology is the gold standard here.

Agile breaks the project down into small, manageable sprints, letting your team make steady, iterative progress. Instead of waiting months for a single big-bang launch, you're focused on delivering small wins and incremental improvements along the way. This has some massive advantages:

You can pivot quickly. If you hit a data snag or uncover a new insight, you can adjust your course without derailing the whole project.

You get feedback early and often. Stakeholders can see and play with working parts of the solution sooner, providing crucial feedback that keeps the project aligned with real business needs.

You start seeing value faster. It’s often possible to deploy parts of the solution early, meaning you can begin realising the benefits long before the final version is complete.

This collaborative, iterative rhythm is a cornerstone of effective AI co-creation, where your technical and business teams work in lockstep to build something truly valuable.

The Core Rhythm of AI Development

Once you’ve committed to building, the real technical work can kick off. While the specifics will always depend on your unique project, the development journey generally follows a well-established pattern.

Sourcing and Preparing Your Data: Be prepared to spend a lot of time here—it’s easily the most critical and often most time-consuming stage. High-quality, relevant data is the fuel for any AI model. This involves gathering data from all your different systems, cleaning it up to fix errors and inconsistencies, and labelling it so the model has clear examples to learn from.

Choosing and Training the Model: With clean data in hand, your tech team will select the right kind of algorithm for the task at hand—maybe a regression model for forecasting sales or a classification model for sorting customer support tickets. They then feed your prepared data into this model, allowing it to chew through the information and learn the patterns needed to do its job.

Evaluating and Fine-Tuning: No AI model gets it perfectly right on the first try. After that initial training run, it’s time for rigorous testing. You’ll measure its performance against the success metrics you defined earlier, specifically testing it on data it has never seen before to check if its knowledge is transferable. Based on how it does, the team will then fine-tune the model to boost its accuracy and reliability.
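The three steps above can be sketched end to end. The example is deliberately toy-sized and uses only the Python standard library: it cleans a synthetic, already-labelled dataset, holds back a quarter of it, "trains" a one-feature decision stump as a stand-in for a real classification model, and then scores it on the held-out rows it never saw. All function names and data are hypothetical; in practice your team would swap in a proper ML library and model.

```python
import random


def clean(records):
    """Drop rows with a missing feature or label, plus exact duplicates."""
    seen = set()
    cleaned = []
    for feature, label in records:
        if feature is None or label is None:
            continue  # incomplete row: cannot be used for training
        if (feature, label) in seen:
            continue  # exact duplicate of a row already kept
        seen.add((feature, label))
        cleaned.append((float(feature), int(label)))
    return cleaned


def split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out the last `test_fraction` for testing."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


def accuracy(rows, threshold):
    """Share of rows where the rule 'feature >= threshold -> label 1' is correct."""
    return sum((x >= threshold) == (y == 1) for x, y in rows) / len(rows)


def fit_threshold(train):
    """'Train' a decision stump: pick the cut-off with the best training accuracy."""
    candidates = sorted({x for x, _ in train})
    return max(candidates, key=lambda t: accuracy(train, t))


# Synthetic, made-up data: label 0 clusters around 3.0, label 1 around 6.0.
rng = random.Random(42)
raw = [(rng.gauss(3.0, 1.0), 0) for _ in range(200)]
raw += [(rng.gauss(6.0, 1.0), 1) for _ in range(200)]
raw += [(None, 1), raw[0]]  # simulate a missing value and a duplicate row

data = clean(raw)
train, test = split(data)
threshold = fit_threshold(train)
train_acc = accuracy(train, threshold)
test_acc = accuracy(test, threshold)  # rows the stump never saw during "training"
print(f"rows kept: {len(data)}, threshold: {threshold:.2f}")
print(f"train accuracy: {train_acc:.2%}, test accuracy: {test_acc:.2%}")
```

Comparing `train_acc` with `test_acc` is the point of the exercise: a model that scores well on its training rows but much worse on held-out ones has memorised rather than learned, and needs more fine-tuning before it is measured against your success metrics.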

Remember, the ultimate goal isn't just to build a technically perfect algorithm. It's to create a tool that your team will actually use and benefit from. A brilliant model that's a nightmare to use or disrupts existing workflows is a failed project, no matter how accurate it is.

This human-centred focus is a common theme you’ll see in successful real-world use cases, where the technology is built to serve the people, not the other way around.

From a Smart Model to a Seamless Solution

A powerful AI model sitting on a developer's computer is worthless to the business. The final, and arguably most important, step is integration.

This is all about weaving the AI into the fabric of your company—embedding it within your existing software and business processes so it can work its magic automatically. It could mean building a new dashboard for your sales team or connecting the model to your CRM through an API to provide real-time insights.
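Connecting a model through an API usually means wrapping it behind a small, well-defined request and response contract that the CRM can call. The sketch below assumes a churn-risk use case with two made-up input fields; `score_customer` is a placeholder rule rather than a trained model, and the `/predict` route and field names are hypothetical. It uses only Python's standard library (`json` and `http.server`).

```python
import json
from http.server import BaseHTTPRequestHandler

REQUIRED_FIELDS = ("days_since_last_order", "support_tickets")  # hypothetical input fields


def score_customer(features: dict) -> float:
    """Placeholder for a trained churn model: returns a risk score between 0 and 1."""
    raw = 0.02 * features["days_since_last_order"] + 0.1 * features["support_tickets"]
    return min(max(raw, 0.0), 1.0)


def handle_predict(body: bytes) -> tuple:
    """Validate a JSON request, score it, and return (HTTP status, JSON response bytes)."""
    try:
        payload = json.loads(body)
        features = {name: float(payload[name]) for name in REQUIRED_FIELDS}
    except (ValueError, KeyError, TypeError) as exc:
        return 400, json.dumps({"error": f"invalid request: {exc!r}"}).encode()
    risk = score_customer(features)
    response = {"customer_id": payload.get("customer_id"), "churn_risk": round(risk, 3)}
    return 200, json.dumps(response).encode()


class PredictHandler(BaseHTTPRequestHandler):
    """Exposes handle_predict as POST /predict so a CRM or dashboard can call it."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)
```

To try it locally you could run `HTTPServer(("127.0.0.1", 8000), PredictHandler).serve_forever()` (with `HTTPServer` imported from `http.server`) and POST JSON to `/predict`. Python's documentation advises against using `http.server` in production, so a real integration would sit behind your usual web framework, authentication and logging; keeping validation and scoring inside `handle_predict` means that logic can move over unchanged.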

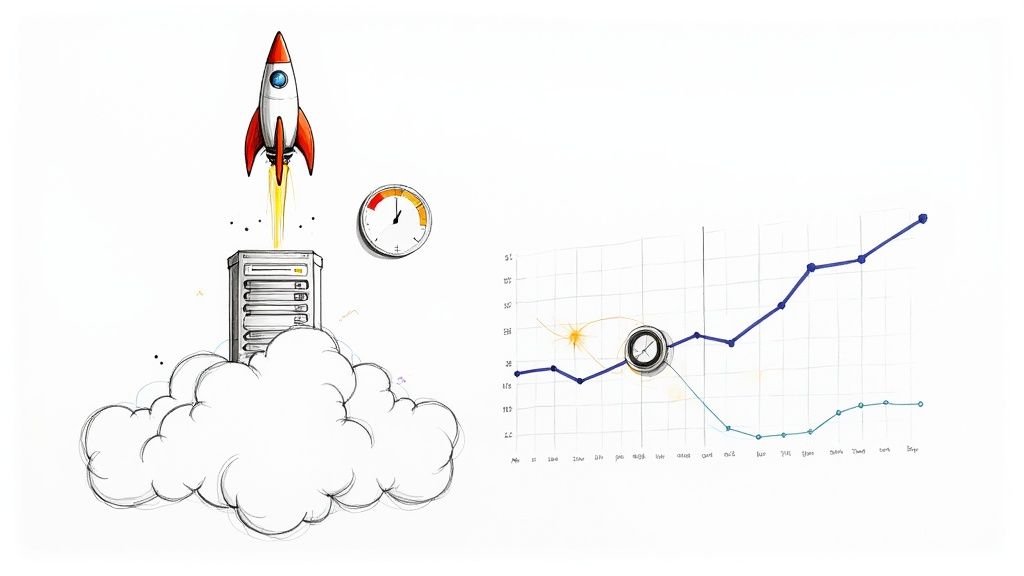

The German artificial intelligence market is set to explode, with a projected compound annual growth rate of 30.2% from 2025 to 2030, and software is leading the charge. This boom is being fuelled by sectors like manufacturing and e-commerce, where pioneers like Otto are using AI to transform their operations and customer experiences. It’s proof that well-integrated AI solutions deliver incredible value. You can read more about the German AI market's trajectory for a deeper look.

In the end, turning an AI concept into a reality is a blend of technical expertise, strategic oversight, and a grounded understanding of what your business truly needs. It’s a challenging journey, but one that transforms a great idea into a powerful engine for your company's growth.

How to Measure and Scale Your AI Initiatives

Getting your first AI solution out the door is a huge win, but it's not the finish line. In my experience, it's really just the starting gun. The true, lasting value of AI isn't found in a single launch; it's built by creating a continuous loop of measuring, learning, and then strategically scaling what actually works.

The process of developing an AI use case doesn’t end when you hit 'deploy'. This is where you start gathering the cold, hard data needed to justify more investment and prove the project's worth to the rest of the company.

Defining KPIs That Truly Matter

To get a real sense of success, you have to look past the purely technical stats. Yes, things like model accuracy, precision, and recall are critical for your data science team, but they mean very little to your leadership. Your Key Performance Indicators (KPIs) have to tie directly back to tangible business outcomes.

You need to be ready to answer the questions coming from the C-suite: "How did this move the needle on our bottom line?" and "Are we genuinely more efficient now?"

The best way I've found to do this is with a balanced scorecard of metrics:

Operational Efficiency: Measure the direct impact on your team's workflow. This could be the hours saved per week by an automated process, a drop in the average handling time for customer support tickets, or an increase in the number of tasks an employee can complete.

Financial Impact: Follow the money. Track direct cost savings from automation, new revenue streams unlocked by AI-powered recommendations, or even a bump in customer lifetime value (LTV) thanks to smarter personalisation.

Customer Experience: Keep a close eye on how the solution affects your customers. Look at your Customer Satisfaction (CSAT) scores, Net Promoter Score (NPS), or a noticeable reduction in customer churn rates.

Model Performance: You still need to monitor the technical health. This means tracking model accuracy over time and watching for concept drift to ensure the AI's predictions don't go stale.

This approach gives you a complete, 360-degree picture of the AI's real-world impact, making it far easier to build a rock-solid business case for whatever you want to do next.
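Much of the balanced scorecard comes down to before-and-after arithmetic. A minimal sketch, assuming you can measure weekly task volume and handling time before and after launch and know a fully loaded hourly staff cost; every figure below is hypothetical, and the `Period` and `scorecard` names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Period:
    tasks_per_week: int
    minutes_per_task: float
    csat: float        # average Customer Satisfaction score, e.g. on a 1-5 scale
    churn_rate: float  # share of customers lost in the period, e.g. 0.05 = 5%


def scorecard(before: Period, after: Period, hourly_cost: float, model_accuracy: float) -> dict:
    """Turn before/after measurements into business-facing KPIs plus one technical KPI."""
    hours_before = before.tasks_per_week * before.minutes_per_task / 60
    hours_after = after.tasks_per_week * after.minutes_per_task / 60
    hours_saved = hours_before - hours_after
    return {
        "hours_saved_per_week": hours_saved,                # operational efficiency
        "cost_saved_per_week": hours_saved * hourly_cost,   # financial impact
        "csat_change": after.csat - before.csat,            # customer experience
        "churn_rate_change": after.churn_rate - before.churn_rate,
        "model_accuracy": model_accuracy,                   # model performance
    }


# Hypothetical before/after figures for an automated workflow.
kpis = scorecard(
    before=Period(tasks_per_week=400, minutes_per_task=6.0, csat=3.9, churn_rate=0.05),
    after=Period(tasks_per_week=400, minutes_per_task=3.0, csat=4.2, churn_rate=0.04),
    hourly_cost=45.0,
    model_accuracy=0.94,
)
for name, value in kpis.items():
    print(f"{name}: {value:.2f}")
```

With these example inputs, 400 tasks a week dropping from 6 to 3 minutes each frees up 20 hours, which at an hourly cost of 45 comes to 900 per week.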

Establishing a Robust Monitoring Framework

An AI model isn't a slow cooker you can just "set and forget". The world it operates in is always changing. Customer behaviour shifts, market dynamics evolve, and new data patterns emerge that your original model was never trained on. This slow degradation in performance is a real phenomenon known as model drift.

That’s why a solid monitoring framework isn't a nice-to-have; it's essential. Think of it as an early warning system that flags a performance dip before it starts costing you money or frustrating your customers.
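One simple form of early warning system tracks accuracy over a rolling window of recent predictions and raises an alert when it falls a set margin below the accuracy measured at launch. This is a minimal sketch, assuming the true outcome of each prediction eventually becomes known; the class name, window size and tolerance are hypothetical and would need tuning for your volumes and risk appetite.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Rolling accuracy over the last `window` labelled predictions, with a drift alert."""

    def __init__(self, baseline: float, tolerance: float = 0.05, window: int = 500):
        self.baseline = baseline              # accuracy measured at launch
        self.tolerance = tolerance            # allowed drop below baseline before alerting
        self.outcomes = deque(maxlen=window)  # True = correct, False = wrong

    def record(self, prediction, actual) -> None:
        """Log one prediction once its true outcome is known."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def alert(self) -> bool:
        # Wait for a full window so a few early errors cannot trigger an alert.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Simulated usage: a healthy period followed by a run of misses.
monitor = AccuracyMonitor(baseline=0.95, tolerance=0.05, window=100)
for _ in range(100):
    monitor.record(prediction=1, actual=1)
healthy_alert = monitor.alert()
for _ in range(20):
    monitor.record(prediction=1, actual=0)
drifted_alert = monitor.alert()
print(monitor.rolling_accuracy(), healthy_alert, drifted_alert)
```

When ground truth arrives slowly, teams often also compare the distribution of incoming inputs with the training data, so drift can be spotted before labelled outcomes are available.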

Key Takeaway: Continuous monitoring is a non-negotiable part of managing any live AI solution. It protects your initial investment by ensuring the model stays effective, reliable, and aligned with business goals long after launch day.

Without this oversight, you risk making major business decisions based on faulty AI predictions, which completely wipes out all the value you worked so hard to build.

Creating a Roadmap for Scaling Success

With strong KPIs in your back pocket and a solid monitoring plan in place, you’re perfectly positioned to scale. A successful pilot project is your best internal marketing tool, proving the value of AI to the entire organisation. Now it’s time to use that momentum to map out a strategy for a wider rollout.

This is the point where your AI strategy framework becomes your North Star. Use what you learned from your first project to find and prioritise the next wave of high-impact opportunities.

Look for similar problems: Are other departments grappling with challenges that could be solved with the same AI solution, perhaps with a few tweaks?

Build on your foundation: Can you use your first model as a building block to tackle a more complex, related problem?

Champion a data-first culture: Share your success stories far and wide. Use data, charts, and testimonials to get other teams excited about finding their own AI wins.

Scaling AI is a serious undertaking that sits at the intersection of technology, strategy, and people. For particularly knotty challenges or to get moving faster, getting guidance from our expert team can provide the strategic clarity and technical horsepower to turn that first success into a company-wide capability.

Common Questions About AI Use Case Development

Even with a solid plan, it's natural to have questions pop up along the way. I've found that navigating the AI use case development process can feel a bit daunting at first, but knowing the common hurdles and questions ahead of time makes everything run much more smoothly. Let's tackle some of the most frequent ones I hear from leaders just like you.

How Do We Identify the Best First AI Use Case?

My advice is always the same: aim for a "high impact, low complexity" project. Your first goal isn't to change the world overnight; it's to secure a clear, undeniable win that gets everyone excited and builds momentum.

Start by looking for a well-understood business problem where you already have access to the necessary data. The potential return on investment should be easy to calculate and explain. I always tell teams to resist the urge to go for a big "moonshot" project right out of the gate. A fantastic starting point is often automating a highly repetitive, time-sucking manual task that everyone in the department agrees is a major bottleneck. This approach delivers immediate, tangible value and makes it much easier to get support for your next AI initiative.
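A back-of-the-envelope ROI and payback calculation is usually enough to make the case for a first project. A minimal sketch, assuming simple, undiscounted annual figures; the function names and numbers are hypothetical.

```python
def roi(annual_benefit: float, upfront_cost: float, annual_running_cost: float, years: int = 1) -> float:
    """Simple (undiscounted) ROI over `years`: net gain divided by total cost."""
    total_benefit = annual_benefit * years
    total_cost = upfront_cost + annual_running_cost * years
    return (total_benefit - total_cost) / total_cost


def payback_months(annual_benefit: float, upfront_cost: float, annual_running_cost: float) -> float:
    """Months until the net benefit has repaid the upfront cost."""
    annual_net = annual_benefit - annual_running_cost
    if annual_net <= 0:
        return float("inf")  # the project never pays for itself
    return upfront_cost * 12 / annual_net


# Hypothetical figures for an invoice-automation pilot.
print(f"ROI after 1 year:  {roi(50_000, 30_000, 10_000):.0%}")
print(f"ROI after 2 years: {roi(50_000, 30_000, 10_000, years=2):.0%}")
print(f"Payback: {payback_months(50_000, 30_000, 10_000):.1f} months")
```

With these made-up inputs the script reports a one-year ROI of 25%, a two-year ROI of 100% and a payback period of 9.0 months. For larger investments, finance teams will typically want a discounted view as well.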

What Are the Most Common Reasons AI Projects Fail?

It might surprise you, but most AI projects don't fail because of the technology. The algorithms and models are just one piece of the puzzle. Success or failure usually comes down to the business fundamentals surrounding the project.

From my experience, the biggest culprits are almost always:

No Clear Business Goal: The project is a solution looking for a problem, rather than tackling a real, urgent business need.

Poor Data Quality: The data is a mess—it's not clean, relevant, or there simply isn't enough of it to train an effective model.

Lack of Stakeholder Buy-in: Key leaders and the people who will actually use the tool aren't on board, which means no support when you need it most.

Ignoring Change Management: There's no plan for how to integrate the new AI tool into daily workflows and train the team to use it properly.

A great algorithm is not enough. You need a rock-solid business case, clean data, and a clear plan for getting people to actually use the thing from day one.

Do We Need to Hire Data Scientists to Start?

Not necessarily, especially when you're just starting out. The initial discovery and prioritisation phases of an AI strategy can be led effectively by business leaders who have a strategic mindset and a deep understanding of the company's challenges. You can get a long way with your current team just by exploring and validating ideas.

When you get to the validation and development stages, you have options. You could upskill your existing technical talent, use one of the many low-code AI platforms available, or bring in external specialists for a specific project. As your AI initiatives become more complex, you'll almost certainly need dedicated data science expertise, but not having it in-house yet shouldn't stop you from getting started.

How Long Does a Typical AI Development Cycle Take?

This really depends on the project's complexity. A simple proof-of-concept for a clearly defined problem might only take 4-8 weeks. On the other hand, a full-scale, enterprise-wide system, like a sophisticated predictive maintenance model, could easily take 6-12 months or even longer.

The key is to use an agile approach with clear, regular milestones. This allows your team to deliver value in small, incremental chunks instead of making everyone wait for one big, far-off launch date. It keeps the momentum going and allows for adjustments along the way. For more detailed answers to your questions, you can explore our comprehensive AI FAQ page or connect with our expert team to discuss your specific needs.