Navigating the Ethical Challenges of AI in Healthcare

Explore the critical ethical challenges of AI in healthcare, from data bias to patient privacy. Learn to build a responsible AI strategy for a safer future.

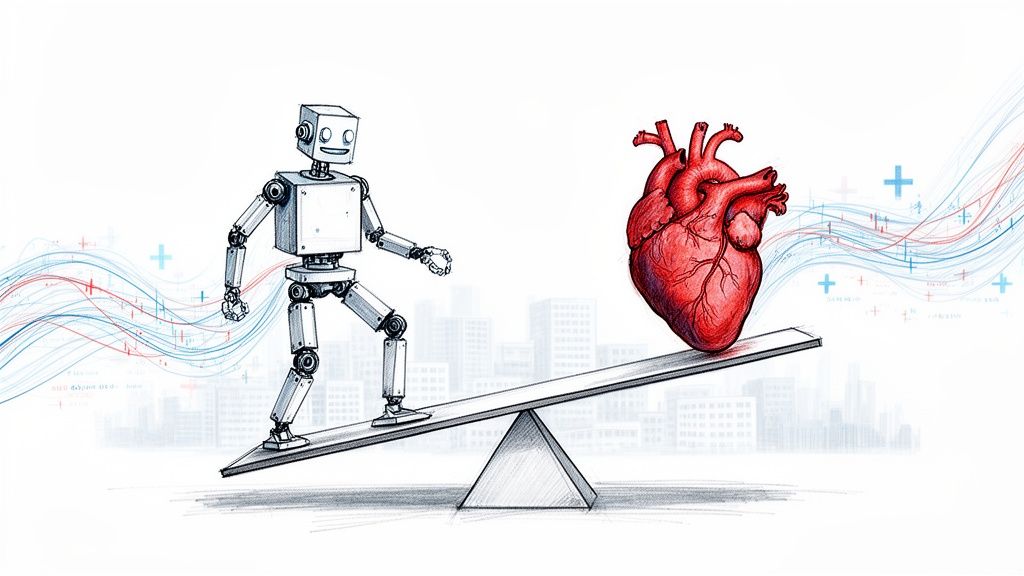

At the heart of the conversation about AI in healthcare lies a delicate balance: how do we protect patient safety, guard privacy, and stamp out algorithmic bias that could make health disparities even worse? It’s a high-stakes tightrope walk between incredible technological progress and fundamental human rights. One slip can shatter patient trust and undermine the very care we’re trying to improve.

The High-Stakes Balancing Act of AI in Healthcare

Think of AI in medicine as a brilliant surgeon with unparalleled skill but no sense of right and wrong. That’s the double-edged sword we’re holding. On one hand, AI is a monumental force for good, promising to reshape diagnostics, tailor treatments to an individual’s DNA, and make hospital operations more efficient than ever before.

But this incredible potential comes with a shadow. The same data that makes these algorithms so powerful can also bake in subtle, dangerous biases that lead to unequal care. The push to innovate can run headfirst into our sacred duty to protect a patient's most sensitive information.

Why Ethical AI Is a Business Imperative

For any leader in the healthcare space, getting this right isn't just about ticking a compliance box. It’s about building the trust that underpins the entire patient relationship and ensuring your technology investments have a future. Ignoring these ethical landmines can lead to serious consequences.

- Reputational Damage: A single story about a biased algorithm or an unsafe AI tool can permanently tarnish a hospital's name.

- Legal and Financial Risks: The rulebook for AI is still being written. This uncertainty creates a minefield of potential liability if a system makes a mistake.

- Barriers to Adoption: Doctors, nurses, and patients will simply refuse to use tools they don’t understand or trust, turning a multimillion-dollar investment into shelfware.

This isn't just a hypothetical problem. Public trust is already on shaky ground. A KFF Health Misinformation Tracking Poll revealed that over 50% of adults do not trust AI-generated health information. That level of skepticism is a massive roadblock for any new technology trying to gain a foothold.

Turning Hurdles into Cornerstones

The point of this guide isn’t to scare you away from AI. Far from it. Our goal is to give you a real-world playbook for navigating this complex new world.

By tackling the ethical challenges of AI in healthcare head-on, you can turn these potential obstacles into the very foundation of a responsible and effective AI strategy. We’ve seen how thoughtful planning builds a powerful synergy between technology and compassionate care, which is a key focus for our work with AI in the healthcare industry.

Let's walk through the steps to build solutions that aren't just powerful, but are also safe, fair, and true to the core mission of medicine: to heal.

Unmasking Algorithmic Bias in Medical AI

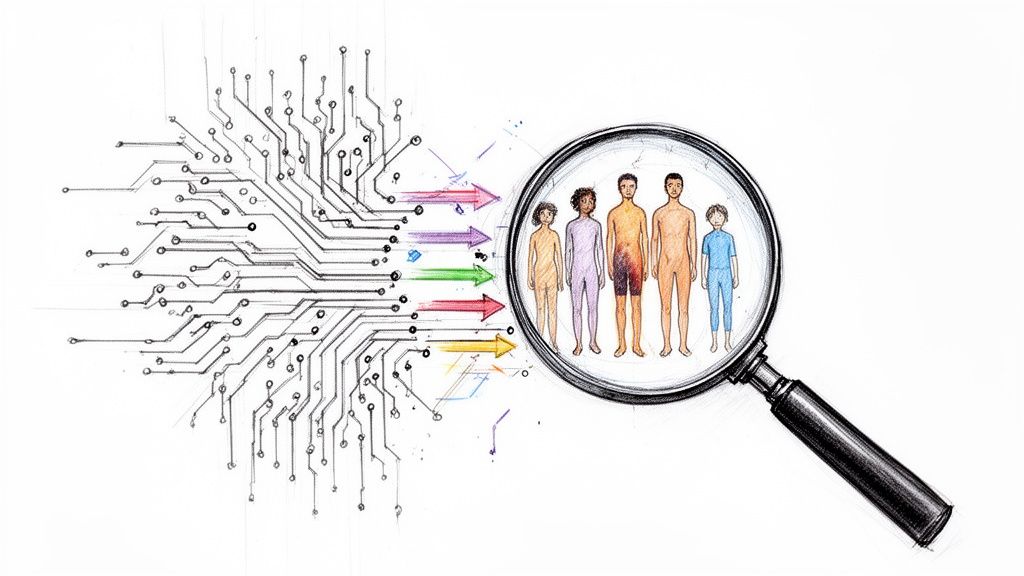

Of all the ethical tightropes we walk with AI in healthcare, algorithmic bias is arguably the most treacherous. This isn't some far-off, theoretical problem; it’s happening right now. When we train AI systems on healthcare data that reflects generations of societal and medical inequalities, the algorithms don't just learn medicine—they learn our prejudices, too.

What comes out the other side is a dangerous feedback loop where biased technology makes existing health disparities even worse.

Think about an AI built to spot skin cancer. If it's trained almost exclusively on images of light skin, it will inevitably struggle to identify cancerous lesions on darker skin tones. This isn't a simple bug. It's a critical failure that can lead to missed diagnoses, delayed treatment, and tragic outcomes. It's a clear example of how a seemingly neutral technology can encode and scale up human blind spots.

The Technical Roots of Healthcare Inequity

To fix the problem, we have to understand where it starts. Bias doesn’t just spontaneously appear in the code. It’s fed into the system through the data we use and the choices we make during development.

This is why, for business leaders, a thorough AI requirements analysis is non-negotiable before the project even gets off the ground. It’s a core principle we emphasize in our AI strategy consulting work.

Several types of bias are notorious for creeping into medical AI solutions:

- Historical Bias: This is a direct reflection of societal prejudices. If a community has historically been underdiagnosed for a certain disease due to lack of access or cultural factors, an AI trained on that historical data will learn to continue overlooking them.

- Measurement Bias: This happens when data is captured inconsistently. For instance, if a blood pressure cuff gives systematically higher readings for people with larger arms, the data it produces is fundamentally flawed and biased.

- Representation Bias: This is one of the most common culprits. It occurs when the training data doesn't mirror the real-world patient population. An AI trained on data from a single affluent, suburban hospital is likely to perform markedly worse when deployed in a diverse, inner-city medical center.
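One practical guard against representation bias is to compare the demographic mix of the training data with the population the model will actually serve, before training begins. The sketch below assumes each training record carries a single group label and that the population shares are known. The function name, group labels, and the 5-percentage-point tolerance are illustrative choices, not a standard.

```python
from collections import Counter


def representation_gaps(train_groups, population_shares, tolerance=0.05):
    """Find groups that are underrepresented in the training data.

    train_groups: one (hypothetical) group label per training record.
    population_shares: each group's expected share of the target patient population.
    Returns {group: (train_share, population_share)} for every group whose
    training share falls short of its population share by more than `tolerance`.
    """
    total = len(train_groups)
    if total == 0:
        raise ValueError("Training data is empty.")
    counts = Counter(train_groups)
    gaps = {}
    for group, pop_share in population_shares.items():
        train_share = counts.get(group, 0) / total
        if pop_share - train_share > tolerance:
            gaps[group] = (round(train_share, 3), pop_share)
    return gaps


# Hypothetical training set drawn mostly from one patient group.
train = ["A"] * 85 + ["B"] * 10 + ["C"] * 5
population = {"A": 0.50, "B": 0.30, "C": 0.20}
print(representation_gaps(train, population))  # {'B': (0.1, 0.3), 'C': (0.05, 0.2)}
```

A check like this only catches gaps in the labels you record; it says nothing about whether the records for each group are accurate, which is where historical and measurement bias come in.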

The challenge of data bias is one of the biggest ethical roadblocks to widespread AI adoption. A particularly jarring example comes from algorithms used to guide decisions for cardiac surgery and kidney transplants. These models required patients of color to be significantly sicker than white patients to receive the same recommendation for care. You can dig deeper into these findings in research about responsible AI for all communities.

How Algorithmic Bias Manifests in Healthcare

It's one thing to talk about bias in the abstract, but it's another to see how it plays out in the real world. The table below connects the technical sources of bias to their tangible, often devastating, consequences for patients.

| Type of Bias | How It Occurs | Real-World Consequence in Healthcare |
|---|---|---|
| Historical Bias | Training data reflects long-standing societal prejudices or unequal access to care. | An AI model for predicting heart failure risk systematically underestimates the risk for women because historical data underdiagnosed them. |
| Representation Bias | The training dataset doesn't reflect the diversity of the patient population it will be used on. | A dermatology AI trained on mostly light-skinned individuals fails to accurately detect melanoma on patients with darker skin. |
| Measurement Bias | Inconsistent data collection methods or faulty equipment across different groups. | A diagnostic tool calibrated primarily on male physiology provides less accurate readings for female patients, leading to misdiagnosis. |
| Selection Bias | The data used for training is not a random sample of the target population. | An algorithm predicting sepsis risk is trained only on ICU data, making it perform poorly when used on patients in the general ward. |

Understanding these pathways is the first step toward building systems that don't just replicate the flaws of the past but actively work to correct them.

Real-World Consequences and Strategic Mitigation

Let's be clear: the fallout from unchecked bias is immense. We're talking about incorrect diagnoses, harmful treatment plans, and a complete erosion of patient trust. For any healthcare organization, this translates directly into massive legal and reputational risk. You simply can't afford to get this wrong.

You can explore several real-world use cases where maintaining data integrity was the cornerstone of a successful and ethical deployment.

Key Takeaway: Algorithmic bias isn't a technical glitch you can patch later. It's a fundamental strategic risk that demands attention from day one. The fairness and effectiveness of your AI system hinge, above all, on the quality and diversity of your training data.

Tackling this requires a deliberate, proactive strategy. It’s about more than just finding a "clean" dataset. It involves a commitment to sourcing diverse data, rigorously testing models across all demographic groups, and even learning from other fields—like the methods for identifying and correcting biased questions in data collection used in survey design.
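Testing across demographic groups can start with something as simple as computing a clinically important metric separately for each group instead of only in aggregate. The minimal sketch below uses sensitivity (the share of true cases the model catches), because missed diagnoses are the harm described above. The group labels, data, and 10-point gap threshold are hypothetical; a real evaluation would check several metrics and report confidence intervals, since small groups produce noisy estimates.

```python
from collections import defaultdict


def sensitivity_by_group(y_true, y_pred, groups):
    """Sensitivity (true positive rate) computed separately for each group.

    y_true / y_pred: 1 = condition present / flagged by the model, 0 = absent / not flagged.
    groups: a demographic label for each patient (hypothetical labels).
    """
    positives = defaultdict(int)  # actual positive cases per group
    caught = defaultdict(int)     # actual positives the model flagged
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {g: caught[g] / positives[g] for g in positives}


def flag_disparities(rates, max_gap=0.10):
    """Groups whose sensitivity trails the best-served group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)


# Hypothetical evaluation set in which the model misses more true cases in group "B".
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = sensitivity_by_group(y_true, y_pred, groups)
print(rates)                    # {'A': 1.0, 'B': 0.5}
print(flag_disparities(rates))  # ['B']
```

An overall sensitivity of 75% would hide the fact that this hypothetical model catches every case in one group and only half in the other, which is exactly the kind of gap aggregate metrics conceal.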

Only by baking fairness into the DNA of our AI systems can we ensure they fulfill their promise of improving care for everyone, not just a select few.

Balancing Patient Privacy with Medical Progress

For AI to learn, diagnose, and predict, it needs a constant diet of high-quality data. In healthcare, though, this isn't just an abstract resource—it's the deeply personal, sensitive information of real people. This creates a fundamental tension: the push for medical breakthroughs versus the non-negotiable right to patient privacy.

The old way of thinking about data protection often feels like it puts the brakes on progress. Regulations like HIPAA and GDPR are absolutely essential, but just ticking compliance boxes misses the point. A more mature approach to AI strategy treats privacy not as a hurdle, but as a core design principle that builds trust and leads to better, more secure technology in the long run.

Why Old Methods Like Anonymization Fall Short

For years, the go-to method for protecting patient data was anonymization: simply stripping out obvious details like names and addresses. But modern data science has poked serious holes in this approach. With enough overlapping data points, such as age, zip code, and hospital visit date, it is alarmingly easy to "re-identify" individuals by matching the records against other available datasets. The illusion of privacy can be shattered.

This means we have to go deeper. Relying on basic anonymization is like locking your front door but leaving all the ground-floor windows wide open. It just isn't secure enough anymore.
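This re-identification risk can be measured directly. A common check, based on the idea of k-anonymity, groups records by their quasi-identifiers (fields such as age, zip code, and visit date that are harmless alone but identifying in combination) and looks for combinations shared by very few people. The field names and records below are hypothetical, and this is a minimal sketch rather than a complete de-identification audit.

```python
from collections import Counter


def _keys(records, quasi_identifiers):
    """The combination of quasi-identifier values for each record."""
    return [tuple(r[q] for q in quasi_identifiers) for r in records]


def smallest_group_size(records, quasi_identifiers):
    """Return k: the size of the smallest group of records that share the same
    quasi-identifier values. k == 1 means at least one record is unique."""
    return min(Counter(_keys(records, quasi_identifiers)).values())


def unique_records(records, quasi_identifiers):
    """Indices of records whose quasi-identifier combination appears only once."""
    keys = _keys(records, quasi_identifiers)
    counts = Counter(keys)
    return [i for i, key in enumerate(keys) if counts[key] == 1]


# Hypothetical "anonymized" records: names removed, but quasi-identifiers remain.
records = [
    {"age": 34, "zip": "02139", "visit": "2024-03-02"},
    {"age": 34, "zip": "02139", "visit": "2024-03-02"},
    {"age": 71, "zip": "02139", "visit": "2024-03-05"},
    {"age": 52, "zip": "02140", "visit": "2024-03-05"},
]
qi = ["age", "zip", "visit"]
print(smallest_group_size(records, qi))  # 1
print(unique_records(records, qi))       # [2, 3]
```

A k of 1 means someone holding another dataset with the same fields could potentially single out that patient. Coarsening fields (age bands instead of exact ages, for example) raises k, but on its own it is still not a complete defense.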

Privacy-Preserving AI Techniques That Actually Work

Fortunately, a new wave of privacy-enhancing technologies (PETs) lets us have our cake and eat it too. We can now train powerful models without ever exposing raw patient data. These methods are a game-changer for any organization building secure internal tooling.

Two of the most powerful techniques, along with the design philosophy that ties them together, are:

Federated Learning: Picture this: you need to train an AI model using data from ten different hospitals, but legal and privacy rules prevent them from sharing data directly. Instead of bringing all the sensitive data to one central place, federated learning sends the AI model out to each hospital. The model learns from each hospital's private data locally, and only the resulting model updates (the learned parameter changes), never the patient data itself, are sent back and combined to improve the main model. It's like sending a researcher to ten different libraries; they come back with new knowledge, but the books never leave their shelves. Because model updates can still leak some information, federated learning is often paired with safeguards such as secure aggregation or differential privacy.

Differential Privacy: This clever technique adds a small, carefully calibrated amount of statistical "noise" to the data or, more commonly, to the results of an analysis. The noise is just large enough that the output looks almost the same whether or not any single person's record was included, so no one can reliably tell if that person was part of the analysis, yet small enough to preserve the important patterns across many patients. The result is a provable, quantifiable privacy guarantee (tuned by a parameter usually called epsilon) that protects individuals while still letting researchers find valuable trends.

Privacy by Design: This isn't a feature; it's a philosophy. "Privacy by Design" means building data protection into the very foundation of your systems from day one. Privacy isn't a patch you add later—it's part of the core architecture of your entire AI Product Development Workflow.
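The federated learning approach described above can be sketched with federated averaging (often called FedAvg), one common scheme: each site trains locally, and a coordinator averages the returned parameters, weighted by how many records each site holds. Everything here is an assumption for illustration: a one-feature linear model, three hypothetical hospitals with made-up data, and hand-picked learning rate and round counts. A production system would add secure aggregation, authentication, and far more capable models.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Train y = w*x + b on one hospital's data with plain gradient descent.
    Runs on-site: only the updated parameters and the record count are returned,
    never the (x, y) records themselves."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Combine site parameters, weighting each site by its number of records."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)


# Hypothetical private datasets at three hospitals (all follow y = 2x + 1).
hospitals = [
    [(-1.0, -1.0), (0.0, 1.0), (1.0, 3.0)],
    [(-2.0, -3.0), (2.0, 5.0)],
    [(-1.5, -2.0), (-0.5, 0.0), (0.5, 2.0), (1.5, 4.0)],
]

global_model = (0.0, 0.0)
for _ in range(50):  # communication rounds
    updates = [local_update(global_model, data) for data in hospitals]
    global_model = federated_average(updates)

print(tuple(round(p, 4) for p in global_model))  # (2.0, 1.0)
```

The coordinator only ever sees `(w, b)` pairs and record counts, which is the "books never leave their shelves" property in miniature.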

This proactive mindset is at the heart of our AI Automation as a Service, where we engineer systems with data security baked right in. It’s all about making smart, early choices that protect patients while still driving innovation.

In the end, this isn't a zero-sum game. By embracing advanced techniques and a "privacy by design" culture, healthcare organizations can build deep trust with patients, sail through regulatory hurdles, and unlock the incredible potential of AI solutions. It turns a potential risk into a real advantage, proving to patients that their data is not only safe but is also helping to shape the future of medicine.
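To make the differential privacy idea from this section concrete, here is a minimal sketch of the Laplace mechanism, a standard way to release a count with epsilon-differential privacy. It adds noise to the query result rather than to the raw records. The cohort, the `diabetic` field, and the epsilon values are hypothetical, and a real deployment would also track the cumulative privacy budget spent across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one patient changes a count by at most 1 (its sensitivity),
    so Laplace noise with scale 1/epsilon is sufficient. Smaller epsilon means
    more noise and a stronger privacy guarantee.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical cohort: 1,000 patients, 130 of whom have a given diagnosis.
patients = [{"diabetic": i < 130} for i in range(1000)]
rng = random.Random(42)  # seeded only so the example is repeatable
for eps in (0.1, 1.0):
    released = private_count(patients, lambda p: p["diabetic"], eps, rng)
    print(f"epsilon={eps}: released count ~ {released:.1f} (true count is 130)")
```

At epsilon = 1 the released count is typically within a few patients of the truth, which is plenty for spotting population-level trends while masking whether any one person is in the cohort.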

Preserving Patient Autonomy in an AI-Driven World

When an AI system flags a potential diagnosis or suggests a specific treatment, who is really making the final call? This question gets right to the heart of patient autonomy, one of the most pressing ethical challenges we face as AI becomes more integrated into healthcare. The trusted, delicate balance in the doctor-patient relationship is changing, forcing us to ask what "informed consent" truly means in an age of intelligent algorithms.

It’s no longer enough for a patient to just agree to a procedure. For that consent to be genuinely informed, both the patient and their doctor need to clearly understand the AI's part in the process. This demands a level of transparency that many of today's "black box" AI systems just can't provide.

Redefining Informed Consent

At its core, informed consent has always been a conversation between a doctor and a patient about the risks, benefits, and available alternatives. But AI introduces a powerful, complex third party into that conversation. For consent to stay meaningful, the process has to evolve to answer some critical new questions.

- What was the AI's specific contribution? Was it the primary diagnostic tool or just a second opinion?

- What data fueled the AI's recommendation? Patients have a right to know what information is shaping their care.

- What are the system's known limitations or error rates? Being upfront about the AI's fallibility is non-negotiable.

- Can the doctor override the AI's suggestion? This is key to confirming that human expertise remains the final authority.

Getting this right is especially crucial for teams involved in custom healthcare software development, where ethical guardrails must be baked into the design from day one, not tacked on later. To keep patient autonomy front and center, it's vital to revisit the foundational principles of informed consent, which give us a solid baseline for these expanded discussions.

The Slippery Slope of Automation Bias

A major threat to the autonomy of both patients and clinicians is automation bias. It’s our natural human tendency to put a little too much faith in the outputs of automated systems, sometimes even when our own gut feeling or expertise is screaming otherwise. Imagine a busy doctor, under pressure to see more patients. It’s easy to see how they might be tempted to accept an AI’s recommendation without the deep, critical thought they would normally apply.

This is how an AI can subtly shift from being an advisory tool to a de facto decision-maker. Left unchecked, this erodes the professional judgment of skilled clinicians and can sideline the patient’s voice entirely.

Empowering Clinicians and Patients Together

Protecting autonomy isn’t about shunning powerful AI. It's about defining its role properly. The goal should be a collaborative dynamic where AI acts as a guide, not a dictator. It can augment a doctor's expertise by crunching massive datasets and spotting patterns the human eye might miss, freeing up the clinician to focus on what humans do best: empathy, communication, and shared decision-making.

This requires a deliberate focus on explainable AI and keeping a "human in the loop." When we ensure doctors can understand—and, when needed, challenge—an AI’s output, we empower them to remain the ultimate advocate for their patient. In turn, a well-informed clinician can have a much richer, more honest conversation with their patient, truly honoring the spirit of informed consent.

Defining Accountability When AI Systems Make Mistakes

When a medical diagnosis goes wrong, the lines of accountability are usually pretty clear. But what happens when an AI system is part of that error? Suddenly, healthcare organizations find themselves in a murky and dangerous gray area, facing one of the most critical ethical challenges in modern medicine.

If an algorithm's suggestion leads to a misdiagnosis, who's on the hook? Is it the doctor who trusted the AI's output? The hospital that bought and installed the system? Or the tech company that built the software in the first place? The question is made harder by the "black box" problem: even the creators of many AI systems can't fully explain their reasoning, which creates a massive legal and reputational risk.

The Limits of Traditional Liability Frameworks

Our traditional medical malpractice laws were designed for a world of human decisions. They simply weren't built to assign blame when the decision is split between a person and a complex, often opaque, algorithm.

Trying to apply old rules to this new reality is like trying to navigate a modern city with a map from the 1950s—you're going to get lost. The existing legal frameworks just can't untangle this complicated web of responsibility, leaving organizations dangerously exposed.

The heart of the matter is that accountability requires transparency. If you can't explain why an AI made a specific recommendation, you can't effectively defend the decision, investigate what went wrong, or stop it from happening again.

This lack of clarity can stifle innovation and destroy trust with both clinicians and patients. Without a clear accountability structure, doctors might hesitate to use powerful new AI tools, afraid they'll be left holding the bag if something goes sideways.

Explainable AI as a Cornerstone of Accountability

The answer isn't to ditch AI, but to demand more from it. This is where Explainable AI (XAI) comes in as an absolutely essential part of any responsible AI strategy. Think of XAI as a set of methods that help human users understand and trust the outputs from machine learning algorithms.

Instead of a black box, XAI creates an audit trail. It can show a clinician the key data points the algorithm prioritized in its recommendation. This turns an opaque suggestion into a transparent piece of evidence that a human expert can then validate or question. For example, our own diagnostic aid tool, Diagnoo, is being built on these principles to ensure its outputs are not just accurate, but also understandable.
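For the simplest class of models, showing "the key data points the algorithm prioritized" can be done exactly. In a logistic regression, each feature's contribution to the log-odds is its weight times how far the patient's value sits from a reference patient. The model, weights, and baseline below are hypothetical and have no clinical meaning. Complex models need approximation methods such as SHAP or LIME, which produce similar per-feature attributions.

```python
import math

# Hypothetical readmission-risk model (logistic regression). The weights are
# made up for illustration and have no clinical validity.
WEIGHTS = {
    "age_over_65": 0.8,
    "systolic_bp": 0.025,
    "prior_admissions": 0.5,
    "on_anticoagulant": -0.3,
}
INTERCEPT = -5.0
# Reference patient that each contribution is measured against (an assumption).
BASELINE = {"age_over_65": 0, "systolic_bp": 120, "prior_admissions": 0, "on_anticoagulant": 0}


def explain(patient):
    """Return the predicted risk and each feature's contribution to the log-odds
    relative to BASELINE, sorted with the largest absolute contribution first."""
    logit = INTERCEPT + sum(w * patient[f] for f, w in WEIGHTS.items())
    risk = 1.0 / (1.0 + math.exp(-logit))
    contributions = {f: w * (patient[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return risk, ranked


risk, ranked = explain(
    {"age_over_65": 1, "systolic_bp": 160, "prior_admissions": 3, "on_anticoagulant": 1}
)
print(f"Predicted risk: {risk:.2f}")
for feature, contribution in ranked:
    print(f"  {feature}: {contribution:+.2f} log-odds")
```

Because the contributions add up to the gap between this patient's log-odds and the baseline patient's, a clinician can see that prior admissions drove this score more than age, and challenge the output if, say, those admissions were unrelated.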

Building a Proactive Accountability Strategy

For any leader in this space, waiting for a mistake to happen is not a viable strategy. You have to establish clear governance and solid protocols to avoid potentially devastating legal and reputational damage. It’s about shifting accountability from a reactive panic into a proactive plan.

A forward-thinking approach needs to cover a few key bases:

- Clear Governance Policies: Spell out the roles and responsibilities for everyone involved—from the data scientists building the models to the doctors using them.

- Robust Audit Trails: Put systems in place that log not only the AI's recommendations but also how clinicians interact with them, especially when they override a suggestion.

- Continuous Monitoring: Actively watch how the AI performs in the real world. This helps catch unexpected behavior or a drop in performance with certain patient groups.

- Defined Override Protocols: Empower and train clinicians on when and how to overrule an AI's suggestion. This reinforces that human expertise is, and must remain, the final authority.
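The audit-trail and override items above can be sketched as an append-only log that records both the AI's recommendation and the clinician's final decision, requires a reason for every override, and chains entries together by hash so later tampering is detectable. The field names, case IDs, and model version strings are hypothetical; a real system would persist entries to write-once storage and tie clinician IDs to authenticated accounts.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _digest(body):
    """SHA-256 of an entry's contents, serialized deterministically."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log of AI recommendations and clinician decisions."""
    entries: list = field(default_factory=list)

    def record(self, case_id, model_version, ai_recommendation,
               clinician_id, final_decision, override_reason=None):
        overridden = final_decision != ai_recommendation
        if overridden and not override_reason:
            raise ValueError("An override must include the clinician's reason.")
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "case_id": case_id,
            "model_version": model_version,
            "ai_recommendation": ai_recommendation,
            "clinician_id": clinician_id,
            "final_decision": final_decision,
            "overridden": overridden,
            "override_reason": override_reason,
            # Link to the previous entry so edits or deletions break the chain.
            "prev_hash": self.entries[-1]["hash"] if self.entries else "",
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev = ""
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def override_rate(self):
        """Share of AI recommendations that clinicians overrode (a monitoring signal)."""
        if not self.entries:
            return 0.0
        return sum(e["overridden"] for e in self.entries) / len(self.entries)


log = AuditLog()
log.record("case-001", "sepsis-model-v2", "escalate", "dr-lee", "escalate")
log.record("case-002", "sepsis-model-v2", "escalate", "dr-lee", "monitor",
           override_reason="Recent labs contradict model inputs")
print(log.override_rate())  # 0.5
print(log.verify())         # True
log.entries[0]["final_decision"] = "monitor"  # simulate after-the-fact tampering
print(log.verify())         # False
```

The override rate doubles as a continuous-monitoring signal: a sudden rise for one patient group or one model version is worth investigating.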

By proactively defining these protocols, you can ensure that ethical considerations and clear lines of accountability are baked into your AI initiatives from day one, building a foundation of safety and trust.

Your Framework for Building Ethical AI in Healthcare

Wading through the ethical minefield of AI in healthcare takes more than good intentions. It demands a structured, proactive plan. Ethical AI doesn't happen by accident; it's the product of intentional design, rigorous testing, and a constant commitment to doing what's right. For business leaders, that means weaving ethical considerations into every single step of the AI lifecycle, from the back-of-the-napkin idea to long-term monitoring.

This isn't just about dodging lawsuits. It’s about building tools that clinicians feel confident using and patients can actually trust. Without that foundation of safety and reliability, you’ll never see the true return on your investment.

Establishing the Ground Rules

First things first: you need a strong governance structure. And no, this isn't just a job for the IT department. You need a cross-functional ethics committee that brings different voices to the table to steer your AI initiatives.

- Form a Cross-Functional Ethics Committee: Pull together clinicians, data scientists, legal experts, patient advocates, and ethicists. Their mission is to define the ethical guardrails for all AI projects.

- Conduct Ethical Impact Assessments: Before anyone writes a single line of code, you have to run a thorough assessment to sniff out potential risks around bias, privacy, and patient safety.

- Define Clear Principles: This committee needs to hammer out a clear, actionable set of ethical guidelines that genuinely reflect your organization's mission and values.

Putting this governance in place ensures ethical oversight isn't an afterthought—it's a core component of your entire AI strategy.

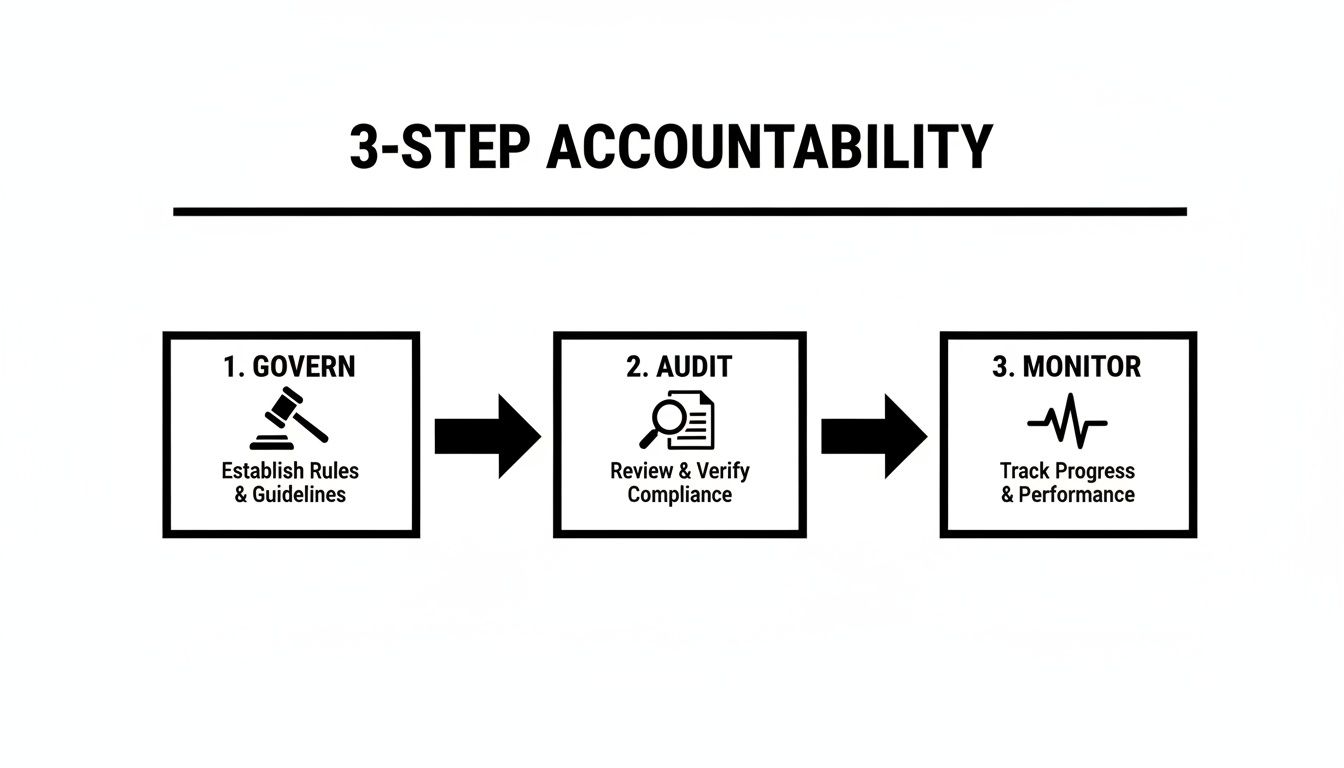

To keep everyone accountable, a straightforward three-step process is key: govern, audit, and monitor.

This model makes it clear that ethical AI isn’t a one-and-done compliance checkbox. It’s a continuous cycle. You set the rules, verify they're being followed, and then watch how things play out in the real world before starting the loop all over again.

Implementing Human-Centric Systems

Let's be clear: technology alone isn't the solution. The best, most ethical AI systems are the ones that enhance human expertise, not try to replace it. That means keeping a human in the loop for critical decisions.

A well-designed human-in-the-loop (HITL) system guarantees that a qualified clinician always gets the final say, especially when it comes to high-stakes diagnoses or treatment plans. This approach not only provides a vital safety net but also preserves the irreplaceable value of clinical judgment. This philosophy is fundamental to a transparent AI product development workflow, which puts safety and clinician empowerment first.
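A human-in-the-loop rule like this can be enforced in code rather than left to convention. In the minimal sketch below, the AI can only propose; no decision is recorded without a named clinician's sign-off, and high-stakes or low-confidence suggestions are flagged for closer review. The class names, the 0.9 threshold, and the diagnoses are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Optional

REVIEW_THRESHOLD = 0.9  # hypothetical: below this score, flag the case for closer review


@dataclass(frozen=True)
class AISuggestion:
    diagnosis: str
    confidence: float  # the model's own score in [0, 1]; not a guarantee of correctness
    high_stakes: bool


@dataclass(frozen=True)
class FinalDecision:
    diagnosis: str
    decided_by: str
    ai_suggestion: str
    overridden: bool


def needs_close_review(s: AISuggestion) -> bool:
    """Flag high-stakes or low-confidence suggestions for extra clinician scrutiny."""
    return s.high_stakes or s.confidence < REVIEW_THRESHOLD


def finalize(s: AISuggestion, clinician_id: Optional[str],
             clinician_diagnosis: Optional[str]) -> FinalDecision:
    """Nothing becomes final until a named clinician signs off; the clinician's
    diagnosis always wins, and any disagreement with the AI is recorded."""
    if not clinician_id or not clinician_diagnosis:
        raise PermissionError("A clinician must review and sign off before a decision is recorded.")
    return FinalDecision(
        diagnosis=clinician_diagnosis,
        decided_by=clinician_id,
        ai_suggestion=s.diagnosis,
        overridden=clinician_diagnosis != s.diagnosis,
    )


suggestion = AISuggestion("pneumonia", confidence=0.72, high_stakes=True)
print(needs_close_review(suggestion))  # True
decision = finalize(suggestion, "dr-patel", "pulmonary embolism")
print(decision.overridden)             # True
```

Making the clinician's sign-off a hard requirement, rather than an optional confirmation step, is one way to push back against the automation bias discussed earlier.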

Ultimately, building ethical AI is a journey, not a destination. The field is always shifting, and your framework has to be flexible enough to keep up. By taking a proactive and principled approach, you can create solutions that aren't just powerful but are also equitable, safe, and worthy of the trust you're asking patients to place in them.

Frequently Asked Questions

Wading through the ethical questions surrounding AI in healthcare can feel overwhelming. Let's tackle some of the most common—and critical—questions that leaders are asking right now.

How Can We Build Unbiased AI If Our Historical Data Is Flawed?

This is the million-dollar question, and it starts with accepting a simple truth: all historical data has its flaws and biases. You can't just feed it into an algorithm and hope for the best. Instead, you need a deliberate, multi-pronged strategy.

First, look beyond the data you already have. Use techniques like data synthesis to create more balanced datasets, especially for underrepresented patient groups. Then, choose algorithms specifically designed to detect and counteract bias as they learn. Most importantly, the work doesn't stop once the AI is live. Constant monitoring and auditing across all demographics are essential to catch and correct biases that emerge over time. A solid AI Strategy consulting tool will help you build this kind of proactive bias mitigation plan from the very beginning.
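Data synthesis is one way to rebalance a dataset. A simpler technique worth knowing, named plainly here as a substitute and not as synthesis itself, is reweighting: giving records from underrepresented groups more weight during training so that each group contributes equally. The sketch below computes inverse-frequency weights, assuming one hypothetical group label per record.

```python
from collections import Counter


def inverse_frequency_weights(groups):
    """Per-record training weights that give every group the same total weight.

    weight = N / (G * n_g), where N is the number of records, G the number of
    distinct groups, and n_g the number of records in that record's group.
    The weights sum to N, so the overall scale of the loss is unchanged.
    """
    counts = Counter(groups)
    n_total, n_groups = len(groups), len(counts)
    return [n_total / (n_groups * counts[g]) for g in groups]


groups = ["A", "A", "A", "B"]  # hypothetical: group B is underrepresented
weights = inverse_frequency_weights(groups)
print([round(w, 3) for w in weights])  # [0.667, 0.667, 0.667, 2.0]
```

Many training APIs accept weights like these directly, for example the `sample_weight` argument of the `fit` method on many scikit-learn estimators. Reweighting cannot create information that is missing from the data, though, and it does nothing about measurement or historical bias, so it complements rather than replaces better data collection and ongoing monitoring.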

What Is The Single Most Important First Step To Address AI Ethics?

Create a dedicated AI governance committee. And I don't mean just your data scientists and engineers. This group needs a 360-degree view, which means bringing clinicians, ethicists, legal experts, and even patient advocates to the same table.

Their first job? To draft a clear set of ethical principles that align with your organization's core mission and values. As we explored in our AI adoption guide, this isn't just a document that sits on a shelf. It becomes the foundation for every AI project, ensuring ethics are part of the conversation from day one, not a checkbox exercise at the end.

Who Is Legally Liable If An AI Tool Makes A Diagnostic Error?

Welcome to one of the thorniest issues in health AI. The short answer is: it's complicated, and the law is still catching up. Liability doesn't just fall on one person's shoulders; it could be shared among several parties.

Think of it as a chain of responsibility. The AI developer, the hospital that deployed the system, and the doctor who acted on its suggestion could all be found partially liable. The final decision often depends on factors like:

- How transparent was the AI's decision-making process?

- What was the required level of human oversight?

- What did the contracts between the developer and the hospital say?

To protect everyone involved, you need clear documentation, detailed audit trails for every AI-assisted decision, and explicit protocols for when and how a clinician can override the AI's recommendation. These safeguards aren't just good practice; they're essential for managing risk.

Ready to build AI solutions that are powerful, ethical, and secure? At Ekipa AI, we help you navigate these complexities with confidence. Discover how our AI strategy consulting can turn your ethical framework into a competitive advantage. You can also explore our Custom AI Strategy report or book a consultation with our expert team.