How to Implement AI in Healthcare

A practical guide to implementing AI in healthcare. Learn to build your strategy, ensure data readiness, navigate compliance, and measure real-world impact.

Before you can successfully implement AI in healthcare, you need a solid game plan. The first step isn't about the technology itself—it's about zeroing in on the specific clinical or operational headaches where AI can make the biggest, most measurable difference. The goal is to move past the hype and pinpoint real-world workflow bottlenecks, ensuring your first projects build momentum and deliver tangible value.

Building Your Healthcare AI Strategy

Getting into AI is a major strategic commitment for any healthcare organization, not just another IT upgrade. The upsides are huge, but so are the complexities. You're juggling patient safety, tight regulations, and the challenge of getting clinicians on board. Without a clear roadmap, even the most promising AI tools can fall flat, leading to wasted money and frustrated teams. The trick is to build a realistic plan that starts with small, measurable wins and grows from there.

The financial motivation to get this right is undeniable. The AI healthcare market has grown from around $1.1 billion in 2016 to $22.4 billion in 2023, roughly a twentyfold increase. With forecasts showing the market could hit nearly $188 billion by 2030, it’s no longer a question of if AI will be adopted in healthcare, but how to do it smartly.

Identify High-Impact Opportunities

The first move is to shift from the abstract idea of "using AI" to finding concrete problems it can solve. Don't start with a cool algorithm; start with your team's pain points. Where are the real bottlenecks in your daily operations?

- Clinical Support: Think about diagnostic processes. Could AI help radiologists analyze medical images faster and more accurately? Or maybe it could predict which patients in the ICU are at the highest risk of deteriorating.

- Operational Efficiency: Are your administrative staff drowning in repetitive tasks? AI is brilliant for automating things like patient scheduling, claims processing, and prior authorizations.

- Patient Engagement: How can you better connect with patients? AI-powered chatbots can handle common questions, deliver post-discharge instructions, and help triage non-urgent issues, freeing up nurses for more critical tasks.

A great way to unearth these opportunities is by getting key people in a room together. We've found that structured sessions, like an AI discovery workshop, are invaluable for bringing together different perspectives and uncovering the needs that matter most.

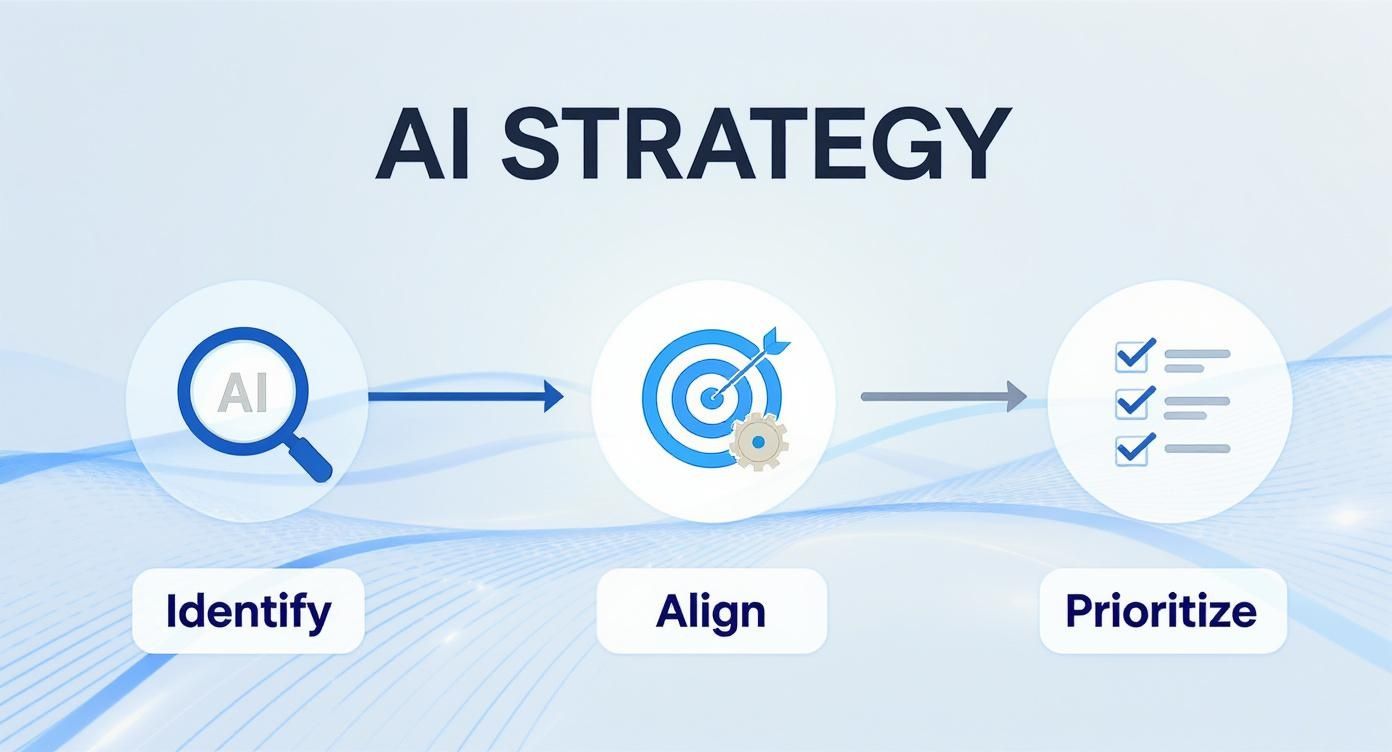

Align AI Initiatives with Core Goals

Once you have a long list of potential AI projects, you have to connect them to what the organization truly cares about. An AI initiative is much more likely to get the green light and the necessary funding if it clearly supports a major strategic goal, whether that's improving patient outcomes, slashing operational costs, or creating a better patient experience.

This visual lays out the simple, powerful process of identifying, aligning, and prioritizing your AI initiatives to build a strategy that actually works.

This foundational work ensures that every AI project you launch is purpose-driven and tied to real business value right from the start.

Prioritize for Early Wins

You can't do everything at once. Smart prioritization is what separates successful AI programs from those that stall out. To make this easier, we often help organizations create a simple scoring framework.

AI Opportunity Prioritization Framework for Healthcare

This framework helps you evaluate potential AI projects by weighing their clinical and operational impact against how difficult they'll be to implement.

| Priority Area | Potential AI Application | Key Success Metric | Implementation Complexity |
|---|---|---|---|
| Operational Efficiency | Automated prior authorization submissions | 50% reduction in staff time spent on authorizations | Low to Medium |
| Clinical Diagnostics | AI-assisted mammogram screening | 15% improvement in early cancer detection rates | High |
| Patient Throughput | Predictive patient discharge planning | 10% reduction in average length of stay | Medium |
| Resource Management | OR scheduling optimization | 20% increase in operating room utilization | Medium to High |

By mapping out your options this way, you can easily spot the "quick wins": projects with high value and lower complexity. These are your ideal starting points. Of course, a successful AI strategy also depends on robust underlying technology and support, which is where specialized healthcare IT services become critical partners.
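One way to turn a table like this into a ranked list is a simple impact-versus-complexity score. The sketch below is illustrative only: the 1 to 5 scales, the example scores, and the `priority_score` formula are assumptions made for this example, not a standard method, and your own review group would set the real values.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: int      # 1 (low) to 5 (high), assigned by your review group
    complexity: int  # 1 (low) to 5 (high)


def priority_score(opp: Opportunity) -> float:
    """Higher impact and lower complexity give a higher score."""
    return opp.impact / opp.complexity


# Hypothetical scores for the four rows in the framework table.
opportunities = [
    Opportunity("Automated prior authorization", impact=4, complexity=2),
    Opportunity("AI-assisted mammogram screening", impact=5, complexity=5),
    Opportunity("Predictive discharge planning", impact=4, complexity=3),
    Opportunity("OR scheduling optimization", impact=4, complexity=4),
]

# Sort so the likely quick wins come first.
ranked = sorted(opportunities, key=priority_score, reverse=True)
for opp in ranked:
    print(f"{opp.name}: {priority_score(opp):.2f}")
```

A ratio is the simplest possible choice. A weighted sum that also accounts for strategic alignment or data readiness may suit some organizations better.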

Key Takeaway: Your first AI projects should be visible, valuable, and achievable. A successful pilot acts as powerful proof that this works, making it far easier to get the buy-in and resources you'll need for more ambitious projects down the road.

Getting Your Data House in Order: Governance and Preparation

Before a single line of AI code is written, you have to get to grips with the one thing that fuels it all: data. In healthcare, data isn't just a string of ones and zeros; it's the digital reflection of a patient's life and well-being. Getting your data strategy right isn't just a best practice—it's a clinical and ethical necessity.

Let's be blunt: feeding an AI model poor-quality data is like asking a world-class surgeon to operate with rusty tools. The results will be messy, unreliable, and potentially dangerous. Biased algorithms, flawed predictions, and a complete breakdown of trust with clinicians are the direct consequences of a weak data foundation. You need to build on solid ground.

Start with a Deep-Dive Data Audit

First things first: you need to know exactly what you're working with. A comprehensive data audit is your treasure map, showing you where all the valuable information is buried across your organization. This goes far beyond the EHR. We're talking about everything—imaging archives (PACS), lab information systems (LIS), billing records, pharmacy data, even scheduling software.

The goal here is to answer a few critical questions:

- Where is our data? Is it neatly organized in structured databases, or is it locked away in unstructured clinical notes, PDFs, and siloed departmental systems?

- What’s the quality like? Be honest. Are you dealing with incomplete records, duplicate entries, inconsistent formatting, or outdated information?

- Can we actually get to it? Identify the technical and bureaucratic roadblocks. Are there APIs? Do you need special permissions? Is the data in a format that’s even usable?

This process is always illuminating. You might find that the cardiology department's records are formatted completely differently from oncology's, making it impossible to get a unified view of a patient's journey. Uncovering these issues early is what separates successful AI projects from expensive failures.
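The quality questions above can be checked mechanically on a small extract before anyone commits to a project. The following is a minimal sketch, assuming a hypothetical three-field record layout and ISO 8601 dates as the target format; real audits would run against your actual schemas.

```python
from collections import Counter
from datetime import date

# Hypothetical extract; in practice this would come from your EHR or data warehouse.
records = [
    {"patient_id": "P001", "dob": "1980-02-14", "diagnosis_code": "I10"},
    {"patient_id": "P002", "dob": "", "diagnosis_code": "E11.9"},
    {"patient_id": "P001", "dob": "1980-02-14", "diagnosis_code": "I10"},
    {"patient_id": "P003", "dob": "14/02/1975", "diagnosis_code": None},
]

REQUIRED_FIELDS = ("patient_id", "dob", "diagnosis_code")


def is_iso_date(value) -> bool:
    """True if the value parses as YYYY-MM-DD."""
    try:
        date.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


def audit(rows):
    """Count missing values, exact duplicate rows, and non-ISO dates of birth."""
    missing = Counter()
    for row in rows:
        for field in REQUIRED_FIELDS:
            if not row.get(field):
                missing[field] += 1
    seen = Counter(tuple(row.get(f) for f in REQUIRED_FIELDS) for row in rows)
    duplicates = sum(count - 1 for count in seen.values() if count > 1)
    bad_dates = sum(1 for row in rows if row.get("dob") and not is_iso_date(row["dob"]))
    return {"missing": dict(missing), "duplicate_rows": duplicates, "bad_dates": bad_dates}


report = audit(records)
print(report)
```

Even a crude report like this gives the audit team numbers to discuss instead of impressions.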

Build a Rock-Solid Governance Framework

Once you know what data you have, you need to establish the rules for how it's handled. A data governance framework isn't about creating red tape; it's about creating clarity, accountability, and long-term data integrity. Think of it as the hospital's safety protocol, but for information.

A strong framework will always include:

- Clear Data Ownership: Every dataset needs a designated owner. The head of radiology, for example, is the natural owner of all imaging data. They become the go-to person responsible for its quality, security, and appropriate use.

- Role-Based Access Controls: Not everyone needs to see everything. Implement strict policies that grant access based on a person's role. A clinician needs a patient's full chart, but a data scientist training a model may only need access to a de-identified version.

- Data Lifecycle Management: Define clear rules for data retention, archival, and secure deletion. This isn't just good housekeeping; it's a core compliance requirement.
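To make the access-control idea concrete, here is a minimal deny-by-default sketch. The role names and resource labels are hypothetical, and a real deployment would enforce these rules in your identity and access management system instead of application code.

```python
# Hypothetical roles and resources; real policies belong in your IAM system.
ROLE_PERMISSIONS = {
    "clinician": {"identified_chart"},
    "data_scientist": {"deidentified_dataset"},
    "billing": {"billing_records"},
}


def can_access(role: str, resource: str) -> bool:
    """Deny by default: unknown roles and unlisted resources get no access."""
    return resource in ROLE_PERMISSIONS.get(role, set())


print(can_access("clinician", "identified_chart"))       # allowed
print(can_access("data_scientist", "identified_chart"))  # denied
```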

A Word of Advice: Data governance is never "done." It’s an ongoing discipline. Set up a dedicated governance committee with leaders from clinical, IT, legal, and operational teams to keep policies relevant and address new challenges as they arise.

Moving from Raw Data to AI-Ready Assets

Raw clinical data is almost never ready to be fed directly into an AI model. It needs to be cleaned, standardized, and most importantly, protected. This prep work can be tedious and is often the most time-consuming part of any AI initiative, but skipping it is not an option.

Take unstructured data, for instance. A physician’s free-text notes contain a goldmine of information, but an algorithm can’t make sense of a narrative. This is where specialized tools like an AI-powered data extraction engine become invaluable, automatically pulling out structured data points like diagnoses, medications, and key findings. This step alone can massively improve the quality of your input data.

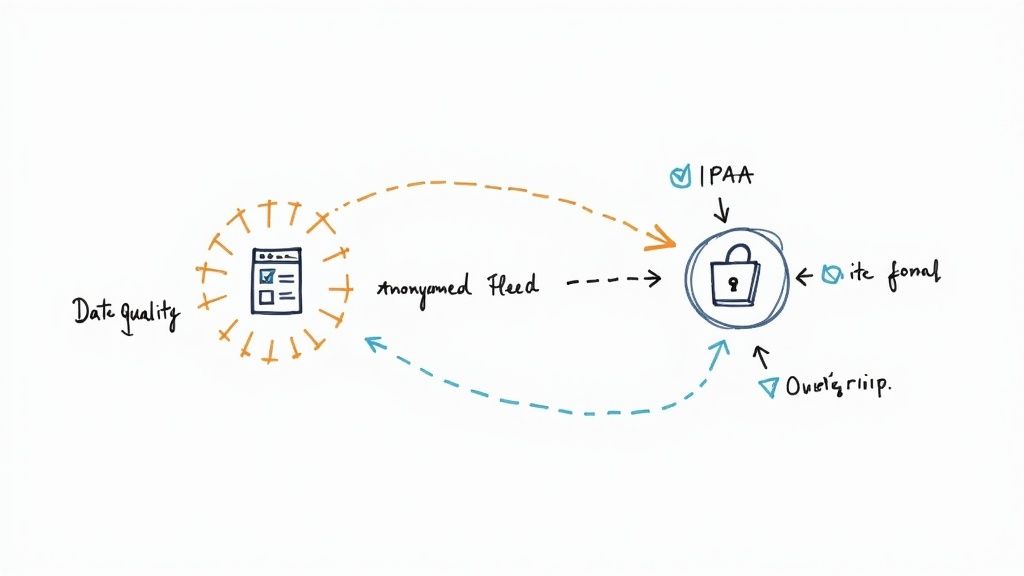

Finally, you must rigorously de-identify the data. Protected health information (PHI) and any other personally identifiable information (PII) have to be removed from datasets used for model training. Techniques like tokenization or data masking help protect patient privacy while keeping the data useful for analysis. A pristine, well-governed data foundation is what allows powerful AI to flourish, and it’s your best defense against privacy incidents and regulatory fines.
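As a rough illustration of tokenization and masking, the sketch below replaces a record number with a keyed token and redacts direct identifiers. The field names, the `[REDACTED]` marker, and the key handling are all assumptions for this example, and it is not a complete de-identification procedure on its own.

```python
import hashlib
import hmac

# Assumption: in production the key comes from a secrets manager, never source code.
SECRET_KEY = b"replace-with-a-managed-secret"

# Hypothetical list of fields to mask outright.
DIRECT_IDENTIFIERS = ("name", "phone")


def tokenize(value: str) -> str:
    """Map an identifier to a stable keyed token (same input gives same token)."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def deidentify(record: dict) -> dict:
    """Return a copy with the MRN tokenized and direct identifiers masked."""
    cleaned = dict(record)
    cleaned["mrn"] = tokenize(record["mrn"])
    for field in DIRECT_IDENTIFIERS:
        if field in cleaned:
            cleaned[field] = "[REDACTED]"
    return cleaned


patient = {"mrn": "123456", "name": "Jane Doe", "phone": "555-0100", "diagnosis_code": "I10"}
safe = deidentify(patient)
print(safe)
```

Because the token is stable, records for the same patient can still be linked across tables without exposing the original identifier.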

Getting Through Clinical Validation and Regulatory Hoops

Putting an AI tool into a live clinical environment is a massive responsibility. This is where the conversation shifts from what’s technically possible to what’s safe for patients. Before any algorithm gets near a diagnosis or treatment plan, it has to go through a gauntlet of validation to prove it's both effective and safe. This isn't just about checking a box; it's about building the fundamental trust that makes clinical AI work.

The path from a working model to a validated clinical tool is a deliberate, multi-step journey. It all starts with retrospective validation. Think of this as looking in the rearview mirror. You test your model against a huge set of historical, de-identified patient data to see how it would have performed. This is a critical first step that gives you a performance baseline in a completely controlled, risk-free environment.

Proving Performance Under Pressure

Once a model looks good on paper (or, in this case, on old data), it’s time to see how it handles the real world. This is where a prospective study comes in. The goal here is to mimic actual clinical situations as closely as you can. Typically, this means running the AI tool in a "shadow mode" on new patient data as it comes in. The AI makes its predictions, but they aren't used for patient care; instead, they're compared against the decisions made by clinicians.

During this phase, your team needs to be laser-focused on the metrics that truly matter for patient safety:

- Sensitivity: How good is the model at catching what it's supposed to catch? For a cancer screening tool, high sensitivity is non-negotiable because you can't afford to miss a diagnosis.

- Specificity: How good is the model at correctly identifying patients who don't have the condition? High specificity is just as important. It prevents the flood of false positives that cause patient anxiety and trigger expensive, often invasive, follow-up tests.

You have to know what "good" looks like. A solid AI requirements analysis should have already defined the minimum acceptable performance for these metrics well before you get to this point.
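Both metrics fall straight out of the confusion matrix: sensitivity is TP / (TP + FN) and specificity is TN / (TN + FP). Here is a minimal sketch using made-up labels, where 1 means the condition is present. The 0.90 minimum is a placeholder assumption, not a recommended threshold.

```python
def sensitivity_specificity(y_true, y_pred):
    """Compute sensitivity and specificity from binary labels (1 = condition present)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Hypothetical shadow-mode results: confirmed outcomes vs. model predictions.
confirmed = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]

sens, spec = sensitivity_specificity(confirmed, predicted)

# Placeholder requirement; the real value comes from your requirements analysis.
MIN_SENSITIVITY = 0.90
meets_requirement = sens >= MIN_SENSITIVITY

print(f"sensitivity={sens:.2f} specificity={spec:.2f} meets_requirement={meets_requirement}")
```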

Key Insight: An AI model is a reflection of the data it learned from. If your training data isn't diverse, your model won't be either. You have to intentionally design validation studies that include a broad mix of patient populations—different ages, ethnicities, and genders. An algorithm that works perfectly for one group but fails another isn't just a technical problem; it's a clinical failure.

Mastering the Regulatory Maze

Running parallel to your clinical validation efforts is the tangled web of regulatory compliance. In healthcare, this means playing by a very strict set of rules. The two big ones you’ll encounter are HIPAA and, for anything that could be considered a medical device, the FDA.

HIPAA (Health Insurance Portability and Accountability Act) is table stakes. It’s the law governing the privacy and security of all protected health information (PHI). Every single piece of your AI system, from how you store data to who can access it, must be buttoned up and compliant. There's no gray area here.

For a lot of AI tools that help with diagnosis or guide treatment, the FDA will classify them as Software as a Medical Device (SaMD). This label kicks things into a higher gear, demanding a mountain of documentation that covers the entire AI Product Development Workflow. You'll need to show them everything—your design process, your risk analysis, and every scrap of validation data. It’s an incredibly detailed process, and it’s why many health systems bring in partners who specialize in custom healthcare software development to make sure nothing gets missed.

As we explored in our AI adoption guide, getting clinicians on board is everything. The single best way to earn their trust is by being completely transparent about this rigorous validation process. When doctors see the evidence that an AI tool has been put through its paces and proven safe, they’re much more willing to actually use it. And that, after all, is the entire point. This hard work is what turns interesting AI solutions into tools that actually make a difference in patients' lives.

Weaving AI into Clinical Workflows and EHRs

An AI tool is only as good as its adoption rate. If it's clunky, disruptive, or feels like just another box to check, clinicians will ignore it—and a brilliant algorithm becomes a wasted investment. The real test of any healthcare AI initiative isn't the tech itself, but how seamlessly it can be woven into the daily fabric of clinical work and existing Electronic Health Record (EHR) systems.

This is where the rubber meets the road. True integration means the AI feels less like a separate tool and more like a natural extension of a clinician's own mind. It should anticipate needs and deliver insights right when they're needed most, without adding friction to an already demanding day. Getting this right requires a deep, empathetic understanding of how healthcare professionals actually work.

Finding the Path of Least Resistance

Before you even think about code, you need to map the existing workflow. I mean really map it out. Shadowing clinicians is one of the most powerful things you can do. Watch their rounds, see how they wrestle with the EHR, and pinpoint the exact moments they hit a bottleneck or face a tough decision. This firsthand observation is gold—it shows you the least disruptive entry points for an AI-powered assist.

You've got a few different ways to approach this, each with its own trade-offs:

- Embedded EHR Alerts: This is a classic. Think AI-driven warnings for sepsis risk or potential drug interactions that pop up directly within the patient’s chart. It’s effective because it meets clinicians exactly where they are.

- Standalone Applications: Sometimes, a separate app is unavoidable, especially for complex jobs like advanced medical imaging analysis. If you go this route, the user experience has to be flawless. Smooth single-sign-on (SSO) and an easy way to push data back into the EHR are non-negotiable.

- Smart Dashboards: For charge nurses or department heads, an AI-powered dashboard can be a game-changer. It can provide a real-time, bird's-eye view of the floor, flagging patient flow jams in the ER or predicting staffing needs for the next shift.

The right strategy depends entirely on the problem you're trying to solve. Don't force a square peg into a round hole.

The Clinician-Centric Design Imperative

A confusing interface will kill adoption, period. The design must be intuitive, fast, and genuinely helpful, not another source of cognitive load for an already stretched-thin clinical team.

A huge part of this is explainability. Clinicians are trained to be skeptical; they need to understand the "why" behind an AI's suggestion. Instead of just spitting out a risk score, the interface should provide a concise, understandable rationale.
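One simple way to surface a rationale is to show which inputs contributed most to a score. The sketch below uses a toy linear score with invented feature names and weights. It is not a validated clinical model, and more complex models usually need dedicated attribution methods.

```python
# Hypothetical weights for an illustrative linear risk score; not a validated model.
WEIGHTS = {
    "elevated_heart_rate": 0.30,
    "elevated_lactate": 0.40,
    "age_over_65": 0.15,
    "abnormal_wbc": 0.15,
}


def explain(features: dict, top_n: int = 2):
    """Return the total score and the top contributing factors that are present."""
    contributions = {name: WEIGHTS[name] * float(present) for name, present in features.items()}
    score = sum(contributions.values())
    present_factors = [item for item in contributions.items() if item[1] > 0]
    top = sorted(present_factors, key=lambda item: item[1], reverse=True)[:top_n]
    return score, top


patient_features = {
    "elevated_heart_rate": True,
    "elevated_lactate": True,
    "age_over_65": False,
    "abnormal_wbc": True,
}

score, top_factors = explain(patient_features)
print(f"Risk score: {score:.2f}")
for name, weight in top_factors:
    print(f"  driven by {name} (+{weight:.2f})")
```

Showing two or three drivers next to the score gives a clinician something to verify against the chart, which a bare number does not.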

Reducing friction matters just as much as explaining results. For instance, incorporating effective voice recognition software for healthcare can be a massive win, cutting down on the manual data entry that everyone hates. Imagine a radiologist dictating their findings naturally, while an AI simultaneously analyzes the image and structures the report. That's the kind of practical value we build into our own internal tooling.

Pro Tip: Bring your clinicians to the design table from day one. Let them play with prototypes, give raw feedback, and help shape the tool. When they feel like co-creators, they become your most powerful champions at launch.

Tackling the Interoperability Challenge

Let's be honest: getting a new tool to talk to your EHR can be a technical nightmare. Healthcare data is notoriously siloed. Thankfully, modern standards like Fast Healthcare Interoperability Resources (FHIR) are making a real difference. FHIR defines standard data formats, called resources, and a REST API for exchanging them, so it acts as a common language between otherwise incompatible systems. Your integration's success hinges on a solid technical foundation built around these modern protocols.
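To give a feel for what working with FHIR looks like, the sketch below builds a Patient search URL and reads a name out of a trimmed Patient resource. The base URL is a placeholder and the sample data is invented. Authentication, paging, and error handling are deliberately left out.

```python
import json
from urllib.parse import urlencode

# Hypothetical server base URL; substitute your EHR vendor's FHIR endpoint.
FHIR_BASE = "https://fhir.example.org/r4"


def patient_search_url(family: str, birthdate: str) -> str:
    """Build a FHIR search URL of the form [base]/Patient?param=value."""
    query = urlencode({"family": family, "birthdate": birthdate})
    return f"{FHIR_BASE}/Patient?{query}"


# A trimmed, invented Patient resource using standard FHIR R4 field names.
sample = json.loads("""
{
  "resourceType": "Patient",
  "id": "example",
  "name": [{"family": "Chalmers", "given": ["Peter", "James"]}],
  "gender": "male",
  "birthDate": "1974-12-25"
}
""")


def display_name(patient: dict) -> str:
    """Join given and family names from the first name entry."""
    name = patient["name"][0]
    return " ".join(name.get("given", []) + [name["family"]])


print(patient_search_url("Chalmers", "1974-12-25"))
print(display_name(sample))
```

Because every conformant server exposes the same resource shapes, the parsing code stays the same when the endpoint changes.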

Looking ahead, this kind of deep integration is only going to become more common. It's predicted that by 2025, roughly 80% of hospitals will be using AI to enhance everything from triage and diagnostics to patient engagement.

This is how theoretical AI solutions become indispensable clinical assets. Our own Clinic AI Assistant, for example, was built from the ground up to augment clinical workflows, taking on administrative tasks so practitioners can get back to focusing on patients. In the end, the technical work is only half the story. The real victory is making a clinician's job easier and empowering them to deliver even better care.

Getting Your Team On Board and Proving It Worked

Getting an AI tool live is a huge accomplishment, but let's be honest—that's where the real work begins. The success of any effort to implement AI in healthcare comes down to what happens after the launch. This is where the tech meets the messy, fast-paced reality of a hospital floor. It's all about convincing busy clinicians to actually use the new tool and then proving the whole project was worth the time and money.

If you don't have a solid plan for managing this change, even the most brilliant AI will end up as expensive shelfware. Clinicians have been burned before by tech that promised to simplify their lives but only added more clicks and headaches. You have to convince them this is different.

From Tool to Teammate

First things first: position the AI as an expert assistant, not a replacement. Your entire training should be built around this idea of partnership and support. This isn't about teaching people how to use software; it’s about showing them how this new capability can magnify their skills and take the tedious, soul-crushing work off their plates.

A training plan that actually works needs a few key ingredients:

- Role-Specific Scenarios: Forget generic demos. You need to show people how the tool fits into their day. A radiologist’s workflow is a world away from an ER nurse's.

- Find Your Champions: Every department has respected doctors and nurses who are open to new ideas. Get them on board early. Their enthusiasm and ability to coach their peers will be far more effective than any memo from the C-suite.

- Create an Open Dialogue: Set up a dead-simple way for users to flag problems, ask for help, or suggest a better way of doing things. When people see their feedback leads to real changes, they shift from being users to being partners.

This is how you turn skepticism into a sense of ownership. You want the AI to feel like their tool, something that helps them provide smarter, faster care. Many health systems find that a comprehensive AI strategy consulting partner is crucial for building out this human-focused adoption roadmap right from the start.

Tracking What Truly Matters

You can't claim a victory if you never defined what winning looks like. Before a single user logs in, you must establish clear Key Performance Indicators (KPIs) that tie the AI's function directly to the hospital's biggest goals. These metrics are what turn feel-good stories into the hard data that gets an executive's attention.

Key Takeaway: You need a balanced scorecard. Measure the wins across clinical quality, operational speed, and financial impact. Focusing on just one gives you a dangerously incomplete picture of the AI's real value.

Your KPI dashboard should give you a full 360-degree view, tracking metrics like:

- Clinical Outcomes: Are diagnostic accuracy rates improving? Are we seeing fewer medical errors or lower 30-day readmission rates?

- Operational Efficiency: Have patient wait times in the ED gone down? Is imaging report turnaround faster? How many administrative hours are we saving each week?

- Financial Returns (ROI): What are the direct cost savings from automation? Are we increasing patient throughput in key service lines?

This is how you make the business case to go bigger. When you can walk into a budget meeting with a clear ROI analysis backed by undeniable data, you're not just justifying the pilot project. You're building the momentum for the next wave of innovation.

You can explore a variety of real-world use cases on our portal to get a better sense of what’s possible. If you need help navigating this, our expert team is here to guide you through the process.

Common Questions About Implementing AI in Healthcare

Even with a detailed roadmap, you’re going to have questions. That’s a given with any major initiative, especially one as complex as bringing AI into a clinical setting. This last section tackles some of the most common—and toughest—questions healthcare leaders ask. The goal is to give you straight answers to help you navigate these big decisions with confidence.

What’s the Single Biggest Barrier to AI Adoption in a Hospital?

You might think it’s the tech—things like messy data or getting systems to talk to each other. While those are certainly major hurdles, the real showstopper is almost always cultural. The hardest part is getting clinicians to trust the new tool and figuring out how to fit it into their already chaotic day.

Let’s be honest: any new tool that adds clicks, sows confusion, or interrupts a physician’s flow is dead on arrival. It doesn't matter how brilliant the algorithm is. Real success comes from a deep, almost ethnographic, understanding of how clinicians actually work. It requires robust training that proves the AI is a helpful co-pilot, not another administrative burden. A good AI strategy consulting partner helps you map out and get ahead of these human-centric challenges right from the start.

How Do We Make Sure Our AI Models Are Ethical and Unbiased?

This isn’t a one-and-done checkbox. Ensuring AI fairness is a constant commitment, a cycle of diligence that has to be baked into your governance from day one.

It all starts with your training data. The datasets you use must reflect the actual patient population you serve. If they don't, you risk building demographic or socioeconomic biases right into the model's core logic. Then, as you develop the model, you have to actively audit its performance across different patient groups using specific fairness metrics.
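A basic subgroup audit compares the same metric across patient groups. The sketch below computes sensitivity (true positive rate) per group and the gap between the best and worst group, on invented data with placeholder group labels. Which groups and which gap size matter is a decision for your governance committee.

```python
from collections import defaultdict

# Hypothetical validation rows: (group label, true outcome, model prediction).
rows = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 0, 0),
]


def sensitivity_by_group(data):
    """Compute the true positive rate separately for each group."""
    true_positives = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, pred in data:
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}


rates = sensitivity_by_group(rows)
gap = max(rates.values()) - min(rates.values())

for group, rate in rates.items():
    print(f"{group}: sensitivity={rate:.2f}")
print(f"largest gap between groups: {gap:.2f}")
```

A large gap means the model misses more true cases in one group than another, which is exactly the kind of finding that should go back to the team before deployment.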

Transparency is also key. Clinicians don't need to become data scientists, but they do need a clear, high-level picture of how the AI reaches its conclusions—what we call explainability. Finally, you absolutely need a multidisciplinary AI governance committee. Pull in clinicians, data scientists, and ethicists to provide continuous oversight and monitor how these tools perform in the real world. You can also use our AI Strategy consulting tool to navigate these complexities.

Crucial Insight: An ethical AI framework isn't a static document; it's a living system. It demands constant attention, regular audits, and an open feedback loop with the clinicians using the tools every single day. This is a non-negotiable part of doing AI responsibly.

Should We Build Our Own AI Solution or Buy One?

The classic "build vs. buy" question really comes down to a trade-off between your organization's unique needs, your available resources, and your team's technical depth. There's no universal answer, but a frank evaluation will point you in the right direction.

- Building a custom solution gets you a perfect, tailor-made fit for your specific workflows. But be prepared—it demands a huge investment in talent, time, and infrastructure.

- Partnering with a vendor for a proven AI Automation as a Service offering can get a compliant, reliable tool into clinicians' hands much, much faster.

More often than not, a hybrid approach makes the most sense. You might buy an off-the-shelf AI tool for a common task like medical imaging analysis, while building your own internal tooling to solve a thorny operational problem unique to your hospital. The best first step is always a thorough AI requirements analysis to get crystal clear on your needs before you make the call.

How Do We Actually Measure the ROI of an AI Project?

Calculating the Return on Investment (ROI) for AI in a clinical environment means looking beyond the balance sheet. The real value is a mix of hard numbers and qualitative improvements that ripple across the entire organization.

You have to tell a story that balances both sides of the value equation:

- Quantitative Wins: These are the tangible metrics you can count. Think direct cost savings from automation, a reduction in patient length-of-stay, or lower staff turnover in administrative roles.

- Qualitative Value: This is where the true impact lies. We're talking about better patient outcomes, higher clinician satisfaction, and improved patient safety—all things that have massive, if indirect, financial benefits down the line.

To make a compelling business case, you have to connect the dots. Frame it in terms your CFO will understand: "Reducing diagnostic report turnaround time by 20% will allow us to increase patient throughput by X." A Custom AI Strategy report can be instrumental in helping you identify the most meaningful metrics to track and build that comprehensive business case.
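For the quantitative side, the basic arithmetic is ROI = (benefit - cost) / cost. The sketch below works through it with invented figures for staff hours saved, loaded hourly cost, and first-year project cost. All of these are assumptions to be replaced with your own numbers.

```python
def annual_benefit(hours_saved_per_week: float, hourly_cost: float, weeks: int = 52) -> float:
    """Estimate yearly savings from staff time freed up by automation."""
    return hours_saved_per_week * hourly_cost * weeks


def roi(total_benefit: float, total_cost: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical inputs for a prior authorization automation pilot.
benefit = annual_benefit(hours_saved_per_week=120, hourly_cost=40.0)
cost = 180_000.0  # assumed first-year licensing, integration, and training

first_year_roi = roi(benefit, cost)
payback_months = cost / (benefit / 12)

print(f"Annual benefit: ${benefit:,.0f}")
print(f"First-year ROI: {first_year_roi:.1%}")
print(f"Payback period: {payback_months:.1f} months")
```

This captures only the countable savings. The qualitative value described above still needs to be argued alongside it.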

At Ekipa AI, we know that bringing AI into healthcare is a journey filled with tough decisions. Our expert team is here to provide the strategic guidance and technical know-how you need to turn your vision for AI into a clinical reality.