Responsible AI: A Leader's Guide to Trust and Growth

Discover how Responsible AI frameworks, principles, and roadmaps help you build trust and drive growth for your business.

Responsible AI is far more than just a set of ethical guidelines or a box to tick for compliance. It's a core business strategy that directly impacts sustainable growth. The goal is to ensure that the artificial intelligence systems you build and deploy are safe, fair, and transparent—turning AI from a potential liability into one of your most powerful assets for building brand trust and lasting customer loyalty.

Why Responsible AI Is Your New Business Imperative

In the rush to integrate artificial intelligence, it’s easy to focus only on speed and capability. But many companies are learning the hard way that overlooking trust is a critical mistake. Responsible AI isn't about abstract ideals; it's a practical framework that prevents your innovations from creating legal, reputational, or financial headaches down the road.

Think of it this way: launching an AI without a responsible framework is like building a skyscraper without inspecting the foundation. It might look impressive at first, but hidden flaws can lead to catastrophic failure. Today, your customers, regulators, and even investors demand more than just powerful tech—they demand technology they can rely on. Committing to Responsible AI signals that your company prioritizes fairness, transparency, and accountability.

From Cost Center to Competitive Advantage

The real shift happens when you stop seeing Responsible AI as a cost and start seeing it as an opportunity. Many leaders initially view it as a compliance burden—a series of checks that slow things down. That perspective is quickly becoming obsolete. In reality, proactively adopting these principles creates a clear competitive advantage in a very crowded market.

When your AI solutions are built on a foundation of trust, you unlock real business benefits. These include:

Stronger Brand Reputation: Companies known for ethical AI attract and keep customers who care about data privacy and fairness.

Lower Regulatory Risk: With regulations like the EU AI Act now in force, a solid governance framework minimizes the risk of steep fines and legal challenges.

Deeper Customer Loyalty: When customers trust how your AI works, they stick around. Transparency is the bedrock of confidence.

Attracting Top Talent: The best AI professionals want to work for companies making a positive impact, not just a quick profit.

This strategic pivot turns a potential weakness into a durable strength, making your business more resilient and adaptable.

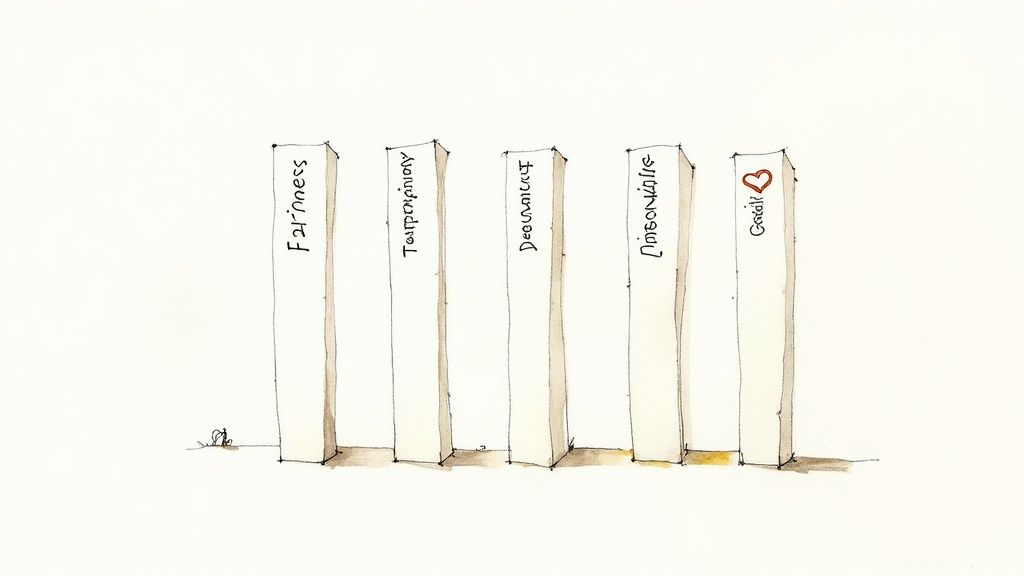

The Core Pillars of Responsible AI at a Glance

To make this concrete, let's break down the fundamental principles. These pillars form the bedrock of any solid Responsible AI framework, giving executives a clear roadmap for what to focus on.

| Pillar | Core Principle | Business Impact |
|---|---|---|
| Fairness | Systems should treat all individuals and groups equitably, avoiding unfair bias or discrimination. | Expands your customer base, improves brand perception, and reduces the risk of discriminatory outcomes. |
| Transparency | It should be clear how AI systems make decisions. This includes explainability and interpretability. | Builds customer trust, simplifies debugging and auditing, and meets regulatory requirements for explainability. |
| Privacy & Security | AI systems must protect user data, ensuring confidentiality, integrity, and compliance with privacy laws. | Prevents costly data breaches, strengthens customer confidence, and ensures compliance with laws like GDPR. |
| Robustness & Reliability | AI models must perform consistently and safely, even when faced with unexpected or adversarial inputs. | Minimizes system failures, ensures dependable performance, and protects against security vulnerabilities. |
| Accountability | Clear lines of responsibility must be established for the outcomes of AI systems. | Clarifies ownership when things go wrong, improves governance, and ensures someone is answerable for the system's impact. |

These pillars aren't just technical goals; they are business imperatives. Each one directly contributes to building a more trustworthy, resilient, and successful organization.

The Tangible Business Impact

Embedding responsibility into your AI lifecycle isn't just about dodging bullets; it's about hitting new targets. Think about the direct impact on your bottom line. An AI hiring tool that is fair and unbiased widens your talent pool and improves diversity, which research has linked to better business outcomes. A transparent loan-processing algorithm that can explain its decisions reduces customer complaints and builds lasting trust.

A Responsible AI Framework isn’t a cost—it’s a competitive advantage. It reduces compliance risks, streamlines operations, prevents costly failures, and builds customer trust. Forward-thinking businesses are embedding responsible AI to unlock efficiency, resilience, and long-term value.

Ultimately, Responsible AI is the bridge between what’s technically possible and what’s ethically and commercially sound. It ensures your technological advancements align with your business values and society's expectations, creating a sustainable path for innovation and growth.

Understanding the Five Pillars of Trustworthy AI

To get AI right, we have to move past the buzzwords and dig into what "Responsible AI" actually means day-to-day. At its heart, this is about turning a powerful technology into a trusted partner for your business. This entire effort stands on five foundational pillars: Fairness, Transparency, Privacy, Robustness, and Accountability.

Think of it like building a new piece of critical machinery for your company. You wouldn't just flip the switch and hope for the best. You'd need to be certain it works correctly, safely, and for everyone it's meant to serve. These five pillars are the blueprint for that essential quality control.

Pillar 1: Fairness

Fairness in AI is all about making sure our systems don't become massive engines for amplifying human bias. An AI model is only as smart as the data it learns from, and if that data is full of historical prejudice, the model will learn those same bad habits and apply them at scale.

Take an AI-powered hiring tool. If it's trained on decades of company data where men were hired for leadership roles more often than women, it might start to unfairly penalize highly qualified female candidates. A fair system is one that has been deliberately designed and stress-tested to deliver equitable outcomes, regardless of a person's background. That means a ton of work goes into data selection, auditing, and bias correction.
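To make the auditing step less abstract, here is a minimal sketch of one common fairness check: comparing selection rates between groups. The function names and the sample data are hypothetical, and the 0.8 cutoff is the widely used "four-fifths" rule of thumb rather than anything this guide prescribes. A real audit would look at several metrics, not just this one.

```python
from collections import Counter


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model shortlisted the candidate.
    """
    totals = Counter()
    positives = Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical screening results, invented purely for illustration.
sample = (
    [("men", True)] * 40 + [("men", False)] * 60
    + [("women", True)] * 25 + [("women", False)] * 75
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb (an assumption here)
print(rates, round(ratio, 3), needs_review)
```

A ratio well below 0.8 does not prove discrimination on its own, but it is a reasonable trigger for a closer look at the training data and the model.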

Pillar 2: Transparency

Transparency is the only real cure for the infamous "black box" problem, where nobody can figure out how an AI made its decision. It’s about having the ability to pop the hood and explain why a model came to a specific conclusion.

Let's say a bank uses an AI to review mortgage applications. If someone is denied, transparency means the loan officer can give a clear reason, like, "The model flagged that the applicant's debt-to-income ratio was above our set limit." This builds trust with customers and gives them a clear path to appeal or reapply. It’s why tools offering model explainability, like our VeriFAI platform for validating AI models, are becoming non-negotiable for any business that needs to stand behind its AI-driven decisions.
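As a rough illustration of what "giving a clear reason" can look like in code, the sketch below returns plain-language reasons alongside every decision. It is a deliberately simple rule-based stand-in, not a technique for explaining a trained model, and the thresholds, field names, and `review` function are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy limits; a real lender would set and document its own.
MAX_DEBT_TO_INCOME = 0.43
MIN_CREDIT_SCORE = 620


@dataclass
class Application:
    monthly_debt: float
    monthly_income: float
    credit_score: int


def review(app: Application) -> tuple[bool, list[str]]:
    """Return (approved, reasons) so every denial carries an explanation."""
    if app.monthly_income <= 0:
        raise ValueError("monthly_income must be positive")

    reasons = []
    dti = app.monthly_debt / app.monthly_income
    if dti > MAX_DEBT_TO_INCOME:
        reasons.append(
            f"Debt-to-income ratio of {dti:.0%} is above the "
            f"{MAX_DEBT_TO_INCOME:.0%} limit."
        )
    if app.credit_score < MIN_CREDIT_SCORE:
        reasons.append(
            f"Credit score of {app.credit_score} is below the "
            f"minimum of {MIN_CREDIT_SCORE}."
        )
    # Approved only when no rule produced a reason for denial.
    return (not reasons, reasons)


approved, reasons = review(Application(2500, 5000, 700))
print(approved, reasons)
```

The design choice worth copying is the return type: a decision never leaves the function without the reasons that produced it, which gives the loan officer something concrete to pass on.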

Pillar 3: Privacy and Security

This one's a big deal. Privacy is the commitment that an AI system will handle personal data with respect, honoring user consent and locking down sensitive information. In a world full of data protection laws, this pillar is absolutely non-negotiable.

Imagine an AI used in custom healthcare software development to analyze patient charts and predict disease risks. The need to protect that data is immense. A responsible AI would use techniques like data anonymization and heavy-duty encryption to make sure patient confidentiality is never, ever compromised. Security is the shield that makes privacy a reality.
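Here is a small sketch of one of those techniques, assuming a patient record stored as a plain dictionary. It replaces the patient ID with a keyed hash (HMAC-SHA256 from Python's standard library) and drops direct identifiers. The field names and the key are hypothetical, and note that this is pseudonymization rather than full anonymization: anyone holding the key can still link records back to a patient.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"patient_id", "name", "email"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Return a stable, keyed token that stands in for the real ID."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def strip_direct_identifiers(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and add a pseudonymous reference instead."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_ref"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Illustration only: a real key belongs in a secrets manager, not in code.
key = b"example-key-do-not-use"
record = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "age": 54,
    "diagnosis_code": "E11",
}
safe_record = strip_direct_identifiers(record, key)
print(safe_record)
```

Because the token is stable for a given key, analysts can still join a patient's records across datasets without ever seeing who the patient is.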

Gaining public confidence is still a major hurdle. A global study from KPMG found that only 46% of people are willing to trust AI systems. That number alone shows just how urgent it is to get these practices right.

Pillar 4: Robustness

You can also call this one reliability. Robustness is an AI's ability to perform correctly and consistently, even when it encounters weird, unexpected, or even malicious data. A robust model is resilient and doesn't get easily thrown off course.

For instance, the image recognition system in a self-driving car must be robust enough to know a stop sign is a stop sign, even if it's partially hidden by a tree branch or has graffiti on it. If a small, real-world imperfection causes the system to fail completely, it isn't robust. Building robust AI means putting it through hell—testing it under every conceivable condition to make sure it holds up.

"A robust AI model is like a seasoned expert. It doesn't just perform well under ideal conditions; it maintains its composure and makes reliable judgments even when things get messy and unpredictable."

Pillar 5: Accountability

Last but not least, accountability is about drawing clear lines of responsibility for what an AI system does. If an AI makes a critical mistake, who’s on the hook? The software developer? The company that deployed it? The person who was using it?

A solid accountability framework figures all that out from the very beginning. It ensures there are humans in the loop who have the authority and the responsibility to oversee the system, step in when needed, and fix any harm that's been done. This pillar is what keeps technology serving people, not the other way around.

Charting the Course Through Global AI Regulations

The global rulebook for AI is being written as we speak, and it’s no longer a conversation confined to the legal department. If you're a business leader, getting a handle on this tangled web of regulations is critical for market access, protecting your brand, and ensuring your company's future. Keeping up isn't just about ticking compliance boxes; it's about smart, competitive positioning.

Trying to navigate this landscape can feel like aiming at a moving target. We're seeing major frameworks like the EU AI Act set a global pace with its risk-based approach, while in the U.S., the NIST AI Risk Management Framework offers a voluntary—but incredibly influential—guide for creating trustworthy AI. The big picture here isn't about memorizing every single law. It’s about recognizing the trend: governments everywhere are pushing for more accountability, transparency, and fairness in AI.

Turning Compliance into Your Competitive Advantage

The smartest companies are flipping the script on regulation. Instead of seeing it as another costly burden, they're treating it as a golden opportunity to build trust and get a leg up on the competition, especially in international markets. When you align your AI development with these globally recognized standards, you're sending a clear signal that you're committed to doing things right. That makes your products far more appealing to savvy enterprise customers and partners.

This proactive approach transforms a potential roadblock into a serious differentiator. Building your systems with compliance baked in from the start saves you from expensive, painful retrofits down the line and lowers the risk of getting shut out of major economies. As we explored in our AI adoption guide, weaving regulatory awareness into your development cycle early on is a hallmark of a truly mature AI strategy.

And things are moving fast. A recent Stanford HAI report found that legislative mentions of AI across 75 countries rose by 21.3% in a single year, continuing a ninefold increase since 2016. In the United States alone, federal agencies introduced 59 new AI-related regulations in one year, more than double the year before. You can dive deeper into these numbers in the full AI Index Report.

Why a Proactive Strategy Isn't Optional Anymore

Sitting back and waiting for regulations to be finalized before you act is a surefire way to get left behind. The core ideas driving these new rules—fairness, transparency, and accountability—are already shaping what your customers expect from you. If you're always reacting, your best innovations could get stuck in compliance limbo, delaying launches and costing you market share.

This is exactly why proactive AI strategy consulting has become essential for any business with global aspirations. Partnering with experts can help you:

See Around the Corner: Get a clear picture of which emerging regulations will likely hit your industry first so you can prepare.

Build a Solid Foundation: Create internal governance that lines up with major international standards like the EU AI Act and NIST frameworks.

Dodge Potential Bullets: Find and fix compliance gaps in your current AI solutions before they blow up into real problems.

Market Your Integrity: Turn your commitment to responsible AI into a powerful sales tool, showing customers that your tech is built on a foundation of trust.

In the end, a sharp regulatory strategy ensures your AI program isn't just powerful, but also respected and resilient enough to compete on the world stage. Think of it as an investment in future-proofing your business against the inevitable rise of global AI standards.

How to Build Your AI Governance Framework

Putting principles into practice is where Responsible AI gets real. Think of a governance framework as the central nervous system for your entire AI strategy—it’s what translates lofty ideals into the daily decisions your teams make. Without this structure, even the best intentions can go astray, leaving you exposed to serious ethical and reputational risks.

This isn't about wrapping your teams in red tape. It's about building smart guardrails that give them the confidence to innovate safely. The whole point is to weave responsible practices into the very fabric of your company, not just tack them on as an afterthought.

Assemble Your AI Ethics Board

First things first: you need a dedicated, cross-functional AI Ethics Board or Council. This group acts as the ultimate authority on AI governance. They should have the teeth to review, approve, and, if necessary, halt projects that don't align with your company's ethical standards. This is definitely not just a job for IT or legal; you need a mix of voices in the room.

Your board should pull in people from across the business:

Executive Leadership: To keep everything aligned with core business strategy and provide top-down support.

Legal and Compliance: To help you navigate the tricky and ever-changing regulatory waters.

Data Science and Engineering: To ground the conversation in what's technically possible and what the real-world limitations are.

Product Management: To be the voice of the customer and the market.

Ethics and Human Resources: To champion fairness, employee well-being, and the human side of the equation.

This team’s main job is to draw your ethical red lines and make sure no AI initiative crosses them. To get a head start, you can use tools like a free responsible AI policy generator to help lay down the foundational policies for your framework.

Define Roles and Responsibilities

A framework is useless if nobody knows who’s supposed to do what. Clear ownership is everything. While the Ethics Board sets the overall strategy, you need to assign specific roles to handle the day-to-day work.

Consider creating a few key positions:

AI Product Owner: This person owns the AI product from start to finish, including its performance and its ethical impact.

Data Steward: They are accountable for the quality, integrity, and ethical sourcing of the data that fuels your models.

AI Risk Officer: Their job is to constantly scan the horizon for risks, assess their potential impact, and figure out how to manage them.

Defining these roles builds accountability right into your AI Product Development Workflow, beginning with the initial AI requirements analysis. It creates a clear chain of command and responsibility from the first spark of an idea all the way to deployment.

Conduct Rigorous Risk Assessments

Before a single line of code gets written, every single AI project needs a thorough risk assessment. It’s a simple, proactive step that helps you spot potential ethical, legal, or reputational disasters before they become expensive, embarrassing problems. Using a standardized template keeps everyone on the same page and forces your teams to think hard about the potential downsides.

A proactive risk assessment is your first line of defense. It shifts the conversation from "Can we build this?" to "Should we build this?" and forces a deeper consideration of the potential consequences for your customers and society.

For leaders who want to formalize this, creating a Custom AI Strategy report is a powerful move. It helps translate your high-level principles into concrete operational policies that guide your teams every day, with risk assessment sitting right at the heart of it all.

Here’s a practical template you can adapt to guide your risk assessments.

AI Project Risk Assessment Matrix Template

This template is a starting point to help your teams systematically identify, evaluate, and plan for potential risks before an AI project gets off the ground.

| Risk Category | Potential Impact (Low/Medium/High) | Likelihood (Low/Medium/High) | Mitigation Strategy |
|---|---|---|---|
| Data Bias | High | Medium | Perform a bias audit on training data; use de-biasing techniques; ensure diverse data sources. |
| Lack of Transparency | Medium | High | Implement explainability tools (XAI); document model decision logic; prepare plain-language explanations for users. |
| Privacy Violation | High | Low | Anonymize or pseudonymize personal data; implement strict access controls; conduct a Data Protection Impact Assessment (DPIA). |
| Security Vulnerability | High | Medium | Conduct adversarial testing to find weaknesses; implement robust monitoring for unusual activity; secure data pipelines. |
| Unintended Use | Medium | Medium | Define clear terms of service and usage limitations; monitor for misuse; build in technical safeguards to prevent off-label use. |

By walking every project through a process like this, you’re not just avoiding problems—you’re actively building an AI ecosystem that’s resilient, reliable, and worthy of your customers’ trust.
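If your teams prefer to keep the matrix in code rather than a spreadsheet, a sketch like the one below can help rank risks for review. The entries mirror the template above. The 1-to-3 scale and the impact-times-likelihood score are assumptions added for illustration, since the template itself does not prescribe a scoring method.

```python
from dataclasses import dataclass

# Assumed numeric scale for the Low/Medium/High ratings in the template.
LEVELS = {"Low": 1, "Medium": 2, "High": 3}


@dataclass
class Risk:
    category: str
    impact: str
    likelihood: str

    @property
    def score(self) -> int:
        """Impact multiplied by likelihood (an illustrative convention)."""
        return LEVELS[self.impact] * LEVELS[self.likelihood]


risks = [
    Risk("Data Bias", "High", "Medium"),
    Risk("Lack of Transparency", "Medium", "High"),
    Risk("Privacy Violation", "High", "Low"),
    Risk("Security Vulnerability", "High", "Medium"),
    Risk("Unintended Use", "Medium", "Medium"),
]

# Highest scores first; ties keep the order they had in the template.
ranked = sorted(risks, key=lambda r: r.score, reverse=True)
for risk in ranked:
    print(f"{risk.score}  {risk.category}")
```

A single score is a blunt instrument. A high-impact, low-likelihood risk such as a privacy violation lands at the bottom of this ranking, yet it may still deserve attention first, so treat the order as a conversation starter for the Ethics Board rather than a verdict.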

Your Practical Roadmap to Implementing Responsible AI

A well-designed governance framework is just a document until you bring it to life. The real work is translating your responsible AI principles into concrete actions, and for that, you need a clear, phased roadmap. This approach keeps the process manageable, builds momentum, and weaves these new practices into your existing operations instead of blowing them up.

The key is to treat implementation as a continuous journey, not a one-time project. Think of it in four distinct, logical stages that guide you from an initial health check to ongoing improvement.

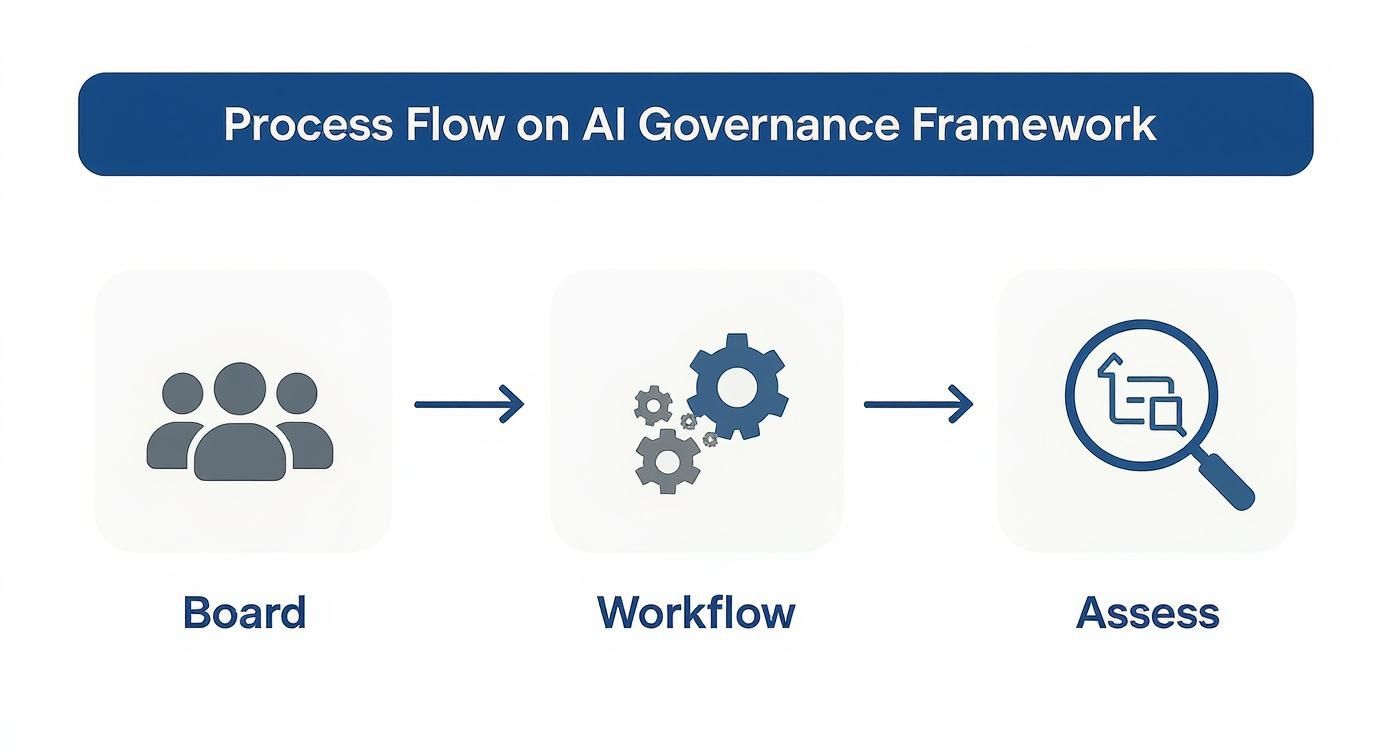

This flow chart gives a bird's-eye view of how to establish and maintain a solid AI governance framework.

What this shows is the critical dance between high-level oversight from the board, the structured workflows that put policies into practice, and the constant assessment needed to make sure everything is working as it should.

Stage 1: Discovery and Assessment

First things first: you need to know your starting point. You can't draw a map to your destination if you don’t know where you are. This phase is all about taking a complete inventory of your current AI landscape and shining a light on potential blind spots.

Here's what to do:

Catalog all existing AI systems: Get a list of every model, tool, and algorithm you're currently using. This includes everything from your customer-facing chatbots to your internal tooling.

Identify key stakeholders: Who owns these systems? Who is impacted by them? Pull together a cross-functional team from legal, IT, product, and the business side.

Conduct an initial risk analysis: Take a hard look at each system through the lens of responsible AI. Where are your biggest risks when it comes to fairness, transparency, or privacy?

This audit gives you the baseline data you need to make smart, informed decisions in the next stage.

Stage 2: Strategy and Framework Design

With a clear picture of where you stand, you can now design a strategy that actually fits your business. This is where you formalize your company's commitment to responsible AI and create the specific policies and procedures that will guide your teams.

During this stage, you will:

Establish your AI Ethics Board: Make it official. Create the committee responsible for oversight.

Draft your AI Principles: Define what "responsible" means for your organization in clear, actionable terms. No fluff.

Develop risk assessment protocols: Build the templates and workflows your teams will use to vet new AI projects before they ever get off the ground.

Putting responsible AI into practice is gaining speed, but it’s not always a smooth ride. A recent industry report found that 74% of Fortune 500 companies had to hit pause on at least one AI project last year because of unexpected issues. In response, 56% now list AI as a risk factor in their annual reports—a massive jump from just 9% the year before.

Stage 3: Pilot Implementation

Now it's time for theory to meet practice. Instead of a disruptive, company-wide rollout, you’ll pick a single, high-impact project to test-drive your new framework. This is your chance to learn, iterate, and build a success story that gets everyone else on board.

Choosing the right pilot is crucial. Select a project that is visible but not mission-critical, allowing you to work out the kinks in a lower-risk environment. An early win is the best catalyst for change.

Start by applying your new governance process from start to finish on a carefully selected project. Whether you're vetting a new AI Automation as a Service provider or developing a predictive model, document every step. Our expert guidance on creating an effective AI implementation workflow can provide a valuable structure here.

Stage 4: Monitoring and Scaling

Responsible AI isn't a "set it and forget it" discipline. Once your pilot is done and you’ve ironed out the wrinkles in your process, the focus shifts to continuous monitoring and a gradual, organization-wide rollout. As you build out your strategy, you can even start thinking about more advanced applications, like new AI solutions for cognitively impaired users.

This final stage is an ongoing loop that includes:

Implementing monitoring tools: Use technology to keep an eye on model performance, drift, and bias in real-time.

Training and education: Roll out training programs to make sure every employee understands their role in responsible AI.

Iterating your framework: Regularly review and update your policies based on new regulations, emerging technologies, and the lessons you've learned along the way.
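To give a flavor of what drift monitoring involves, here is a minimal sketch of the Population Stability Index (PSI), a metric often used to compare a feature's distribution at training time with what the model sees in production. The function name, the bin count, and the sample data are assumptions for illustration. Dedicated monitoring tools handle this, and much more, out of the box.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one numeric feature.

    Both inputs must be non-empty sequences of numbers. Bin edges are taken
    from the baseline so that both samples are measured on the same scale.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical data: a baseline feature and a production sample shifted upward.
baseline = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in baseline]

print(population_stability_index(baseline, baseline))
print(population_stability_index(baseline, shifted))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a shift worth investigating, though the right threshold depends on the feature and the stakes. Running a check like this on a schedule, and alerting the model's owner when it trips, is one way to turn "continuous monitoring" into a concrete task.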

This four-stage approach turns a daunting challenge into a series of achievable steps, helping you build a resilient and trustworthy AI program that can grow with your business.

You Don't Have to Go It Alone

Building a responsible AI program from the ground up is a serious commitment, but it's not a journey you have to take by yourself. As this guide has laid out, Responsible AI isn't a one-and-done project; it’s a continuous process and a core part of any modern business strategy. Having the right partner can be a game-changer.

Turning broad principles into real-world business practices is a tricky balancing act. It demands both a high-level strategic vision and deep technical know-how. You're essentially weaving ethical guardrails into every single step of your AI lifecycle, from the initial AI requirements analysis all the way to monitoring systems live in the wild. This is how you ensure your innovations aren't just powerful, but also fair, transparent, and accountable.

From Good Intentions to a Real Competitive Edge

When you get Responsible AI right, it’s about more than just ticking compliance boxes. You’re actually turning your ethical commitments into a powerful competitive advantage by building genuine trust with customers and stakeholders. This is where expert guidance really proves its worth.

The real win with a Responsible AI program isn't just avoiding risks. It's about opening up new possibilities by creating technology that people genuinely trust. That trust is what builds lasting brand loyalty and sustainable growth.

At Ekipa, our specialty is helping organizations close that gap between ambition and execution. Our expert team provides the strategic guidance and hands-on support you need to make these principles a part of your company's DNA.

Whether you're starting with a comprehensive Custom AI Strategy report or you need help integrating new AI tools for business, we've got your back.

Our approach isn't just theoretical. We blend deep expertise in AI strategy consulting with practical, sleeves-rolled-up implementation. We'll help you design governance frameworks that actually work, pick the right tech for the job, and give your teams the confidence to innovate responsibly. Don't let the complexity of it all hold you back.

Connect with our expert team today and let's start building your responsible AI future, together.

Frequently Asked Questions about Responsible AI

When it comes to putting responsible AI into practice, leaders always have great questions. Let's tackle some of the most common ones to help you bridge the gap between your strategy and a successful rollout.

If you have more questions, we've compiled a much longer list over on our complete FAQ page.

Where on Earth Do We Start?

The best starting point is always a candid look in the mirror. You need a thorough AI maturity and risk assessment. Before you can even think about building a framework, you have to get a clear picture of what you're working with. What AI systems do you already have? Where are the hidden risks, like biased data lurking in your training sets? Most importantly, what does "responsible" even mean for your company and your industry?

Putting together a cross-functional team to lead this audit is a fantastic first move. If you want to get a running start, engaging in AI strategy consulting can help establish that crucial baseline before you invest heavily in a full-blown governance structure.

Is This Just a "Big Company" Problem?

Not at all. Responsible AI is for everyone. While a massive corporation might be under a brighter regulatory spotlight, startups and smaller businesses have a golden opportunity to build incredible brand trust from day one.

Think of it as a competitive advantage. For a smaller company, embedding fairness and transparency into your culture right from the beginning is a powerful way to stand out. It’s a scalable approach that customers, investors, and partners are actively looking for.

How Do We Actually Measure the ROI?

Calculating the return on investment for responsible AI isn’t just about the hard numbers—it’s about the value you create and the disasters you avoid.

The Tangible ROI: You can directly measure things like reduced compliance fines, lower customer churn because people trust you more, and faster project timelines because you've already sorted out the risks.

The Intangible ROI: This is where the magic happens. You’ll see it in your brand’s reputation, in fierce customer loyalty, and even in your ability to attract top talent who want to work for a company that does the right thing.

Honestly, the biggest ROI is often in the catastrophic costs you don't pay. A single ethical misstep or public scandal can be devastating, and this is your best insurance policy against that.

Are There Tools to Help Automate This?

Yes, thankfully! A whole ecosystem of AI tools for business has emerged to help automate and monitor these practices. You can find platforms that detect bias in real-time, explainability (XAI) tools that make "black box" models transparent, and privacy solutions that handle things like data anonymization automatically.

The key is choosing tools that fit your specific governance framework, not the other way around. A good AI strategy consulting tool can help you pinpoint the right tech for your unique needs and the systems you already have in place.

What Are Some Real-World Use Cases of Responsible AI?

Responsible AI is being applied across many sectors. In finance, it's used to create fairer loan application models that reduce historical bias. In healthcare, it helps develop diagnostic tools that are tested on diverse population data to ensure accuracy for everyone. You can explore more real-world use cases to see how different industries are implementing these principles.

How Can I Build Trust with My Team and Stakeholders?

Building trust starts with transparency and education. Involve your team in creating the governance framework from the beginning. Share your company's AI principles openly and provide ongoing training. For external stakeholders, be transparent about how your AI systems work and what data you use. Naming a clear point of contact for AI questions, like our expert team, also demonstrates accountability and reinforces trust.

Ready to build a responsible AI framework that doesn't just check a box, but creates real business value? At Ekipa AI, we turn these complex principles into clear, actionable strategies. Start your AI transformation with us today.