A Guide to Regulatory Compliance for AI in Medical Devices

Discover the essentials of regulatory compliance for AI in medical devices, including risk management, approvals, and post-market obligations.

Getting regulatory compliance right for AI-powered medical devices means working through a complex web of rules from bodies like the FDA in the U.S. and various authorities in the EU. The goal is always the same: ensure patient safety and prove the product actually works.

But here's the twist. Unlike a physical piece of medical hardware, AI software is designed to learn and adapt. This calls for a totally different regulatory mindset, one that looks at the entire product lifecycle, from the quality of the data it's trained on to how it's monitored long after it's on the market. Nailing this proactive approach isn't just about getting a product approved; it's fundamental to earning and keeping public trust.

The New Reality of AI Medical Device Regulation

The rulebooks of yesterday simply weren't written for the adaptive algorithms of today. Regulating a scalpel is one thing; its function and risks are predictable and fixed. But how do you regulate software that constantly learns from new data? It's less like managing a tool and more like managing a living system, which presents a whole new class of challenges for both developers and the agencies tasked with ensuring safety. This is the new reality everyone in the healthcare AI space is facing.

This shift requires a modern game plan, one that moves past the idea of a one-and-done approval and embraces continuous oversight. A solid compliance strategy isn’t just the last box to check; it’s a non-negotiable part of the entire AI Product Development Workflow.

The Rise of Software as a Medical Device

At the center of this new regulatory world is a concept called Software as a Medical Device (SaMD). This isn't software that just runs on a device; it is the device.

Think of a smartphone app that analyzes a photo of a mole to check for signs of skin cancer, or a cloud-based algorithm that flags early signs of patient deterioration from their electronic health records. These are SaMD, and they're regulated with the same seriousness as any traditional medical hardware.

The core challenge is that AI-based SaMD can change its performance as it learns from new data. This "learning" capability, while powerful, introduces risks like algorithmic bias and performance drift that traditional regulations were not designed to handle.

The market data makes this evolution crystal clear. The FDA has seen explosive growth in authorizations of AI-enabled medical devices, with the cumulative total projected to exceed 1,250 devices by July 2025. Digging into the numbers, one critical analysis found that 96.7% of these devices were cleared through the 510(k) pathway, which hinges on showing that the new device is substantially equivalent to one already legally on the market.

Key Global Regulators Shaping the Future

To succeed, you have to think globally, because different regions have their own distinct rulebooks. As we get deeper into this evolving field, following conversations around topics like the future of AI in teleradiology can provide valuable context on the challenges and opportunities ahead. Getting to know the key players is the first step toward building a compliance strategy that works across borders.

To help you get started, here's a quick look at the primary international authorities and what they're focused on.

Key Global Regulatory Bodies for AI Medical Devices

| Regulatory Body | Region | Primary Focus for AI/ML |
|---|---|---|
| U.S. Food and Drug Administration (FDA) | United States | Focuses on a total product lifecycle approach, including Predetermined Change Control Plans (PCCPs) to manage algorithm updates post-approval. |
| European Union (MDR & IVDR) | European Union | Enforces stringent requirements under the Medical Device Regulation (MDR) and In Vitro Diagnostic Regulation (IVDR), with the EU AI Act adding another layer of oversight as its obligations phase in. |
| International Medical Device Regulators Forum (IMDRF) | Global | Develops harmonized guidance documents on SaMD classification, clinical evaluation, and quality management to promote regulatory convergence. |

Keeping these different frameworks in mind is crucial as you plan your product's journey from an idea to a tool that clinicians can rely on.

Building Your Core Compliance Framework

A solid regulatory strategy isn't some last-minute checklist you power through before launch. It's the foundation you build your product on, right from day one. When you approach compliance this way, it stops being a hurdle and starts becoming a competitive advantage. Thinking about these core elements early is a non-negotiable part of developing effective AI solutions.

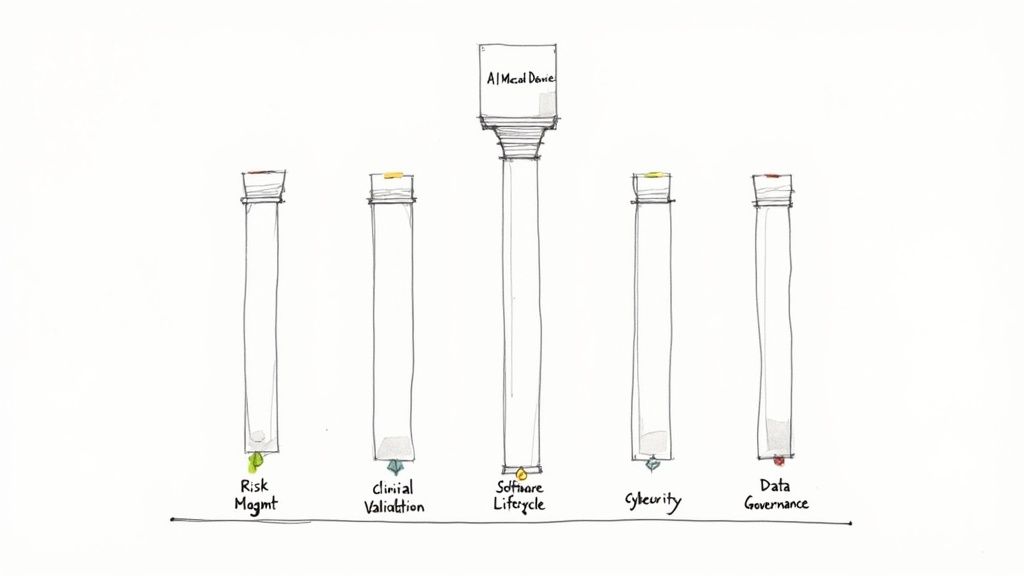

These five pillars give you the structure needed to prove your device is both safe and effective. Each one tackles a unique challenge AI presents in a clinical setting, from sizing up risks before you write a line of code to monitoring performance long after it’s in use.

Risk Management (ISO 14971)

When it comes to medical devices, risk management is all about the international standard ISO 14971. Traditionally, this meant thinking about physical failures—a part breaking or a mechanical glitch. But with AI, the whole concept of "harm" gets a lot bigger, bringing a new class of algorithm-specific risks into the picture.

Think about an AI tool designed to analyze medical scans. A classic risk might be the software crashing. An AI-specific risk, however, is far more subtle and dangerous: algorithmic bias. This is where the model performs poorly for certain groups of people because the data it was trained on wasn't representative. A proper risk management plan has to see these digital-age dangers coming and have a plan to stop them, making it a critical part of any AI requirements analysis.

Clinical Validation and Performance

You can't just tell regulators your AI model works. You have to prove it with solid clinical evidence. This is all about demonstrating that your device delivers a real, meaningful benefit in a clinical setting and performs exactly as you claim it will. And it's about much more than just hitting a certain accuracy score.

Regulators need to see that you've validated your model against a "clinical ground truth," which is usually determined by expert human clinicians. It also means proving the model is reliable across different patient populations, in various clinical environments, and with different types of equipment. A meticulously documented validation process is your ticket to market approval and a key focus of our AI Strategy consulting tool.

At its core, clinical validation is about answering a simple question with complex data: "Does this AI tool reliably help improve patient outcomes in the messy, unpredictable environment of actual healthcare?"

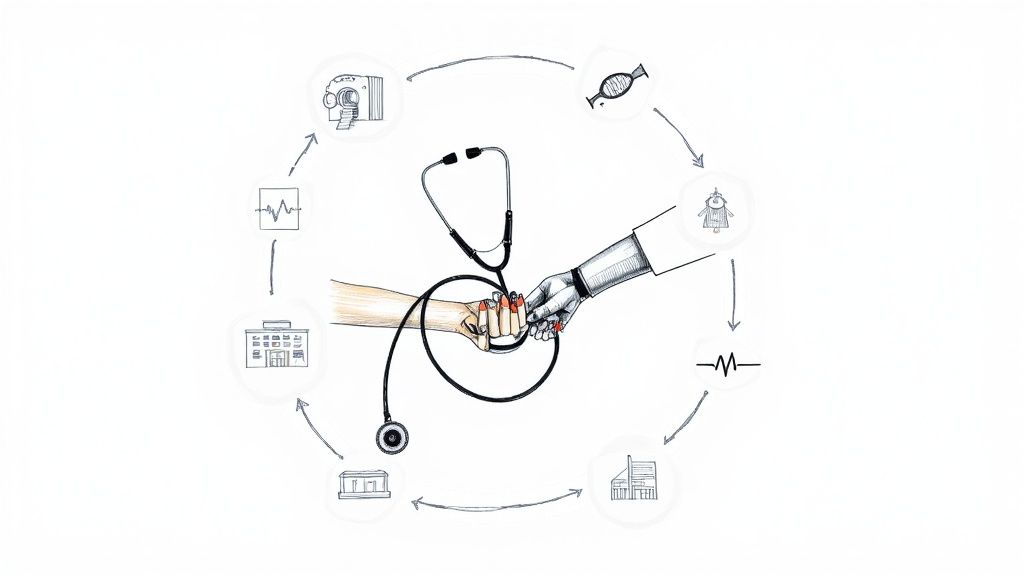

Software Lifecycle Management (IEC 62304)

The standard IEC 62304 lays out the rulebook for managing software from the first spark of an idea all the way to its retirement. This standard is especially critical for AI because the "software" isn't a fixed product; it’s designed to learn and evolve.

Your lifecycle process needs to cover a few key areas:

- Data Management: How are you collecting, labeling, and protecting the datasets used for training and testing?

- Model Training: What's your process for documenting the architectures, parameters, and methods used to build the model?

- Change Control: What is your ironclad process for updating or retraining the model without accidentally introducing new risks?

This disciplined approach ensures every single change is intentional, thoroughly tested, and safe. It's the backbone of a development process that earns trust, and properly managing this lifecycle is a service offered through AI Automation as a Service.

Cybersecurity

Network-connected AI medical devices are a tempting target for cyberattacks. A security breach could expose sensitive patient data or, in a nightmare scenario, alter how the device functions and cause direct patient harm.

Regulators, especially the FDA, now demand a robust cybersecurity plan that includes threat modeling, vulnerability management, and secure coding practices. This isn't something you can bolt on at the end. Security has to be baked into the device from the ground up, and building powerful internal tooling can make managing these security protocols much more efficient.

Data Governance

Data is the fuel for any AI system, so it’s no surprise that how you manage it is under a regulatory microscope. This final pillar covers your entire data pipeline, ensuring you maintain quality and integrity while protecting patient privacy under regulations like HIPAA and GDPR.

Strong data governance means you have clear, documented rules for how data is acquired, de-identified, stored, and used. It also means you’re actively looking for and correcting biases in your datasets to make sure your AI tool is fair for everyone. A detailed Custom AI Strategy report can guide you in systematically tackling these five pillars, helping you build a compliance-ready foundation from the start.

Mastering Data Governance and Cybersecurity

When it comes to AI medical devices, think of data as the fuel and security as the steel chassis. Get either one wrong, and you're headed for a crash. Regulators scrutinize these two areas more than almost any other because they tie directly to patient safety and trust. A compromised dataset or a security breach isn't just a technical glitch; it's a full-blown clinical crisis waiting to happen.

This is why regulatory bodies want to see more than just promises on paper. They demand a solid, well-documented game plan for both data governance and cybersecurity. Strong data governance is the bedrock of any safe and effective AI, and this foundational work is a key component of comprehensive AI strategy consulting. Without it, even the most brilliant algorithm is built on quicksand.

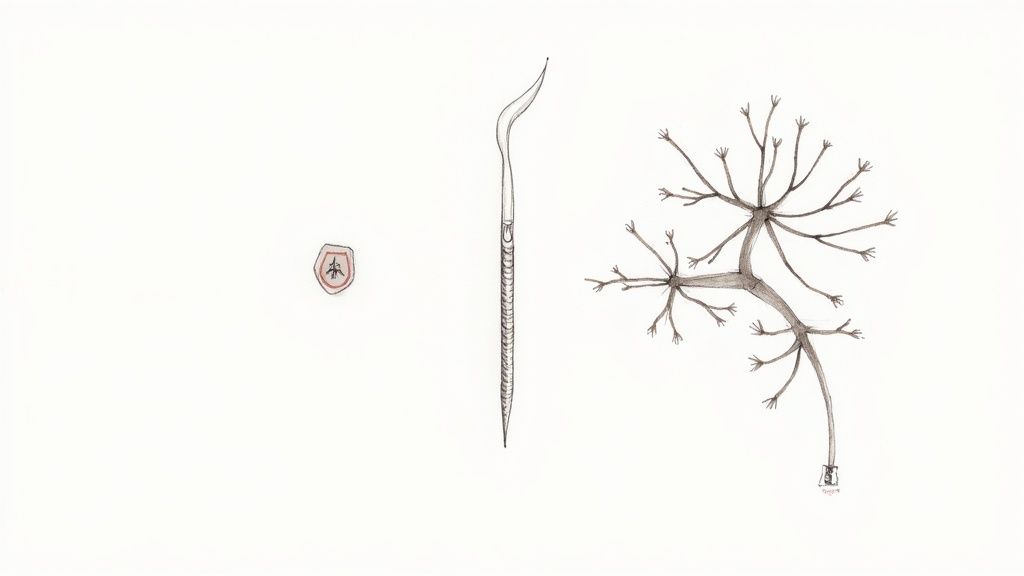

The Mandate for High-Quality, Unbiased Data

We’ve all heard the old saying, “garbage in, garbage out.” In medical AI, this isn't just a tech cliché; it’s a serious warning. Feeding an algorithm poor-quality or biased data can lead to skewed results, missed diagnoses, and glaring health inequities. Regulators are all too aware of this risk, making data integrity an absolute must-have for compliance.

This means your datasets can’t just be big. They need to be:

- Representative: The data has to mirror the real-world patient population you intend to serve, covering a full spectrum of ages, genders, ethnicities, and clinical presentations.

- Accurately Annotated: The "ground truth" labels you use to train and test your model must be spot-on. This often means getting multiple clinical experts to verify them for precision and consistency.

- Traceable: You need a crystal-clear audit trail. Where did the data come from? How was it cleaned and processed? How was it used to build the final model?
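To make the traceability point concrete, here is a minimal sketch of a dataset manifest entry. It records where a file came from, how it was processed, which split it belongs to, and a SHA-256 fingerprint of the exact bytes used. The class, function, and field names are hypothetical and chosen for illustration only; they are not taken from any regulation or standard.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    """One traceable manifest entry (all field names are illustrative)."""
    record_id: str
    source_site: str          # where the data came from
    processing_steps: tuple   # how it was cleaned, in order
    split: str                # "train", "validation", or "test"
    sha256: str               # fingerprint of the exact bytes used


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def build_record(record_id, source_site, processing_steps, split, data: bytes):
    """Create a manifest entry for one file at the moment it enters the dataset."""
    return DatasetRecord(
        record_id, source_site, tuple(processing_steps), split, fingerprint(data)
    )


def verify(record: DatasetRecord, data: bytes) -> bool:
    """True if the bytes being checked still match what the manifest recorded."""
    return fingerprint(data) == record.sha256
```

Calling `verify` on a stored file before each training run is one simple way to show an auditor that the data behind a given model version has not silently changed.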

Regulators across the globe are writing these principles into law and guidance. The European AI Act (AIA), together with guidance from the Medical Device Coordination Group (MDCG), now explicitly calls for datasets used for training, validation, and testing to have the right statistical properties and be rigorously vetted for bias. You can get more perspective on FDA oversight on the Bipartisan Policy Center's website.

Navigating Patient Privacy in the AI Era

Beyond data quality, protecting patient privacy is a sacred trust. Strict regulations like the Health Insurance Portability and Accountability Act (HIPAA) in the U.S. and the General Data Protection Regulation (GDPR) in Europe lay down the law for handling patient health data, which HIPAA terms Protected Health Information (PHI). When you're building your compliance framework, it's essential to dig into the specifics, like understanding HIPAA compliance for AI voice agents.

Your data governance framework has to ensure every piece of patient data is properly de-identified before it ever touches a training model. This isn't just about removing names; the process must be irreversible to prevent re-identification, a major concern as massive datasets get combined.
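One way to get a rough read on re-identification risk is a k-anonymity check: count how many records share each combination of quasi-identifiers (attributes that are not names but can still narrow down a person). The sketch below is an illustration only. The function name and the example column names are assumptions, and passing a check like this is not, on its own, proof of compliance with HIPAA or GDPR.

```python
from collections import Counter


def smallest_group_size(rows, quasi_identifiers):
    """Return k: the size of the smallest group of rows that share
    the same values for every quasi-identifier column."""
    if not rows:
        raise ValueError("rows must not be empty")
    groups = Counter(
        tuple(row[q] for q in quasi_identifiers) for row in rows
    )
    return min(groups.values())


# Hypothetical de-identified rows; column names are illustrative.
rows = [
    {"age_band": "40-49", "zip3": "021", "finding": "nodule"},
    {"age_band": "40-49", "zip3": "021", "finding": "clear"},
    {"age_band": "50-59", "zip3": "021", "finding": "clear"},
]
k = smallest_group_size(rows, ["age_band", "zip3"])
```

A result of `k = 1` means at least one record is unique on those attributes, which is exactly the kind of record that becomes easier to re-identify once datasets are combined.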

Ignoring these privacy laws doesn’t just put you in legal jeopardy; it shatters the trust of patients and clinicians. Your commitment to protecting data needs to be transparent and verifiable, just as we outline in our detailed privacy policy.

Building a Fortress Around Your AI Medical Device

Cybersecurity can’t be a feature you tack on at the end. It has to be baked into the device's DNA from day one. The FDA has set clear expectations for manufacturers to shield their devices from ever-evolving cyber threats that could expose patient data or, far worse, hijack the device’s functionality.

This means adopting a Secure Product Development Framework (SPDF)—a structured process for managing security risks from concept to retirement. A core part of any good SPDF is threat modeling, where your team actively brainstorms what could go wrong and designs defenses before a single line of code is written.

Think of it like designing a secure building. You don't construct the whole thing and then just add a lock to the front door. You plan for security from the blueprint stage, considering everything from reinforced windows and alarm systems to fire escapes.

A robust cybersecurity plan must include:

- Secure Design: Weaving security controls directly into the software and hardware from the very beginning.

- Risk Management: Actively identifying, evaluating, and mitigating cybersecurity risks.

- Verification and Validation: Putting the device through its paces with rigorous testing to make sure the security controls actually work.

- Post-Market Monitoring: Keeping a constant watch for new threats and being ready to deploy patches and updates quickly.

By mastering data governance and cybersecurity, you build a foundation of trust and safety. It’s what satisfies regulators, earns the confidence of clinicians, and ultimately protects the very patients your device is meant to help.

Your Practical AI Compliance Implementation Checklist

Turning a mountain of regulations into a clear, step-by-step plan is the real secret to getting your AI-powered medical device to market. Instead of tackling compliance as one giant, intimidating task, it’s much smarter to break the journey down into manageable phases.

This checklist will walk you through the process, from the initial "what if?" idea all the way to post-market success. Think of it as a roadmap for your team to build a solid, confident approach.

The diagram below shows how everything is connected. It’s not just about building a great algorithm; it’s about a continuous flow from clean data to a secure model, all leading to a protected device out in the real world.

What this really drives home is that security isn't something you bolt on at the end. It starts the moment you select your first piece of data and runs through the entire life of the product.

Phase 1: Pre-Development Planning

This is where you lay the foundation. Getting these early steps right will save you from expensive rework and frustrating regulatory delays later on. You have to know your destination before you start building the car.

- Define a Crystal-Clear Intended Use: Be incredibly specific. Is your AI tool meant for initial diagnosis, simple screening, or complex treatment planning? This single decision dictates its risk classification and the entire regulatory path you'll follow.

- Conduct a Preliminary Risk Analysis: Right out of the gate, start identifying potential hazards using ISO 14971 as your guide. This isn't just about software bugs; it includes algorithmic bias and data privacy risks. This early assessment will shape everything you do next.

- Establish a Quality Management System (QMS): Set up a QMS that meets standards like ISO 13485. This is the operational backbone that will govern all your processes, from design controls to document management.

Phase 2: Development and Validation

With a solid plan in hand, you can shift your focus to actually building and proving your AI model. This phase is all about meticulous documentation and generating the hard evidence you need to show your device is safe and effective. A structured AI Product Development Workflow is non-negotiable here.

- Curate and Document Datasets: You need to be able to trace every piece of data. Meticulously document the source, selection criteria, and characteristics of your training, testing, and validation sets. Most importantly, ensure they truly represent the patient population you plan to serve.

- Perform Algorithmic Bias Assessment: Don't just hope for fairness—prove it. Actively test your model's performance across different demographic subgroups (age, gender, ethnicity) to find and fix any hidden biases.

- Conduct Clinical Validation: Design and run studies that produce robust clinical evidence. You need to show that your device performs as promised and delivers a real, meaningful clinical benefit.

- Implement a Secure Product Development Framework (SPDF): Build cybersecurity in from the start. This means integrating practices like threat modeling and vulnerability management directly into your development lifecycle, not as an afterthought.
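As a rough illustration of the bias assessment step, the sketch below computes sensitivity (true positive rate) separately for each demographic subgroup and flags any subgroup that trails the best-performing one by more than a chosen margin. The function names, the record layout, and the 0.05 margin are all assumptions made for the example; your own acceptance criteria would need clinical and statistical justification.

```python
from collections import defaultdict


def subgroup_sensitivity(records):
    """Compute sensitivity per subgroup.

    `records` is an iterable of (subgroup, y_true, y_pred) with binary labels.
    Subgroups with no positive cases are reported as None.
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    groups = set()
    for group, y_true, y_pred in records:
        groups.add(group)
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    result = {}
    for g in sorted(groups):
        positives = tp[g] + fn[g]
        result[g] = tp[g] / positives if positives else None
    return result


def flag_gaps(per_group, max_gap=0.05):
    """Return subgroups whose sensitivity trails the best subgroup by more than max_gap."""
    scored = {g: s for g, s in per_group.items() if s is not None}
    if not scored:
        return []
    best = max(scored.values())
    return sorted(g for g, s in scored.items() if best - s > max_gap)
```

The same pattern extends to specificity or any other metric you report. The point is that performance gets broken out by subgroup instead of being averaged away in a single headline number.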

Success in this phase is measured by the quality of your evidence. Regulators are looking for a clear, unbroken story that connects your initial claims to your final validation data, proving your device is both safe and effective.

Phase 3: Regulatory Submission

Now it’s time to package all your hard work into a coherent submission for regulators like the FDA or a Notified Body in the EU. Your job is to tell a compelling and complete story about your device, leaving no room for doubt.

- Draft a Predetermined Change Control Plan (PCCP): This is absolutely critical for any adaptive AI model. A PCCP pre-defines the scope of future algorithm changes you can make without needing to go through a full re-submission for every minor update.

- Prepare All Required Documentation: Gather everything. Assemble your technical file, making sure it includes your risk management reports, clinical validation data, software documentation, and cybersecurity reports.

- Engage with Regulators: Don’t just throw your submission over the wall and hope for the best. Use programs like the FDA's Q-Submission to get feedback on your strategy before you formally submit. This can dramatically reduce the risk of getting blindsided by questions or rejection.

Phase 4: Post-Market Monitoring

Getting that clearance or approval is a huge milestone, but it’s not the finish line. For AI medical devices, compliance is an ongoing commitment. You have to monitor and maintain performance in the real world to prove your device remains safe and effective over its entire lifespan.

- Set Up a Real-World Performance Monitoring System: You need to actively collect and analyze data on how your device is performing after it’s been deployed. This is the only way to catch performance degradation or "model drift" before it becomes a problem.

- Establish a Feedback Loop: Create simple, clear channels for users to report issues. More importantly, have a process for your team to analyze that feedback and use it to inform future updates and improvements.

- Maintain and Execute Your PCCP: When you do need to make one of those pre-approved changes to your algorithm, follow your PCCP protocols to the letter and keep perfect records of everything you did.
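A real-world performance monitor can start out very simple. The sketch below keeps a sliding window of cases where the model's output was later confirmed or contradicted by a clinician, and raises a flag when agreement falls below a threshold. The class name, window size, and threshold are hypothetical; a production system would also need logging, access controls, and statistically justified limits.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track agreement between model output and confirmed ground truth
    over a sliding window of the most recent cases."""

    def __init__(self, window_size=200, alert_threshold=0.90):
        self.window = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, prediction, confirmed_label):
        """Store whether the model agreed with the confirmed label."""
        self.window.append(prediction == confirmed_label)

    def accuracy(self):
        """Fraction of agreeing cases in the window, or None if empty."""
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def needs_review(self):
        """True only once the window is full and accuracy is below the threshold."""
        if len(self.window) < self.window.maxlen:
            return False
        return self.accuracy() < self.alert_threshold
```

Waiting for a full window before alerting avoids false alarms from the first handful of cases, at the cost of a slower first signal.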

Navigating this checklist requires deep expertise and attention to detail. This is precisely where our expert team steps in, helping organizations confidently manage each step to ensure nothing gets missed.

Avoiding Common and Costly Compliance Pitfalls

In the high-stakes world of medical technology, getting your regulatory strategy right is everything. It’s less like following a checklist and more like navigating a minefield. One misstep can cause devastating delays, get your submission rejected, or even force a recall after your product is already on the market.

Learning from the mistakes of others isn’t just good advice; it’s a core survival strategy. So many of these errors aren't about deep technical flaws. They’re simple oversights that spiral into massive, expensive problems. By understanding where teams often trip up, you can build a smarter, more proactive plan from day one. This kind of foresight is exactly what effective AI strategy consulting provides and is crucial for anyone building serious AI solutions.

The Ambiguity of Intended Use

One of the most common—and damaging—mistakes I see is a poorly defined "Intended Use" statement. This single sentence is the bedrock of your entire regulatory submission. It dictates your device's risk classification and, as a result, the level of scrutiny it will face from regulators.

If it's vague, you're building on a shaky foundation. For instance, saying your AI tool "assists with radiological analysis" is practically useless. Does it simply highlight potential areas of interest for a radiologist to review (a lower-risk function)? Or does it make an autonomous diagnosis all on its own (a much, much higher-risk function)? This lack of clarity can send you down the wrong regulatory path entirely, wasting months or even years on a submission that was destined to fail from the start.

Proactive Strategy: Be relentlessly specific. Clearly state who the user is (e.g., a board-certified radiologist), the exact clinical situation (e.g., screening for lung nodules in asymptomatic patients), and precisely what role the AI plays in the workflow. This precision locks in the correct classification and tells regulators you know exactly what you've built.

The Hidden Costs of Poor Data Annotation

Great data is the lifeblood of any medical AI. But the truth is, your data is only as good as the labels attached to it. Poor, inconsistent data annotation is a silent killer of AI projects. It quietly injects biases and errors that completely undermine your model's performance and credibility.

Think about an AI designed to spot a specific type of skin lesion. If the training data was labeled by several dermatologists who all had slightly different standards and no consensus process, the algorithm will bake that inconsistency right into its logic. When regulators inevitably dig into your data, these discrepancies will raise major red flags, casting doubt on your entire clinical validation. The result? They could ask for a complete re-validation—a truly gut-wrenching and expensive setback. Looking at various real-world use cases makes it clear: solid data governance isn't optional.

You absolutely have to treat data annotation with the same discipline you would any other clinical process.

- Develop a Detailed Annotation Protocol: Create a crystal-clear guide that defines every label and provides rules for handling ambiguous cases.

- Use Multiple, Trained Annotators: Never rely on a single person. Have at least two qualified experts review each data point to ensure you have consensus.

- Conduct Regular Quality Audits: Set up a schedule to periodically review samples of your annotated data. This helps you catch and correct any "protocol drift" before it contaminates your dataset.
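One common way to put a number on annotator consistency during those audits is Cohen's kappa, which measures how much two annotators agree beyond what chance alone would produce. The sketch below is a plain-Python version for two annotators; the function name is just for illustration, and what counts as an acceptable kappa is something your annotation protocol would have to define.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty set of items.")
    n = len(labels_a)
    # Observed agreement: share of items where both chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    # Expected agreement if each annotator labeled at random with
    # their own label frequencies.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)
```

A kappa that drops between audit rounds is an early hint that annotators are drifting away from the protocol.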

Ignoring the Reality of Model Drift

Getting that initial regulatory approval is a huge milestone, but it is not the finish line. A huge pitfall is failing to plan for model drift. This is the natural, inevitable decline in an AI's performance over time as real-world data starts to look different from the pristine data it was trained on.

An algorithm that performed brilliantly in a controlled clinical trial can easily stumble when it encounters images from new hospital scanners or patients from a different demographic. Ignoring this is a critical mistake. Regulators don't just expect you to launch a product; they expect you to have a robust post-market surveillance plan to monitor its real-world performance continuously. Without one, you risk being the last to know that your model's accuracy is declining—a discovery you don't want to make after it leads to adverse patient events, regulatory action, and a public relations nightmare. A solid post-market plan is a key deliverable that our expert team helps companies build.
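Drift in the inputs often shows up before drift in accuracy, because confirmed outcomes take time to arrive. A widely used statistic for comparing a feature's distribution at training time with its distribution in the field is the Population Stability Index (PSI). The sketch below is a minimal version for one numeric feature. The function name, the bin edges, and the small `eps` floor for empty bins are assumptions for illustration, and the alert level is something your team would need to set and justify.

```python
import math


def population_stability_index(reference, current, bin_edges, eps=1e-6):
    """PSI between two samples of one numeric feature, using fixed bin edges.

    Values below the first edge fall into the first bin; values at or
    above the last edge fall into the last bin.
    """
    def proportions(values):
        if not values:
            raise ValueError("each sample must contain at least one value")
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            idx = sum(v >= edge for edge in bin_edges)
            counts[idx] += 1
        total = len(values)
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / total, eps) for c in counts]

    ref = proportions(reference)
    cur = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))
```

A PSI near zero means the field data looks like the training data for that feature; larger values mean the distribution has shifted, for example after a site installs a new scanner.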

To bring these points together, it's helpful to see these common issues laid out side-by-side. The table below summarizes the most frequent stumbles we see teams make and offers clear, actionable strategies to keep you on the right path.

Common AI Compliance Pitfalls and Mitigation Strategies

| Common Pitfall | Potential Consequence | Proactive Mitigation Strategy |
|---|---|---|
| Vague "Intended Use" Statement | Incorrect risk classification, wasted resources on the wrong regulatory pathway, submission rejection. | Draft a hyper-specific statement defining the user, clinical condition, and the AI's precise function. Involve regulatory experts from day one. |
| Inconsistent Data Annotation | Biased or unreliable model performance, failed validation during regulatory review, need for expensive re-training and re-validation. | Establish a formal annotation protocol, use multiple trained annotators for consensus, and conduct regular quality audits on labeled data. |
| No Plan for "Model Drift" | Declining real-world performance goes undetected, potential for patient harm, regulatory penalties, or product recall. | Develop a comprehensive post-market surveillance (PMS) plan to continuously monitor model performance with real-world data and define triggers for retraining. |
| "Black Box" Algorithm | Regulators cannot assess the model's logic, leading to a lack of trust and a higher likelihood of rejection, especially for high-risk devices. | Focus on model explainability (XAI). Document the model's architecture, logic, and decision-making processes clearly. Be prepared to defend why it works. |
| Poor Cybersecurity Measures | Vulnerability to data breaches (violating HIPAA/GDPR), risk of malicious model manipulation, loss of patient and provider trust. | Implement a secure software development lifecycle (SDLC), conduct regular penetration testing, and create a detailed threat model specific to the AI and its data. |

By proactively addressing these challenges, you're not just checking boxes for a regulator. You're building a safer, more effective, and more trustworthy product from the ground up. This strategic foresight separates the companies that succeed from those that get bogged down in preventable regulatory battles.

Building a Compliance Strategy That Lasts

The rulebook for AI is being written in real-time, which means a "one-and-done" approach to compliance is a surefire way to fall behind. The real goal isn't just to get your device cleared or approved once; it's to build a compliance framework that can adapt and evolve. This is how you stay ahead of the curve and maintain trust over the entire life of your product.

Getting this right means making regulatory thinking a part of your company's DNA. It's not a siloed job for the compliance department. It’s a collective effort, backed by smart internal tooling and the right partners.

Adopting a Living Quality Management System

Your Quality Management System (QMS) is the engine room of your compliance strategy. But for AI-powered medical devices, a traditional, paper-based QMS just won't cut it. You need an AI-ready QMS designed for the unique challenges of machine learning—things like managing enormous datasets, meticulously documenting model training, and controlling every software update.

This isn't a dusty binder on a shelf. It's a living system that should plug directly into your development pipeline, ensuring every single change is tracked, validated, and deployed under strict quality controls. For companies that need to build this from the ground up, specialized custom healthcare software development can be a huge asset.

The Strategic Role of a PCCP

For any algorithm that's designed to learn and change over time, the Predetermined Change Control Plan (PCCP) is your most important tool. This FDA-endorsed plan is a true game-changer. It gives you a pre-approved pathway for making specific, planned updates to your algorithm without having to go back to the FDA for every single tweak.

Think of a PCCP as a pre-authorized playbook for your AI's evolution. It lays out exactly what kinds of changes the model can make, the methodology you'll use to prove those changes are safe and effective, and how you'll keep an eye on performance.
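In engineering terms, the playbook idea can be mirrored by an automated gate in your release pipeline. The sketch below is a deliberately simplified, hypothetical stand-in: the class names, the change-type strings, and the two metrics are assumptions for illustration and do not reflect any FDA-defined format. It checks whether a proposed model update is a type of change the plan covers and whether it meets the acceptance criteria set in advance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChangePlan:
    """Hypothetical, simplified stand-in for the limits a PCCP might set."""
    allowed_change_types: frozenset
    min_sensitivity: float
    min_specificity: float


@dataclass(frozen=True)
class ProposedUpdate:
    change_type: str
    sensitivity: float
    specificity: float


def evaluate_update(plan: ChangePlan, update: ProposedUpdate):
    """Return (within_plan, reasons). An empty reasons list means the
    update stays inside the pre-specified boundaries."""
    reasons = []
    if update.change_type not in plan.allowed_change_types:
        reasons.append(f"change type '{update.change_type}' is not covered by the plan")
    if update.sensitivity < plan.min_sensitivity:
        reasons.append("sensitivity below the pre-specified acceptance criterion")
    if update.specificity < plan.min_specificity:
        reasons.append("specificity below the pre-specified acceptance criterion")
    return (not reasons, reasons)
```

Anything the gate rejects is a signal to stop and talk to your regulatory team, since a change outside the plan may need its own submission.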

Creating a Continuous Learning Loop

Long-term compliance ultimately hinges on a continuous feedback loop fueled by real-world data. Once your device is on the market, you have to actively monitor its performance to watch for "model drift." This is the natural tendency for an algorithm's accuracy to degrade over time as it encounters new data that looks different from what it was trained on.

This post-market surveillance provides the evidence you need to trigger an update according to your PCCP. It closes the loop, turning real-world insights into validated, safe improvements. This is how you build a resilient product that doesn't just meet the bar for compliance but constantly raises it. It's a complex journey, but guiding teams through it is exactly what our expert team does best.

Frequently Asked Questions

When you're wading into the regulatory waters of AI-powered medical devices, a lot of questions pop up. Let's tackle some of the most common ones I hear from teams navigating this space.

What’s the real difference between SaMD and SiMD?

Think of it this way: Software as a Medical Device (SaMD) is its own medical product. A great example is a mobile app that uses your phone's camera to analyze a mole and flag it for potential skin cancer risk. The software is the device.

On the other hand, Software in a Medical Device (SiMD) is the brains inside a piece of hardware. It's the essential code that makes an MRI machine or a modern pacemaker actually work. The regulatory approach is different because a standalone app (SaMD) has its own unique risks around data security and user error that aren't tied to a physical machine.

How does the FDA keep up with AI models that continuously learn?

This is a big one. The FDA's answer is a Predetermined Change Control Plan, or PCCP. It's a clever framework that lets a manufacturer get pre-approval for how their AI will learn and adapt once it's out in the real world.

Instead of submitting for new approval every time the algorithm updates, the PCCP lays out the ground rules in advance. You have to specify exactly what kinds of changes the model can make, the strict testing protocols you'll follow, and how you'll monitor its performance. It’s a way to allow for innovation within a tightly controlled, transparent, and safe process. It helps you manage your evolving AI tools for business without constantly going back to square one with regulators.

What's the single biggest hurdle in AI medical device compliance?

Hands down, the toughest part is proving the clinical validity and safety of your AI across every type of patient it might encounter. To do this, you need massive, high-quality datasets that truly represent the real world, which is incredibly expensive and logistically challenging to assemble.

If there's any hidden bias in your data—say, it was trained mostly on one demographic—the model’s performance can drop, and regulators will spot it immediately. The other major headache is explaining how a "black box" AI model makes its decisions in a way that satisfies auditors. Starting with a rock-solid AI requirements analysis is the only way to systematically plan for these challenges and build a case that will stand up to scrutiny.

Ready to build an AI strategy that embeds regulatory compliance from day one? The Ekipa AI platform helps you move from idea to execution with confidence. Get your Custom AI Strategy report in 24 hours and let our expert team guide you.