Your Guide to Responsible AI Implementation

A practical roadmap for responsible AI implementation. Learn proven strategies for AI governance, transparency, and compliance to build trust and drive growth.

Responsible AI implementation isn't just a buzzword; it's the real-world process of weaving ethical principles like fairness, transparency, and accountability directly into how you build and use AI. It’s about moving beyond talk and into action, setting up practical governance, ensuring fair data practices, and maintaining continuous oversight.

When done right, AI becomes a trustworthy competitive advantage instead of a looming liability.

Moving from AI Principles to Real-World Practice

Many companies have a set of AI principles, but that’s the easy part. The real challenge is making those principles a daily reality for your teams. A mature responsible AI program is much more than a policy document collecting digital dust; it’s a living, breathing system of clear governance and consistent oversight.

The gap between good intentions and actual practice is where most organizations stumble. Why? Because bridging that gap requires a genuine operational shift. It means threading ethical checks and balances through the entire AI lifecycle, from the first brainstorming session to long-term monitoring in production. The first step is often defining a clear, actionable path—something an AI strategy workshop can help crystallize, making sure your ethical goals and business outcomes are pulling in the same direction.

The Core Pillars of Implementation

To get responsible AI working in practice, you have to focus on a few key areas that all support each other. If you neglect one, the whole structure gets wobbly. As we explored in our AI adoption guide, moving from abstract ideas to solid, responsible systems is much easier when you lean on established guidelines, such as these AI agent best practices.

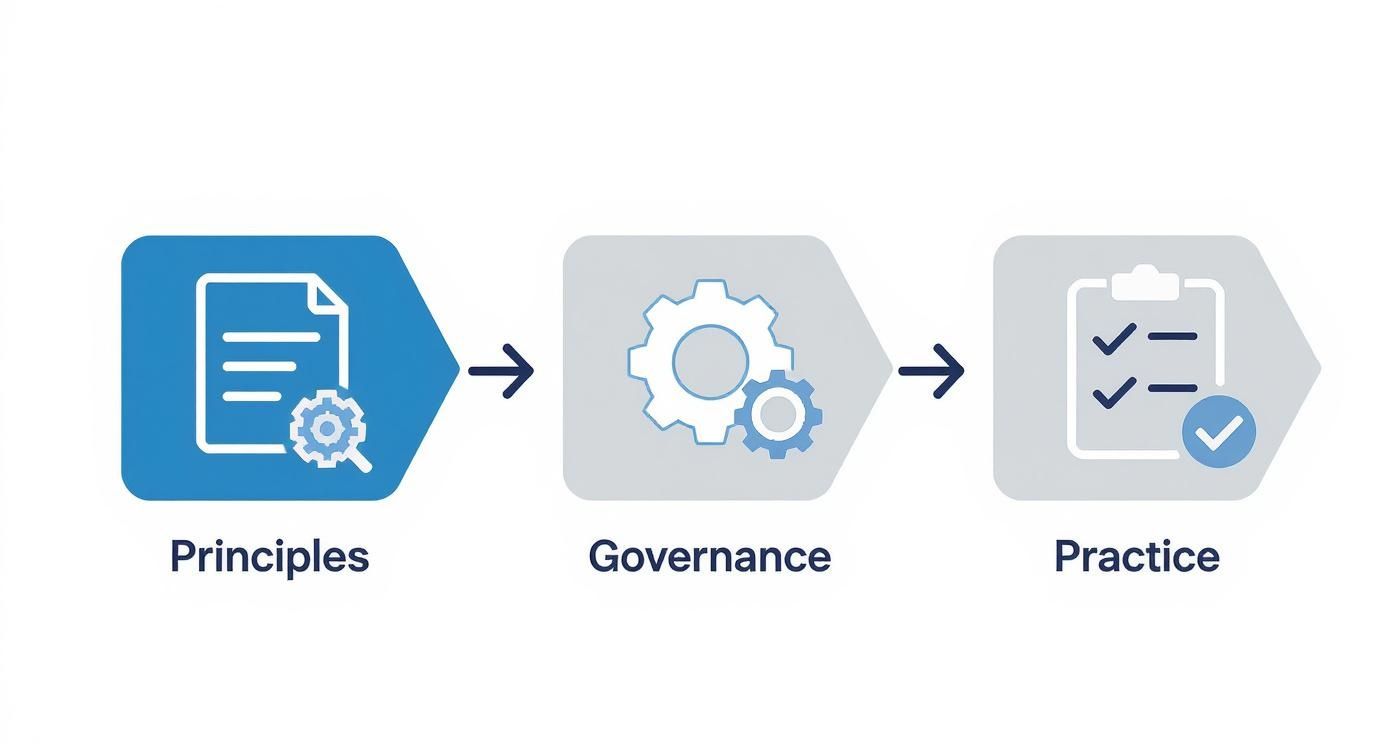

This journey from high-level principles to concrete, on-the-ground action is a structured process.

As you can see, the principles are just the starting point. It’s the governance and operational layers that provide the scaffolding needed to turn those ideas into repeatable, measurable actions.

Bridging the Strategy-to-Execution Gap

The biggest hurdle for most isn't a lack of desire to be ethical; it's the absence of a clear playbook for execution. Your teams need more than just a list of rules. They need practical tools, simple checklists, and clearly defined roles that fit right into their existing workflows. This is where the hard work of strategic planning pays off.

A responsible AI framework isn't a barrier to innovation. It's the guardrail that lets you accelerate with confidence, knowing you're building technology that is safe, fair, and true to your company's values.

Building this framework can't happen in a silo. It demands input from a mix of stakeholders—think legal, compliance, data science, and product management, all in the same room. A hands-on approach, like a dedicated AI workshop, is one of the best ways to get everyone aligned. It creates a shared understanding of the risks and opportunities and lets you build a practical roadmap together.

This kind of collaboration ensures the policies you create are not only solid but also usable and adopted across the entire organization. The ultimate goal is to make responsibility a natural part of how you innovate, not just a checkbox exercise at the end.

Building Your Responsible AI Governance Framework

Without a solid framework, "responsible AI" is just a buzzword—a nice idea with no teeth. This is where you build the operational backbone for ethical AI, moving from well-intentioned principles to concrete, day-to-day practices. The goal here is to bake accountability directly into your AI Product Development Workflow, making responsibility a core ingredient, not just a last-minute compliance check.

Think of this framework as the central nervous system for all your AI solutions. It guides how they're conceived, built, and managed over their entire lifecycle, giving you the structure needed to turn abstract concepts like "fairness" and "transparency" into specific, measurable actions.

Establishing an AI Ethics Committee

First things first: you need a dedicated team to steer the ship. Assembling a cross-functional AI ethics committee or review board is the most reliable way to get centralized oversight and ensure you're not making decisions in a silo. This is a team sport, not a task for a single department.

Your committee needs to be a healthy mix of technical and non-technical folks to get a complete picture of both the opportunities and the risks.

- Executive Sponsor: A C-level champion who can secure the resources and authority this initiative needs to succeed.

- Legal & Compliance Lead: The person who keeps an eye on the ever-shifting landscape of AI regulations.

- Data Science/ML Engineering Rep: Your technical expert who can explain model behavior, limitations, and what's actually feasible.

- Product Manager: The voice of the user and the business, ensuring ethical guardrails are part of the product roadmap from day one.

- Ethics Officer or Privacy Lead: The conscience of the group, laser-focused on societal impact and protecting user rights.

- Business Unit Representatives: People from HR, finance, or marketing who can ground every discussion in real-world business scenarios.

Getting this diverse group together is critical. It ensures that technical decisions are always weighed against business impact, legal risk, and ethical considerations.

A governance committee is the human-centric layer of your AI strategy, ensuring that technology serves the business without compromising on values. To make it effective, each member must have a clear mandate.

Below is a breakdown of the key players you'll want at the table and what they're responsible for.

| Role | Primary Responsibility | Key Contribution |
|---|---|---|
| Executive Sponsor | Champions the RAI initiative, secures budget, and ensures alignment with business strategy. | Provides top-down authority and removes organizational roadblocks. |
| Legal & Compliance Lead | Monitors regulatory changes and ensures AI systems comply with laws like GDPR, CCPA, and the EU AI Act. | Mitigates legal risk and translates complex regulations into actionable policy. |
| Data Science Lead | Oversees the technical implementation of fairness, transparency, and accountability in models. | Provides deep technical expertise on model limitations, bias detection, and explainability methods. |
| Product Manager | Integrates responsible AI principles into the product lifecycle, from ideation to post-launch monitoring. | Ensures products are designed with user safety and transparency in mind. |
| Ethics or Privacy Officer | Serves as the independent ethical voice, conducting impact assessments and guiding policy development. | Upholds the organization's ethical principles and advocates for user rights. |
| Business Unit Representative | Provides domain-specific context on how AI systems will impact specific operations (e.g., HR, Finance). | Grounds theoretical policies in practical, real-world use cases and consequences. |

This structure creates a system of checks and balances, ensuring no single perspective dominates the conversation.

Defining Roles and Accountabilities

Once you have your committee, the next job is to get crystal clear on who is responsible for what. Effective governance hinges on accountability. If ownership is vague, nothing gets done. You need to map specific responsibilities to specific roles across the organization.

Governance isn't about creating bureaucracy; it's about creating clarity. When everyone knows their part in upholding responsible AI principles, ethical practices become a shared and manageable responsibility.

This clarity has to extend far beyond the committee. For example, a data scientist might be accountable for documenting model bias, while a product manager is on the hook for clearly communicating an AI's limitations to customers. By distributing responsibility, you weave responsible practices directly into existing workflows instead of creating a separate, clunky process.

While many companies are starting to adopt responsible AI programs, there’s often a huge gap between what the policy says and what actually happens on the ground. One survey found that 58% of organizations saw responsible AI initiatives improve their ROI and efficiency. The key differentiator? Companies with mature frameworks—defined by clear governance and accountability—were nearly twice as likely to report that their AI practices were actually effective. You can read the full research about these responsible AI findings for more details.

Crafting Guiding Principles and Internal Policies

With your team and roles locked in, it’s time to create clear, practical principles that your teams can actually use. These can't be fluffy, generic statements. They need to be actionable rules that guide daily work.

For instance, instead of a vague principle like "Be fair," get specific: "We will actively test all models for demographic bias against protected attributes before deployment and document the results in a central repository."
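To show what "test and document" can mean in practice, here is a minimal Python sketch of a pre-deployment check. It assumes binary predictions (1 = favorable outcome) and one group label per applicant, and it uses the disparate impact ratio with the common "four-fifths" (0.8) threshold as a stand-in for whatever metric and cutoff your own policy specifies. The model ID, the group labels, and the JSON-lines file standing in for a "central repository" are all hypothetical.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone
from typing import Sequence


def selection_rates(preds: Sequence[int], groups: Sequence[str]) -> dict[str, float]:
    """Share of favorable predictions (1) within each group."""
    totals: dict[str, int] = defaultdict(int)
    favorable: dict[str, int] = defaultdict(int)
    for pred, group in zip(preds, groups):
        totals[group] += 1
        favorable[group] += pred
    return {g: favorable[g] / totals[g] for g in totals}


def bias_report(model_id: str, preds: Sequence[int], groups: Sequence[str],
                threshold: float = 0.8) -> dict:
    """Disparate impact ratio: lowest group selection rate divided by the highest."""
    rates = selection_rates(preds, groups)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {
        "model_id": model_id,
        "tested_at": datetime.now(timezone.utc).isoformat(),
        "selection_rates": rates,
        "disparate_impact_ratio": round(ratio, 3),
        "passed": ratio >= threshold,
    }


def append_to_repository(report: dict, path: str) -> None:
    """Append the report as one JSON line; a stand-in for a real central store."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(report) + "\n")


# Hypothetical validation-set predictions for two applicant groups.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = bias_report("resume-screener-v3", preds, groups)
print(json.dumps(report, indent=2))
```

Because every run produces a timestamped record, the "document the results" half of the principle becomes an audit trail rather than a one-off spreadsheet.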

These principles become the foundation for more detailed internal policies that cover the nitty-gritty of data privacy, model fairness, transparency, and security. These documents give your teams the specific instructions they need, creating a consistent approach across every project. Getting this structure right, often with the help of AI strategy consulting, is what turns your governance framework from a document that collects dust into a real-world driver of action.

Getting Real About Fairness and Transparency in Your Data and Models

Once you've got a governance framework sketched out on paper, the real work starts on the command line. This is where your high-level principles on fairness and transparency get stress-tested against the code, data, and algorithms that actually run your AI. Moving these ideas from the boardroom to the dev environment is what separates a genuine responsible AI program from a simple press release.

The objective here is to build systems that don't just work, but work for everyone. That means getting your hands dirty and proactively digging into your data for hidden biases. It also means cracking open those "black box" models so you can actually explain how they think. I've seen firsthand in many real-world use cases how catching these issues early can save a company from major reputational and financial headaches down the road.

Mitigating Bias at the Source: Your Training Data

You've heard it a million times: "garbage in, garbage out." With AI, it's never been more true. If your training data reflects historical societal biases (and let's be honest, much of it does), your model will learn those biases and can even amplify them. This isn't a remote possibility; it's the default outcome if you don't intervene.

Think about an AI model trained on decades of hiring data. It might quietly learn to favor candidates from specific universities or penalize applicants for taking a career break, simply because that's what the data reflects. Stopping this requires a rigorous audit of your data before you even start building.

Here’s what your teams should be doing:

- Audit Your Data Sources: Before a single line of code is written, ask tough questions. Where is this data coming from? Does it actually represent the diverse population your AI will interact with? Are we importing known industry biases?

- Analyze Your Features: Scrutinize every data point you're feeding the model. A feature like a zip code might seem harmless, but could it be acting as a proxy for sensitive attributes like race or income? It happens all the time.

- Augment Your Data: If you find your dataset is skewed, you have to fix it. Techniques like oversampling underrepresented groups or even using synthetic data generation can help create a more balanced and fair training environment.
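The three steps above can be sketched in plain Python. This is illustrative only, assuming each record is a dictionary with a hypothetical sensitive attribute (`group`) and a candidate proxy feature (`zip`): it measures group representation, estimates how strongly the feature reveals the sensitive attribute, and applies simple random oversampling. Real pipelines would use far larger samples and stronger statistical tests, and might use synthetic data instead of duplication.

```python
import random
from collections import Counter, defaultdict


def group_shares(records: list[dict], attr: str) -> dict[str, float]:
    """Share of records in each group of a sensitive attribute."""
    counts = Counter(r[attr] for r in records)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()}


def proxy_strength(records: list[dict], feature: str, attr: str) -> float:
    """Accuracy of guessing `attr` from `feature` alone (majority group per value).

    Compare against max(group_shares(...)), the accuracy of always guessing the
    overall majority group. A large gap suggests the feature leaks the attribute.
    """
    by_value: dict = defaultdict(Counter)
    for r in records:
        by_value[r[feature]][r[attr]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_value.values())
    return correct / len(records)


def oversample(records: list[dict], attr: str, seed: int = 0) -> list[dict]:
    """Randomly duplicate records from smaller groups until all groups match the largest."""
    rng = random.Random(seed)
    by_group: dict = defaultdict(list)
    for r in records:
        by_group[r[attr]].append(r)
    target = max(len(rs) for rs in by_group.values())
    balanced: list[dict] = []
    for rs in by_group.values():
        balanced.extend(rs)
        balanced.extend(rng.choices(rs, k=target - len(rs)))
    return balanced


# Hypothetical applicant records: zip code plus a sensitive attribute.
records = (
    [{"zip": "10001", "group": "A"}] * 5
    + [{"zip": "10001", "group": "B"}]
    + [{"zip": "20002", "group": "B"}] * 2
)
shares = group_shares(records, "group")
print("representation:", shares)
print("zip proxy accuracy:", proxy_strength(records, "zip", "group"),
      "vs baseline", max(shares.values()))
print("after oversampling:", group_shares(oversample(records, "group"), "group"))
```

In this toy data the zip code identifies the group correctly 87.5% of the time, against a 62.5% baseline from always guessing the majority group. A gap like that is the signal to investigate the feature before training on it.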

Making "Black Box" Models Explainable

One of the toughest parts of getting people to trust AI is the "black box" problem. Many of the most powerful models, such as deep neural networks, are so complex that even the people who built them can't fully trace why they made one decision over another. That kind of opacity is a massive roadblock for responsible AI.

Thankfully, the field of Explainable AI (XAI) gives us tools to peek inside the box.

Transparency isn't just a "nice-to-have," especially when AI is making critical financial calls. The CFPB has made it clear that lenders using complex algorithms, including AI models, must still give applicants specific and accurate reasons when they deny credit. In consumer lending, that makes explainability a legal requirement, not just a best practice.

Tools like SHAP and LIME are indispensable here. They help data scientists pinpoint which features carried the most weight in a model's final decision. This is how you debug bizarre outcomes, root out hidden biases, and give stakeholders—and customers—a straight answer when they ask, "Why?"
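SHAP is built on Shapley values from game theory: each feature's contribution is its average marginal effect on the model's output across all possible coalitions of the other features. The sketch below is not the SHAP library. It computes exact Shapley values by brute force for a tiny, hypothetical credit-scoring function, comparing one applicant against a single reference "baseline" applicant (a simplifying assumption; SHAP typically averages over a background dataset). The cost grows exponentially with the number of features, which is exactly why SHAP and LIME rely on approximations for real models.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float],
                   baseline: Sequence[float]) -> list[float]:
    """Exact Shapley attribution of model(x) - model(baseline) to each feature.

    Features outside a coalition are set to their baseline values.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                present = set(coalition)
                without_i = [x[j] if j in present else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


def credit_score(features: Sequence[float]) -> float:
    """Hypothetical scoring model with an interaction term."""
    income, history_years, debt_ratio = features
    return 0.5 * income + 0.2 * history_years - 1.5 * debt_ratio + 0.1 * income * history_years


applicant = [3.0, 2.0, 0.6]   # income (tens of thousands), years of history, debt ratio
baseline = [4.0, 5.0, 0.3]    # a hypothetical "typical approved applicant"
for name, value in zip(["income", "history_years", "debt_ratio"],
                       shapley_values(credit_score, applicant, baseline)):
    print(f"{name:>14}: {value:+.3f}")
```

A useful sanity check comes for free: the attributions always sum to the difference between the applicant's score and the baseline score (Shapley's "efficiency" property), so the explanation fully accounts for the decision.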

Weaving Fairness Checks into the Entire Model Lifecycle

Fairness isn't a checkbox you tick off right before deployment. It has to be a constant, disciplined practice baked into your entire development process, just like quality assurance is for traditional software.

This means setting up clear checkpoints and using specialized tools to automate fairness testing wherever you can. For organizations looking to make this process systematic, our VeriFAI platform is designed to help teams manage and document these crucial checks. It’s all about putting guardrails in place to operationalize responsible AI and ensure your models are meeting the ethical standards you’ve set. You can see how that works at https://www.ekipa.ai/products/verifai.

A solid lifecycle must include:

- Pre-Training Checks: Analyze the raw dataset for statistical bias before you even begin training.

- In-Training Mitigation: Use algorithms designed specifically to reduce bias while the model is learning.

- Post-Training Evaluation: Test the finished model against a whole suite of fairness metrics. How does it perform across different demographic groups? For example, does a loan approval model have a similar true positive rate for all applicants, regardless of gender?
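The post-training question in that last item (similar true positive rates across groups) is known as the "equal opportunity" criterion. A minimal sketch, assuming binary labels and predictions, hypothetical hold-out data, and a hypothetical tolerance of 0.05 that your governance policy would actually set:

```python
from collections import defaultdict
from typing import Sequence


def true_positive_rates(y_true: Sequence[int], y_pred: Sequence[int],
                        groups: Sequence[str]) -> dict[str, float]:
    """Among truly qualified cases (y_true == 1), the share the model approved, per group."""
    qualified: dict[str, int] = defaultdict(int)
    approved: dict[str, int] = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            qualified[group] += 1
            approved[group] += pred
    return {g: approved[g] / qualified[g] for g in qualified}


def equal_opportunity_check(y_true: Sequence[int], y_pred: Sequence[int],
                            groups: Sequence[str], max_gap: float = 0.05):
    """Return (per-group TPRs, largest gap, pass/fail) against a policy tolerance."""
    rates = true_positive_rates(y_true, y_pred, groups)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap <= max_gap


# Hypothetical hold-out results for a loan approval model.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
gender = ["F"] * 5 + ["M"] * 5
rates, gap, ok = equal_opportunity_check(y_true, y_pred, gender)
print(rates, f"gap={gap:.2f}", "PASS" if ok else "FAIL")
```

Wiring a check like this into CI, so that a failing gap blocks a release the same way a failing unit test would, is what turns fairness into the QA-style discipline described above.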

By making these steps a non-negotiable part of your workflow, you move fairness from a frantic, after-the-fact cleanup job to a core design principle. This technical due diligence is the bedrock on which trustworthy AI is built.

Keeping a Watchful Eye: Continuous Monitoring and Human Oversight

Getting an AI model into production isn't the finish line—it's the starting gate. This is where the real work of responsible AI begins, shifting from development to a continuous cycle of monitoring, feedback, and refinement. You're essentially creating a living system of accountability to ensure your AI behaves as expected day after day, month after month.

Without this constant vigilance, even the most carefully built models can start to fail. We see this all the time. It's a phenomenon commonly called model drift: the real-world data the model sees in production gradually diverges from the data it was trained on, and the model's predictions degrade as a result. An algorithm that was perfectly fair and accurate on day one can quickly become biased or just plain wrong if it's left to its own devices.

Building Your AI Dashboard

To keep your AI on the right track, you need a solid monitoring dashboard that tracks the right Key Performance Indicators (KPIs). Think of these metrics as your early warning system, flagging small issues before they snowball into business-critical problems. This dashboard needs to be clear and actionable for everyone, from your data scientists to your product managers.

Here’s what you absolutely must be tracking:

- Performance Metrics: Keep a close eye on the basics like accuracy, precision, and recall. A sudden nosedive in performance is usually the first sign something’s amiss.

- Data Drift: You need to monitor the statistical profile of your incoming data. If the new data starts looking wildly different from your training set, you can’t trust the model’s predictions anymore.

- Bias and Fairness Metrics: This isn't a one-and-done check. You have to continuously re-evaluate the model's outputs across different groups. Are new biases creeping in that you didn't catch in testing?

- Latency and Uptime: Don’t forget the operational side. A slow or buggy system can kill user trust just as fast as an inaccurate one.
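For the data drift item, one widely used statistic is the Population Stability Index (PSI), which compares the binned distribution of a feature in production against its training distribution. The sketch below bins on the training data's quantiles. The 0.1 and 0.25 alert levels in the comments are a common industry rule of thumb, not a standard, so treat them as assumptions to tune; the feature data is synthetic.

```python
import math
import random
from bisect import bisect_right
from typing import Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` (production) vs `expected` (training).

    Bin edges are the training data's quantiles; `eps` avoids log(0) for empty bins.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    ref = sorted(expected)
    edges = [ref[len(ref) * k // bins] for k in range(1, bins)]

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


# Synthetic feature values: training data vs. a shifted production window.
rng = random.Random(42)
train = [rng.gauss(0.0, 1.0) for _ in range(5_000)]
prod = [rng.gauss(0.5, 1.0) for _ in range(5_000)]
score = psi(train, prod)
# Rule of thumb (an assumption, tune to your use case):
# < 0.1 stable, 0.1 to 0.25 investigate, > 0.25 significant drift.
status = "ALERT" if score > 0.25 else "WATCH" if score > 0.1 else "OK"
print(f"PSI={score:.3f} -> {status}")
```

Running this per feature on a schedule, and charting the scores on the same dashboard as accuracy and fairness metrics, gives you an early warning before degraded inputs show up as degraded outcomes.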

This constant improvement loop is a core part of how we deliver our AI Automation as a Service, taking on the complexity of continuous monitoring so our clients don’t have to.

Designing Smart Human-in-the-Loop Systems

Automation is great, but for high-stakes decisions, you simply can't take human judgment out of the equation. A human-in-the-loop (HITL) system creates a smart partnership between your experts and your AI, playing to the strengths of both. This is far more than just adding an "approve" button; it’s about designing an intelligent workflow where human oversight adds real value.

A human-in-the-loop system isn't a sign of AI failure; it's a mark of responsible AI design. It acknowledges that context, nuance, and ethical judgment are things that algorithms alone cannot yet master.

Think about it in practice. In healthcare software, an AI might flag a suspicious area on a medical scan, but a trained radiologist always makes the final call. In finance, an AI can recommend a loan decision, but a loan officer steps in to review complex or borderline cases.

A good HITL system automatically flags anomalies, low-confidence predictions, or high-impact decisions for human review. This ensures an expert can intervene, fix mistakes, and provide feedback that actually helps the model get smarter over time.
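Here is one way that routing logic might look. It is a sketch with hypothetical field names and thresholds (a 0.85 confidence floor, a 50,000 "high impact" amount, and an anomaly flag assumed to come from an upstream detector); in a real system these values would come out of your risk assessment. Cases where the reviewer overrides the model are collected so they can feed back into retraining.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str             # the model's recommendation, e.g. "approve" / "deny"
    confidence: float      # model's probability for `label`, 0..1
    amount: float          # business impact, e.g. requested loan amount
    anomaly: bool = False  # set by an upstream anomaly detector (assumed)


@dataclass
class RoutingPolicy:
    min_confidence: float = 0.85
    high_impact_amount: float = 50_000


def route(pred: Prediction, policy: RoutingPolicy) -> tuple[str, list[str]]:
    """Decide whether a prediction can be applied automatically or needs a human."""
    reasons = []
    if pred.confidence < policy.min_confidence:
        reasons.append("low confidence")
    if pred.amount >= policy.high_impact_amount:
        reasons.append("high impact")
    if pred.anomaly:
        reasons.append("anomaly")
    return ("human_review" if reasons else "auto"), reasons


def overrides(reviews: list[tuple[Prediction, str]]) -> list[tuple[str, str]]:
    """Cases where the reviewer disagreed with the model: candidate retraining labels."""
    return [(p.case_id, human) for p, human in reviews if human != p.label]


policy = RoutingPolicy()
for p in [Prediction("c1", "approve", 0.97, 12_000),
          Prediction("c2", "deny", 0.62, 80_000),
          Prediction("c3", "approve", 0.91, 5_000, anomaly=True)]:
    print(p.case_id, *route(p, policy))
```

Recording the reasons alongside the routing decision matters: it tells reviewers why a case landed on their desk and lets you audit later whether the thresholds are set sensibly.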

Despite how critical these practices are, the truth is most companies are just getting started. A global survey of 1,500 companies found that 81% were in the earliest stages of responsible AI maturity. Very few have managed to operationalize these kinds of comprehensive monitoring and oversight systems. You can learn more about the state of global RAI adoption to see just how big the gap is.

Creating Channels for Real-World Feedback

Finally, real accountability means listening to the people your AI actually affects. One of the most powerful monitoring tools you have is a direct feedback channel for your users. When people can easily report a weird output, a biased recommendation, or just confusing behavior, they become an invaluable part of your QA process.

This could be as simple as a "Was this helpful?" button, a detailed feedback form, or a dedicated support line. The trick is to make it incredibly easy for users to raise their hand and for you to have a clear process for reviewing and acting on that feedback. This does more than just help you spot problems; it builds massive trust and shows your users you’re serious about getting it right.
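Behind whatever button or form you choose, the review process needs some structure. A minimal sketch, assuming a hypothetical set of feedback categories and made-up model IDs: every bias report is escalated immediately, and any other category is escalated once it reaches a volume threshold for a given model.

```python
from collections import Counter
from dataclasses import dataclass

CATEGORIES = {"wrong_output", "possible_bias", "confusing"}  # hypothetical taxonomy


@dataclass
class Feedback:
    model_id: str
    prediction_id: str
    category: str
    comment: str = ""

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown feedback category: {self.category!r}")


def triage(items: list[Feedback], always_escalate: tuple[str, ...] = ("possible_bias",),
           volume_threshold: int = 5) -> list[tuple[str, str, int]]:
    """Return (model_id, category, count) groups that need a human owner to act."""
    counts = Counter((f.model_id, f.category) for f in items)
    return [
        (model_id, category, n)
        for (model_id, category), n in sorted(counts.items())
        if category in always_escalate or n >= volume_threshold
    ]


inbox = (
    [Feedback("chatbot-v2", "p17", "possible_bias", "Different answer when I changed my name")]
    + [Feedback("chatbot-v2", f"p{i}", "confusing") for i in range(2)]
    + [Feedback("recommender-v1", f"r{i}", "wrong_output") for i in range(5)]
)
for row in triage(inbox):
    print(row)
```

Keeping the prediction ID on every report is the key design choice: it lets the model owner pull up the exact input and output a user complained about instead of guessing.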

Keeping Up with the Shifting World of AI Compliance

Staying compliant in the world of AI can feel like you're trying to hit a moving target. The ground is constantly shifting beneath our feet as new laws and frameworks pop up around the globe. This isn't just a headache for massive corporations; any business putting AI solutions into the wild needs to be paying very close attention.

The legislative pace is truly picking up. From 2023 to 2024, mentions of AI in new laws across 75 countries jumped by over 21%. That’s a staggering ninefold increase since 2016. In the U.S. alone, federal agencies rolled out 59 AI-related regulations in 2024, which is more than double the previous year's count. The message is loud and clear: the era of unregulated AI is over. You can discover more insights about these AI Index findings to get a sense of just how big this trend is.

With things changing this quickly, being proactive about compliance isn't just a good idea—it's essential. Building a solid, compliance-ready foundation from the very beginning will save you from a world of pain down the road. It's the same forward-thinking approach we bake into every Custom AI Strategy report we build for our clients.

Key Frameworks You Should Be Watching

While there are many regulations bubbling up, two have really set the tone for the rest of the world: the EU AI Act and the NIST AI Risk Management Framework. Getting your head around their core ideas gives you a massive advantage in building a global compliance strategy, even if you don't operate directly in those jurisdictions.

- The EU AI Act: This is a groundbreaking law that sorts AI systems by risk. It creates four tiers—unacceptable, high, limited, and minimal risk—and slaps much stricter rules on the higher-risk applications.

- NIST AI Risk Management Framework (RMF): Coming from the U.S. National Institute of Standards and Technology, the RMF is a voluntary guide. It’s designed to give organizations a practical way to manage AI risks through four key functions: Govern, Map, Measure, and Manage.

The EU AI Act is a legal requirement, while the NIST RMF is a voluntary framework. But don't miss the forest for the trees—they share the same goal: promoting trustworthy AI that is safe, fair, and transparent. Adopting their principles is a smart play for any business.

Turning High-Level Frameworks into Action

Knowing the rules of the game is one thing, but actually playing by them is another. The real work is in translating these big ideas into concrete actions your teams can take every single day. This means creating rock-solid documentation, running thorough impact assessments, and being relentlessly transparent.

Your documentation needs to be the definitive story of your AI system. It should cover everything from where your data came from and how your model is built to its testing results and known blind spots. Think of it as the ultimate operational manual, ready for any audit or tough questions from regulators. For a closer look at how data should be handled securely, you can review our privacy policy, which details our own commitments.
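Many teams capture this documentation as a "model card." The sketch below is a hypothetical, minimal version: its fields mirror the paragraph above (data sources, model design, test results, known limitations) and it renders to Markdown so the card can live next to the code. The example model, data sources, and numbers are invented for illustration, not real results.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    intended_use: str
    data_sources: list[str]
    architecture: str
    evaluation: dict[str, float]
    known_limitations: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the card as a Markdown document for reviewers and auditors."""
        lines = [f"# {self.name} (v{self.version})", "",
                 "## Intended use", self.intended_use, "",
                 "## Data sources", *[f"- {s}" for s in self.data_sources], "",
                 "## Model", self.architecture, "",
                 "## Evaluation results",
                 *[f"- {k}: {v:.3f}" for k, v in sorted(self.evaluation.items())], "",
                 "## Known limitations"]
        # An empty limitations list is itself worth flagging to reviewers.
        lines += [f"- {item}" for item in self.known_limitations] or ["- None documented yet"]
        return "\n".join(lines)


card = ModelCard(
    name="churn-predictor",
    version="1.2.0",
    intended_use="Rank existing customers by churn risk for retention outreach. Not for pricing.",
    data_sources=["CRM account history, 2021-2024", "Support ticket metadata"],
    architecture="Gradient-boosted trees on 42 tabular features",
    evaluation={"auc": 0.871, "tpr_gap_by_region": 0.031},
    known_limitations=["Under-represents accounts younger than 90 days"],
)
print(card.to_markdown())
```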

Building Your Compliance Toolkit

To really get ahead, you have to weave compliance into the fabric of your development process—it can’t be something you tack on at the end.

- Run AI Impact Assessments: Before you even think about deploying a new AI system, you need to conduct a formal assessment to sniff out potential risks around fairness, privacy, and safety. It’s a lot like the Data Protection Impact Assessments (DPIAs) required under GDPR.

- Keep a Model Registry: Create a central inventory of every single AI model your organization is using. This registry should track what each model does, who owns it, what data it uses, and how it’s performing. It’s your single source of truth for governance.

- Set Clear Transparency Rules: Decide exactly how you’re going to tell customers that AI is involved. This could be through simple in-app notifications, crystal-clear terms of service, or even detailed reports that explain how high-stakes decisions were made.
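The model registry and impact assessment items can reinforce each other. A minimal in-memory sketch, assuming hypothetical field names, model IDs, and a simple rule that a model is only deployable once its impact assessment is on file; a production registry would live in a database or an MLOps platform rather than a Python dictionary.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class RegistryEntry:
    model_id: str
    purpose: str
    owner: str
    data_sources: list[str]
    risk_tier: str                            # your own classification, e.g. "high"
    impact_assessment: Optional[date] = None  # date the assessment was signed off
    metrics: dict[str, float] = field(default_factory=dict)


class ModelRegistry:
    """Single source of truth for which models exist, who owns them, and their status."""

    def __init__(self) -> None:
        self._entries: dict[str, RegistryEntry] = {}

    def register(self, entry: RegistryEntry) -> None:
        if entry.model_id in self._entries:
            raise ValueError(f"{entry.model_id} is already registered")
        self._entries[entry.model_id] = entry

    def get(self, model_id: str) -> RegistryEntry:
        return self._entries[model_id]

    def record_assessment(self, model_id: str, signed_off: date) -> None:
        self._entries[model_id].impact_assessment = signed_off

    def update_metrics(self, model_id: str, **metrics: float) -> None:
        self._entries[model_id].metrics.update(metrics)

    def deployable(self, model_id: str) -> bool:
        """Gate: no deployment until an impact assessment is on file."""
        return self._entries[model_id].impact_assessment is not None

    def missing_assessments(self) -> list[str]:
        return sorted(m for m, e in self._entries.items() if e.impact_assessment is None)


registry = ModelRegistry()
registry.register(RegistryEntry("credit-risk-v4", "Consumer loan scoring", "risk-analytics",
                                ["bureau data", "application form"], risk_tier="high"))
registry.register(RegistryEntry("faq-bot-v1", "Answer product FAQs", "support-eng",
                                ["help-center articles"], risk_tier="limited"))
registry.record_assessment("faq-bot-v1", date(2024, 11, 5))
registry.update_metrics("credit-risk-v4", auc=0.82, psi=0.04)
print("blocked until assessed:", registry.missing_assessments())
```

Calling `deployable()` from your deployment pipeline is what turns the assessment from a recommendation into an enforced control.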

Putting these pieces in place creates a system of checks and balances that doesn’t just keep regulators happy—it builds deep, lasting trust with the people who use your products. After all, the best AI tools for business are always built on a foundation of responsibility.

Your Roadmap for Building AI That People Trust

Putting responsible AI into practice isn't a one-and-done task; it's an ongoing commitment. Think of it less like a project with an end date and more like weaving a commitment to fairness, transparency, and accountability into how you build and use technology. Getting this right is about more than just minimizing risk—it's how you build the deep customer trust that fuels real innovation.

The trick is to avoid trying to fix everything at once. Start small. Pick a single, high-impact area where you can show a clear win and build from there. Maybe that means tackling bias in a customer service chatbot or making a product recommendation engine more transparent. This kind of iterative progress keeps the work manageable and makes it much easier to get buy-in for bigger initiatives down the road.

Weaving Responsibility into Your Culture

A truly responsible AI program is about more than just technical checklists. It's a cultural shift. This means giving your teams the right knowledge and tools, from your internal tooling to your deployment pipelines, so that building responsibly becomes the easiest path, not the hardest.

Becoming a trusted leader in the age of AI isn't just about compliance. It’s about building a brand that customers and employees genuinely believe in, and that creates a powerful, lasting advantage.

This journey takes dedication, but the payoff is huge. Navigating the complexities of building and deploying your own AI solutions can feel daunting, but you don't have to go it alone. If you need help turning your ethical principles into an operational reality, our expert team can help you map out every step of the journey.

Frequently Asked Questions About Responsible AI Implementation

Here are some common questions we hear from leaders trying to put responsible AI into practice.

What is the very first step in a responsible AI implementation?

The most critical starting point is to establish a solid governance framework. This isn't just paperwork; it means forming a cross-functional AI ethics committee, defining clear principles that align with your business values, and assigning real accountability. Without this foundation, any technical efforts to ensure fairness or transparency will be rudderless. Get leadership buy-in first, then use an AI requirements analysis to map out exactly who needs to be in the room to oversee AI risk.

How can a small business implement responsible AI without a huge budget?

You don't need a massive budget to get started. Small businesses can make a huge impact by focusing on transparency and human oversight. Be upfront with your users about how and when AI is involved. For any high-stakes decisions, make sure there's always a human in the loop who can review and override the AI's recommendations. Prioritize data privacy and start with a simple checklist to gut-check your models for potential bias. You don't need expensive AI tools for business to begin—the initial focus should always be on your processes and principles.

How do you actually measure the ROI of responsible AI?

The return on investment for responsible AI shows up in two key areas: risk mitigation and value creation. On the risk side, you can track quantifiable metrics like a reduction in customer complaints, fewer costly model errors, and lower exposure to regulatory fines. On the value side, you can measure boosts in customer trust through surveys, track improvements in brand reputation, and see higher adoption rates for your AI-powered features. At the end of the day, responsible practices lead to more robust and reliable AI systems, which directly translates to better business outcomes.

What’s the difference between "ethical AI" and "responsible AI"?

They're closely related, but the distinction is important. Ethical AI deals with the high-level moral principles—the "shoulds" and "should nots" of artificial intelligence. Responsible AI is the operational side of the coin. It's about creating the tangible governance structures, tools, and processes needed to make sure your AI systems are fair, transparent, and accountable out in the real world. In short, responsible AI is how you turn ethical concepts into concrete business practices.

Ready to build an AI program that's both effective and trustworthy? The Ekipa AI team is here to help you move from principles to practice. Let's talk—get in touch with our experts.