Mastering Responsible AI Principles for Fair, Transparent, Accountable AI

Explore the core responsible AI principles your business needs. Learn practical steps to implement fairness, transparency, and accountability in your AI strategy.

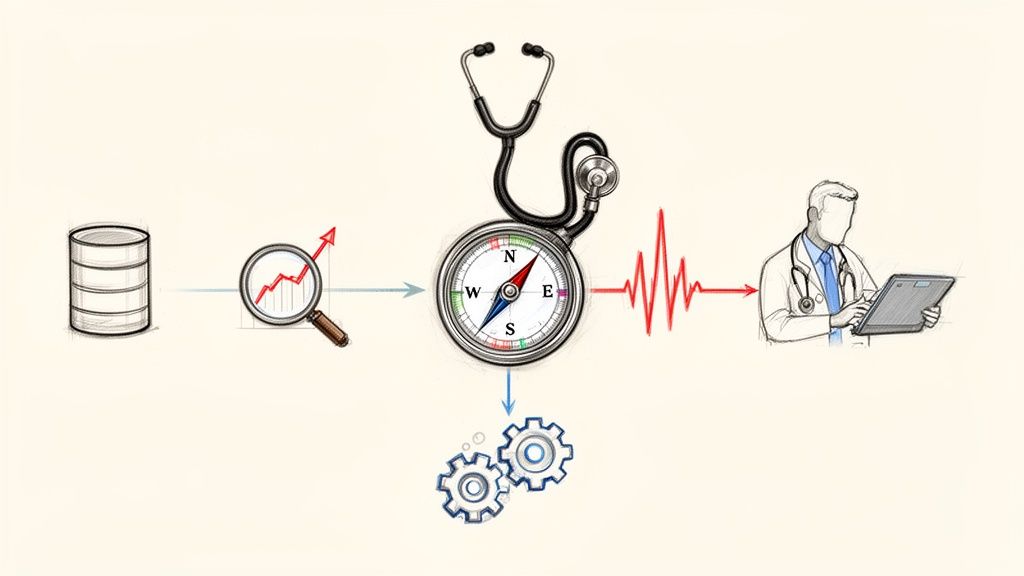

Responsible AI isn't just a set of technical guidelines. Think of it as a compass for developing and using artificial intelligence in a way that's safe, fair, and accountable. Getting this right is more than a box-ticking exercise for compliance; it's a strategic move that builds trust, minimizes risk, and ultimately drives real, long-term business value.

This guide will be your roadmap, showing you how to connect these crucial principles to measurable outcomes for your business.

Why Responsible AI Is No Longer Optional

In today's market, you can't afford to treat responsible AI as an afterthought. It’s moved from a high-level ethical debate straight to the heart of business operations, and the consequences of getting it wrong are very real. The risks of rolling out AI without a solid governance plan are huge and can hit your bottom line directly.

Imagine the fallout from a biased algorithm. Your brand's reputation could be shattered overnight, and the customer trust you've spent years building could evaporate. Plus, with regulations like the EU AI Act now in force and their obligations phasing in, the financial penalties for non-compliance are becoming a serious business threat. But this isn't just about dodging bullets; it's about gaining a serious competitive advantage.

The Strategic Value of Trust

Building resilient AI solutions that people actually trust is what separates the leaders from the laggards. When your customers feel confident that your systems are fair and transparent, they're far more willing to engage with what you're offering. That trust pays dividends.

- Deeper Customer Loyalty: Users who trust your AI will stick with you.
- A Stronger Brand: A clear commitment to responsible AI paints you as a modern, ethical leader.
- Better Innovation: With a clear ethical framework in place, your teams can innovate with more speed and confidence.

By weaving responsible AI principles into the fabric of your operations, you're doing more than just managing risk. You're building a foundation for sustainable growth and a stronger market position. This proactive mindset is a cornerstone of modern AI strategy consulting.

Turning Principles Into Profit

The link between ethical AI and financial success is surprisingly direct. We've seen that companies that truly commit to these principles often uncover new efficiencies and unexpected opportunities. For example, the work required to build fair AI models can reveal entirely new market segments you were previously missing. Transparent systems don't just benefit customers; they improve your own team's internal decision-making.

As we explored in our AI adoption guide, thinking about ethics from day one of the AI Product Development Workflow saves you from expensive and painful re-engineering down the line. It sharpens your competitive edge by ensuring your technology is not just powerful, but also robust, reliable, and ready for whatever comes next. It all starts with a deep understanding of the core tenets and a solid plan to make them a part of your company's DNA—a journey that our expert team is here to help you navigate.

Understanding the 5 Core Principles of Responsible AI

Jumping into responsible AI can feel a bit like learning a new language. But when you boil it down, it's really about a simple, powerful idea: building and using intelligent systems in a way that’s fair, safe, and worthy of our trust.

These aren't just abstract ideals to hang on a wall. They’re the practical pillars that support AI solutions that actually work in the long run. Think of them as the essential ingredients in a recipe—if you leave one out, the whole dish can be spoiled, leading to biased outcomes, lost customer trust, or a run-in with regulators.

From our experience helping businesses build out their AI strategies, we've seen time and again that a solid grasp of these fundamentals is non-negotiable for any leader who wants to succeed.

To make these concepts concrete, we've broken down the key principles into a quick-reference table. It explains what each one looks like in the real world and, more importantly, what it means for your bottom line.

Core Principles of Responsible AI Explained

| Principle | What It Means in Practice | Why It Matters for Your Business |
|---|---|---|
| Fairness & Equity | Actively checking and correcting for bias in your AI models to ensure they don't unfairly disadvantage any group of people. | Avoids brand-damaging discrimination, expands your potential customer base, and ensures you're not alienating key demographics. |
| Transparency & Explainability | Being able to understand and clearly explain how and why your AI system made a particular decision. | Builds crucial trust with customers and users. It's also a lifesaver for debugging and is increasingly a regulatory requirement. |
| Accountability & Governance | Establishing clear ownership and responsibility for how your AI systems operate and the results they produce. Someone is answerable. | Protects your business when things go wrong. A clear governance structure provides oversight and a framework for fixing issues quickly. |
| Privacy & Security | Protecting user data from misuse (privacy) and protecting the entire AI system from cyber threats (security). | Safeguards your most valuable asset: customer data. Prevents costly data breaches and ensures compliance with regulations like GDPR. |
| Safety & Reliability | Ensuring your AI systems work as intended, are resilient to unexpected inputs, and don't cause unintended harm. | Creates dependable products that customers can rely on. A safe system minimizes financial, physical, and reputational risks. |

By putting these principles into practice, you're not just "doing the right thing"—you're building a more resilient, trustworthy, and ultimately more successful business. Let's dig a little deeper into what each of these means.

Fairness and Equity

Fairness in AI is all about making sure an algorithm's decisions don't create or amplify unfair biases against people, especially those in legally protected groups based on race, gender, or age. The hard truth is that an AI model is only as unbiased as the data you feed it.

For instance, an AI hiring tool trained mostly on historical data from a male-dominated field might automatically—and unfairly—screen out highly qualified female candidates. A true commitment to fairness means you're actively auditing your datasets, testing model performance across different demographics, and building in corrections to get equitable results.

Transparency and Explainability

Transparency is about opening up the "black box" so you can understand the inner workings of an AI system. Explainability is what you do with that transparency—the ability to clearly describe why a model reached a specific conclusion.

Let's say an AI system denies a loan application. A transparent system allows an auditor to review the data and logic it used. An explainable system takes it a step further and gives the applicant a clear, human-readable reason, like, "Your application was denied because of a high debt-to-income ratio." This is absolutely critical for building user trust and giving people a path to appeal.
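To make the loan example a little more tangible, here is a minimal sketch of how a human-readable reason could be derived from a simple linear scoring model. Everything in it is an assumption for illustration: the feature names, the weights, the baseline applicant, and the reason wording are all hypothetical, and a production system would more likely rely on a dedicated explainability tool than on hand-written weights.

```python
from typing import Dict, List

# Hypothetical weights for a toy linear credit score (positive raises the score).
WEIGHTS: Dict[str, float] = {
    "debt_to_income": -4.0,
    "years_employed": 0.5,
    "missed_payments": -1.5,
}

# Hypothetical "typical approved applicant" used as the comparison point.
BASELINE: Dict[str, float] = {
    "debt_to_income": 0.30,
    "years_employed": 5.0,
    "missed_payments": 0.0,
}

# Hypothetical plain-language wording for each feature.
REASON_TEXT: Dict[str, str] = {
    "debt_to_income": "high debt-to-income ratio",
    "years_employed": "short employment history",
    "missed_payments": "recent missed payments",
}


def contributions(applicant: Dict[str, float]) -> Dict[str, float]:
    """Score contribution of each feature relative to the baseline applicant."""
    return {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }


def top_denial_reasons(applicant: Dict[str, float], limit: int = 2) -> List[str]:
    """Return wording for the features that pulled the score down the most."""
    contribs = contributions(applicant)
    # Sort ascending so the most negative contribution comes first.
    negative = sorted((value, name) for name, value in contribs.items() if value < 0)
    return [REASON_TEXT[name] for _, name in negative[:limit]]


applicant = {"debt_to_income": 0.55, "years_employed": 6.0, "missed_payments": 0.0}
print(top_denial_reasons(applicant))
```

The design choice worth noting is that the same numbers serve both audiences: an auditor can inspect the per-feature contributions, while the applicant only sees the translated reason.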

Accountability and Governance

Accountability means having clear lines of responsibility for what your AI systems do. When something goes wrong—and it will—someone or some team must be answerable. This isn't about pointing fingers. It's about having a solid framework for oversight, fixing mistakes, and making sure they don't happen again.

This is where a robust governance structure is essential. It involves creating an AI ethics committee, defining roles for who watches over what, and keeping a detailed inventory of all the AI models you have in production. If you want to dive deeper into these foundational elements, there are some great resources on AI governance principles that can help.
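A model inventory does not need to start as a heavyweight system. The sketch below shows one possible shape for it, assuming a simple in-memory list; the field names, the example entries, and the 180-day review window are hypothetical choices for illustration, not a prescribed standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class ModelRecord:
    """One entry in a hypothetical inventory of production AI models."""
    name: str
    owner: str          # the person or team answerable for the model
    purpose: str
    risk_tier: str      # e.g. "high", "limited", "minimal"
    last_review: date   # date of the most recent governance review


def overdue_reviews(
    inventory: List[ModelRecord], today: date, max_age_days: int = 180
) -> List[str]:
    """Names of models whose last review is older than the allowed window."""
    return [
        record.name
        for record in inventory
        if (today - record.last_review).days > max_age_days
    ]


inventory = [
    ModelRecord("resume-screener", "HR Analytics", "Shortlist applicants", "high", date(2025, 1, 1)),
    ModelRecord("spam-filter", "IT Operations", "Filter inbound email", "minimal", date(2025, 6, 1)),
]
print(overdue_reviews(inventory, today=date(2025, 9, 1)))
```

Even a list this small answers the two questions accountability depends on: who owns each model, and when someone last looked at it.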

Privacy and Security

These two go hand-in-hand and are all about protecting data.

- Privacy is about handling personal information responsibly, respecting individual rights, and following the rules laid out in regulations like GDPR.
- Security is about the technical shields you put in place to protect the AI systems and their data from hackers, corruption, or unauthorized access.

Take healthcare AI, for example. The patient data used to train models absolutely must be anonymized and stored in ultra-secure environments to prevent catastrophic breaches. Things like strong encryption and strict access controls aren't nice-to-haves; they are the absolute baseline.
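As a rough illustration of the data-protection baseline, the sketch below drops direct identifiers from a record and replaces the patient ID with a keyed hash. Note the assumption: this is pseudonymization, which is weaker than full anonymization, because anyone holding the key can re-link records. The field names and the key are hypothetical; the `hmac` and `hashlib` calls are from the Python standard library.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"patient_id", "name", "email"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Replace an ID with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record: Dict[str, Any], secret_key: bytes) -> Dict[str, Any]:
    """Drop direct identifiers and keep only a pseudonymous reference."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["patient_ref"] = pseudonymize(record["patient_id"], secret_key)
    return cleaned


# Illustrative key only; a real key would come from a secrets manager.
key = b"example-key-do-not-use"
record = {
    "patient_id": "P-001",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "diagnosis_code": "E11",
}
print(strip_direct_identifiers(record, key))
```

A keyed hash is used instead of a plain hash so that someone who guesses the ID format cannot simply recompute the references without the key.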

Navigating the Global AI Regulatory Maze

Knowing the principles of responsible AI is one thing, but making your way through the tangled and ever-changing web of global regulations is another challenge entirely. As AI becomes more deeply woven into how we do business, governments everywhere are putting rules in place. Keeping up isn't just about ticking a legal box; it’s fundamental to earning customer trust and staying ahead of the competition.

The world of AI regulation can feel like a labyrinth. However, a few key laws and frameworks are starting to set the pace for everyone else. For any business, the real trick is figuring out which rules apply to you and then creating a governance model flexible enough to adapt as those rules inevitably change. Think of this less as a one-and-done project and more as a permanent part of your strategy.

The EU AI Act: Setting a Global Precedent

The European Union is out in front with its AI Act, which is one of the most thorough pieces of AI legislation we’ve seen so far. Its reach goes well beyond Europe’s borders, creating a powerful benchmark for how AI might be regulated worldwide. The Act is built on a risk-based approach—a concept every business leader needs to grasp.

It’s simple, really: not all AI systems carry the same weight, so they shouldn't all be treated the same.

- Unacceptable Risk: These are AI systems that are flat-out banned because they pose a clear threat to people's safety or fundamental rights. Think of government-run social scoring systems.
- High-Risk: This is the category most businesses really need to focus on. If you're using AI in critical areas like hiring, loan applications, or medical diagnostics, you're in this zone. These systems are subject to tough rules around risk management, data quality, transparency, and human oversight.
- Limited Risk: AI systems like chatbots fall here. The main rule is transparency: people need to know they're talking to a machine, not a person.
- Minimal Risk: The vast majority of AI tools, like spam filters or inventory management AI, fit into this category and face no new legal hurdles.

Figuring out where your AI systems land on this spectrum is your first step. For example, if you're using AI for internal tooling to help screen job applicants, you’re almost certainly dealing with a high-risk system under the EU AI Act.
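A first-pass triage of your AI portfolio can be as simple as a lookup table. The sketch below encodes only the examples named in the tiers above; the use-case labels are hypothetical, and the mapping is a simplified internal screening aid under those assumptions, not a legal classification under the Act.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels mapped to the tiers using the examples from this guide.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "hiring_screen": RiskTier.HIGH,
    "loan_decision": RiskTier.HIGH,
    "medical_diagnostics": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
    "inventory_management": RiskTier.MINIMAL,
}


def triage(use_case: str) -> RiskTier:
    """Look up a use case; unknown ones default to HIGH to force a manual review."""
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


for name in ("hiring_screen", "chatbot", "new_unreviewed_tool"):
    print(name, "->", triage(name).value)
```

Defaulting unknown systems to the high-risk tier is a deliberately conservative choice here: it means a new tool gets a human look before anyone assumes it is harmless.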

Finding the Common Ground in Global AI Policy

While the EU AI Act gets a lot of attention, it’s not an island. It actually shares a lot of common ground with other major frameworks, like the OECD AI Principles and the NIST AI Risk Management Framework from the U.S. What we're seeing is a convergence around a core set of responsible AI principles.

No matter which jurisdiction you look at, a clear pattern is taking shape. Regulators are all pointing to the same things: transparency, accountability, and solid risk management. The message is that AI must be built and used in a way that puts people first and earns our trust.

This global alignment is good news. It means if you build your AI governance around these core ideas, you'll be in a strong position to comply with regulations almost anywhere you do business. These rules often link back to data protection, an area we cover in our own privacy policy, where we outline our commitments to responsible data handling. Your own approach should be just as interconnected.

Building a Governance Model That Can Bend, Not Break

In the world of AI regulation, the only thing you can count on is change. Laws will get updated, and new frameworks will pop up. A rigid, "set it and forget it" compliance plan is simply not going to work. The real goal is to build an agile governance model that can evolve right alongside the regulatory environment.

This means you have to be proactive, not reactive. You need to keep a constant eye on the global landscape, conduct regular AI requirements analysis to see how new rules affect your systems, and weave ethical reviews into your entire development process. When you start treating responsible AI as an ongoing strategic priority, compliance stops being a chore and becomes a real, sustainable advantage.

How to Build Your Responsible AI Governance Framework

Having a set of responsible AI principles is a great start, but let's be honest—they're just words on a page without a real system to back them up. To make ethics a part of your company's DNA, you need a solid governance framework. This is your internal rulebook, the blueprint that turns good intentions into consistent, everyday practice.

Think of it like the quality assurance (QA) process in software development. It's not a final check you do at the end; it's a continuous function woven into the entire lifecycle, from the first idea to long-term maintenance. Building this framework means creating clear lines of authority, defining who is responsible for what, and establishing repeatable processes to keep every AI project aligned with your ethical standards.

Assemble a Cross-Functional AI Ethics Committee

Your first move should be to put together a dedicated team to steer the ship. This isn't just a job for the IT department. An effective AI ethics committee needs voices from all corners of the business to provide a well-rounded perspective.

Ideally, your committee should include representatives from:

- Legal and Compliance: To keep you on the right side of the complex, ever-changing regulatory landscape.
- Technology and Data Science: To ground the conversation in what's technically possible and understand the limitations of your models.
- Business Units: To ensure your governance practices actually support strategic goals and work in the real world.
- Ethics or Risk Management: To act as an objective voice on potential societal harm and reputational risks.
- Human Resources: This is crucial, especially if you plan to use AI in hiring or employee management.

This group will take the lead in defining your organization's specific AI principles, creating risk assessment protocols, and serving as the final decision-makers for high-stakes AI initiatives.

This flowchart shows how global standards, like those from the OECD, influence major regulations such as the EU AI Act. These, in turn, should directly shape your company's internal policies.

The message is clear: your internal governance can't exist in a bubble. It has to be built to align with and adapt to these powerful external forces.

Define Clear Roles and Standardized Processes

Once your committee is in place, the next task is to figure out who does what. Ambiguity is the enemy of accountability. You need to create clear, documented roles and standardized processes for the entire AI lifecycle.

This means embedding ethical checkpoints at every single stage. You'll want to map out procedures for key activities like AI requirements analysis, model validation, and post-deployment monitoring. A well-defined process, like our AI Product Development Workflow, should have responsible AI checks built right in. This structured approach creates consistency and makes it far easier to track and audit your AI systems down the line.

A key part of this is developing a modern Trust & Safety Strategy to protect both your users and your platform from potential harm.

According to PwC’s 2025 Responsible AI survey, responsible AI is increasingly being recognized not just as a risk mitigation tool but as a driver of business performance and ROI. Nearly 60% of executives believe that implementing responsible AI practices boosts their organization’s ROI and operational efficiency.

This data really drives home the point that a mature governance framework isn't just about playing defense. It's about creating real business value. Companies with strong development standards and risk assessments are simply getting better results, proving that a solid ethical foundation is also a solid business foundation.

Your Practical Checklist for Implementing Responsible AI

Talking about high-level principles for responsible AI is one thing, but putting them into practice on the ground is where the real work begins. To bridge that gap, you need a practical, no-nonsense checklist—a set of clear actions you can weave directly into your development lifecycle.

This isn't about adding another layer of corporate red tape. It’s about making ethical checks as routine as security scans or performance tests.

A recent global survey for the World Economic Forum's 2025 report found a massive gap between what companies say they want to do and what they're actually doing. A staggering 81% of companies are still stuck in the early stages, just trying to define their principles or set up basic governance. If you want to dive deeper, you can read the full report on advancing responsible AI.

This tells us that the biggest hurdle is turning ideas into systematic, repeatable actions. That's exactly what this checklist is designed to help you do.

Table: Responsible AI Implementation Checklist

This checklist isn't just a list of to-dos; it's a practical guide that maps core responsible AI principles to concrete tasks at each stage of the AI lifecycle. It provides your teams with a clear roadmap for embedding ethics and governance directly into their daily workflows, ensuring that principles don't just stay on a poster on the wall.

| AI Lifecycle Stage | Key Action Item | Principle Addressed |
|---|---|---|
| Data Collection | Audit datasets for historical bias and underrepresentation. | Fairness, Equity |
| Data Collection | Document data sources, lineage, and transformations. | Transparency, Accountability |
| Data Collection | Apply anonymization or synthetic data generation for PII. | Privacy, Security |
| Model Development | Define and measure key fairness metrics (e.g., demographic parity). | Fairness, Accountability |
| Model Development | Use explainability tools (like SHAP or LIME) for black-box models. | Transparency, Interpretability |
| Model Development | Conduct adversarial testing (red-teaming) to find vulnerabilities. | Robustness, Security |
| Validation | Implement a human-in-the-loop review for high-stakes decisions. | Accountability, Safety |
| Validation | Mandate a pre-deployment ethical review by a governance body. | Governance, Accountability |
| Deployment | Provide clear documentation on AI capabilities and limitations. | Transparency |
| Monitoring | Continuously track models for performance drift and emerging bias. | Reliability, Fairness |
| Monitoring | Establish a clear feedback channel for users to report issues. | Accountability, Redress |

By following these steps, you build a foundation of trust and reliability from the very beginning. It's a proactive approach that helps you catch potential issues long before they become public-facing problems.

Stage 1: Data Collection and Preparation

Every AI system is built on data, and this is the stage where bias most often creeps in. Getting this part right is absolutely critical if you want to build a fair and reliable model.

- Audit for Historical Bias: You have to scrutinize your datasets. Are there skewed representations or historical prejudices baked in? For example, a loan dataset that has always favored a certain demographic will teach your AI to do the same, plain and simple.
- Document Data Provenance: Keep a crystal-clear record of where your data comes from, how you collected it, and any changes you've made to it. This "data lineage" is your lifeline when it comes to accountability and debugging down the road.
- Implement Privacy-Preserving Techniques: Before you even think about training, apply methods like data anonymization or generate synthetic data to protect personally identifiable information (PII). This is non-negotiable, especially for sensitive areas like custom healthcare software development.
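The historical-bias audit described above can begin with two numbers per group: how much of the dataset the group makes up, and how often it received the favorable outcome. The sketch below assumes records are plain dictionaries; the `group` and `approved` field names and the sample rows are hypothetical.

```python
from collections import defaultdict
from typing import Any, Dict, List


def audit_groups(
    rows: List[Dict[str, Any]], group_key: str, outcome_key: str
) -> Dict[str, Dict[str, float]]:
    """Per-group share of the dataset and rate of favorable historical outcomes."""
    counts: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for row in rows:
        group = row[group_key]
        counts[group] += 1
        if row[outcome_key]:
            positives[group] += 1
    total = len(rows)
    return {
        group: {
            "share": counts[group] / total,
            "positive_rate": positives[group] / counts[group],
        }
        for group in counts
    }


# Tiny made-up loan history, for illustration only.
loans = [
    {"group": "A", "approved": True},
    {"group": "A", "approved": True},
    {"group": "A", "approved": False},
    {"group": "B", "approved": False},
]
print(audit_groups(loans, "group", "approved"))
```

A group with a small share or a sharply lower approval rate is not proof of bias on its own, but it tells you exactly where to look before any training starts.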

Stage 2: Model Development and Training

During the development phase, your focus has to expand. It's not just about building a model that performs well; it's about making sure it does so in a way that's transparent and fair. This means being deliberate about the algorithms and testing you choose.

- Establish Fairness Metrics: You need to define what "fair" means for your specific project and then measure it. This could mean tracking things like demographic parity (making sure the model's rate of positive predictions is the same across groups) or equal opportunity (checking that the true positive rate is consistent across groups).
- Integrate Explainability Tools: For any model that will have a real impact on people, you must implement techniques like SHAP or LIME. These tools are what help data scientists and business leaders understand why a model made a certain decision, turning it from an intimidating "black box" into a transparent system.
- Conduct Adversarial Testing: Try to break your model. Seriously. Actively feed it unexpected or even malicious inputs to see how it reacts. This process, often called red-teaming, is one of the best ways to find vulnerabilities and make your model more robust and secure before it ever sees the light of day. It's a core feature in many modern AI tools for business.
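The two fairness metrics named above are straightforward to compute once you have labels, predictions, and a group attribute. The following is a minimal sketch for binary predictions in plain Python; the function names and sample data are hypothetical, and it assumes every group contains at least one actual positive so the true positive rate is defined.

```python
from typing import Dict, List, Tuple


def selection_rate(y_pred: List[int]) -> float:
    """Share of cases that received a positive prediction."""
    return sum(y_pred) / len(y_pred)


def true_positive_rate(y_true: List[int], y_pred: List[int]) -> float:
    """Share of actual positives that the model predicted as positive."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)


def fairness_gaps(
    y_true: List[int], y_pred: List[int], groups: List[str]
) -> Dict[str, float]:
    """Largest between-group gap in selection rate and in true positive rate."""
    by_group: Dict[str, Tuple[List[int], List[int]]] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        truths, preds = by_group.setdefault(g, ([], []))
        truths.append(t)
        preds.append(p)
    rates = [selection_rate(preds) for _, preds in by_group.values()]
    tprs = [true_positive_rate(truths, preds) for truths, preds in by_group.values()]
    return {
        "demographic_parity_gap": max(rates) - min(rates),
        "equal_opportunity_gap": max(tprs) - min(tprs),
    }


# Made-up labels and predictions for two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(fairness_gaps(y_true, y_pred, groups))
```

A gap of zero means the groups are treated identically on that metric. What size of gap is acceptable is a policy decision for your governance body, not something the code can settle.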

Stage 3: Validation and Deployment

Before an AI system goes live, it needs one last, rigorous look from multiple angles. This final review is to make sure it truly aligns with your company's ethical standards and is ready for the real world.

- Human-in-the-Loop Review: For any high-stakes decisions, make sure a human expert can step in to review, override, or approve the AI's output. This is a crucial safety net in fields like medical diagnostics or legal assessments.
- Pre-Deployment Ethical Review: Your AI ethics committee or another designated governance group should give the final sign-off. They need to check it against your responsible AI principles before any high-risk system gets deployed to customers.
- Create Clear User Documentation: Be transparent with your end-users. Give them straightforward documentation that explains what the AI does, what its limitations are, and how their data is being used. This is how you build trust and manage expectations.
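One common way to wire in the human-in-the-loop review described above is a routing rule that sits between the model and the final decision. The sketch below is an assumption-laden illustration: the `Decision` fields, the queue names, and the 0.9 confidence threshold are all hypothetical and would need tuning for a real system.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """A model output awaiting routing (hypothetical structure)."""
    outcome: str
    confidence: float   # model's confidence in the outcome, from 0.0 to 1.0
    high_stakes: bool   # flagged by policy, e.g. medical or legal decisions


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Send high-stakes or low-confidence decisions to a human reviewer."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "automated"


print(route(Decision("approve", confidence=0.97, high_stakes=False)))
print(route(Decision("approve", confidence=0.97, high_stakes=True)))
print(route(Decision("deny", confidence=0.62, high_stakes=False)))
```

The key property is that the high-stakes flag wins regardless of confidence, so a very confident model still cannot bypass the reviewer on decisions your policy marks as critical.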

Stage 4: Ongoing Monitoring and Governance

Getting an AI model deployed isn't the finish line—it's the starting line. Responsible AI is a continuous loop of monitoring, learning, and adapting.

The goal is to create a feedback loop where real-world performance informs future improvements. This iterative approach ensures your AI systems remain fair, accountable, and effective over time.

- Monitor for Model Drift and Bias: Keep a close watch on your model's performance in production. You're looking for "drift," where accuracy degrades as live data diverges from the data the model was trained on, or the emergence of new biases as it encounters new, real-world data.
- Establish a Clear Feedback Channel: Give users an easy, accessible way to report problems, appeal decisions, or just ask questions about the AI's behavior. The feedback you get from this is invaluable for making the model better.
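For the drift monitoring described above, one widely used check is the Population Stability Index (PSI), which compares how a feature or score was distributed at training time with how it looks in production. PSI is swapped in here as an example technique; the guide itself does not prescribe a metric. The bin edges and sample values below are hypothetical.

```python
import math
from typing import List


def psi(
    expected: List[float], actual: List[float], edges: List[float], eps: float = 1e-6
) -> float:
    """Population Stability Index between a baseline sample and a live sample."""

    def proportions(values: List[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)
        for value in values:
            # The bin index is the number of edges the value meets or exceeds.
            counts[sum(1 for edge in edges if value >= edge)] += 1
        # Floor each share at eps so an empty bin never causes log(0).
        return [max(count / len(values), eps) for count in counts]

    base = proportions(expected)
    live = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(base, live))


# Made-up model scores: balanced at training time, skewed high in production.
training_scores = [0.2] * 50 + [0.8] * 50
production_scores = [0.2] * 10 + [0.8] * 90

print(psi(training_scores, training_scores, edges=[0.5]))
print(psi(training_scores, production_scores, edges=[0.5]))
```

A commonly cited rule of thumb treats values under 0.1 as stable and values above 0.25 as a significant shift worth investigating, though those cutoffs are conventions rather than guarantees.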

Our own platform, VeriFAI, is designed to help teams manage this entire process by tracking and validating AI project requirements, ensuring that governance is baked in from start to finish.

Your Roadmap to Building a Culture of Responsible AI

So, where do you start? Treating responsible AI like a one-off project with a finish line is a common mistake. The real goal is a cultural shift—moving these principles from a document on a server to the very DNA of how your company operates.

Think of it as a marathon, not a sprint. This roadmap lays out the key milestones to get you from initial awareness to a future where responsible AI is simply how you do business. The first step is figuring out where you are right now. Before you can chart a course, you need to conduct a thorough ethics risk assessment on all your AI systems, both current and planned. This will help you spotlight the high-risk areas, such as a model that influences hiring decisions or a customer-facing algorithm, so you can tackle them first.

Key Milestones on Your Journey

Building this kind of culture happens in phases. Each step builds on the one before it, creating momentum and embedding ethical thinking deeper into your organization.

- Establish Governance (Months 1-3): Your first move is to assemble a cross-functional AI ethics committee. This isn't just for show; this team's job is to formalize your company's AI principles and create a rock-solid process for reviewing and overseeing projects. It's the bedrock of any serious AI strategy.
- Launch a Pilot Project (Months 4-6): Now it's time to get your hands dirty. Pick one AI project that's high-impact but still manageable. This is your test case. Apply your new governance framework to it from start to finish, from auditing the data to deploying explainability tools. This pilot won't just teach you what works; it will create the internal champions you need to drive the change forward.
- Develop Training and Resources (Months 7-9): A culture can't grow without education. It's time to develop and roll out training programs for everyone involved, from data scientists to product managers. The goal is to make sure they not only understand the principles but also have the practical tools they need to apply them every single day.
- Scale Across the Enterprise (Months 10-12+): With the lessons from your pilot in hand, you can start applying your governance framework to all new AI initiatives. Begin weaving these ethical checks into the workflows you already have for internal tooling and customer-facing products. The aim is to make responsible AI the default, not the exception.

This roadmap is a guide, not a rigid set of rules. The most important thing is a real commitment to getting better over time. You’ll need to regularly review your processes, learn from what’s working and what isn’t, and adapt as the technology and regulations change. The AI space is always shifting, and your approach to responsibility has to be just as dynamic.

To see some real-world use cases of these principles in action, check out our case studies. For more personalized guidance on navigating this complex but crucial journey, feel free to connect with our expert team. We can help you build trust through technology and turn responsible AI from a concept into a core business strength.

FAQs on Responsible AI Principles

Putting responsible AI into practice can feel like navigating uncharted territory. It’s natural to have questions. Here are straightforward answers to the common concerns we hear from business leaders, helping you cut through the noise and move forward.

What is the primary goal of responsible AI?

The primary goal of responsible AI is to develop and deploy artificial intelligence systems that are fair, transparent, accountable, and safe. It's about ensuring that AI technology benefits individuals and society as a whole while minimizing potential harm, such as algorithmic bias, privacy violations, and unintended consequences.

How does responsible AI benefit a business?

Implementing responsible AI principles benefits a business by building customer trust, which leads to greater loyalty and adoption of AI-powered products. It also mitigates significant risks, including reputational damage from biased outcomes and financial penalties from non-compliance with regulations like the EU AI Act. Ultimately, it fosters a culture of innovation and can lead to more robust and reliable business solutions.

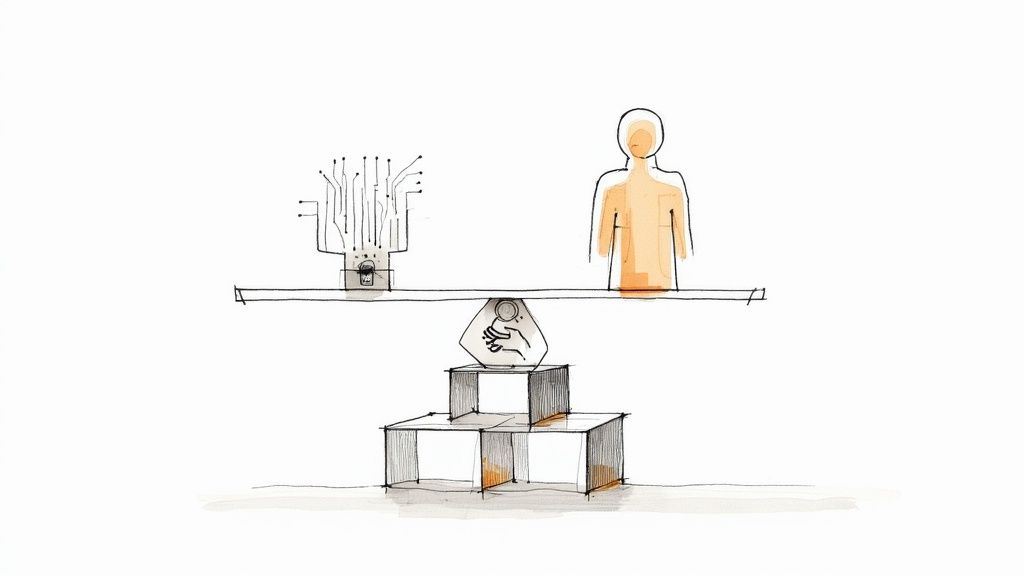

What is the difference between AI ethics and responsible AI?

AI ethics is the broad field of study that examines the moral and societal implications of artificial intelligence. Responsible AI is the practical application of those ethical principles. While ethics provides the "why," responsible AI provides the "how"—the frameworks, governance, tools, and processes needed to build AI systems that are fair, accountable, and transparent in the real world.

Who is responsible for implementing responsible AI in an organization?

Responsibility for implementing responsible AI is shared across an organization. While a dedicated AI governance committee or ethics board often leads the effort, it requires buy-in from everyone:

- Leadership must set the strategy and provide resources.
- Data scientists and engineers must build and test models according to ethical guidelines.
- Product managers must integrate responsible AI checks into the development lifecycle.
- Legal and compliance teams must navigate the regulatory landscape.

Ready to turn these principles into practice? Ekipa AI delivers tailored AI strategies and implementation support to help you build fair, transparent, and accountable AI solutions. Connect with our expert team today!