Navigating AI Adoption Challenges in the Healthcare Industry

Explore the top AI adoption challenges in the healthcare industry and learn proven, practical strategies to overcome data, regulatory, and operational hurdles.

The promise of artificial intelligence in healthcare is enormous, but for many organizations, that promise feels just out of reach. Progress often seems to be stuck in first gear. The main AI adoption challenges in the healthcare industry aren't simple; they're a tangled mess of fragmented data, tough privacy rules, regulatory minefields, and a real shortage of people with the right skills.

It's a frustrating situation for healthcare leaders.

The Paradox of Progress in Healthcare AI

Healthcare is standing at a technological crossroads. We all see the potential for AI to sharpen diagnostics, tailor treatments to the individual, and make hospital operations run more smoothly. Yet many organizations find themselves stuck in what feels like a never-ending pilot project.

Despite the money being spent and the genuine excitement, the road from a cool proof-of-concept to a tool that clinicians actually use every day is riddled with potholes. Getting past these hurdles takes more than just sophisticated algorithms; it requires a strategic, people-first mindset.

This is the central paradox: the more powerful the AI, the trickier it is to implement. The very things that define healthcare—the high stakes, the intense regulatory oversight, and the deep sensitivity of patient data—amplify every single challenge.

Why Ambition Meets Resistance

So, why does progress stall out? It’s a mix of things. At the very bottom of the pile is the data problem. Patient information is often siloed in different, disconnected Electronic Health Record (EHR) systems. This makes it a nightmare to pull together the clean, comprehensive datasets you need to train a reliable AI model.

And even if you can get the data, its quality is often a major issue. Inconsistent or incomplete records can lead to biased algorithms, which is a serious risk. The last thing anyone wants is a tool that accidentally makes health disparities worse.

Then there's the human side of the equation. Clinicians are, quite rightly, cautious. They worry about the "black box" problem, where an AI solution gives an answer without showing its work, and about the real-world consequences of an error. You can't just hand them a new tool; you have to earn their trust.

The journey to successful AI adoption is less of a sprint toward new technology and more of a marathon in strategic change management. It’s about building bridges between data scientists, clinicians, and patients to ensure technology serves, rather than dictates, the delivery of care.

An Uneven Landscape of Adoption

This complex environment has led to a patchy and inconsistent rollout of AI across the industry. One of the biggest AI adoption challenges in the healthcare industry is just how different the levels of investment and readiness are from one organization to the next.

For instance, a recent analysis found that while AI adoption among health systems is expected to hit 27%, outpatient providers and payers are trailing at 18% and 14%, respectively. The gap shows up in spending, too. Health systems account for about $1 billion of the $1.4 billion spent on healthcare AI, or roughly 70%. You can dig into more of these AI in healthcare findings on menlovc.com.

This unevenness tells us that a one-size-fits-all strategy is destined for failure. What's needed is a solid AI strategy consulting framework that confronts these unique challenges directly. By carefully picking high-impact use cases and laying out a clear roadmap, organizations can finally start turning all that potential into practice, making sure their AI projects deliver real value to patients and providers alike.

Core AI Adoption Challenges in Healthcare at a Glance

To put it all into perspective, here’s a quick summary of the major hurdles holding back widespread AI adoption in the healthcare space. This table breaks down the key challenge areas, their direct impact, and the groups feeling that impact the most.

| Challenge Area | Primary Impact | Key Stakeholders Affected |
|---|---|---|
| Data Accessibility & Quality | Inaccurate or biased models, stalled projects due to insufficient training data. | Data Scientists, Clinicians, Patients |
| Regulatory & Compliance Hurdles | Delayed deployments, increased legal risk, navigating complex laws like HIPAA. | Hospital Administrators, Legal Teams |
| Integration with Clinical Workflows | Low user adoption, disruption of established processes, clinician burnout. | Doctors, Nurses, IT Staff |
| Trust, Ethics & Transparency | Resistance from clinicians and patients, concerns over "black box" algorithms. | Patients, Clinicians, Ethicists |
| Talent & Skills Gap | Difficulty building and maintaining AI systems, reliance on external vendors. | C-Suite Executives, HR Departments |
| High Implementation Costs & ROI | Budget constraints, difficulty proving financial and clinical value. | CFOs, Department Heads, Investors |

Ultimately, navigating these challenges requires a clear-eyed strategy that anticipates these roadblocks from the start. Acknowledging them is the first step toward building a sustainable and impactful AI program.

Solving the Healthcare Data Puzzle

Data is the fuel for artificial intelligence, but in healthcare, it's often a chaotic mess. Think of it like a puzzle with pieces scattered across a dozen different boxes—that’s the data dilemma, and it's one of the biggest AI adoption challenges in the healthcare industry. Without putting those pieces together, AI's real potential stays locked away.

It's a daily struggle for providers. Imagine trying to get a clear picture of a patient’s health when their primary care records, specialist notes, lab results, and imaging scans are all trapped in separate, incompatible systems. These disconnected Electronic Health Record (EHR) systems create data silos, making it almost impossible to gather the comprehensive data needed to train a reliable AI model. This is the heart of the healthcare interoperability challenges that keep different systems from talking to each other.

This fragmentation directly hobbles our ability to create powerful diagnostic tools or build algorithms that could predict patient risks before they become critical.

The Hidden Dangers of Flawed Data

It’s not just about getting the data; the quality is a massive issue. Flawed, incomplete, or biased information is a recipe for dangerously skewed algorithms. If an AI model learns from data that mostly represents one demographic, its performance will likely plummet when used on others, potentially making existing health disparities even worse.

This isn't just a technical glitch—it's an ethical landmine. An AI tool that consistently fails to diagnose a condition in a specific population group isn't just ineffective; it's actively causing harm. This is why digging deep into the data and ensuring its integrity is a moral imperative, not just a step in the development process.
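One practical way to catch this kind of harm before deployment is a subgroup audit. The sketch below compares a model's sensitivity (the share of true cases it catches) across demographic groups on a held-out set and flags any group that trails the best one. It is a minimal illustration. The group labels, the toy results, and the 0.1 gap threshold are all hypothetical, not a validated fairness standard.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return recall (true positives / actual positives) for each group.

    Each record is a (group, actual, predicted) tuple with boolean labels.
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            actual_pos[group] += 1
            if predicted:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}

def flag_gaps(rates, max_gap=0.1):
    """List groups whose recall trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, r in rates.items() if best - r > max_gap)

# Hypothetical held-out results: (group, has_condition, model_flagged_it)
results = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("B", False, False),
]

rates = sensitivity_by_group(results)
print(rates)             # {'A': 0.75, 'B': 0.25}
print(flag_gaps(rates))  # ['B']
```

In this toy data the model catches three of four cases in group A but only one of four in group B. Overall accuracy can hide exactly this gap.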

Navigating the Privacy Tightrope

On top of all this, you have the strict regulatory environment, with the Health Insurance Portability and Accountability Act (HIPAA) at the forefront. Protecting patient privacy is absolutely non-negotiable. This creates a tricky balancing act: how do you gather enough data to train a useful AI model while guaranteeing every bit of information stays secure and anonymized?

The answer lies in a sophisticated approach to data governance and security. It demands more than just technical firewalls; it requires crystal-clear policies and strong ethical guidelines. Building a secure data pipeline is the foundational first step, and it often takes specialized expertise in both AI and healthcare compliance.

The goal is to build a data ecosystem that is both rich enough for AI innovation and secure enough to maintain absolute patient trust. If you fail on either front, the entire effort is unsustainable.

Building a Foundation for Reliable AI

Solving this data puzzle is the first and most crucial step for any healthcare AI initiative. It demands a strategy that blends technical solutions with a deep understanding of the healthcare world.

The process usually breaks down into a few key actions:

- Data Aggregation: Bringing in tools and platforms that can pull data from all those different sources.

- Standardization and Cleansing: Forcing the data into a uniform format and cleaning up all the inaccuracies and inconsistencies. For example, a specialized tool can automate pulling structured information from messy, unstructured clinical notes, which saves a ton of time and cuts down on errors. You can see how this works with an AI-powered data extraction engine.

- De-identification: Using advanced methods to strip out all personal identifiers from patient data to stay on the right side of privacy laws.
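To make these three steps concrete, here is a minimal Python sketch. It assumes two hypothetical EHR exports that use different field names and glucose units. It maps both into one schema and converts mmol/L to mg/dL (multiplying by about 18). It also replaces source IDs with keyed-hash pseudonyms and never copies patient names forward. Keyed hashing is pseudonymization, not full de-identification. A real pipeline would follow HIPAA's Safe Harbor or Expert Determination method and be reviewed by your compliance team.

```python
import hashlib
import hmac

# Hypothetical exports from two disconnected systems with different field names and units.
ehr_a = [{"mrn": "A-100", "name": "Jane Doe", "glucose_mg_dl": 126.0}]
ehr_b = [{"patient_id": "B-7", "full_name": "John Roe", "glucose_mmol_l": 7.0}]

MG_DL_PER_MMOL_L = 18.0  # approximate conversion factor for glucose
SECRET_KEY = b"replace-with-a-managed-secret"  # hypothetical; keep real keys out of code

def pseudonym(source_id: str) -> str:
    """Replace a source identifier with a keyed hash so records stay linkable."""
    return hmac.new(SECRET_KEY, source_id.encode(), hashlib.sha256).hexdigest()[:16]

def standardize_a(rec: dict) -> dict:
    # System A already reports mg/dL; keep only the fields the model needs.
    return {"pid": pseudonym(rec["mrn"]), "glucose_mg_dl": rec["glucose_mg_dl"]}

def standardize_b(rec: dict) -> dict:
    # System B reports mmol/L; convert so every row uses the same unit.
    return {
        "pid": pseudonym(rec["patient_id"]),
        "glucose_mg_dl": round(rec["glucose_mmol_l"] * MG_DL_PER_MMOL_L, 1),
    }

# Aggregate into one uniform list; direct identifiers like names are never copied over.
combined = [standardize_a(r) for r in ehr_a] + [standardize_b(r) for r in ehr_b]
for row in combined:
    print(row)
```

The design choice worth noting is the allow-list. Each `standardize_*` function builds a new record from the fields it needs, rather than deleting fields it knows are sensitive. As a result, an unexpected identifier column in a new export is dropped by default.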

By tackling these data-centric challenges head-on, healthcare organizations can build the solid foundation needed for trustworthy and effective AI. Without this groundwork, any AI project is built on quicksand, destined to fail before it ever has a chance to deliver on its promise.

Moving AI From Pilot Project to Clinical Practice

We’ve all seen it happen. A brilliant AI initiative in healthcare shows amazing promise in a controlled trial but gets stuck in a perpetual pilot phase, never quite making the leap to everyday clinical use. This is one of the most frustrating AI adoption challenges in the healthcare industry. The journey from a promising proof-of-concept to a tool that actually improves patient care is littered with operational and regulatory hurdles that can bring innovation to a dead stop.

The gap between a successful pilot and widespread adoption is often much wider than leaders anticipate. While AI adoption in healthcare has jumped from 72% to 85% in just one year, that number can be misleading. As of 2025, only about 22% of healthcare organizations have truly implemented domain-specific AI tools into their core operations. Much of the market is still just dipping its toes in the water. You can find more on these AI in healthcare statistics on ventionteams.com.

Overcoming Workflow Integration Hurdles

One of the biggest obstacles is simply fitting AI into how doctors and nurses already work. Clinicians are drowning in information and administrative tasks. If a new AI tool disrupts their established routines or adds even a few clicks to their day, it will be rejected, no matter how powerful it is.

Getting this right isn't just a technical task; it’s a human-centered design challenge. Success demands a deep, almost ethnographic, understanding of clinical workflows. The solution has to feel intuitive. It has to save time. And it must present information in a way that is immediately useful at the point of care. An AI diagnostic assistant, for instance, should push its insights directly into the patient's EHR chart, not force the user to open a separate, clunky dashboard.

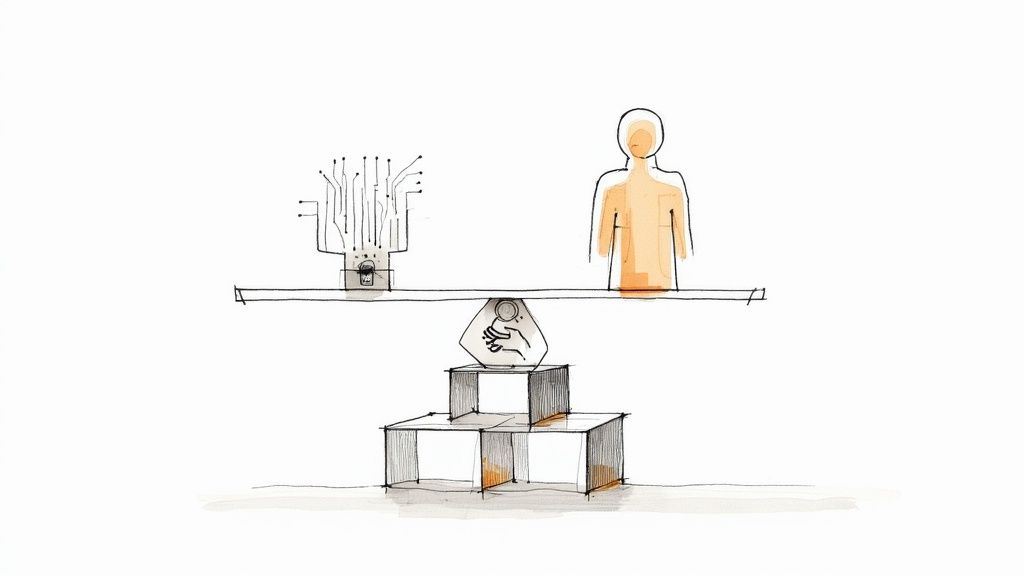

The Black Box Problem and Building Trust

Clinicians are trained to be skeptical and evidence-driven. This leads directly to another major hurdle: the "black box" problem. Many advanced AI models, especially deep learning networks, arrive at conclusions in ways that are nearly impossible for a human to interpret. How can a doctor trust an AI’s treatment recommendation if they can't understand why the algorithm made that specific choice?

This isn't just resistance to change; it's a perfectly reasonable and critical barrier. To get past it, we have to champion explainable AI (XAI) and build trust brick by brick.

- Be Transparent: Clearly document what data trained the model and what its known limitations are.

- Make it Interpretable: Use models that can literally highlight the features in a medical image or the data points in a patient record that led to its conclusion.

- Validate, Validate, Validate: Involve clinicians in rigorous, real-world validation studies to prove the AI's accuracy and reliability in their own environment.
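For simpler models, "highlighting the data points that led to a conclusion" can be as direct as the sketch below. It uses a hypothetical logistic risk score where each feature's contribution to the log-odds is its weight times its value, so a clinician can see which inputs drove a given patient's score. The feature names, weights, and bias are illustrative assumptions, not a clinically validated model. Deep learning models on medical images need other attribution techniques, such as saliency maps, which are not shown here.

```python
import math

# Hypothetical, illustrative weights for a logistic risk model (not clinically validated).
WEIGHTS = {"age_over_65": 0.8, "prior_admissions": 0.5, "hba1c_above_8": 1.1}
BIAS = -3.0

def explain(patient: dict):
    """Return predicted risk plus each feature's log-odds contribution, largest first."""
    contributions = {f: w * patient.get(f, 0) for f, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    risk = 1 / (1 + math.exp(-logit))  # logistic (sigmoid) function
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return risk, ranked

risk, ranked = explain({"age_over_65": 1, "prior_admissions": 2, "hba1c_above_8": 1})
print(f"risk = {risk:.2f}")
for feature, value in ranked:
    print(f"  {feature}: {value:+.2f}")
```

Because every contribution is additive, the ranked list is an exact account of the score rather than an approximation. That property is a big reason inherently interpretable models remain attractive in clinical settings.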

A technically perfect AI that clinicians don't trust is a failed project. The path from pilot to practice is paved with transparency, collaboration, and a relentless focus on making the technology a reliable partner in care, not an opaque authority.

Navigating the Regulatory Maze

Finally, you have the regulatory maze. The rules for AI in healthcare are complex and constantly shifting. Getting a green light from bodies like the U.S. Food and Drug Administration (FDA) for an AI-powered medical device can be a long, expensive, and frustrating process. This is especially true for any AI that makes decisions without a human keeping a close watch.

You need a clear regulatory strategy from day one. This means understanding exactly how your AI tool will be classified, planning for the necessary clinical trials, and meticulously documenting every single step of the development and validation process. A structured workflow is the only way to ensure new AI solutions are not just technically sound but also practical, trustworthy, and fully compliant. You can see more about our approach for the healthcare industry.

Earning Clinician Trust and Closing the AI Skills Gap

Beyond the tough technical work of wrangling data and systems, the biggest hurdles to AI in healthcare are, without a doubt, human. You can have the most sophisticated algorithm in the world, but if the people on the front lines don't trust it or know how to use it, it's just expensive code. Getting this right comes down to two things: earning the trust of clinicians and closing the massive AI skills gap.

The problem often starts with a simple shortage of people who can speak both "clinical" and "code." It’s incredibly rare to find someone who deeply understands the nuances of patient care and the mechanics of machine learning. That unique combination is exactly what healthcare organizations are competing for.

Overcoming Cultural Resistance and Answering Clinician Concerns

It’s completely understandable for clinicians to be skeptical of new technology, especially something that touches their core decision-making process. They worry about their jobs, but more importantly, they worry about patient safety. What if the algorithm is wrong? After building a career on expertise and intuition, the idea of handing over judgment to a machine can feel like a huge step backward.

This is why building trust can't be an afterthought; it has to be a deliberate, central part of any AI strategy consulting effort. The only way to do this is with transparency and involvement. Bring clinicians into the process from day one. Let them help identify the problems to solve, validate the models, and design the workflows. This changes their role from skeptical observers to engaged partners.

And frankly, explainable AI (XAI) is non-negotiable. When a doctor can see why an algorithm flagged a tumor—by highlighting the specific pixels in a scan that it found suspicious, for example—the "black box" disappears. It becomes a diagnostic partner, not a mysterious oracle. This kind of transparency is the absolute foundation of clinical confidence.

The Urgent Need to Upskill the Workforce

Layered on top of the trust issue is a serious talent shortage. Surveys show that 92% of healthcare leaders believe automation is critical for solving staff shortages, and 78% are increasing their AI budgets. That combination creates heavy demand for advanced AI skills, and healthcare is competing with every other industry for the same talent pool. You can learn more about these healthcare technology trends at RSI Security.

This reality means we can't just hire our way out of the problem. We have to build skills from within. The goal isn’t to turn every nurse into a data scientist, but to develop a workforce that is AI-literate and comfortable working alongside these new tools.

The most sustainable path forward is to empower your current clinical workforce to collaborate with AI, not compete against it. This builds a culture where innovation is seen as a way to deliver better care, not as a threat to professional identity.

So, what does a good training program look like?

- Focus on Practical Wins: Show staff how specific AI tools for business solve their immediate, everyday headaches, like automating prior authorizations or summarizing patient charts.

- Establish Clear Ethical Guardrails: Create simple, clear rules for how and when AI is used, ensuring a human is always in the loop for critical clinical decisions.

- Commit to Continuous Learning: AI isn't a one-and-done training session. Create easy-to-access opportunities for ongoing education as the technology evolves.
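An ethical guardrail like "a human is always in the loop for critical clinical decisions" is easier to audit when it is written down as an explicit rule. The sketch below shows one possible routing policy. The task categories, the 0.95 confidence threshold, and the review queue names are hypothetical choices for illustration, not a recommended clinical standard.

```python
from dataclasses import dataclass

# Hypothetical policy: these task types always need clinician sign-off.
CRITICAL_TASKS = {"diagnosis", "treatment_recommendation"}
MIN_AUTO_CONFIDENCE = 0.95  # hypothetical threshold for automating low-risk tasks

@dataclass
class Suggestion:
    task: str
    output: str
    confidence: float

def route(s: Suggestion) -> str:
    """Decide whether an AI suggestion can be automated or needs human review."""
    if s.task in CRITICAL_TASKS:
        return "clinician_review"  # critical decisions never bypass a human
    if s.confidence < MIN_AUTO_CONFIDENCE:
        return "staff_review"      # uncertain output on a low-risk task gets checked
    return "automate"              # routine, high-confidence administrative work

print(route(Suggestion("treatment_recommendation", "start statin", 0.99)))  # clinician_review
print(route(Suggestion("appointment_reminder", "reminder drafted", 0.97)))  # automate
print(route(Suggestion("chart_summary", "summary text", 0.80)))             # staff_review
```

Note that the critical-task check runs first, so even a very confident model cannot skip clinician review on a diagnosis.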

Investing in your people is what makes the technology stick. When your team understands and trusts the tools, they become its biggest champions. They start seeing AI for what it is—a way to augment their own skills, freeing them up to focus on the deeply human side of patient care that no algorithm can ever replace. As we explored in our AI adoption guide, demonstrating this value with tangible, real-world use cases is the best way to drive this cultural shift. This is precisely where our expert team can help, by fostering an environment where innovation thrives because your people are at the center of it.

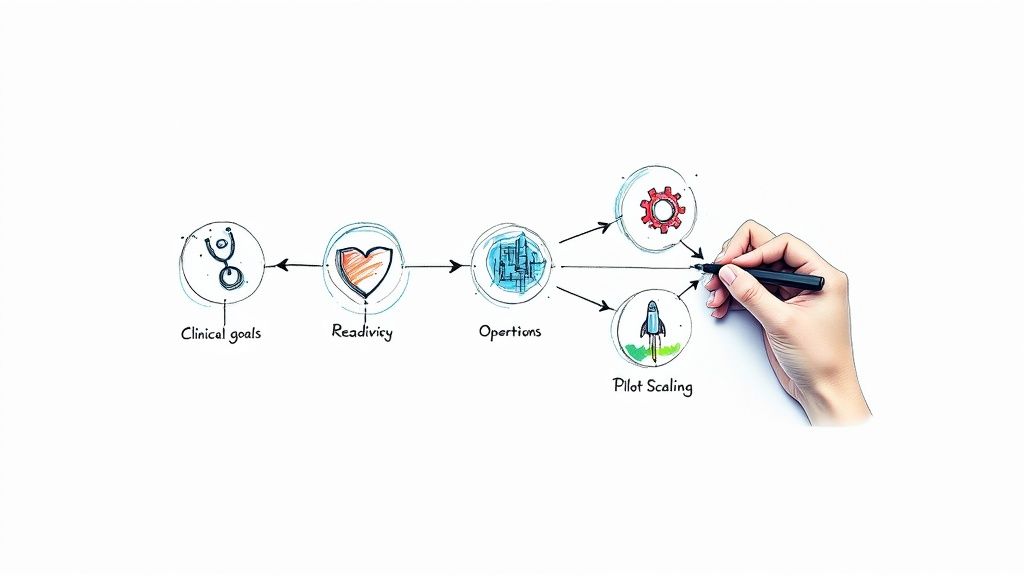

Your Strategic Roadmap to AI Success in Healthcare

Talking about the challenges of AI adoption in the healthcare industry is one thing; actually solving them is another. A scattered, try-anything approach almost always leads to stalled pilot projects and budgets that just evaporate. The alternative? A thoughtful, phased roadmap that turns the intimidating task of adopting AI into a series of manageable, value-driven steps.

The journey has to start with a clear vision. Before anyone writes a single line of code, leadership needs to define what success truly looks like. Are we trying to slash administrative overhead? Improve diagnostic accuracy? Or deliver hyper-personalized patient care? Answering this question is the critical first step that guides everything else.

Phase 1: Lay the Strategic Foundation

The best place to begin is with a serious look at your organization's specific needs and existing capabilities. This is where something like a Custom AI Strategy report is invaluable, because it helps you pinpoint high-impact, low-risk opportunities that actually align with your core mission. This initial deep dive keeps you from chasing shiny new trends and focuses your energy on solving problems that matter.

This foundational work involves a few key things:

- Defining Clear Goals: Get specific. Pinpoint the exact clinical or operational metrics you want to improve. To really get the most out of AI, it's crucial to evaluate the strategic rationale for AI adoption and ensure your goals are tied to meaningful outcomes like revenue growth or serious efficiency gains.

- Establishing Governance: Pull together a cross-functional team. You need clinical, IT, and administrative leaders at the table to oversee the AI strategy, set ethical ground rules, and keep projects on track.

- Developing a Data Strategy: Take an honest look at your data's quality and accessibility. This upfront AI requirements analysis will quickly reveal the gaps in your data infrastructure that need to be fixed before any real model-building can happen.
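A simple starting point for that honest look is to measure how often each field you plan to use is actually filled in. The sketch below does this for a small, hypothetical EHR extract. The field names and the 30% tolerance are assumptions for illustration. A real assessment would also check value ranges, coding consistency, and timeliness.

```python
def missing_rates(records, fields):
    """Fraction of records where each field is absent, None, or an empty string."""
    n = len(records)
    rates = {}
    for field in fields:
        missing = sum(1 for r in records if r.get(field) in (None, ""))
        rates[field] = missing / n if n else 0.0
    return rates

# Hypothetical extract from an EHR export
records = [
    {"age": 71, "sex": "F", "smoking_status": ""},
    {"age": 58, "sex": None, "smoking_status": "never"},
    {"age": 64, "sex": "M"},
    {"age": None, "sex": "F", "smoking_status": "former"},
]

rates = missing_rates(records, ["age", "sex", "smoking_status"])
gaps = {f: r for f, r in rates.items() if r > 0.3}  # hypothetical 30% tolerance
print(rates)  # {'age': 0.25, 'sex': 0.25, 'smoking_status': 0.5}
print(gaps)   # {'smoking_status': 0.5}
```

Counting empty strings as missing matters in practice, because many exports write blanks instead of nulls. Those blanks would otherwise make a field look complete.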

Phase 2: Secure Quick Wins and Build Momentum

With a solid strategy in your back pocket, the next move is to show value—and fast. This is how you get buy-in from stakeholders and clinicians who might, understandably, be skeptical. The key is to focus on projects that solve immediate headaches without blowing up established clinical workflows.

This is where targeted solutions really shine. Think about implementing AI Automation as a Service to streamline tedious back-office tasks like billing, scheduling, or prior authorizations. Or maybe you can develop better internal tooling to cut down on the documentation burden that burns out your physicians.

These "quick wins" deliver a measurable return and prove that AI can be a helpful partner, not just another disruptive force. Our structured AI Product Development Workflow is designed specifically to guide these projects from a simple concept to a fully deployed tool that delivers value efficiently.

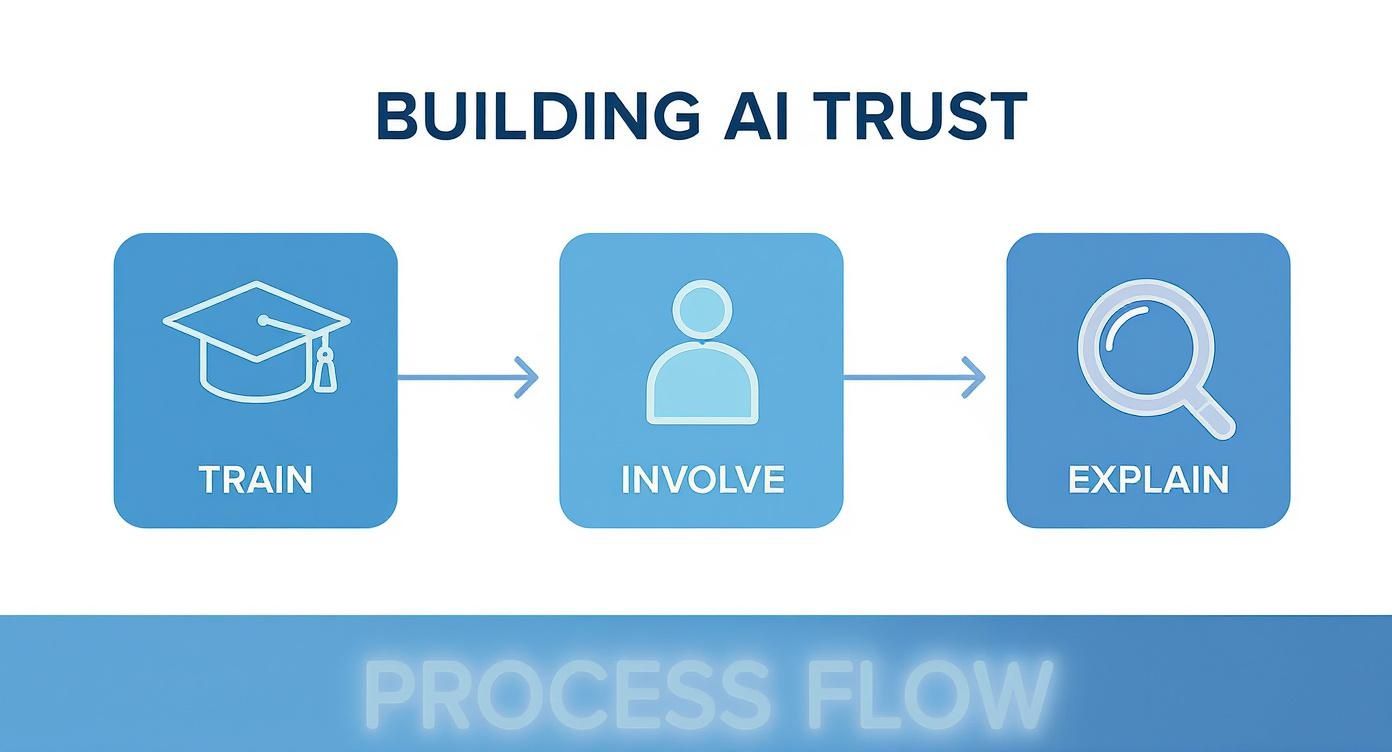

As the image above shows, trust in AI isn't built overnight, and it isn't a given. It has to be earned gradually: by educating your users, involving them in the process, and pulling back the curtain on how the technology works.

Phase 3: Scale and Optimize for Broader Impact

Once you’ve built some momentum with those initial successes, you can start aiming higher and tackling more ambitious clinical applications. The lessons you learned from the early projects will give you a blueprint for how to approach more complex challenges, whether that’s predictive analytics for patient risk or AI-assisted diagnostics.

For organizations that need highly specialized tools, looking into options like custom healthcare software development can deliver solutions perfectly tailored to your unique clinical environment.

A successful AI roadmap isn't a static document you file away. It's a living strategy that evolves. It starts with a clear vision, proves its worth with targeted wins, and scales intelligently to truly change how care is delivered.

This phased approach breaks the massive challenge of AI adoption into a series of achievable steps. By using an effective AI Strategy consulting tool, healthcare leaders can navigate this journey with confidence, ensuring every investment moves them closer to a future where technology truly enhances human expertise and improves patient lives.

Turning Healthcare's Biggest Headaches into AI Wins

The hurdles we see in healthcare are the core AI adoption challenges in the healthcare industry: messy data, regulatory red tape, clunky workflows, and a shortage of AI talent. It’s easy to look at this list and see a wall of roadblocks.

But what if we saw them differently? Instead of roadblocks, think of them as signposts. They're pointing us toward a smarter, more intentional way to bring AI into the fold.

Getting over these humps means changing how we think about the problem. This isn't just a tech upgrade; it's about building a complete system with people and strategy at its core. Real success starts with a clear goal, a focus on solving actual clinical and operational problems, and a real investment in your team. This is exactly where the right partner can change the game.

You Don't Have to Go It Alone

Trying to navigate this complex world by yourself is a huge ask. To turn AI's potential into real-world results, you need a focused approach backed by deep expertise. That’s why our AI solutions are built to be more than just tools—they're designed to help your organization cut through the complexity and turn those challenges into genuine opportunities.

We know from experience that the best journeys start with a clear map. A strong partnership can give you:

- A solid starting point with a Custom AI Strategy report.

- Expert navigation from our AI strategy consulting team.

- The specific AI tools for business you need to solve your unique problems.

At the end of the day, the goal is to create a durable, effective AI plan that delivers real value to clinicians and, most importantly, to patients. Ready to start building that plan? Connect with our expert team today.

Frequently Asked Questions

As healthcare leaders and clinicians start exploring AI, a lot of questions come up. It's a complex field, and it’s natural to want clear, straightforward answers. Here are some of the most common ones we hear.

What Is the Biggest Challenge to AI Adoption in Healthcare?

If you have to pick just one, it's the data. Hands down.

AI models are fundamentally data-driven; they're only as smart as the information they learn from. In healthcare, that data is often a mess. Patient information is frequently trapped in separate EHR systems that don't talk to each other, buried in unstructured doctors' notes, or simply incomplete. As we explored in our AI adoption guide, this fragmentation is a primary barrier.

Without a solid plan to pull all that data together, clean it up, and make it consistent, even the most sophisticated algorithm will stumble. That's why getting your data house in order is the first, most critical hurdle. A detailed AI requirements analysis is the only way to figure out where your data gaps are before you even think about building a model.

How Can Healthcare Organizations Build Clinician Trust in AI?

You can't just drop a new AI tool on a clinical team and expect them to embrace it. Building trust is a deliberate process, and it takes a few different tactics working together.

It has to start with transparency. Using explainable AI (XAI) helps clinicians see why an algorithm is making a certain recommendation, which is huge. It's also crucial to bring them into the fold right from the beginning—get their input during development and have them help validate the tools.

A great way to get early wins is to start with low-risk, high-impact tools, like using AI for internal tooling to automate tedious paperwork. This shows the value without touching critical clinical decisions. And for those bigger decisions, you always need strong governance with a human-in-the-loop. Ultimately, successful pilot programs, managed with a structured AI Product Development Workflow, are what prove that AI is there to support their expertise, not replace it.

Is AI Cost a Major Barrier for Smaller Healthcare Providers?

Absolutely. For smaller hospitals and clinics, the price tag can feel overwhelming. It’s not just the software license; it's the cost of the underlying infrastructure, the data storage, and the hard-to-find talent needed to run it all. This financial barrier is a big reason why you see such a gap in AI adoption between large hospital systems and smaller providers.

But the game is changing. Newer, more flexible models like AI Automation as a Service and cloud-based platforms are leveling the playing field. They reduce the massive upfront cost, letting organizations start small. By working with an AI strategy consulting partner to pinpoint high-return use cases first, even smaller providers can get started without needing a massive budget.

Ready to turn your organization's challenges into opportunities? Ekipa AI provides the strategic guidance and tools needed to navigate the complexities of AI adoption in healthcare. Start building your AI roadmap today by exploring our AI solutions.