A Practical Guide to Clinical AI Risk Management

When we talk about clinical AI risk management, what we're really talking about is building a safety net. It's the structured process of finding, analyzing, and neutralizing potential harm from AI systems used in actual patient care.

Think of it as the modern-day equivalent of clinical trials or the safety protocols we demand for any new medical device. As AI gets woven into everything from diagnostic imaging to treatment planning, the risk of unexpected errors climbs too. That's why having a solid governance plan isn't just a good idea; it's absolutely critical for protecting patients and keeping the trust of clinicians.

Why Clinical AI Risk Management is Now Essential

In medicine, the guiding principle has always been "first, do no harm." Now that artificial intelligence is leaving the research lab and showing up at the bedside, that principle has to apply to the algorithms themselves. Rolling out a clinical AI tool without a formal risk management plan is like constructing a hospital while ignoring fire safety codes. The potential for disaster is huge, even if it’s not immediately visible.

The stakes are far too high to just hope for the best. A structured approach to risk is no longer a "nice-to-have"; it's a fundamental requirement for any healthcare organization looking to innovate responsibly. Proactive governance is the only way to safeguard patients, maintain clinical trust, and stay on the right side of regulations.

The Shift From Reactive To Proactive Governance

Historically, medical device safety often involved fixing problems after something went wrong. But AI is different. Its performance depends on real-world data that keeps changing, and some models are updated after deployment, so we have to get out ahead of potential issues. A diagnostic model that performs well on day one could slowly "drift" over the next few months, becoming less accurate and introducing subtle, dangerous errors into its analysis.

This new reality demands a proactive stance. Effective clinical AI risk management is built on:

- Continuous Monitoring: Keeping a constant eye on how the model is performing with real-world clinical data, not just the data it was trained on.

- Human Oversight: Making sure clinicians are not just users, but critical evaluators who are empowered—and trained—to override an AI's suggestion when their expertise tells them to.

- Transparent Documentation: Maintaining crystal-clear records of everything, from the AI’s training data and validation methods to its ongoing performance history.

A strong governance framework does more than just stop bad things from happening; it builds confidence. When doctors, nurses, and technicians trust the tools they're using, they’re far more likely to embrace them, leading to better patient care and more efficient workflows.

Balancing Innovation With Patient Safety

The point of risk management isn't to put the brakes on innovation. It's to create a safe road for it to travel on. By systematically identifying and addressing potential harms before they ever have a chance to materialize, healthcare organizations can deploy powerful new technologies with confidence, not anxiety.

This philosophy is the bedrock of building responsible Healthcare AI Services. Patient safety has to be the top priority at every single stage, from initial concept to full-scale implementation. A well-designed risk framework transforms potential liabilities into sources of strength, ensuring your organization’s AI initiatives are both ambitious and grounded in safety.

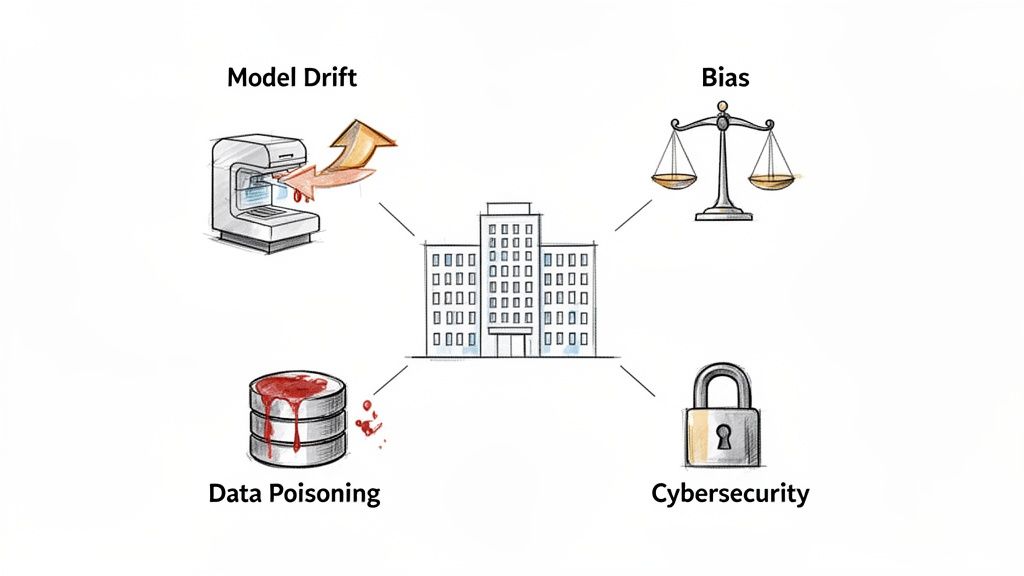

Mapping the Modern Landscape of Clinical AI Risks

To get a real handle on clinical AI risk, you have to look past the usual software worries and focus on the unique threats AI brings to patient care. These aren't your standard IT problems. They’re dynamic, they’re complex, and they can have a massive impact on patient outcomes and your organization's reputation. The first step in building a solid defense is knowing exactly what you're up against.

Think about it this way: an AI diagnostic tool has been a star performer for months. Then its accuracy starts to slip. There's no bug, no system crash. The problem? The hospital just installed new imaging equipment, and the scans no longer look like the data the model learned from. This quiet decline is called model drift, a major patient safety risk where an AI's performance slowly erodes as the real world changes around it.
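One common way to catch this kind of shift, even before confirmed diagnoses arrive, is to compare the distribution of the model's output scores against a baseline captured at validation time. The sketch below computes the Population Stability Index (PSI), a drift statistic the article itself does not prescribe. The function names, the ten equal-width bins, and the 0.1 / 0.25 thresholds (a widely quoted rule of thumb from model-monitoring practice, not a clinical standard) are illustrative assumptions.

```python
import math
from typing import List, Sequence


def score_proportions(scores: Sequence[float], bins: int = 10, eps: float = 1e-6) -> List[float]:
    """Share of scores falling in each equal-width bin over [0, 1]."""
    if not scores:
        raise ValueError("need at least one score")
    counts = [0] * bins
    for s in scores:
        clamped = max(0.0, min(1.0, s))           # keep out-of-range scores in the end bins
        idx = min(int(clamped * bins), bins - 1)  # a score of exactly 1.0 lands in the last bin
        counts[idx] += 1
    total = len(scores)
    # eps stands in for empty bins so the log below never sees zero
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    p = score_proportions(baseline, bins)
    q = score_proportions(current, bins)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(psi: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI value to a status using illustrative thresholds."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "stable"


# Hypothetical example: validation-time scores vs. scores after a scanner change
baseline_scores = [i / 100 for i in range(100)]
post_change_scores = [min(1.0, s + 0.5) for s in baseline_scores]

print(drift_status(population_stability_index(baseline_scores, baseline_scores)))     # stable
print(drift_status(population_stability_index(baseline_scores, post_change_scores)))  # alert
```

PSI only tells you that the model's outputs have shifted; confirming that accuracy has actually dropped still requires comparing predictions against confirmed outcomes.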

Unpacking Algorithmic Bias and Data Privacy

Another major hurdle is the ethical minefield of algorithmic bias. If you train a model on data from just one demographic, it’s bound to struggle—or even make harmful recommendations—for everyone else. This is how AI can accidentally make existing health disparities even worse, opening the door to serious ethical and legal trouble. Our AI solutions are built from the ground up to prioritize fairness.

And of course, data privacy is non-negotiable. AI systems need massive amounts of patient data to learn and operate effectively. Making sure that data is properly anonymized, secured, and handled according to regulations like HIPAA is absolutely critical. A single breach doesn't just leak information; it shatters the trust you’ve built with your patients.

The New Frontier of AI-Specific Cybersecurity Threats

Your traditional cybersecurity playbook won’t cut it here. AI creates entirely new ways for bad actors to attack, and they require a specialized defense. Attackers aren't just trying to steal data anymore; they're trying to fool the AI into making bad decisions. This is such a big deal that it’s sparked major industry collaborations to get ahead of the problem.

For instance, the Health Sector Coordinating Council pulled together a task group of 115 healthcare organizations to create foundational guidance on this new risk frontier. They identified threats that go far beyond standard frameworks, highlighting the need for new strategies to protect clinical, administrative, and financial AI tools. You can read the full details about this large-scale collaborative initiative.

The greatest risks in clinical AI often hide in plain sight—not as dramatic system failures, but as slow, silent degradations in performance or subtle biases that compound over time. Proactive monitoring is the only effective countermeasure.

Understanding these threats is central to any effective AI strategy consulting engagement. Let's break down the most critical risks healthcare leaders need to get on top of:

- Data Poisoning: This is when a bad actor intentionally slips corrupted or mislabeled records into your AI model's training set. The goal is to quietly sabotage its logic, causing it to make systematic errors, like misdiagnosing a specific condition or ignoring red flags in certain patient groups. It's incredibly hard to spot once the model is live.

- Model Inversion and Evasion Attacks: In a model inversion attack, attackers probe a model to "reverse-engineer" sensitive patient details from its training data, even if that data was anonymized. Evasion attacks are even sneakier: someone makes tiny, imperceptible changes to an input (like a medical scan) to trick the AI into making a completely wrong diagnosis.

- Performance Drift: As we talked about earlier, this is the natural decline of a model's accuracy as things change: new equipment, evolving patient populations, updated clinical guidelines. Without constant monitoring and retraining, a once-reliable AI tool can become a dangerous liability. This makes ongoing validation a core part of the AI Product Development Workflow.
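To make the evasion idea concrete, here is a toy illustration of the fast gradient sign method (FGSM), a well-known evasion technique, applied to a hypothetical linear classifier rather than a real imaging model. For a linear model the gradient of the score with respect to the input is simply the weight vector, so nudging every feature by a small `eps` against the sign of its weight shifts the logit by `eps` times the sum of the absolute weights. With many input features, each individual change stays tiny while the combined effect flips the prediction. The weights, input, and `eps` are made up for illustration.

```python
import math
from typing import List, Sequence


def logit(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def probability(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Logistic model: P(condition present | x)."""
    return 1.0 / (1.0 + math.exp(-logit(w, b, x)))


def fgsm_push_negative(w: Sequence[float], x: Sequence[float], eps: float) -> List[float]:
    """One FGSM step against the 'condition present' label.

    For a positive label the loss gradient w.r.t. x points along -w,
    so its sign is -sign(w); every feature moves by at most eps.
    """
    def sign(v: float) -> int:
        return (v > 0) - (v < 0)
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical model with 1,000 input features (think pixel intensities)
w = [0.02 if i % 2 == 0 else -0.02 for i in range(1000)]
b = 0.3
x = [0.5] * 1000

x_adv = fgsm_push_negative(w, x, eps=0.02)
print(round(probability(w, b, x), 3))      # 0.574, flagged positive
print(round(probability(w, b, x_adv), 3))  # 0.475, now below 0.5
print(round(max(abs(a - o) for a, o in zip(x_adv, x)), 6))  # 0.02, largest per-feature change
```

Attacks on deep imaging models follow the same principle with gradients computed by the training framework, which is why adversarial testing belongs in any pre-deployment assessment.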

Tackling these challenges takes more than just good intentions; it requires deep expertise. Our Healthcare AI Services are designed to spot and shut down these risks from the earliest design stages all the way through long-term monitoring, ensuring your AI initiatives are both powerful and safe.

The Hidden Dangers of Shadow AI and Clinical Deskilling

Let's talk about one of the biggest—and most often overlooked—threats in clinical AI: Shadow AI. This isn't about some malicious actor; it's about the unauthorized, often well-intentioned use of consumer-grade or unvetted AI tools by clinicians just trying to be more efficient.

Think about a doctor, swamped with paperwork, who uses a free online AI to summarize a patient's dense medical history. It saves them 20 minutes, but it creates a massive blind spot for the hospital. Where did that sensitive patient data just go? Who owns that AI model? And most importantly, is the summary it generated even clinically accurate? This single, innocent action, happening in pockets all across a health system, opens up a huge, unmanaged risk.

Why Unsanctioned AI is on the Rise

The reality is that clinicians are under incredible pressure. Faced with burnout and crushing administrative tasks, it's completely understandable that they'd reach for readily available, general-purpose AI tools to get some relief. This isn't a new problem, but it's accelerating fast.

With ongoing staff shortages, health systems have seen a huge jump in shadow AI. It's a grassroots effort to plug efficiency gaps. But this creates a dangerous cocktail of risks: you have the immediate problem of unvetted AI making mistakes, and a slower, more insidious problem of workforce deskilling. It's a situation that screams for modern governance, including proper AI training and real technical guardrails to keep everyone safe. You can learn more about these evolving healthcare AI trends and see just how widespread this has become.

The great irony here is that the very drive for efficiency ends up threatening patient safety and data security. Without any oversight, these shadow tools are operating completely outside the lines of any risk management framework.

The Long-Term Threat of Clinical Deskilling

The problem runs deeper than just bad data or privacy breaches. When clinicians rely too heavily on AI, we start to see the long-term threat of clinical deskilling. This is the gradual erosion of critical judgment, diagnostic intuition, and hands-on skills that are the bedrock of medicine.

It’s like a pilot who gets so used to autopilot that their manual flying skills start to get rusty. In the same way, a radiologist who just accepts an AI's initial read without doing their own rigorous review might slowly lose the ability to spot those subtle, weird anomalies that the algorithm was never trained to see.

Over-reliance on AI is not just a technological issue; it's a human factors risk. The goal is to augment clinical intelligence, not replace it. A system that makes clinicians passive observers instead of active participants is a system that has failed.

This decay of expertise is a slow burn, but it's a profound threat to the quality and resilience of healthcare. As we explored in our AI adoption guide, true success comes from balancing an AI’s assistance with the irreplaceable value of human expertise.

Building Guardrails to Channel Innovation Safely

The answer isn't to lock everything down and ban new tools. A strong governance framework isn't about stifling innovation—it's about creating safe channels for it. It gives your staff clear guardrails so they can find better ways to work without putting patients or the organization on the line.

An effective model must include:

- Clear Usage Policies: Have a simple, explicit document that defines which AI tools are approved, how to request a new one, and makes it crystal clear that using unvetted apps with patient data is forbidden.

- A Dedicated Oversight Committee: Pull together a team with members from clinical, IT, legal, and security. Their job is to review and approve any new AI tool, making sure it passes muster on all fronts. This is a non-negotiable part of any serious AI requirements analysis.

- Transparent Reporting Channels: Create an easy, non-punitive way for staff to say, "Hey, I've been using this tool, and it's really helpful—can we get it officially approved?" or to flag potential risks they see.

Navigating these challenges requires a clear plan. A Custom AI Strategy report can provide the blueprint for building a governance structure that encourages safe innovation and neutralizes the hidden dangers of shadow AI and clinical deskilling.

How to Navigate the AI Regulatory and Compliance Maze

Healthcare is already tangled in a dense web of regulations. When you introduce AI into the mix, that complexity skyrockets. Getting an AI solution off the ground isn't just a technical challenge; it’s about mastering the legal and ethical rulebook that protects patients. The trick is to see these regulations not as roadblocks, but as guardrails for building systems that people can actually trust.

Right now, innovation in clinical AI is moving much faster than the laws meant to govern it, creating a real tension for healthcare leaders. With no specific federal AI legislation on the books yet, you’re left piecing together compliance from existing laws like HIPAA and the Food, Drug, and Cosmetic Act. This means your risk management strategy can't be set in stone; it has to be agile and look ahead.

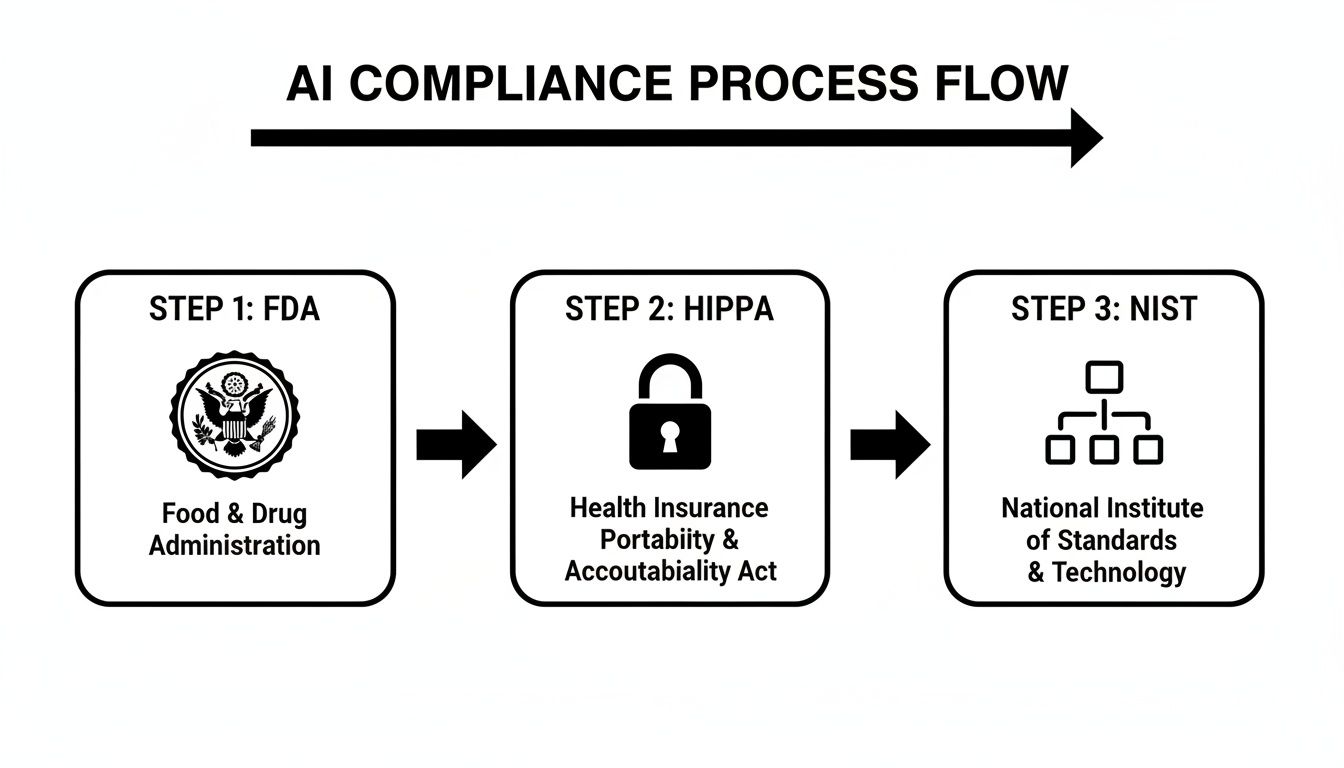

Key Frameworks You Need to Know

To build a solid, compliant AI program, you have to get comfortable with a few key frameworks. Each one tackles a different piece of the risk puzzle—from device safety and data privacy to ethical integrity. Any good AI strategy consulting engagement starts by mapping your initiatives against these critical standards.

Let's break down the three most important ones.

- FDA Guidance on Software as a Medical Device (SaMD): If your AI tool helps diagnose, treat, or prevent a disease, the FDA will likely see it as a medical device. That classification brings serious oversight. You'll need meticulous documentation on how the model was validated, how it performs, and the data it was trained on. The FDA wants to see clear proof that the tool is both safe and effective for its specific job.

- HIPAA and AI Data Handling: The Health Insurance Portability and Accountability Act (HIPAA) is the foundation of patient privacy, and it's non-negotiable. But what happens when an AI model is trained on what you think is de-identified patient data? There's still a risk of re-identification. You absolutely must conduct a thorough risk analysis to prove that patient data is protected at every single step, from training all the way to deployment.

- NIST AI Risk Management Framework: This isn't a law, but the voluntary framework from the National Institute of Standards and Technology is the gold standard playbook for managing AI risks. It gives organizations a flexible but structured way to govern, map, measure, and manage AI risks, helping you build trustworthy systems that align with ethical principles.
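The HIPAA point about re-identification can be checked, at least roughly, before a dataset is handed to a model builder. The sketch below computes k-anonymity: the size of the smallest group of records that share the same combination of quasi-identifiers, such as a 3-digit ZIP prefix, birth year, and sex. It is one common re-identification risk measure, not HIPAA's Safe Harbor or Expert Determination test, and the field names, sample records, and threshold are hypothetical.

```python
from collections import Counter
from typing import Dict, Mapping, Sequence, Tuple


def group_sizes(records: Sequence[Mapping[str, object]], quasi_ids: Sequence[str]) -> Counter:
    """Count records per unique combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_ids) for r in records)


def k_anonymity(records: Sequence[Mapping[str, object]], quasi_ids: Sequence[str]) -> int:
    """Smallest group size; k = 1 means at least one record is unique on these fields."""
    sizes = group_sizes(records, quasi_ids)
    return min(sizes.values()) if sizes else 0


def groups_below_k(records: Sequence[Mapping[str, object]], quasi_ids: Sequence[str],
                   k: int) -> Dict[Tuple[object, ...], int]:
    """Quasi-identifier combinations shared by fewer than k records."""
    return {key: n for key, n in group_sizes(records, quasi_ids).items() if n < k}


# Hypothetical "de-identified" training extract
records = [
    {"zip3": "021", "birth_year": 1960, "sex": "F", "dx": "asthma"},
    {"zip3": "021", "birth_year": 1960, "sex": "F", "dx": "copd"},
    {"zip3": "021", "birth_year": 1960, "sex": "F", "dx": "asthma"},
    {"zip3": "021", "birth_year": 1960, "sex": "M", "dx": "copd"},
    {"zip3": "946", "birth_year": 1985, "sex": "F", "dx": "asthma"},
]
qids = ["zip3", "birth_year", "sex"]

print(k_anonymity(records, qids))  # 1: some patients are unique on these three fields
print(groups_below_k(records, qids, k=2))
```

Records in the flagged groups are candidates for generalization (for example, wider age bands) or suppression before the data goes anywhere near a training pipeline.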

Translating Regulation into Action

Knowing these frameworks is one thing, but putting them into practice is where the real work begins. For instance, your governance for new internal tooling should include a mandatory checklist based on NIST principles. This forces every new AI application to pass a risk assessment before it ever gets near patient data, turning high-level guidance into a practical, day-to-day process.
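A minimal sketch of that kind of gate is below, assuming a checklist whose items are tagged with the four core functions of the NIST AI RMF (Govern, Map, Measure, Manage). The class names, item wording, tool name, and the rule that every function needs at least one completed item are hypothetical design choices, not something NIST prescribes.

```python
from dataclasses import dataclass, field
from typing import List

NIST_AI_RMF_FUNCTIONS = ("govern", "map", "measure", "manage")


@dataclass
class ChecklistItem:
    function: str        # one of NIST_AI_RMF_FUNCTIONS
    description: str
    done: bool = False


@dataclass
class RiskAssessment:
    tool_name: str
    items: List[ChecklistItem] = field(default_factory=list)

    def gaps(self) -> List[str]:
        """Open items, plus any core function the checklist does not cover at all."""
        open_items = [i.description for i in self.items if not i.done]
        covered = {i.function for i in self.items}
        uncovered = [f"no {f} items" for f in NIST_AI_RMF_FUNCTIONS if f not in covered]
        return open_items + uncovered

    def approved_for_patient_data(self) -> bool:
        # The gate: nothing touches patient data until every gap is closed
        return not self.gaps()


assessment = RiskAssessment("sepsis-alert-v2", [   # hypothetical tool
    ChecklistItem("govern", "Accountable clinical owner named", done=True),
    ChecklistItem("map", "Intended use and patient population documented", done=True),
    ChecklistItem("measure", "Subgroup performance tested on local data", done=False),
    ChecklistItem("manage", "Drift monitoring and rollback plan in place", done=True),
])

print(assessment.approved_for_patient_data())  # False
print(assessment.gaps())                        # ['Subgroup performance tested on local data']
```

Treating an uncovered function as a gap, rather than silently passing it, keeps a short checklist from looking complete just because whole categories were skipped.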

The real challenge isn’t just ticking the box on individual regulations. It's about creating a single, unified governance structure that can adapt to the legal shifts we all know are coming. Your framework needs to be a living system, not a static checklist you file away.

This is where a deep focus on compliance becomes a true competitive advantage. Our AI Automation as a Service is built with these complex requirements baked in from the start, letting you innovate with confidence. By weaving compliance directly into your development lifecycle, you can avoid expensive rework and build trust with clinicians and patients right from day one.

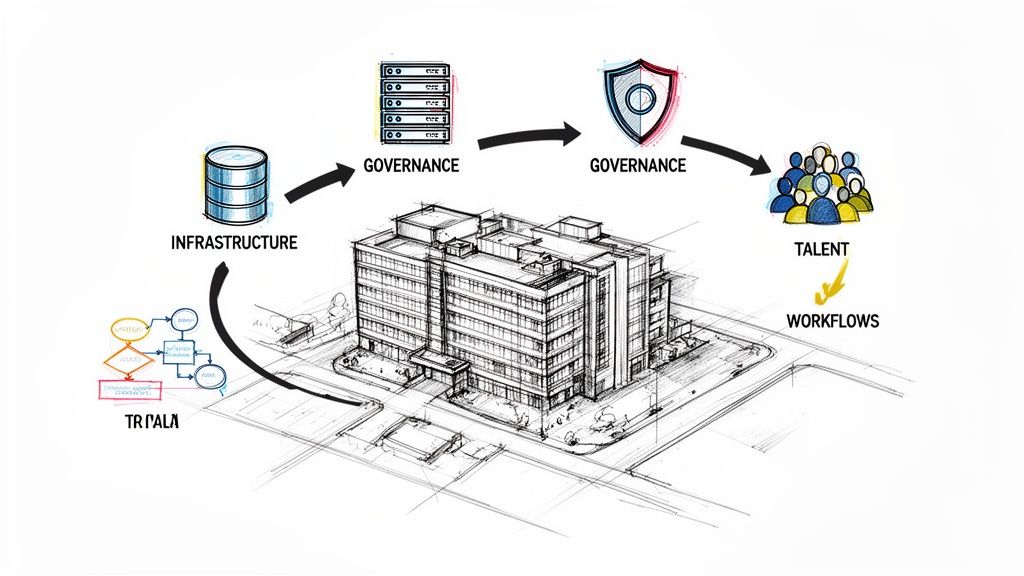

Building Your Practical AI Risk Management Framework

Moving from theory to action means creating a structured, practical playbook for managing AI risk. A solid clinical AI risk management framework isn't a static document you file away—it’s a living system that has to evolve right alongside your AI tools and the technology itself. The real goal here is to bake safety and oversight into every single step of the AI lifecycle.

This all starts with a strong foundation. The first move is to assemble a dedicated AI review board or governance committee. This isn't just an IT project; you need a cross-functional team with clinical leaders, data scientists, IT security experts, and your legal and compliance officers at the table. Their job is to evaluate, approve, and continuously monitor every AI tool to make sure it lines up with your organization's safety and ethical standards. Think of this committee as the central nervous system for your entire AI strategy.

The Risk Assessment Process From Start to Finish

With your governance team in place, you can focus on the heart of the framework: the risk assessment process. This isn't a final checkbox you tick before going live. It has to start way back in the AI requirements analysis phase. Trust me, it's far easier to design out potential harms before a single line of code is written than it is to patch problems after the fact.

A key part of building a resilient AI framework involves integrating strong development habits, like following secure code review best practices. This proactive step helps you catch and fix potential vulnerabilities at the source, making your clinical AI systems fundamentally more secure and reliable. The assessment must also quantify the real-world impact of a failure—what happens if this model gets it wrong, and how will we know?

Here's a critical point: our standards for accuracy have gotten much tougher. A few years ago, a single-purpose AI with 95% accuracy seemed pretty good. But now, with foundation models analyzing dozens of clinical findings at once, that same 5% error rate applies to every finding in every study, and it becomes a massive problem. Small false-positive rates compound quickly at scale, leading to overwhelming alert fatigue that destroys clinician trust. Today, accuracy is patient safety, and that has to be central to your risk analysis.
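The compounding effect is easy to check with a little arithmetic. Assume, for illustration, that each finding a model screens for has a 5% false-positive rate on normal studies and that errors are independent across findings (real findings are often correlated, so treat this as a rough estimate). The chance that a completely normal study still triggers at least one false alert is then 1 - (1 - 0.05)^n for n findings.

```python
def p_any_false_positive(fp_rate: float, n_findings: int) -> float:
    """Chance a truly normal study raises at least one false alert.

    Assumes every finding has the same false-positive rate and that
    errors are independent across findings (a simplifying assumption).
    """
    return 1.0 - (1.0 - fp_rate) ** n_findings


for n in (1, 5, 20, 50):
    print(f"{n:>2} findings per study: {p_any_false_positive(0.05, n):.1%} of normal studies flagged")
```

Under these assumptions, a model checking 20 findings per study would raise at least one false alarm on roughly 64% of normal studies, which is exactly the alert-fatigue scenario described above.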

This flowchart maps out the key compliance frameworks that should guide any comprehensive risk assessment.

As you can see, regulatory bodies like the FDA, privacy laws like HIPAA, and guidance from NIST all come together to form a connected pathway for deploying AI safely and responsibly.

To help you put this into practice, here is a simple checklist for evaluating new clinical AI tools before they ever touch a patient workflow.

Practical Checklist for Assessing Clinical AI Tools

| Assessment Category | Key Question to Answer | Example Mitigation Tactic |
|---|---|---|
| Data Integrity & Bias | Was the training data diverse enough to represent our patient population? | Audit the dataset for demographic gaps; test the model's performance across different patient subgroups. |
| Clinical Safety | What is the clinical consequence of a false positive or false negative? | Implement a "human-in-the-loop" workflow where a clinician must confirm high-stakes AI recommendations. |
| Model Performance | How will we know if the model's performance starts to degrade over time? | Set up automated alerts for performance drift and schedule regular model re-validation against new data. |
| Cybersecurity | Can an attacker manipulate the model's inputs to generate a malicious output? | Conduct adversarial testing (e.g., "red teaming") to identify and patch vulnerabilities before deployment. |
| User Trust & Usability | Is it clear to clinicians when they are interacting with an AI recommendation? | Design the user interface with clear visual cues and provide transparent "explainability" features. |

This checklist is a starting point, designed to spark the right conversations and ensure you cover the most critical risk domains from day one.
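For the "Data Integrity & Bias" row, a first-pass subgroup audit can be as simple as computing sensitivity (the share of true positive cases the model catches) separately for each patient subgroup and flagging groups that trail the best-performing one. This sketch assumes binary labels, uses hypothetical group names and data, and applies a 5-point tolerance purely for illustration; a real audit would also look at specificity, calibration, and confidence intervals for small groups.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def sensitivity_by_subgroup(rows: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """rows are (subgroup, y_true, y_pred) with binary labels."""
    positives: Dict[str, int] = defaultdict(int)
    detected: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in rows:
        if y_true == 1:
            positives[group] += 1
            detected[group] += int(y_pred == 1)
    return {g: detected[g] / positives[g] for g in positives}


def lagging_subgroups(sens: Dict[str, float], max_gap: float = 0.05) -> List[str]:
    """Subgroups whose sensitivity trails the best subgroup by more than max_gap."""
    best = max(sens.values())
    return sorted(g for g, s in sens.items() if best - s > max_gap)


# Hypothetical validation results for two subgroups
rows = (
    [("group_a", 1, 1)] * 9 + [("group_a", 1, 0)] * 1
    + [("group_b", 1, 1)] * 7 + [("group_b", 1, 0)] * 3
    + [("group_b", 0, 0)] * 5   # negatives don't affect sensitivity
)
sens = sensitivity_by_subgroup(rows)
print(sens)                     # {'group_a': 0.9, 'group_b': 0.7}
print(lagging_subgroups(sens))  # ['group_b']
```

Comparing against the best subgroup, rather than the overall average, keeps a large majority group from masking a gap in a smaller one.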

Continuous Monitoring and Key Performance Indicators

A framework is only as good as its ability to track what's happening in the real world. Once an AI tool goes live, you have to monitor its performance relentlessly. This is where Key Performance Indicators (KPIs) become your best friend.

A "set it and forget it" approach to clinical AI is a recipe for failure. The most dangerous risks aren't the ones you catch at launch, but the ones that creep in silently over time through model drift and changing clinical realities.

Your monitoring dashboard should be a mix of technical and clinical metrics:

- Model Accuracy Trends: Is the model holding steady, improving, or getting worse over time when compared to the ground truth?

- Drift Detection Alerts: Set up automated flags that trigger when the model's predictions start to stray too far from its original training baseline.

- Frequency of User Overrides: A high rate of clinicians ignoring or changing the AI’s suggestions is a massive red flag signaling poor performance or a lack of trust.

- Adverse Event Reporting: You need a clear, no-blame channel for clinicians to report any suspected AI-related errors or near misses.
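Two of the dashboard metrics above, override frequency and accuracy against ground truth, can be rolled up per reporting window with very little code. The sketch assumes each case records the AI's suggestion, the clinician's final decision, and a later-confirmed ground truth; the `Case` fields, the window structure, and the alert thresholds (20% overrides, a 5-point accuracy drop from the first window) are hypothetical and would need to be set by your governance committee.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Case:
    ai_suggestion: int
    clinician_decision: int
    ground_truth: int    # confirmed later, e.g. by pathology or follow-up


def window_kpis(cases: List[Case]) -> Dict[str, float]:
    if not cases:
        raise ValueError("empty reporting window")
    n = len(cases)
    overrides = sum(c.clinician_decision != c.ai_suggestion for c in cases)
    correct = sum(c.ai_suggestion == c.ground_truth for c in cases)
    return {"override_rate": overrides / n, "ai_accuracy": correct / n}


def kpi_alerts(windows: List[Dict[str, float]],
               max_override_rate: float = 0.20,
               max_accuracy_drop: float = 0.05) -> List[str]:
    """Compare the latest window with the first (baseline) window."""
    baseline, latest = windows[0], windows[-1]
    alerts = []
    if latest["override_rate"] > max_override_rate:
        alerts.append(f"override rate {latest['override_rate']:.0%} above {max_override_rate:.0%}")
    drop = baseline["ai_accuracy"] - latest["ai_accuracy"]
    if drop > max_accuracy_drop:
        alerts.append(f"accuracy down {drop:.0%} from baseline")
    return alerts


week_1 = [Case(1, 1, 1)] * 10
week_6 = [Case(1, 1, 1)] * 7 + [Case(1, 0, 0)] * 3
print(kpi_alerts([window_kpis(week_1), window_kpis(week_6)]))
# ['override rate 30% above 20%', 'accuracy down 30% from baseline']
```

Tracking ground truth alongside overrides matters: a rising override rate alone cannot tell you whether the AI or the clinicians are the ones getting it wrong.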

These essential risk management steps are built directly into our implementation support methodology. By weaving monitoring and governance into the development lifecycle from the very beginning, you create a system that is resilient by design, keeping patient safety exactly where it should be: front and center.

Your Path to Safe and Effective Clinical AI

Let's be clear about one thing: effective clinical AI risk management isn't a roadblock to innovation. It's the engine that drives it forward, safely.

A proactive, well-structured approach is the only way to protect patients, safeguard your organization, and truly unlock what AI can do for healthcare. This goes way beyond just avoiding penalties. It's about building a foundation of trust so your clinicians can embrace these powerful new tools with total confidence.

An Executive Checklist for Building Resilience

To wrap things up, here’s a quick-hit checklist of the essential actions leaders must take to build a resilient and trustworthy AI program. Consider these your non-negotiables.

- Establish a Cross-Functional Governance Committee: Your very first move? Assemble a dedicated AI review board. This team absolutely must include leaders from clinical, IT security, legal, and data science to get a 360-degree view of every project.

- Mandate a Pre-Deployment Risk Assessment: No AI tool should ever touch a clinical workflow without a formal risk assessment, ideally based on a solid framework like the one from NIST. This has to include rigorous testing for algorithmic bias and any data privacy weak spots.

- Develop Clear Usage Policies: Create and communicate explicit guidelines on which AI tools are approved and how they can be used. This is your number one defense against the growing problem of "Shadow AI," ensuring all innovation happens within safe, monitored channels.

- Implement Continuous Performance Monitoring: Once an AI goes live, the job isn't done. You have to continuously track key metrics like model drift, accuracy trends, and clinician override rates. This is how you catch small problems before they can grow and impact patient care.

The journey is complex, but you don't have to navigate it alone. Partnering with experts who genuinely understand the unique intersection of technology and patient care is critical for success. For instance, tools like our Clinic AI Assistant are designed from the ground up with these safety principles in mind.

Connect with our expert team to learn how our deep experience in custom healthcare software development and AI strategy can build your foundation for safe, scalable innovation.

Frequently Asked Questions

When it comes to managing the risks of AI in a clinical setting, a lot of tough questions come up around accountability, how to actually get started, and what success even looks like. Let's tackle some of the most common ones we hear from healthcare leaders.

Where Do We Even Begin with Managing AI Risks?

Your very first move should be to pull together an AI governance committee. This can't be just an IT project; you need a mix of experts in the room—clinicians, data scientists, IT security pros, and your legal and compliance officers.

Once assembled, their first task is simple but critical: create a complete inventory of every AI tool currently in use. This includes the officially sanctioned systems and, just as importantly, any "shadow AI" tools that staff might be using on their own. This map gives you a baseline for your real-world risk exposure and is the foundation for any formal management plan. After that, they can focus on building a solid AI requirements analysis process for any new tools.
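Even a spreadsheet-grade inventory is enough to start. As a hypothetical sketch, each tool can be recorded with whether it is sanctioned, whether it touches protected health information (PHI), and whether it has a completed risk assessment; simple rules then sort tools by urgency. The fields, tool names, bucket names, and triage rules below are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AITool:
    name: str
    sanctioned: bool      # approved by the governance committee
    touches_phi: bool     # processes protected health information
    risk_assessed: bool   # has a completed pre-deployment risk assessment


def triage(inventory: List[AITool]) -> Dict[str, List[str]]:
    return {
        # Unapproved tools handling patient data: the highest-risk shadow AI
        "stop_or_fast_track": [t.name for t in inventory if not t.sanctioned and t.touches_phi],
        # Unapproved tools without PHI: still need review, lower urgency
        "review": [t.name for t in inventory if not t.sanctioned and not t.touches_phi],
        # Approved tools that skipped a formal assessment
        "assess": [t.name for t in inventory if t.sanctioned and not t.risk_assessed],
    }


inventory = [
    AITool("radiology triage model", sanctioned=True, touches_phi=True, risk_assessed=True),
    AITool("free online note summarizer", sanctioned=False, touches_phi=True, risk_assessed=False),
    AITool("slide-deck image generator", sanctioned=False, touches_phi=False, risk_assessed=False),
    AITool("scheduling chatbot", sanctioned=True, touches_phi=True, risk_assessed=False),
]
for bucket, names in triage(inventory).items():
    print(bucket, names)
```

The "stop_or_fast_track" bucket reflects the idea that a useful shadow tool is often best handled by routing it into formal review rather than simply banning it.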

How Do We Know if Our AI Risk Program Is Actually Working?

You'll need a blend of metrics that look at the technology, the clinical workflow, and your overall operations. Think of it like a balanced scorecard for AI safety.

Here are a few Key Performance Indicators (KPIs) to track:

- Model Performance Drift: Are the AI's predictions getting less accurate over time?

- Clinician Override Rates: How often are doctors ignoring the AI's suggestions? A high rate could mean they don't trust it or it's not accurate enough for real-world cases.

- Incident Logs: Track every AI-related error or even near-misses.

Don't forget the human element. Surveying your clinical staff about their confidence in specific AI tools can give you invaluable feedback that raw numbers can't. The goal is to build a dashboard that shows both the technical health of your AI and how it's truly impacting patient care.

When an AI Gets It Wrong, Who’s on the Hook?

This is the million-dollar question, and the legal and ethical landscape is still taking shape. As it stands today, accountability is often a chain of shared responsibility.

The clinician is typically treated as the final decision-maker, since most clinical AI is positioned as a decision-support tool rather than an autonomous one. However, the healthcare organization itself carries a massive responsibility to ensure that any AI it deploys has been properly vetted and validated, and is continuously monitored for safety.

And let's not forget the AI vendor, which is responsible for the model's fundamental integrity. This is precisely why a strong clinical AI risk management program is non-negotiable. It protects your organization by creating a clear, documented trail of your vetting, monitoring, and training, showing that you've done your due diligence.

Ready to build a resilient AI strategy that prioritizes patient safety? Ekipa AI delivers expert guidance and a Custom AI Strategy report to help you navigate the complexities of clinical AI risk management with confidence. Connect with our expert team to learn more.