CEO's Guide to Ethical AI in Clinical Environments and Responsible AI Leadership

A concise playbook for CEOs on ethical AI in clinical environments, covering bias mitigation, patient safety, and governance.

For healthcare leaders, bringing AI into the clinic isn't just an option anymore—it's a fundamental shift in how we deliver patient care. But this new frontier is loaded with complex ethical landmines, and navigating it requires a strategy that goes far beyond just ticking compliance boxes.

When we talk about ethical AI in clinical environments, we're talking about weaving principles of fairness, transparency, and accountability into the very fabric of your AI strategy. Getting this right is how you build patient trust, drive real clinical excellence, and ultimately, secure a lasting competitive edge.

The New Strategic Imperative for Ethical AI in Healthcare

AI is finding its way into clinical settings at a staggering pace. In fact, the healthcare AI market is on track to hit $102.7 billion by 2028. This rush to adopt the technology brings a critical challenge to the forefront: without a solid ethical foundation, even the most sophisticated AI can become a huge liability, putting patient safety at risk and shattering the trust we work so hard to build.

For CEOs and CTOs, the key is to stop seeing ethical AI as a regulatory hurdle and start seeing it as a core strategic advantage. That's the only way to win in the long run.

This requires a complete mindset shift, moving from reactive damage control to proactive design. Instead of scrambling to fix ethical problems after they've already caused harm, the smartest organizations are building ethics into the DNA of their AI from day one. It’s a deep commitment to creating Healthcare AI Services that are not just technically brilliant, but morally and ethically sound.

Why Ethics Equals Excellence in Clinical AI

Think of an ethical framework as the foundation for trustworthy tech. When your clinicians and patients actually believe an AI system works fairly and transparently, they’re far more likely to use it. That’s when the technology can finally deliver on its promise to improve outcomes.

Here’s what that looks like in practice:

- Enhanced Patient Trust: When clinicians can understand why an AI made a certain recommendation, it builds confidence. This kind of transparency safeguards the critical doctor-patient relationship, rather than undermining it.
- Improved Clinical Outcomes: By actively working to root out bias from your algorithms, you can ensure your diagnostic and treatment tools serve every patient population equally. This is a direct way to reduce health disparities.
- Reduced Organizational Risk: A strong governance structure is your best defense against the legal and reputational fallout from biased algorithms, data privacy breaches, or "black box" AI systems that can't be explained.

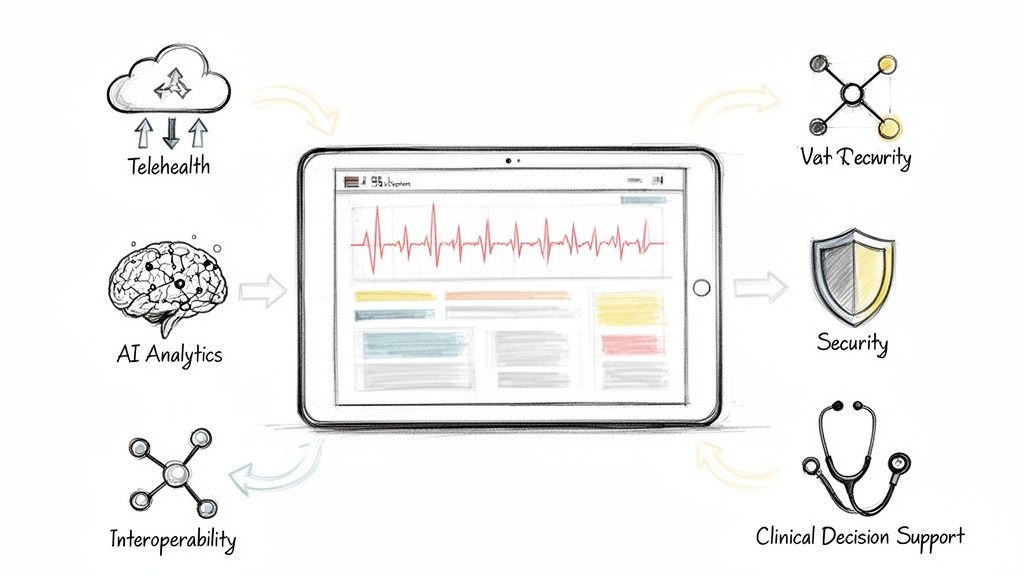

As organizations start working with more advanced models, understanding platforms like Azure OpenAI for clinical solutions is vital for building and deploying these tools responsibly. This proactive stance ensures that your innovation always aligns with your core mission: patient care. The goal, after all, is to create a clinical environment where technology truly empowers providers and protects patients, turning powerful AI into a genuine force for good.

Decoding the Principles of Trustworthy Clinical AI

To bring ethical AI into clinical environments, we have to move past buzzwords and get real about the principles that make a technology trustworthy. Think of it as a Hippocratic Oath for algorithms. These aren't just nice-to-haves; they are fundamental requirements for any tool that touches patient care. Without them, even the most technically impressive AI can do more harm than good.

The entire framework really boils down to four core pillars: fairness, transparency, accountability, and privacy. Getting these right is the first, most critical step for any leader making high-stakes decisions about which AI tools to bring into their healthcare setting.

To put these ideas into a practical context, let's break down what each pillar means for your organization. The following table maps these ethical concepts to their real-world clinical implications and poses the key strategic questions you should be asking.

The Four Pillars of Ethical AI in Clinical Practice

| Ethical Pillar | Clinical Implication | Key Question for Your AI Strategy |
|---|---|---|
| Fairness | The AI system provides equitable recommendations and diagnoses for all patient demographics, avoiding the amplification of existing health disparities. | How are we actively auditing our data and models for hidden biases to ensure they don't disadvantage certain patient groups? |
| Transparency | Clinicians can understand why an AI model made a specific recommendation, allowing them to validate its reasoning and build trust in the tool. | Can our doctors easily interpret the AI's output, or is it a "black box" that demands blind faith? |
| Accountability | There is a clear line of responsibility when an AI-assisted decision results in a negative outcome, involving the institution, clinician, and developer. | Who is responsible when things go wrong? Do we have a clear governance framework that defines roles and oversight? |
| Privacy | Sensitive patient health information used to train and operate the AI is rigorously protected, maintaining patient trust and regulatory compliance. | Are our data handling, anonymization, and security protocols strong enough to protect patient confidentiality at every stage? |

By internalizing these pillars, you're not just managing risk—you're building a foundation for AI that genuinely enhances patient care and earns the trust of both your clinicians and the communities you serve.

Fairness: Preventing Digital Health Disparities

In AI, fairness means making absolutely sure an algorithm doesn’t perpetuate or, worse, amplify existing societal biases. In a clinical setting, getting this wrong can have life-or-death consequences. Imagine an AI trained mostly on data from one demographic; it could easily misdiagnose a serious condition in an underrepresented patient, leading to disastrously delayed treatment.

Achieving fairness isn’t a passive exercise. It demands that you actively seek out and curate diverse datasets, run rigorous bias audits, and constantly monitor how the model performs across all your patient populations. The ultimate goal is simple: an AI that helps deliver equitable care to everyone who walks through your doors, regardless of their background.
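To make the idea of a bias audit concrete, here is a minimal sketch of what your data team might run during validation. It assumes you have labeled validation cases tagged with a demographic group; the group names, the sample data, and the 5-point gap threshold are illustrative assumptions, not clinical standards.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return per-group sensitivity (true-positive rate).

    Each record is a (group, actual, predicted) tuple, where `actual` and
    `predicted` are booleans (True = condition present / flagged by the model).
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, actual, predicted in records:
        if actual:
            actual_pos[group] += 1
            if predicted:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def flag_disparities(rates, max_gap=0.05):
    """List groups whose rate trails the best-performing group by more than max_gap."""
    best = max(rates.values())
    return sorted(g for g, rate in rates.items() if best - rate > max_gap)


# Hypothetical validation results: the model misses far more true cases in group_b.
records = (
    [("group_a", True, True)] * 9 + [("group_a", True, False)] * 1
    + [("group_b", True, True)] * 6 + [("group_b", True, False)] * 4
    + [("group_a", False, False)] * 10 + [("group_b", False, False)] * 10
)

rates = sensitivity_by_group(records)
print(rates)
print(flag_disparities(rates))
```

A single overall accuracy score would hide this gap, because the model looks strong on average. Sensitivity is only one possible metric; which metric and threshold matter most is a clinical judgment for each tool.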

Transparency: The End of the Black Box

Transparency, often called explainability, is all about tackling the "black box" problem. You can't expect a clinician to trust and act on an AI's recommendation if they have no idea how it got there. A doctor must be able to ask why the algorithm flagged a tumor as malignant or suggested a specific medication.

True transparency empowers clinicians, allowing them to use AI as a co-pilot rather than a mysterious oracle. It turns a black box into a glass box, fostering the trust necessary for safe and effective integration into clinical workflows.

This isn't just about making people feel comfortable. It's essential for clinical validation, for figuring out what went wrong when there's an error, and for building the confidence needed for your teams to actually use the tool.

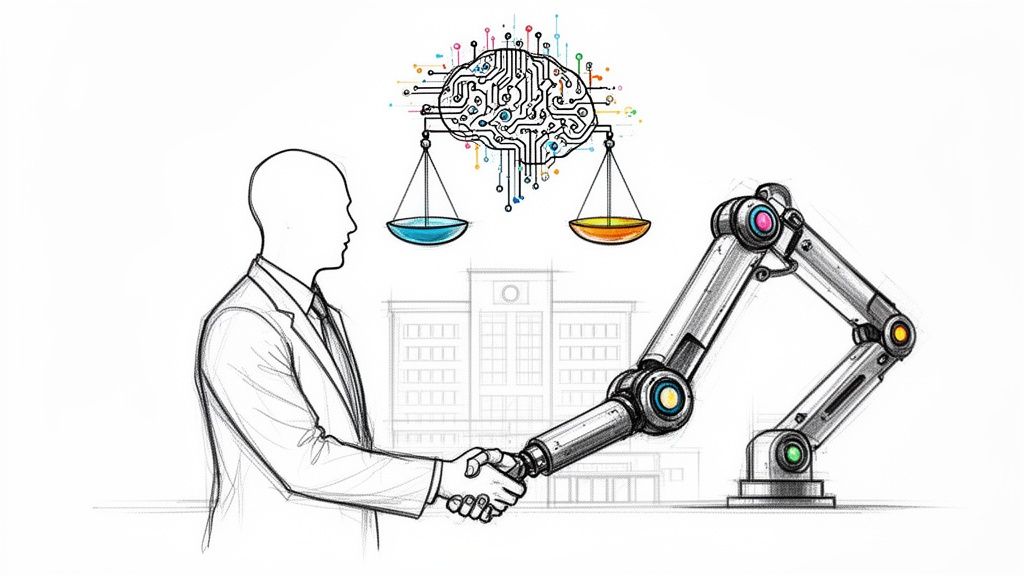

Accountability: Defining Responsibility in an Automated Age

When a decision guided by AI leads to a bad outcome, who’s on the hook? Accountability in clinical AI is about establishing a clear, unambiguous chain of responsibility that includes the healthcare institution, the clinician, and the AI developer. It means creating a system where mistakes can be traced, understood, and corrected.

This requires a few key actions:

- Robust Governance: You need clear, written policies for how you buy, deploy, and monitor every AI system in your hospital.
- Human Oversight: A qualified clinician must always be in the loop, ready to use their professional judgment to either confirm or override what the AI suggests.
- Vendor Due Diligence: You have to hold your technology partners accountable for the safety and transparency of their products. This involves rigorous validation and quality assurance, often using disciplined approaches like test automation in healthcare, to ensure every tool is reliable and safe.
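One way to make mistakes traceable is to log every AI-assisted decision alongside what the clinician actually did with it. The sketch below is an assumption about how such a record could look, not a prescribed schema: the field names, the "accepted"/"overridden" vocabulary, and the sample cases are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    case_id: str
    model_version: str
    ai_recommendation: str
    clinician_id: str
    clinician_action: str  # "accepted" or "overridden"
    rationale: str
    recorded_at: str


def record_decision(log, case_id, model_version, ai_recommendation,
                    clinician_id, clinician_action, rationale=""):
    """Append one AI-assisted decision to the log as a JSON line."""
    if clinician_action not in ("accepted", "overridden"):
        raise ValueError("clinician_action must be 'accepted' or 'overridden'")
    if clinician_action == "overridden" and not rationale:
        raise ValueError("an override needs a documented rationale")
    entry = DecisionRecord(
        case_id, model_version, ai_recommendation, clinician_id,
        clinician_action, rationale,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(entry)))
    return entry


def override_rate(log):
    """Share of logged decisions where the clinician overrode the AI."""
    actions = [json.loads(line)["clinician_action"] for line in log]
    return actions.count("overridden") / len(actions) if actions else 0.0


# Hypothetical usage with an in-memory list standing in for durable storage.
audit_log = []
record_decision(audit_log, "case-001", "sepsis-risk-1.2", "escalate",
                "dr-17", "accepted")
record_decision(audit_log, "case-002", "sepsis-risk-1.2", "escalate",
                "dr-17", "overridden",
                rationale="Vitals explained by recent surgery")
print(override_rate(audit_log))
```

Recording the model version with each decision is the design choice that matters here: when something goes wrong, you can tell which release produced the recommendation and whether a human reviewed it.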

Privacy: Safeguarding Sensitive Patient Data

Finally, and most fundamentally, there is privacy. Clinical AI models are hungry for data: vast amounts of incredibly sensitive patient health information. Protecting this data isn’t just a legal requirement; it’s a core ethical duty that underpins the sacred trust between a patient and a provider.

This means implementing ironclad data anonymization techniques, secure storage protocols, and transparent consent processes so patients know how their information is being used. As a leader, you have to ensure your AI strategy reinforces your commitment to patient confidentiality, rather than weakening it. Embedding these four principles is how you build an AI ecosystem that is not only powerful but also profoundly responsible.
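As a rough illustration of one building block, the sketch below replaces a patient identifier with a keyed hash and drops direct identifiers before data reaches a training pipeline. Strictly speaking this is pseudonymization rather than full anonymization, since fields like age or diagnosis can still help re-identify someone. The field names and the list of identifiers are assumptions made for the example.

```python
import hashlib
import hmac

# Hypothetical set of direct identifiers to strip; a real list would be longer.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "email"}


def pseudonymize(record, secret_key, id_field="patient_id"):
    """Return a copy of `record` with direct identifiers removed and the
    patient ID replaced by a keyed SHA-256 token (HMAC)."""
    token = hmac.new(
        secret_key, str(record[id_field]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    cleaned = {
        key: value for key, value in record.items()
        if key != id_field and key not in DIRECT_IDENTIFIERS
    }
    cleaned["patient_token"] = token
    return cleaned


# Hypothetical record; in practice the key would come from a secrets manager.
key = b"replace-with-a-managed-secret"
raw = {"patient_id": "MRN-00123", "name": "Jane Doe", "phone": "555-0100",
       "age": 54, "diagnosis_code": "E11.9"}
safe = pseudonymize(raw, key)
print(sorted(safe))
```

Using a keyed hash means the same patient always maps to the same token, so records can still be linked, while anyone without the key cannot recompute the token from a known patient ID.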

Navigating the Global Regulatory Maze for Clinical AI

The rulebook for AI in healthcare is being written as we speak, and keeping up isn't just about ticking compliance boxes—it's a core business strategy. For any leader in the clinical space, getting a handle on the global regulatory landscape is non-negotiable. Think of these regulations less as hurdles and more as a roadmap for building healthcare software solutions that people can actually trust.

Get it wrong, and you're looking at more than just steep fines. You risk damaging your reputation and facing the nightmare of having to re-engineer entire systems from the ground up.

The only sustainable path forward is proactive compliance. This means weaving regulatory awareness into your AI requirements analysis from day one, not tacking it on as an afterthought. This approach not only saves you from expensive rework but also cements your reputation as a leader in responsible innovation.

The EU AI Act: Setting the Pace for Clinical AI Worldwide

Right now, one of the most important pieces of legislation to watch is the European Union’s AI Act. Even if you don't operate in the EU, its influence is rippling across the globe, setting a benchmark that will almost certainly shape future regulations everywhere.

The Act uses a risk-based approach, and it's no surprise that most clinical AI tools, like diagnostic algorithms or systems that personalize treatment plans, will typically be classified as 'high-risk'.

This isn't just a label; it's a call to action. It triggers a whole set of tough obligations. High-risk systems have to prove they meet strict standards for:

- Data Governance: Is the data you used to train the model high-quality, relevant, and as free from bias as possible?
- Technical Documentation: Can you produce detailed records of how the system was built, validated, and how it’s meant to be used?
- Human Oversight: Have you designed the system so a human can meaningfully intervene and have the final say?
- Transparency and Explainability: Can you clearly explain to clinicians and patients what the AI does, what its limits are, and the basic logic behind its outputs?

Getting to grips with these requirements is the first step in aligning your AI strategy consulting with global best practices. It's how you ensure your tools are built on a foundation of safety and trust from the very start.

Turning Legal Text into a Business Playbook

The EU AI Act isn't just a legal document; it's a timeline that every healthcare provider needs to have on their wall. The Act entered into force on August 1, 2024. Under the timeline it sets out, the prohibitions on unacceptable AI practices kicked in just six months later, on February 2, 2025. Obligations for general-purpose AI models followed at the 12-month mark, on August 2, 2025, and most of the remaining provisions apply from August 2, 2026.

The big one for healthcare, the full obligations for high-risk AI systems built into regulated products such as medical devices, comes 36 months after entry into force, on August 2, 2027. This risk-based framework demands serious, rigorous assessments to protect patient rights, which has to be a top priority for any healthcare organization. As we cover in our guide to VeriFAI, getting ready for these deadlines is absolutely critical. Learn more about the evolving ethical and regulatory AI frameworks in Europe.

For healthcare leaders, these regulations aren't a burden. They're a blueprint for mitigating risk, building patient trust, and creating AI that is both powerful and responsible.

Sticking your head in the sand is not an option. The fines for non-compliance are eye-watering, reaching up to €35 million or 7% of global annual turnover, whichever is higher, for the most serious violations. But the true cost is the loss of trust with patients, clinicians, and partners.

By embracing these standards proactively, you turn a potential liability into a massive competitive advantage. You send a clear signal to the market that you are committed to the highest standards of patient safety and innovation—the cornerstones of any successful and sustainable AI journey in healthcare.

Confronting Algorithmic Bias to Ensure Health Equity

Algorithmic bias isn't some abstract, far-off risk. It's a real and present danger to patient safety and health equity, and it’s one of the biggest ethical hurdles we face in clinical AI. Simply put, bias is what happens when an AI system produces skewed results because of faulty assumptions baked into its learning process. Think of it as a dangerous blind spot that can twist a promising diagnostic tool into a source of patient harm and a massive liability for your organization.

Where does this blind spot come from? Usually, it's the data. If you train an AI model on a dataset that mostly reflects one demographic, its performance will nosedive when it encounters anyone outside that group. This isn’t a small statistical hiccup; it’s a critical failure. It leads directly to misdiagnoses, delayed care, and worse outcomes, especially for women and ethnic minorities. For any leader in healthcare, ignoring this is the same as accepting a two-tiered system of care, where technology works for some patients but fails others.

The Real-World Impact of Flawed Data

The line between flawed data and patient harm is terrifyingly direct. Imagine an AI built to spot skin cancer. If it was trained almost exclusively on images of light-skinned patients, it could easily miss a malignant melanoma on a person with darker skin. In that scenario, a delayed diagnosis could be a death sentence. Or consider a tool that predicts cardiac risk. If it learned from mostly male data, it might completely misjudge the risk for a female patient, whose symptoms often show up differently.

These aren't just hypotheticals. We’re seeing this play out right now, with many real-world use cases highlighting the risks. One landmark analysis revealed that machine learning models trained on predominantly white male data were 15-20% less accurate for minorities and women. In another study, 62% of clinicians pointed to bias and fairness as their top concerns, specifically calling out the lack of diversity in training data. Discover more insights into how bias impacts AI in clinical settings. The message is crystal clear: biased AI doesn’t just mirror the inequities that already exist in healthcare; it makes them worse.

A Playbook for Mitigating Bias and Promoting Fairness

Tackling algorithmic bias requires more than just awareness; it demands a hands-on, multi-pronged strategy. You have to build a framework to actively find, measure, and fix bias at every single stage of the AI lifecycle. This is a non-negotiable part of any responsible AI strategy.

Here’s a practical playbook for building fairness directly into your clinical AI systems:

- Conduct Rigorous Bias Audits. Before you even think about deploying a new AI model, put it through its paces. Audit its performance across every demographic you serve—age, gender, race, and ethnicity. Don't just look at the overall accuracy score; dig into how it performs for each specific subgroup to find those hidden, dangerous disparities.
- Demand Vendor Transparency. When you're buying a third-party AI tool, refuse to accept a "black box." Your vendor must provide detailed documentation on their training data, how they validated their model, and its performance metrics across diverse populations. Make fairness a deal-breaker in your procurement process. For instance, our guide on Diagnoo emphasizes just how critical explainability is for diagnostic tools.
- Invest in Diverse and Representative Data. You have to be proactive about diversifying your datasets. This might mean partnering with other hospitals, joining data-sharing consortiums, or using advanced methods like federated learning to train models on decentralized data without compromising patient privacy.
- Establish Continuous Monitoring Protocols. Fixing bias isn't a one-and-done job. You need to monitor your models in real time, even after they're deployed. Patient populations shift and clinical guidelines change. Your AI has to adapt without quietly becoming biased again.

By taking these steps, you can shift from being reactive to being proactive. This approach doesn't just solve a problem; it turns the challenge of bias into an opportunity to build more equitable, effective, and trustworthy healthcare. The goal of achieving health equity isn't just a lofty ideal—it's a tangible outcome for organizations willing to do the work.
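The continuous monitoring step in the playbook above can be sketched as a rolling check of each subgroup's recent accuracy against the level measured at validation. This is one possible approach under stated assumptions: confirmed outcomes eventually become available for each prediction, and the window size, minimum sample count, and 5-point drop threshold are placeholders your committee would set.

```python
from collections import defaultdict, deque


class SubgroupMonitor:
    """Track recent accuracy per subgroup and flag drops below a baseline."""

    def __init__(self, baseline, window=200, max_drop=0.05, min_samples=30):
        self.baseline = baseline        # accuracy per group at validation time
        self.max_drop = max_drop        # tolerated drop before alerting
        self.min_samples = min_samples  # skip groups with too little recent data
        self._outcomes = defaultdict(lambda: deque(maxlen=window))

    def observe(self, group, correct):
        """Record whether one prediction was later confirmed correct."""
        self._outcomes[group].append(bool(correct))

    def alerts(self):
        """Return {group: recent_accuracy} for groups that fell too far."""
        flagged = {}
        for group, outcomes in self._outcomes.items():
            if group not in self.baseline or len(outcomes) < self.min_samples:
                continue
            accuracy = sum(outcomes) / len(outcomes)
            if self.baseline[group] - accuracy > self.max_drop:
                flagged[group] = round(accuracy, 3)
        return flagged


# Hypothetical post-deployment data: group_b's accuracy has slipped.
monitor = SubgroupMonitor(baseline={"group_a": 0.90, "group_b": 0.88})
for outcome in [True] * 45 + [False] * 5:
    monitor.observe("group_a", outcome)
for outcome in [True] * 35 + [False] * 15:
    monitor.observe("group_b", outcome)
print(monitor.alerts())
```

The rolling window keeps the check focused on recent cases, so a model that was fair at launch but drifts as the patient population changes still gets caught.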

Building a Governance Framework That Protects Patients and Your Organization

Without a strong governance plan, even the most promising AI tools can quickly become liabilities. An ungoverned, "wild west" approach to adopting AI is a direct path to significant risks, from patient harm to steep compliance penalties. You need a practical blueprint for oversight to turn powerful technology into a trustworthy, scalable asset for your organization.

A huge driver behind this is the growing threat of "Shadow AI": unvetted tools used by staff that create massive blind spots. In the rush for productivity, especially with ongoing staffing shortages, clinicians are bypassing official channels. Recent reports show a shocking 70-80% of healthcare staff have adopted unvetted GenAI apps on their own. This trend is sparking real fears of "deskilling" and over-reliance, a dangerous scenario where clinicians lose the habit of independent judgment and start trusting authoritative-sounding but completely wrong AI outputs, putting patient safety on the line. For more on this, check out these expert insights on healthcare AI trends.

Establishing a Multidisciplinary Ethics Committee

Your first move should be to form a multidisciplinary AI ethics committee. This can't just be an IT project; it needs to bring together a wide range of experts from across your organization to get a complete picture of how AI will impact everything you do.

Think of this committee as the central command for your entire AI strategy. Its core job is to make sure every single AI initiative, from buying a new tool to deploying it in a clinic, lines up with your organization's ethical principles and patient safety standards.

Your team should include:

- Clinical Leaders: The doctors and nurses on the front lines who know the real-world workflows and can judge if an AI tool is clinically sound.
- Data Scientists and Engineers: The tech experts who can pop the hood on an algorithm to inspect its code, data sources, and potential for bias.
- Legal and Compliance Officers: The pros who can navigate the maze of regulations and make sure you’re following privacy laws like HIPAA.
- Ethicists and Patient Advocates: The essential voices who represent patient interests and keep everyone focused on the mission of providing care.

This cross-functional group is your first line of defense against irresponsible AI adoption.

Defining Clear Procurement and Development Policies

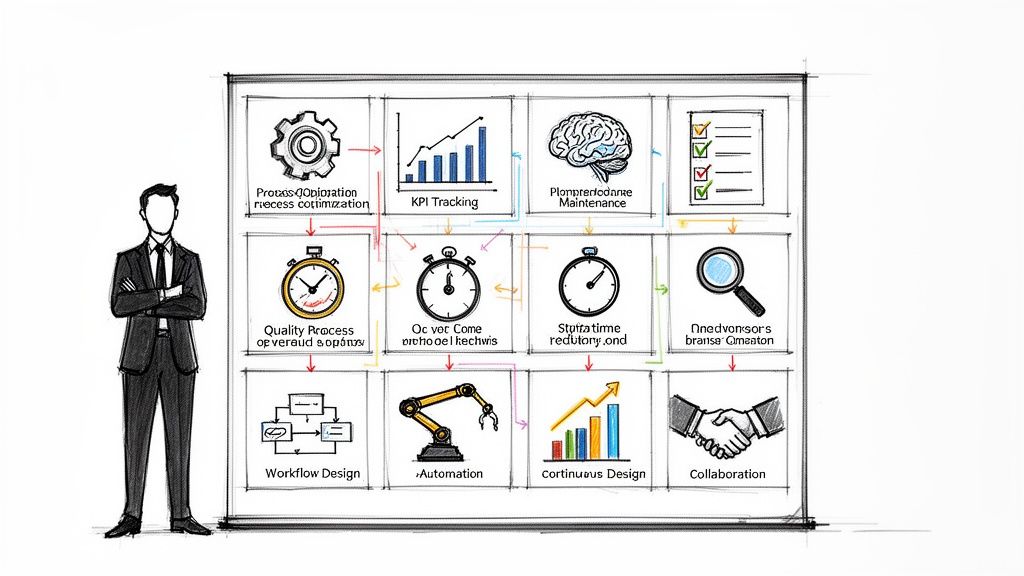

Once the committee is in place, its next job is to create clear, enforceable policies for buying and building AI systems. A chaotic, tool-by-tool approach is just a recipe for disaster. What you need is a structured process that moves your organization from scattered experiments to a well-managed ecosystem of validated AI.

Whether you're building your own tools internally or partnering with a third-party vendor, your policies must require a tough validation process. This means embedding ethical checks at every stage, from the initial idea to post-deployment monitoring. The goal is simple: no AI tool gets near a patient until it has been thoroughly vetted.
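A vetting policy like this is easier to enforce when it is written down as an explicit gate. The sketch below is a hypothetical illustration: the check names are examples of what a committee might require, not a standard list, and the tool name is made up.

```python
from dataclasses import dataclass, field

# Hypothetical checks a committee might require before clinical use.
REQUIRED_CHECKS = (
    "clinical_validation",
    "bias_audit",
    "privacy_review",
    "vendor_documentation",
    "monitoring_plan",
)


@dataclass
class AIToolReview:
    tool_name: str
    completed_checks: set = field(default_factory=set)

    def missing_checks(self):
        """Return required checks that have not been signed off, in policy order."""
        return [c for c in REQUIRED_CHECKS if c not in self.completed_checks]

    def approved_for_clinical_use(self):
        """A tool is approved only when every required check is complete."""
        return not self.missing_checks()


# Hypothetical review in progress.
review = AIToolReview("triage-assistant", {"clinical_validation", "privacy_review"})
print(review.approved_for_clinical_use())
print(review.missing_checks())
```

The point of the design is that approval is all-or-nothing: a tool with an outstanding bias audit is simply not approved, however strong its other results look.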

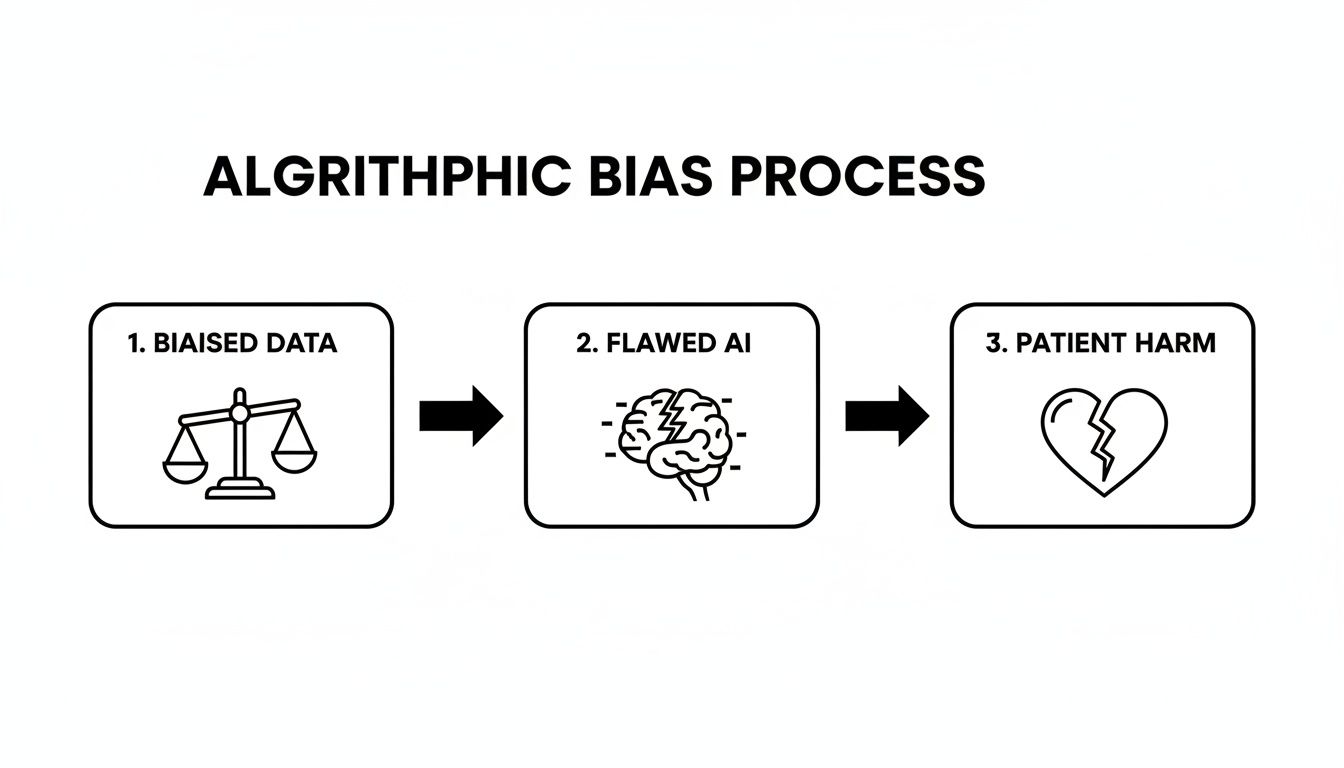

The flowchart above shows just how easily small problems, like biased data, can snowball into flawed AI models and cause direct harm to patients.

This visual makes it crystal clear why strong governance isn't optional. It shows the dangerous and direct line from poor data quality to negative patient outcomes, highlighting why a structured approach to both custom healthcare software development and third-party tools is so critical.

A strong governance framework is the bridge between AI's potential and its responsible application. It ensures that innovation serves your primary mission of patient care, rather than undermining it.

By setting up this kind of oversight, you transform AI from a potential threat into a reliable clinical asset. This structure gives you the confidence to scale your use of AI effectively and, most importantly, safely.

Where Do We Go From Here? Leading the Charge in Trustworthy Healthcare AI

For any healthcare leader—a CEO, a CTO, an AI strategist—getting ethical AI right isn't just another box to check. It's the central challenge of our time, and frankly, it's our biggest opportunity.

We've walked through the regulations, the risks, and the frameworks. It should be clear by now that weaving ethics into your AI strategy is far more than a compliance exercise. It’s the very foundation of patient safety, risk management, and any innovation that hopes to have a lasting, positive impact. The time for simply talking about responsible AI is over. It’s time to act.

Building a culture around ethical AI doesn’t happen by accident. It starts with a clear, documented vision for what you want to achieve. That's why creating a Custom AI Strategy report is the essential first move. It gives you a concrete roadmap to make sure your tech ambitions and your ethical duties are pulling in the same direction, right from the start.

This strategic groundwork is non-negotiable. Only once it's in place should you start looking at specific AI tools for business or rolling out sophisticated internal tooling.

From Blueprint to Bedside

A great strategy is one thing; bringing it to life is another entirely. This is where specialized expertise becomes critical. You need partners who live and breathe this stuff.

Working with specialists in Healthcare AI Services who genuinely understand the nuances of the clinical world can save you from costly missteps and get you to your goals faster. Whether you’re looking at AI Automation as a Service to free up your clinicians or need a battle-tested AI Product Development Workflow, the right team makes all the difference.

The future of medicine won't be defined by the smartest algorithm. It will be shaped by leaders who insist on technology that is, above all, trustworthy. Real innovation in healthcare is measured by the confidence it inspires in both the clinician at the keyboard and the patient in the bed.

At the end of the day, we're all working toward the same thing: a healthcare system where technology truly serves people. A system that empowers clinicians, safeguards patients, and raises the standard of care for everyone. Getting there takes a combination of bold thinking, which an AI strategy consulting partner can provide, and painstaking, detail-oriented execution.

The responsibility for building this future rests on leaders like you. If you're ready to take the next step, I encourage you to meet our expert team and see for yourself how we can help you lead with confidence.

Frequently Asked Questions about Ethical AI

Navigating the complexities of ethical AI in clinical settings raises many questions. Here are answers to some of the most common concerns for healthcare leaders.

Where is the best place to start with implementing an ethical AI framework?

The most critical first step is establishing a multidisciplinary AI governance committee. This shouldn't be a task for the IT department alone. Your committee needs diverse expertise: clinicians who understand workflows, data scientists who can analyze algorithms, legal experts to ensure compliance, and patient advocates to maintain a focus on care. Their primary mandate is to audit existing AI tools (including unapproved "shadow AI") and develop a clear, standardized process for evaluating, procuring, and monitoring all future AI systems, as we explored in our AI adoption guide.

How can we mitigate AI bias if our own patient data lacks diversity?

This is a significant and common hurdle. The key is a proactive, multi-layered strategy. First, transparently acknowledge and document the limitations of your models—where they excel and which patient populations they were not adequately trained on. Second, actively seek to enrich your datasets. This can involve strategic partnerships with other institutions, participating in data-sharing consortiums, or exploring privacy-preserving techniques like federated learning. Finally, make vendor accountability a non-negotiable part of your procurement process. Demand performance metrics broken down by demographics instead of a single accuracy score.

Who is ultimately accountable when an AI system makes a mistake?

Accountability in AI-assisted healthcare is a shared responsibility, and your governance framework must define these roles clearly to avoid dangerous ambiguity.

- The Healthcare Institution is responsible for the systems it chooses to deploy and for implementing robust monitoring to ensure ongoing safety and performance.
- The Clinician remains the final decision-maker. They must apply their professional judgment to interpret, verify, or override AI-generated recommendations. The AI is a tool, not a replacement for clinical expertise.
- The AI Vendor is accountable for the transparency, validity, and safety of their product. They must provide clear documentation on performance, limitations, and training data.

Clearly defining this chain of responsibility protects patients, empowers clinicians, and safeguards your organization. For expert guidance in establishing these protocols, connect with our expert team.