Your Guide to a Healthcare AI Compliance Framework

Build a robust healthcare AI compliance framework that balances innovation and patient safety. Learn to navigate HIPAA, the EU AI Act, and risk management.

Think of a healthcare AI compliance framework as your organization's playbook for AI. It’s a complete set of rules, processes, and technical guardrails that make sure your AI systems are built and used in line with all the legal, ethical, and regulatory standards. It's the blueprint for managing risk, protecting patient data, and building real trust in AI-driven healthcare.

Why AI Compliance Is No Longer Optional in Healthcare

For any leader in healthcare, bringing AI into the fold is a high-stakes game where exciting innovation runs headfirst into immense responsibility. A healthcare AI compliance framework isn't just another box-ticking exercise; it's a strategic guide for leading the market while keeping patient trust sacred. Regulations such as HIPAA and the EU AI Act, along with the agencies that enforce them, are getting stricter, creating a complex environment full of both pitfalls and opportunities.

This guide is designed to reframe compliance as a powerful tool for deploying AI safely, ethically, and effectively. If you ignore the rules, you're looking at crippling fines, a tarnished reputation, and a total loss of patient confidence. But if you get ahead of it, a proactive compliance strategy can give you a serious competitive advantage, turning regulatory hurdles into a launchpad for growth.

The Rising Stakes of Non-Compliance

The fallout from ignoring AI compliance standards can be staggering, both financially and operationally. Regulators are watching more closely than ever, and the penalties for getting it wrong are getting harsher. In the U.S., a telling 56% of healthcare compliance leaders admit they don't have the resources to keep up with growing risks—a gap that AI can either make worse or help solve.

Just think about the potential costs:

- Financial Penalties: Fines can run into the millions of dollars for a single violation, hitting your bottom line directly.

- Operational Disruption: One compliance slip-up can bring projects to a grinding halt, force you to pull valuable AI tools offline, and kick off long, expensive audits.

- Reputational Damage: In healthcare, trust is everything. A data breach or a biased algorithm can do permanent damage to your organization's reputation, causing patients to leave and making it harder to attract top talent.

A well-structured compliance framework is the best defense against these threats. It moves an organization from a reactive, crisis-management posture to a proactive state of control and readiness, ensuring that AI solutions are built on a foundation of safety and trust.

Compliance as a Strategic Differentiator

Instead of seeing compliance as a chore, smart leaders are treating it as a strategic advantage. A solid healthcare AI compliance framework is a clear signal to patients, partners, and regulators that your organization is serious about maintaining the highest standards of care and data protection. This commitment builds a "moat" of trust around your business that competitors will struggle to cross.

Getting compliance right from the start allows you to confidently explore advanced Healthcare AI Services without constantly looking over your shoulder for regulatory trouble. It actually speeds up innovation by giving your data scientists and developers clear guardrails, which removes ambiguity and smooths out the AI Product Development Workflow.

In the end, a strong compliance program isn’t just about dodging penalties. It's about building a healthcare organization that is sustainable, trustworthy, and truly innovative. Expert AI strategy consulting can be instrumental in turning these regulatory requirements into a core part of your business DNA.

Untangling the Global Maze of Healthcare AI Regulations

Diving into healthcare AI means stepping into a complex, overlapping web of global rules. It’s not about ticking one box; it's about understanding how different regulations—from U.S. patient data laws to European algorithmic fairness standards—all intersect. The first step to building a solid compliance strategy is cutting through the jargon to see how these pieces fit together.

The real challenge? Many of these rules weren't written with AI in mind, yet they absolutely apply. Imagine trying to build a vehicle that simultaneously meets the safety standards for a car, a boat, and an airplane. Each system has to be compliant on its own, but they also have to work together perfectly. That's the world of healthcare AI compliance.

The Bedrock of US Healthcare AI: HIPAA

In the United States, everything starts with the Health Insurance Portability and Accountability Act (HIPAA). It’s the foundation for protecting patient data, and its rules on Protected Health Information (PHI) are non-negotiable. Those rules now stretch to cover how AI models are trained, validated, and used in the wild.

Put simply, any AI system that comes near PHI must have rock-solid controls in place:

- Secure Data Handling: All PHI used for training or running an AI model must be de-identified or locked down with strong encryption, both when it's stored and when it's moving.

- Strict Access Control: Only authorized people should ever touch the sensitive data fueling your AI. Every interaction needs to be logged in a comprehensive audit trail.

- Constant Risk Assessments: You have to run regular security risk analyses to find and fix weak spots in your AI pipeline. It's not a one-and-done task.
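As a rough illustration of the first two controls above, here is a minimal Python sketch that strips direct identifiers from a record, replaces the patient ID with a keyed hash, and builds an audit-log entry for each access. The field names (`name`, `ssn`, `patient_id`, and so on) and the helper functions are hypothetical, and this alone does not amount to HIPAA-grade de-identification; treat it as a starting point under those assumptions.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical set of direct-identifier fields; a real schema will differ.
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "email"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Drop direct identifiers and replace patient_id with a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    token = hmac.new(
        secret_key, str(record["patient_id"]).encode(), hashlib.sha256
    ).hexdigest()
    cleaned["patient_id"] = token
    return cleaned


def audit_entry(user: str, action: str, patient_token: str) -> str:
    """Build one JSON line describing who did what to which record, and when."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "patient_token": patient_token,
        },
        sort_keys=True,
    )
```

Using a keyed hash rather than a plain hash means the same patient maps to the same token across datasets, while someone without the key cannot recompute the token from a known ID.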

Understanding the nitty-gritty details, like those in guides on HIPAA compliance for small businesses, is essential. Getting these foundational processes right from the start is the only way to build securely.

Europe’s High Bar: The GDPR and EU AI Act

Over in Europe, the bar is set incredibly high by two major pieces of legislation. The General Data Protection Regulation (GDPR) gives individuals powerful rights over their personal data, and the new EU AI Act brings a risk-based approach specifically for artificial intelligence.

For healthcare, the EU AI Act is a game-changer. It classifies most AI tools used for diagnosis or treatment as ‘high-risk,’ which brings a whole new level of mandatory obligations before they can even get to market.

If your AI falls into this high-risk category, you'll need to deliver on:

- Radical Transparency: You must have clear, detailed documentation on how your model works, what data it was trained on, and exactly what it’s supposed to do.

- Human-in-the-Loop Oversight: A human expert must always be able to step in and override an AI's decision, especially in a clinical setting.

- Proactive Bias Detection: Your systems need to be rigorously tested to find and fix algorithmic bias, ensuring fair and equitable outcomes for all patients.
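One simple way to start on bias detection is to compare a model's true positive rate across patient groups. The sketch below assumes binary labels and predictions (1 = condition present) and a hypothetical group attribute per patient; it is one fairness check among many, not a complete bias audit.

```python
from collections import defaultdict


def true_positive_rate_by_group(y_true, y_pred, groups):
    """Return {group: TPR}, computed only over cases where the truth is 1."""
    positives = defaultdict(int)
    hits = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                hits[group] += 1
    return {g: hits[g] / positives[g] for g in positives}


def max_tpr_gap(rates):
    """Largest difference in TPR between any two groups."""
    values = list(rates.values())
    return max(values) - min(values)


# Toy data for illustration only.
y_true = [1, 1, 1, 1, 0, 1, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = true_positive_rate_by_group(y_true, y_pred, groups)
gap = max_tpr_gap(rates)  # rates: A = 0.75, B = 0.5, so the gap is 0.25
```

What counts as an acceptable gap is a policy decision for your governance committee and clinical leads, not something the code can decide.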

The FDA and Software as a Medical Device (SaMD)

Back in the U.S., the Food and Drug Administration (FDA) is also adapting its rules for the AI era, especially for what it calls Software as a Medical Device (SaMD). This category covers AI algorithms that perform a medical function, like analyzing an MRI scan or predicting a patient's risk of disease.

The FDA isn't just looking at a snapshot in time. Its focus is on the entire lifecycle of the AI model. Validating a model once before you launch it is no longer good enough. The agency expects you to continuously monitor its performance to make sure it stays safe and effective as it encounters new, real-world data.

Mapping the Key Global Regulations

Navigating the global landscape requires a clear map of the key rules shaping healthcare AI. The table below outlines some of the most influential regulations you'll encounter.

| Regulation | Geographic Scope | Core Focus for AI | Key Requirement Example |
|---|---|---|---|
| HIPAA | United States | Protecting patient health information (PHI) in AI systems. | Implementing Business Associate Agreements (BAAs) with AI vendors who handle PHI. |
| GDPR | European Union | Ensuring lawful processing and individual rights over personal data. | Conducting a Data Protection Impact Assessment (DPIA) before deploying an AI model. |
| EU AI Act | European Union | Establishing a risk-based framework for AI systems. | High-risk AI (like diagnostic tools) requires conformity assessments and human oversight. |
| FDA (SaMD) | United States | Ensuring the safety and effectiveness of AI/ML-based medical devices. | Submitting a predetermined change control plan for AI models that learn and adapt over time. |
| PIPEDA | Canada | Governing the collection, use, and disclosure of personal information. | Requiring explicit consent for using personal data to train AI and explaining how decisions are made. |

This table is just a starting point, but it highlights how different regions are tackling similar challenges, from data privacy to model safety, with their own unique approaches. Being compliant means building a framework that satisfies every regulation that applies in the markets where you operate.

The Eight Pillars of a Resilient Compliance Framework

Think of your healthcare AI compliance framework as the blueprint for a modern medical facility. It’s not just one thing; it’s a series of interconnected systems, each essential for the building to be safe, functional, and up to code. If you remove even one structural pillar, you risk the integrity of the entire operation.

These eight pillars work together, creating a structure that's not only tough enough to stand up to regulatory audits but also agile enough to adapt as AI technology and regulations evolve. Let's walk through the blueprint for this structure, piece by piece.

Data and Model Governance: The Foundation

Your entire framework rests on two foundational pillars: Data Governance and Model Governance. Think of data governance as the strict protocol for handling patient records. It sets the rules for how you collect, store, use, and protect sensitive health information at every step, making sure you stay firmly within the lines of regulations like HIPAA.

Model governance is its twin, applying those same rigorous principles to the AI models themselves. It establishes a clear, documented process for how algorithms are built, trained, deployed, and eventually taken offline. This pillar ensures every AI tool for business you use is consistent, accountable, and traceable from start to finish.

These governance layers answer critical questions like:

- Data Sourcing and Lineage: Where did we get this training data? Was it sourced ethically and legally?

- Access Controls: Who can see this sensitive data or touch this AI model, and why?

- Version Control: Which specific version of an algorithm is running right now? How do we manage and track updates?
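To make the lineage and version questions above concrete, here is a minimal in-memory model registry sketch. The `ModelRecord` fields and the `ModelRegistry` class are hypothetical names chosen for illustration; a production setup would more likely sit on a dedicated MLOps platform with persistent storage.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    name: str
    version: str
    training_data_sha256: str  # fingerprint of the exact training snapshot
    approved_by: str
    intended_use: str


def fingerprint(data: bytes) -> str:
    """Hash a training-data snapshot so its lineage can be verified later."""
    return hashlib.sha256(data).hexdigest()


class ModelRegistry:
    """Tracks every registered version and which one is currently live."""

    def __init__(self):
        self._records = {}
        self._active = {}

    def register(self, record: ModelRecord) -> None:
        key = (record.name, record.version)
        if key in self._records:
            raise ValueError(f"{record.name} {record.version} already registered")
        self._records[key] = record

    def promote(self, name: str, version: str) -> None:
        if (name, version) not in self._records:
            raise KeyError(f"{name} {version} is not registered")
        self._active[name] = version

    def active(self, name: str) -> ModelRecord:
        return self._records[(name, self._active[name])]
```

Refusing to overwrite an existing version keeps the history immutable, which is what lets you answer "which version was running on that date?" during an audit.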

Risk Management and Validation: The Safety Systems

With a solid foundation in place, the next set of pillars focuses on safety and performance. Risk Management is your framework's early-warning system. This is where you methodically identify, evaluate, and find ways to reduce potential dangers, whether it's algorithmic bias creeping into a diagnostic tool, a patient safety concern, or a data privacy vulnerability.

A proactive risk management process is non-negotiable. By spotting potential trouble before it turns into a full-blown crisis, you can protect patients, sidestep massive regulatory fines, and safeguard your organization's reputation.
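A lightweight way to begin is a risk register scored by likelihood and severity. The sketch below assumes a 1 to 5 scale for each and an escalation threshold of 12; both are illustrative choices rather than regulatory requirements, and the `Risk` and `triage` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    severity: int    # 1 (negligible) to 5 (catastrophic)

    def __post_init__(self):
        for value in (self.likelihood, self.severity):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and severity must be between 1 and 5")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def triage(risks, threshold=12):
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)
```

Reviewing the triaged list on a fixed schedule, and re-scoring after each mitigation, turns the register into the early-warning system described above.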

Validation and Testing is the pillar that proves your AI actually does what you claim it does. This goes way beyond checking for technical accuracy. It involves rigorous, real-world testing to confirm a model is effective, fair, and reliable for all the different kinds of patients it will encounter. This is the quality assurance that certifies an AI tool is truly ready for clinical use. For particularly complex models, specialized platforms like VeriFAI can provide the deep validation needed to ensure total integrity.

Documentation and Monitoring: The Oversight and Audit Trail

For long-term trust and compliance, you need transparency and continuous oversight. Documentation and Transparency is all about creating a detailed, easy-to-follow paper trail for every single AI system. This means clearly recording the model's purpose, the data it was trained on, its known limitations, and how it's performing. This is a central part of any professional AI Product Development Workflow and is absolutely vital for showing regulators you've done your homework.

But the work isn't over once an AI goes live. Continuous Monitoring is the pillar that ensures your systems stay safe and effective over their entire lifespan. AI models can experience "drift" as they encounter new, real-world data, which can degrade their performance or introduce new biases. You need automated systems to watch performance in real-time, flagging any dip or strange behavior immediately.
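One common way to quantify drift on a single numeric feature or model score is the Population Stability Index (PSI), which compares the distribution seen at training time with the one seen in production. The sketch below uses equal-width bins derived from the baseline and a small floor to avoid taking the log of zero; the bin count and any alert threshold are assumptions to tune per model.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a production sample of one feature."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins identically; larger values indicate a bigger shift. Many teams alert somewhere above 0.2, but that cut-off is a rule of thumb to validate against your own data.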

Vendor and Incident Management: The External and Emergency Protocols

Finally, you're never operating in a bubble. The Vendor Management pillar ensures any third-party AI provider you partner with is held to the same high standards you set for yourself. This involves deep due diligence, ironclad contracts, and ongoing audits to make sure their tech and processes are fully aligned with your compliance framework.

And for when things inevitably go wrong, there's Incident Response. This is your pre-planned playbook for how to react if an AI fails, a data breach occurs, or a patient is negatively affected. A well-rehearsed incident response plan contains the damage, ensures you communicate clearly with everyone involved, and guides the recovery, turning a potential catastrophe into a managed event.

Putting Your Compliance Framework Into Action

A brilliant compliance framework on paper is just that—paper. The real test is turning those policies into a living, breathing part of your organization's daily operations. This is where the abstract concept of compliance becomes a concrete, day-to-day practice, moving from a theoretical safety net to an active system of governance.

The goal isn't just to check a box. It's to weave compliance so deeply into your culture that responsible AI development becomes second nature for everyone, from data scientists to clinicians. This means building a dynamic system that actively manages risk, measures performance, and adapts to the inevitable changes in technology and regulation. It’s less about avoiding penalties and more about building a durable foundation for trust and innovation.

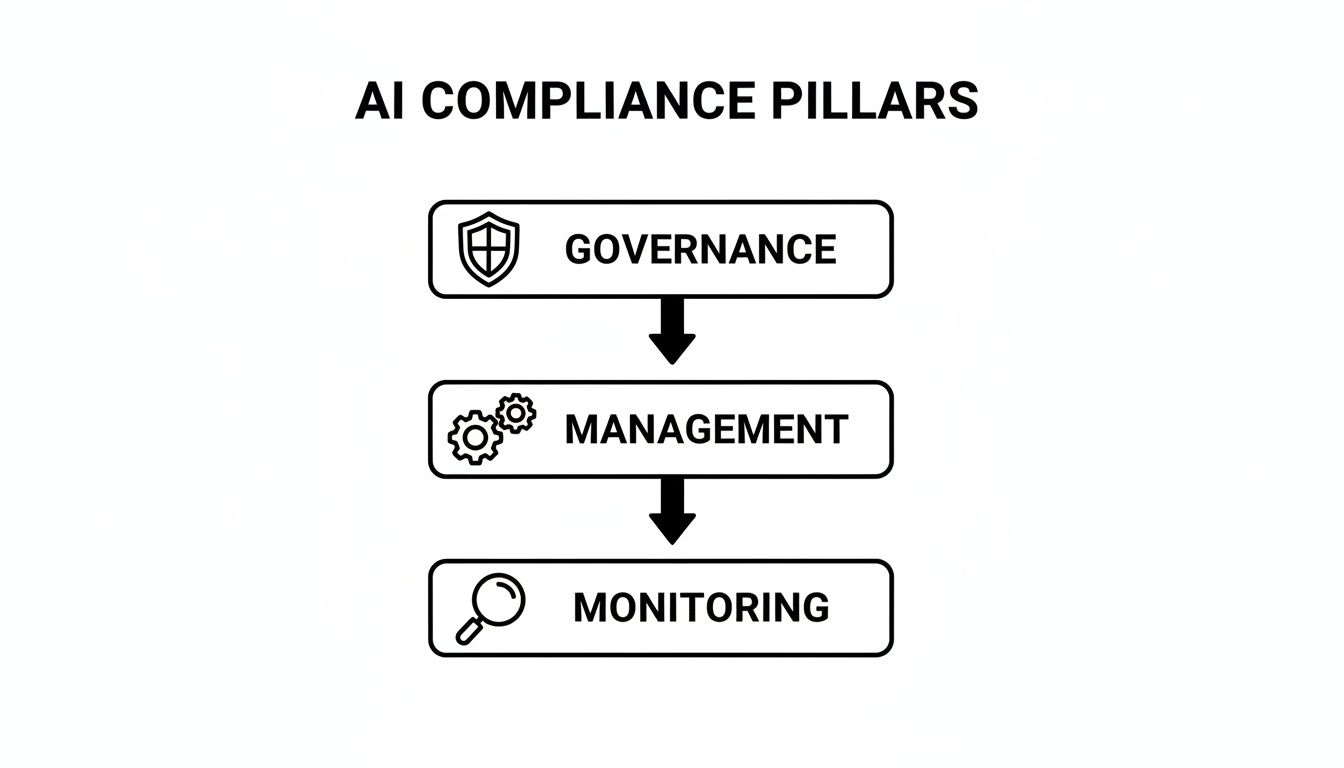

To make sense of how these operational layers work together, think of it as a top-down structure with three core layers: governance, management, and monitoring.

This structure shows how high-level governance sets the direction, tactical management executes the plan, and continuous monitoring ensures everything stays on track.

Who Owns AI Compliance? Assembling Your Team

Let's be clear: AI compliance can't live in a single department. It’s a team sport that requires true cross-functional collaboration. Trying to silo this responsibility is a recipe for failure. Instead, you need a dedicated governance team or committee where different perspectives come together.

Bringing a framework to life means having clearly defined roles. The table below outlines who typically does what.

| Role | Primary AI Compliance Responsibility | Key Artifacts They Own |
|---|---|---|
| Chief AI Officer (CAIO) or CTO | Provides strategic vision, secures resources, and holds ultimate accountability for the framework's success. | AI Strategy & Vision Document, Budget Approvals |
| Chief Compliance/Legal Officer | Interprets regulations, translates them into actionable policies, and oversees legal and ethical adherence. | Compliance Policies, Risk Assessment Framework, Incident Response Plan |
| Data Science & Engineering Leads | Oversee the technical build, ensuring models are fair, transparent, and secure. They implement the "how" of compliance. | Model Documentation, Validation Reports, MLOps Monitoring Dashboards |
| Clinical/Operations Leaders | Act as the domain expert, validating that AI tools are safe, effective, and integrate smoothly into real-world clinical workflows. | Clinical Validation Protocols, User Feedback Reports |
| Data Governance Lead | Manages the entire data lifecycle, ensuring data used for training and inference is private, secure, and fit for purpose. | Data Usage Policies, Data Lineage Maps, PHI Access Logs |

Defining these responsibilities from the start prevents confusion and ensures that every critical component of the framework has a clear owner. If your internal team needs support, finding the best HR advisors for healthcare businesses can help structure these roles effectively.

A Practical Implementation Roadmap

Trying to implement everything at once is overwhelming and inefficient. A phased approach is far more effective, allowing your organization to build a solid foundation and adapt as you go. Think of it as a 9-month journey.

Phase 1: Foundation & Assessment (Months 1-3): This is your discovery phase. Start by auditing every AI system and data source you currently use. The main goal here is to identify your biggest compliance gaps and establish the AI Governance Committee. You’ll finish this phase with a comprehensive risk assessment report and the first drafts of your core policies.

Phase 2: Tooling & Training (Months 4-6): Now it's time to build the infrastructure. This involves deploying the technology needed for oversight, like documentation platforms and automated monitoring tools. Just as important is training—you’ll conduct sessions for all stakeholders to get them up to speed on the new rules of the road.

Phase 3: Operationalize & Optimize (Month 7 onward): With the framework now live, the focus shifts from implementation to continuous improvement. You'll begin tracking your KPIs, conducting regular audits, and updating policies as new regulations emerge. This is where you fine-tune the system and ensure it runs like a well-oiled machine. This is exactly the kind of operational challenge our team at Ekipa AI can help you accelerate.

Are We Succeeding? Measuring What Matters With KPIs

You can't manage what you don't measure. Vague goals like "becoming more compliant" are useless. To truly understand if your framework is working, you need to track specific, measurable Key Performance Indicators (KPIs) that paint a clear picture of your compliance health.

An effective KPI doesn't just tell you if you are compliant; it tells you how well you are managing risk and driving improvement. The best metrics are action-oriented and go far beyond a simple pass/fail check.

Instead of generic metrics, consider tracking KPIs like these:

- Model Bias Remediation Time: What is the average time from when algorithmic bias is detected to when a fix is deployed? A shorter time indicates a more responsive system.

- Audit-Ready Artifacts: What percentage of your AI models have 100% complete and up-to-date documentation (e.g., Algorithmic Impact Assessments, data sheets, validation reports)?

- Training Completion Rate: Track the percentage of relevant employees who have completed mandatory AI ethics and compliance training within 30 days of its assignment.

- Vendor Compliance Scorecard: Rate third-party AI vendors on a scale of 1-100 based on their adherence to your data security and model transparency requirements.
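The first three KPIs above reduce to simple calculations once the underlying events are recorded. This sketch assumes hypothetical input shapes (pairs of timestamps, and a mapping from model name to the set of documents it has); adapt them to whatever your tracking system actually stores.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_remediation_days(incidents):
    """incidents: list of (detected_at, fixed_at) datetime pairs."""
    return mean(
        (fixed - detected).total_seconds() / 86400 for detected, fixed in incidents
    )


def audit_ready_pct(model_docs, required):
    """model_docs: {model_name: set of documents on file}; required: set."""
    ready = sum(1 for docs in model_docs.values() if required <= docs)
    return 100 * ready / len(model_docs)


def training_completion_rate(assignments, window_days=30):
    """assignments: list of (assigned_at, completed_at or None)."""
    window = timedelta(days=window_days)
    on_time = sum(
        1
        for assigned, completed in assignments
        if completed is not None and completed - assigned <= window
    )
    return 100 * on_time / len(assignments)
```

Reporting these as trends over time, rather than one-off snapshots, makes it easier to see whether the program is actually improving.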

By focusing on tangible metrics, you transform your compliance framework from a static document into a dynamic, data-driven program that protects patients, earns trust, and enables you to innovate with confidence.

Accelerating Innovation While Building Patient Trust

Building a healthcare AI compliance framework isn't just about ticking boxes and avoiding fines. Think of it as the foundation for your entire innovation strategy—the very thing that gives you a competitive edge. When you build compliance into the DNA of your operations, you're not slowing down; you're building a trusted environment where your teams can innovate safely and confidently.

This approach flips the script on regulation. Instead of reacting to new rules, you’re already prepared, with agile systems designed to adapt. It ensures your AI solutions are built for the long haul and fosters a culture of responsibility that patients and partners can see and feel.

From Framework to Actionable Advantage

A compliance framework is just a document until you put it to work. Its real value comes to life when it starts driving tangible business outcomes. The first step is getting a crystal-clear picture of your organization’s unique needs and risks. A rapid AI requirements analysis is the perfect way to kick things off, giving you the insights to create a framework that's both tough and practical.

This initial analysis ensures compliance isn’t an afterthought tacked on at the end. Instead, it's woven into every project from day one, aligning your technical roadmap with the necessary guardrails and smoothing your path to market.

Navigating the Perpetual Engineering Challenge

Let's be honest: the modern regulatory environment is a minefield. Developers are wrestling with complex issues like data sovereignty and a patchwork of global rules, all while trying to address legitimate concerns about bias and unequal patient outcomes. Compliance is no longer a one-time setup; it’s a constant engineering challenge.

The data backs this up. Only 43% of developers feel moderately confident they can build systems that work across both HIPAA and GDPR.

This reality highlights just how critical proactive model validation and interoperability standards have become. A solid framework gives you the structure to tackle these demands head-on, helping you avoid GDPR penalties that can reach 4% of global annual turnover or €20 million, whichever is higher, and, more importantly, building the kind of trust that keeps patients loyal.

Your Partner in Compliant Innovation

Navigating this intricate landscape isn't something you should have to do alone. Our deep experience in Healthcare AI Services and our ability to deliver custom healthcare software development mean we can help you build a framework that is a living, breathing asset—not just a binder on a shelf. We’ll help you integrate these critical guardrails right into your workflows, making compliance a natural part of how you innovate.

Don’t let regulatory complexity become a bottleneck. Check out our library of real-world use cases to see how a strong compliance posture can actually fuel growth. When you're ready, connect with our expert team to start designing a healthcare AI compliance framework that builds lasting patient trust and gets you to market faster.

Frequently Asked Questions (FAQ)

What’s the very first step to create a compliance framework?

Your starting point is always a deep, honest look at where you stand right now through a comprehensive risk and maturity assessment. Before you can write a single policy or choose a new tool, you have to get a clear, unvarnished picture of your current reality. This means taking inventory of every AI system you have, mapping what data they touch—especially Protected Health Information (PHI)—and evaluating them against major regulations like HIPAA and GDPR. The insights from this assessment form the foundation of a framework tailored to your specific risks and operational needs. A professionally developed Custom AI Strategy report can significantly accelerate this discovery phase.

How do we keep our AI models compliant after deployment?

Staying compliant after launch requires a commitment to continuous monitoring and regular, scheduled audits. You need automated systems to watch for issues like data drift and algorithmic bias in real-time, with alerts to flag performance degradation immediately. This automation must be paired with human-led audits to systematically review model documentation, check access logs, and validate performance against the latest regulatory standards. Streamlining these routines with services like AI Automation as a Service can turn this ongoing monitoring from a manual chore into an efficient, automated function.

Who is ultimately responsible for AI compliance?

While everyone in the organization plays a part, ultimate accountability for the governance program rests with senior leadership, including the CEO and the board. However, day-to-day responsibility is distributed. Typically, the CTO/CIO owns the technical infrastructure, the Chief Compliance Officer handles policy and legal adherence, and data science teams are responsible for building fair and transparent models. Establishing a cross-functional AI Governance Committee is a best practice for ensuring collective oversight and embedding compliance across the entire AI lifecycle.

How can we balance innovation with strict compliance?

The key is to view compliance as an accelerator, not a brake. A well-designed framework provides the clear guardrails that empower teams to experiment and build safely. This is achieved by embedding compliance into the innovation process from the very beginning, as we explored in our AI adoption guide, rather than treating it as a final hurdle. When compliance requirements are baked into your internal tooling and development workflows, your teams can move faster and with greater confidence, turning a commitment to patient safety and trust into a powerful competitive advantage.

What are the key regulations governing AI in healthcare?

The main regulations depend on your geographic area of operation, but several have global influence. In the U.S., the Health Insurance Portability and Accountability Act (HIPAA) is foundational for protecting patient data. The FDA also regulates AI tools classified as Software as a Medical Device (SaMD). In Europe, the General Data Protection Regulation (GDPR) sets a high bar for data privacy, while the new EU AI Act introduces a risk-based framework that classifies most clinical AI as "high-risk," requiring strict oversight and transparency.

Ready to build a compliance framework that speeds up innovation instead of slowing it down? Ekipa AI provides the expert guidance and strategic tools you need to turn regulatory headaches into a competitive edge. Explore our AI solutions and connect with our expert team to build a future of compliant, trusted healthcare AI.