A Practical Guide to Responsible Clinical AI Deployment

A practical guide to responsible clinical AI deployment, covering governance, data privacy, model validation, and seamless healthcare integration.

Deploying artificial intelligence in a clinical setting is far more than just plugging in a new piece of software. It’s a deliberate process focused squarely on safety, ethics, transparency, and navigating a complex web of regulations. We're moving past abstract models and into the real world, where AI tools must be proven safe, effective, and equitable for patient care. Getting this right is fundamental to protecting patients and, just as importantly, building trust with the clinicians who will use these tools every day.

Why Responsible Clinical AI Deployment Is No Longer Optional

For years, clinical AI was something we talked about as being "on the horizon." That horizon is here. Today, the real measure of healthcare innovation isn't just having an AI model, but successfully and safely implementing it. A structured, responsible approach isn't a "nice-to-have"; it's a core requirement for protecting patients, staying compliant, and actually seeing a return on these significant investments.

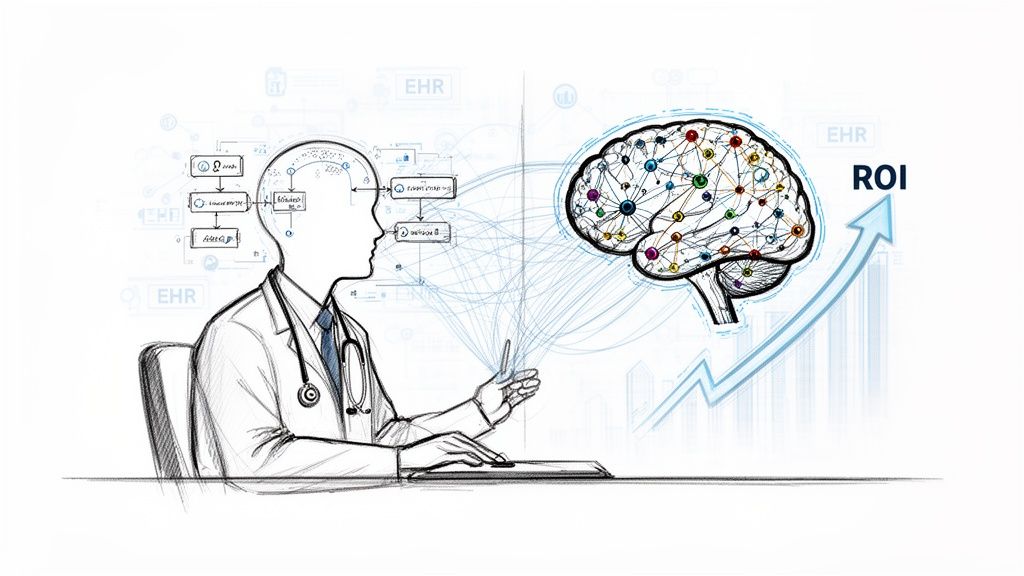

This isn't just talk; the numbers tell the story. The adoption of generative AI in healthcare organizations shot up from 72% in early 2024 to a projected 85% by the end of the year. This isn't reckless expansion, either. It’s a sign of a maturing field where cautious, well-planned rollouts are paying off. In fact, 82% of healthcare organizations reported moderate or high ROI from their AI initiatives in 2025. This growing confidence is mirrored by regulators, with the FDA having approved over 100 AI-driven medical devices by mid-2025. You can dig deeper into these healthcare AI statistics to get a better sense of the market momentum.

This rapid shift is driven by a clear understanding of what it takes to succeed.

As you can see, responsible deployment isn't a roadblock. It's the critical link connecting strategic growth goals with tangible financial outcomes and the necessary regulatory green lights.

Adopting a Practical Framework

Success hinges on a framework that translates high-level principles into day-to-day actions. Clinical leaders and technical teams need a playbook that moves beyond abstract ethical discussions and gets into the weeds of execution. This means establishing clear governance from the very beginning, obsessing over data integrity, and rigorously validating every model before it gets anywhere near a live clinical workflow.

This groundwork is absolutely essential whether your organization is building its own AI solutions or getting expert help through AI strategy consulting. A proactive, structured approach builds a culture of trust where clinicians feel confident using the AI tools you provide, rather than viewing them with suspicion.

A well-defined deployment strategy turns potential risks into managed variables. By addressing ethics, compliance, and clinical validation upfront, organizations can de-risk their AI initiatives and accelerate the path to positive patient impact and operational efficiency.

This guide is designed to provide that detailed roadmap. We'll walk through each stage of the process, from assembling an ethics committee to setting up post-deployment monitoring and incident response. This is where big-picture strategy meets on-the-ground reality.

To get started, it's helpful to see how these different components fit together. The table below breaks down the core pillars of a responsible AI framework.

Table: Key Pillars of Responsible Clinical AI

| Pillar | Core Objective | Primary Stakeholders |

|---|---|---|

| Governance & Ethics | Establish clear oversight, ethical guidelines, and accountability for all AI initiatives. | Executive Leadership, Ethics Committee, Legal & Compliance |

| Data Quality & Privacy | Ensure data used for training and validation is accurate, representative, and secure. | Data Science Teams, IT Security, Privacy Officers |

| Model Validation | Rigorously test AI models for accuracy, fairness, and robustness before deployment. | Clinical Champions, Data Scientists, Quality Assurance |

| Regulatory & Legal | Navigate FDA requirements, HIPAA, and other legal obligations for clinical AI tools. | Legal Department, Regulatory Affairs, Compliance Officers |

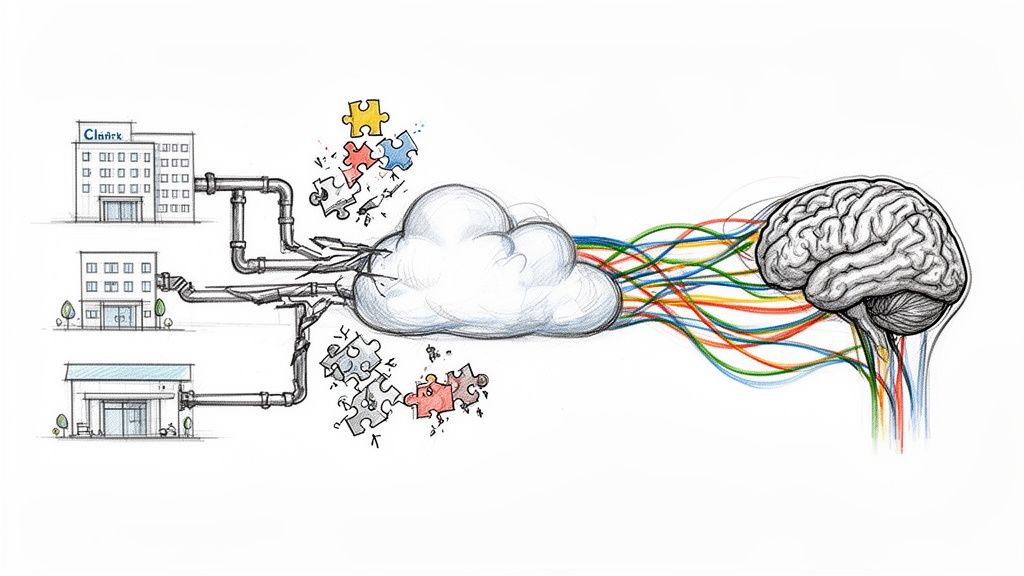

| Clinical Integration | Seamlessly and safely embed AI tools into existing clinical workflows and EMRs. | Clinicians (Doctors, Nurses), IT/Informatics Teams |

| Monitoring & Response | Continuously track model performance post-deployment and have a plan for incidents. | Operations, IT Support, Clinical Governance |

Each of these pillars is a critical piece of the puzzle. Overlooking any one of them can undermine the entire initiative, leading to failed projects, wasted resources, or worse, patient harm. We'll dive into each of these areas in the sections that follow.

Building Your Governance and Ethics Framework

Before you even think about deploying a clinical AI tool, you have to get your governance in order. This isn't just bureaucratic box-ticking; it's the bedrock of a responsible AI program. This framework is what ensures every algorithm, every piece of data, and every decision aligns with patient safety, your ethical compass, and your organization's core values. Frankly, it's the difference between haphazardly adopting new tech and thoughtfully integrating it into patient care.

A good framework is more than just a dusty document on a server—it’s a living, breathing process run by a dedicated team. That’s why your first move should be to create an AI Ethics Committee or a similar multidisciplinary review board. And let me be clear: this can't just be the IT department or a few data scientists. That’s a recipe for disaster.

You need a true cross-section of your organization at the table, each bringing a unique and critical perspective. A solid committee usually includes:

- Clinical Champions: The doctors, nurses, and specialists who actually live in the clinical workflows. They know what will and won't work at the point of care.

- Ethicists and Legal Counsel: These are your guides through the minefield of moral dilemmas and complex regulations.

- Data Scientists and AI Engineers: The technical experts who can cut through the hype and explain what a model can realistically do—and, more importantly, what it can't.

- IT and Security Officers: The guardians of your data, responsible for infrastructure integrity and patient privacy.

- Administrative and Executive Leaders: They ensure AI initiatives actually map to the hospital's strategic goals and have the resources they need.

Putting these different minds together is your best defense against tunnel vision. It forces a balanced conversation where clinical utility, technical reality, and ethical red lines all get an equal say.

Defining Your Core Principles and Policies

With your committee in place, the real work begins. Their first job is to turn lofty ideals like "fairness" and "transparency" into concrete, actionable policies that your teams can actually implement. An abstract principle is useless to an engineer trying to build a model. This work is a crucial part of any serious AI requirements analysis.

You have to be crystal clear about the committee's mandate. It should span the entire AI lifecycle, from the first glimmer of an idea to long-term post-deployment monitoring.

Key Responsibilities for an AI Ethics Committee:

- Defining Ethical Guardrails: They need to establish the non-negotiable values for all AI projects, such as commitments to equity, accountability, and patient well-being.

- Creating Practical Policies: This means developing specific rules for things like data usage, algorithmic transparency, and bias mitigation. For example, a policy might mandate that any predictive model must be audited for racial and socioeconomic bias before it can even be considered for a pilot.

- Reviewing and Approving Projects: The committee acts as the primary gatekeeper. They should have the power to green-light, request changes, or kill any proposed AI project based on whether it meets the established ethical and clinical standards.

- Overseeing Model Performance: Once a model is live, their job isn't done. They must ensure systems are in place to constantly monitor for performance decay, data drift, or any new biases that might crop up.

- Managing Incident Response: When an AI tool gets it wrong—and it will—what happens next? The committee must develop a clear protocol for a fast, transparent, and fair response.

"At GMI, we see AI not as a standalone innovation, but as a tool to strengthen the quality, safety, and precision of the care we deliver. Our collaboration...reflects a deliberate step toward integrating AI responsibly, grounded in clinical need." - Konstantinos Zamboglou, Chief Medical Officer at German Medical Institute

This really gets to the heart of it. A governance framework exists to make sure the technology serves the patient, not the other way around.

Establishing Clear Accountability

I’ve seen promising clinical AI initiatives completely derail because of fuzzy accountability. When an algorithm makes a bad call, who’s on the hook? The data scientist who built it? The doctor who acted on its suggestion? The vendor who sold you the software?

Your governance framework has to cut through that fog. It needs to establish unmistakable lines of authority and decision-making. Define who has the final word on deploying a new model. Spell out who is responsible for watching its performance day-to-day. And most importantly, designate who has the power to pull the plug if a tool is underperforming or causing harm.

This clarity is empowering for everyone, from the developers in the back room to the clinicians on the front lines. It turns governance from a theoretical concept into a practical system of checks and balances that protects your patients and your organization. As we explored in our AI adoption guide, clear internal accountability is essential for navigating the complex regulatory landscape and building trust in your Healthcare AI Services. Getting this foundational work right, often with guidance from our expert team, truly sets the stage for everything that follows.

Nail Your Data Integrity and Patient Privacy Strategy

Let’s be blunt: high-quality, secure data is the absolute foundation of any clinical AI system that works. We've all heard the phrase "garbage in, garbage out," but in healthcare, it's far more serious. Flawed data doesn't just produce bad results; it can lead to dangerous models and devastating patient outcomes.

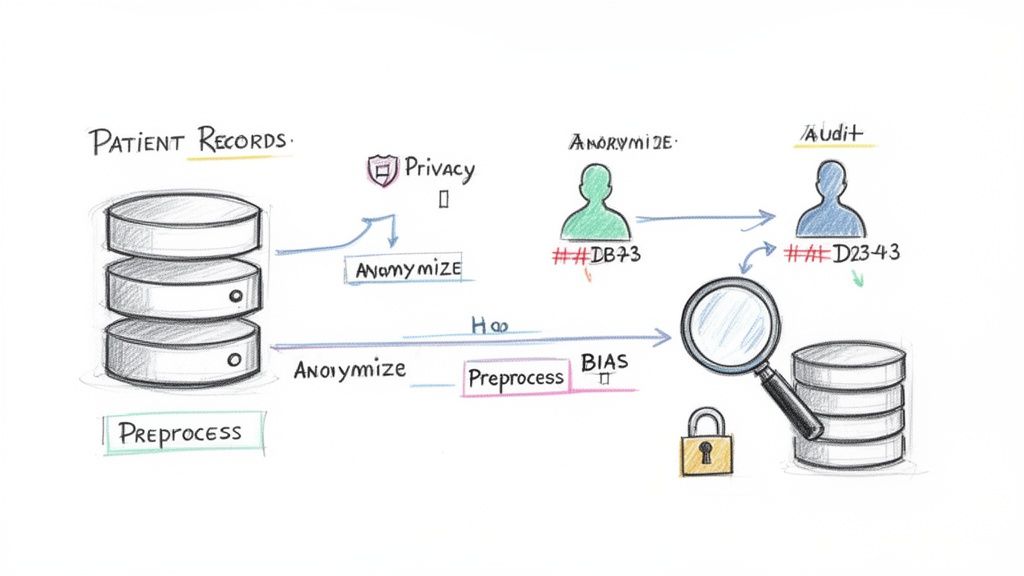

This is why a rock-solid commitment to data integrity and privacy has to be there from day one. I'm talking about creating a meticulous, auditable trail for every piece of data—how it's gathered, how it's cleaned, and how it's locked down.

This thinking needs to start way before anyone writes a single line of code. It means setting up strict rules for data collection to make sure it’s not only accurate but also truly represents the patients you're trying to help. If you skip this, you’re building a sophisticated system on a foundation of sand, and the whole thing is doomed from the start.

Proactively Hunting for and Fixing Bias

One of the biggest landmines in clinical AI is algorithmic bias. This is where a model accidentally reinforces or even worsens existing health disparities. It usually happens when the training data isn't diverse enough. For example, an algorithm trained mostly on data from one demographic group will likely fail—or worse, misdiagnose—patients from different backgrounds.

To get ahead of this, your data science and clinical teams must team up to run regular bias audits. This isn't a passive check; it's an active hunt for imbalances in your data related to:

- Demographics: Are you fairly representing people across race, gender, and age?

- Socioeconomic Factors: Is your data skewed toward certain income levels or zip codes? This can have a huge impact on model performance.

- Clinical Subgroups: Have you included enough patients with different comorbidities or at various stages of a disease?

Finding bias is only the first step. You need a game plan to fix it. This could mean oversampling underrepresented groups or deploying advanced fairness algorithms. It's this proactive mindset that ensures your AI tools actually promote equitable care, rather than undermining it.
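To make the audit concrete, here is a minimal sketch of what a first pass might look like. It assumes each record is a dictionary with hypothetical `group`, `label`, and `pred` fields (1 = positive, 0 = negative). The thresholds `min_share` and `max_recall_gap` are illustrative placeholders your ethics committee would need to set, not recommended values.

```python
from collections import defaultdict


def subgroup_audit(records, group_key="group"):
    """Return each group's share of the sample and its recall (sensitivity)."""
    counts = defaultdict(lambda: {"n": 0, "tp": 0, "fn": 0})
    for r in records:
        c = counts[r[group_key]]
        c["n"] += 1
        if r["label"] == 1:
            if r["pred"] == 1:
                c["tp"] += 1
            else:
                c["fn"] += 1
    total = sum(c["n"] for c in counts.values())
    report = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        report[group] = {
            "share": c["n"] / total,
            # Recall is undefined when a group has no positive cases.
            "recall": c["tp"] / positives if positives else None,
        }
    return report


def flag_gaps(report, min_share=0.10, max_recall_gap=0.05):
    """List groups that are under-represented or trail the best-performing group."""
    recalls = [v["recall"] for v in report.values() if v["recall"] is not None]
    best = max(recalls) if recalls else None
    flags = []
    for group, v in sorted(report.items()):
        if v["share"] < min_share:
            flags.append((group, "under-represented"))
        if best is not None and v["recall"] is not None:
            if best - v["recall"] > max_recall_gap:
                flags.append((group, "recall gap"))
    return flags


# Toy data (made up for illustration): group B is small and poorly served.
records = (
    [{"group": "A", "label": 1, "pred": 1}] * 8
    + [{"group": "A", "label": 1, "pred": 0}] * 2
    + [{"group": "A", "label": 0, "pred": 0}] * 10
    + [{"group": "B", "label": 1, "pred": 1}] * 1
    + [{"group": "B", "label": 1, "pred": 0}] * 1
)
report = subgroup_audit(records)
flags = flag_gaps(report)
```

On this toy data, group B is flagged twice: it makes up less than 10% of the sample, and its recall trails group A's. A flag like this is a prompt for clinical and technical review, not a verdict; a real audit would also look at other metrics and at confidence intervals for small groups.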

Safeguarding Patient Privacy in the AI Era

Beyond just having good data, protecting patient privacy is a non-negotiable. It's not just an ethical duty; it's the law. Regulations like HIPAA give us the baseline, but a truly responsible AI framework goes further by baking privacy principles right into the system's DNA. This concept is often called "privacy by design."

A robust data privacy strategy is not just about compliance; it's about building and maintaining patient trust. If patients do not believe their sensitive information is secure, they will be less willing to engage with the very systems designed to help them.

Strong technical and procedural safeguards are your best defense. It's also worth noting how AI itself can be a powerful ally in this fight, a topic explored in The Role of Artificial Intelligence in Enhancing Cybersecurity for Businesses.

Here are the core techniques your organization absolutely must implement:

- Anonymization and De-identification: Before any data touches a model for training, it must be stripped of all personally identifiable information (PII), including the identifiers HIPAA lists for de-identification. I’m talking names, addresses, Social Security numbers, all of it.

- Data Minimization: Stick to a simple rule: collect and use only the data you absolutely need for the task at hand. Avoid the temptation to build massive data lakes that just expand your risk profile.

- Robust Encryption: All patient data must be encrypted, both when it's sitting on a server (at rest) and when it's moving between systems (in transit). No exceptions.

- Strict Access Controls: Implement role-based access so only authorized people can see or handle sensitive patient information.

These measures are the bedrock of any trustworthy AI ecosystem, underpinning everything from custom healthcare software development to advanced predictive modeling.
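As a rough illustration of the de-identification and data minimization items in the list above, the sketch below keeps only an allow-list of features and replaces the patient ID with a keyed hash. The field names, the allow-list, and the key handling are all assumptions made for the example. On its own, code like this does not make a dataset HIPAA-compliant; it only shows the shape of the step.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields the model actually needs (data minimization).
ALLOWED_FEATURES = {"age", "sex", "diagnosis_code", "lab_value"}


def pseudonymize(patient_id: str, secret_key: bytes) -> str:
    """Keyed hash: the same patient always maps to the same token,
    but the token cannot be recomputed without the secret key."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, secret_key: bytes) -> dict:
    """Keep allow-listed features only, cap extreme ages, and attach a pseudonymous token."""
    clean = {k: v for k, v in record.items() if k in ALLOWED_FEATURES}
    if "age" in clean and clean["age"] >= 90:
        # HIPAA's Safe Harbor method groups ages 90 and over into one category.
        clean["age"] = 90
    clean["patient_token"] = pseudonymize(record["patient_id"], secret_key)
    return clean


# Example with made-up values; in practice the key would come from a secrets manager.
raw = {
    "patient_id": "MRN-001",
    "name": "Jane Doe",
    "ssn": "000-00-0000",
    "age": 93,
    "sex": "F",
    "diagnosis_code": "E11.9",
}
safe = deidentify(raw, secret_key=b"demo-key-not-for-production")
```

Using an allow-list rather than a block-list is a deliberate choice here: a new identifying field added upstream is dropped by default instead of leaking through.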

Validating Models for Real-World Clinical Use

An AI model that performs flawlessly in the lab can fall apart when it hits the messy, unpredictable reality of a live hospital. This gap between the training data and the bedside is where even the most promising AI tools fail. The only way to bridge this divide is through rigorous, multi-stage validation—it’s how you ensure a tool isn't just technically clever but clinically safe and genuinely useful.

The process has to start with deep technical validation, which means looking far beyond a simple accuracy score. Accuracy just tells you how often the model gets it right overall, a metric that can be dangerously misleading, especially with the imbalanced datasets common in healthcare. For a real sense of a model's performance, your data science and clinical teams need to dig into a much richer set of metrics.

Key Technical Validation Metrics to Scrutinize:

- Precision: When the model flags something as positive, how often is it actually correct? High precision is vital for cutting down on false alarms that trigger needless follow-ups, waste resources, and contribute to clinician burnout.

- Recall (Sensitivity): Of all the actual positive cases in the data, how many did the model catch? In situations where missing a diagnosis is catastrophic, high recall is simply non-negotiable.

- Calibration: Does the model know what it doesn’t know? A well-calibrated model that gives a 95% confidence score should be right about 95% of the time. This is fundamental for building clinical trust.
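A small worked example can show why accuracy alone misleads. The sketch below uses only the Python standard library and made-up data: a model that predicts "negative" for everyone scores 95% accuracy on a dataset with 5% prevalence, while its recall is zero. For calibration it uses expected calibration error (ECE) on the predicted probability of the positive class, which is one common way to quantify the idea, not the only one.

```python
def accuracy(labels, preds):
    return sum(1 for y, p in zip(labels, preds) if y == p) / len(labels)


def precision_recall(labels, preds):
    tp = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(labels, preds) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(labels, preds) if y == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def expected_calibration_error(labels, probs, n_bins=10):
    """Weighted average gap, per probability bin, between the mean
    predicted probability and the observed rate of positives."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(labels, probs):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((y, p))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        observed = sum(y for y, _ in b) / len(b)
        predicted = sum(p for _, p in b) / len(b)
        ece += (len(b) / len(labels)) * abs(observed - predicted)
    return ece


# Imbalanced toy dataset: 95 negatives, 5 positives, model always says "negative".
labels = [0] * 95 + [1] * 5
preds = [0] * 100
acc = accuracy(labels, preds)                # high, despite missing every case
prec, rec = precision_recall(labels, preds)  # both zero
```

An ECE near zero means the stated probabilities track reality; a model that says 0.9 for cases that turn out positive only 75% of the time would show a gap of about 0.15 in that bin.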

Only after a model has proven its technical chops on paper can you even think about moving it anywhere near a patient.

From the Lab to the Live Ward

This next stage, clinical evaluation, is where the rubber truly meets the road. It’s no longer about code; it’s about care. The question shifts from "Is the algorithm correct?" to "Does this tool actually help my team and improve patient outcomes?" The best way to get that answer is through carefully planned pilot studies or, even better, a silent trial.

A silent trial is often the perfect first step. The AI runs quietly in the background on live clinical data, making its predictions without anyone on the floor seeing them. This lets you directly compare the AI's "decisions" against what your human experts actually did and what happened to the patient—all without any risk of influencing care. It’s a low-stakes way to find major flaws before they can do any harm. For example, you might discover a diagnostic model consistently underperforms for a specific demographic, a critical finding that sends it straight back for retraining.
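In code terms, a silent trial boils down to logging the model's output next to the clinician's decision and the eventual outcome, without ever surfacing the prediction. The sketch below is a hypothetical in-memory version; the class and field names are invented for illustration, and a real system would write to an audited datastore instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShadowRecord:
    encounter_id: str
    group: str                      # demographic or clinical subgroup
    model_pred: int
    clinician_decision: int
    outcome: Optional[int] = None   # filled in later, once the true outcome is known


class SilentTrial:
    def __init__(self):
        self._log = {}

    def record(self, encounter_id, group, model_pred, clinician_decision):
        """Store the prediction for later analysis. Returns nothing,
        so the prediction never reaches the clinical UI."""
        self._log[encounter_id] = ShadowRecord(
            encounter_id, group, model_pred, clinician_decision
        )

    def add_outcome(self, encounter_id, outcome):
        self._log[encounter_id].outcome = outcome

    def summary(self):
        """Per-group counts for encounters whose outcome is known."""
        stats = {}
        for r in self._log.values():
            if r.outcome is None:
                continue
            s = stats.setdefault(
                r.group,
                {"n": 0, "agree": 0, "model_correct": 0, "clinician_correct": 0},
            )
            s["n"] += 1
            s["agree"] += int(r.model_pred == r.clinician_decision)
            s["model_correct"] += int(r.model_pred == r.outcome)
            s["clinician_correct"] += int(r.clinician_decision == r.outcome)
        return stats
```

Breaking the summary down by group is what lets a pattern like "consistently underperforms for a specific demographic" show up before anyone has acted on the tool's output.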

The Human Side of Validation

The single most important factor here is getting your clinicians involved early and keeping them involved. An AI tool, no matter how accurate, is a failure if doctors and nurses don't trust it or if it gums up their workflow. This is why we build our entire AI Product Development Workflow around a human-centered design process. You have to bring clinicians to the table to help design the pilot, give raw feedback, and make sense of the results.

This collaboration uncovers the subtle but critical issues that raw data will always miss.

Responsible AI in the clinic isn't just a nice idea—it’s backed by hard numbers. We're talking about error reductions of up to 86% and the potential to save 250,000 lives by 2030 with properly validated tools. The momentum is real; by March 2025, 92% of provider health systems were already deploying, implementing, or piloting AI ambient scribes. You can read more in the 2025 AI Index Report.

This fast-paced adoption is exactly why getting validation right is so urgent. During a trial, you have to be on the lookout for unintended side effects. Does the AI add three extra clicks to a doctor’s EHR routine? Is the output confusing? These small frustrations can lead to clinicians creating workarounds or just ignoring the tool completely.

We’ve seen it time and again across our real-world use cases: the AI tools that stick are the ones that feel like they belong in the clinical rhythm. That's why a robust validation process, supported by platforms like our AI model validation tool, is so essential. It’s about moving beyond just checking the model's math to truly evaluating its total impact on the entire system of care. This is what turns a promising algorithm into a trusted clinical partner.

From Deployment to Daily Use: Integrating and Monitoring Clinical AI

Getting an AI model deployed isn't the finish line—it's the starting gun. The real work of responsible AI begins the moment an algorithm goes live in a clinical setting. This is where we shift from development to vigilant oversight, creating a system that ensures the tool remains safe, effective, and trusted for its entire lifespan.

Success hinges on making the AI a seamless part of the clinical workflow. If a tool is clunky or disruptive, clinicians will find workarounds, and it'll gather dust no matter how accurate it is. That’s why a thoughtful change management strategy is so critical. Training needs to go beyond just the "how-to" and dig into the "why," clearly explaining the model's strengths, its known limitations, and the specific scenarios where it can really make a difference.

This end-to-end management is a core part of what we do in our AI Automation as a Service offering. We handle the complete lifecycle to make sure these tools deliver sustained value and see high adoption rates.

Building Your Post-Deployment Oversight Framework

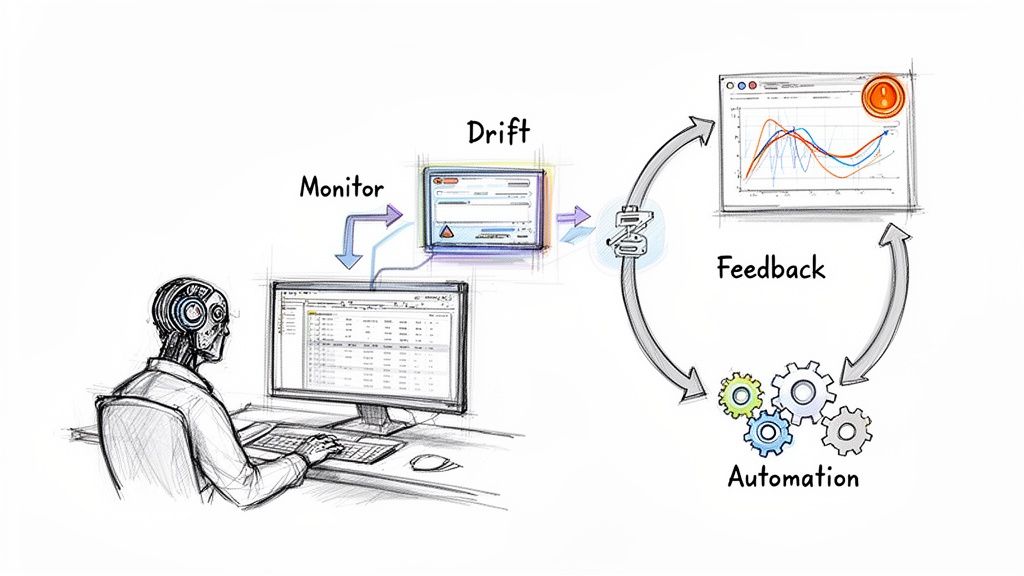

Once an AI is up and running, you have to watch it like a hawk. Healthcare isn't static. Patient populations shift, clinical guidelines get updated, and new imaging equipment is installed. Any of these changes can cause a once-brilliant model to slowly degrade in a process we call model drift. Without a robust monitoring plan, this decay can go completely unnoticed, silently undermining patient care.

Your post-deployment strategy needs to track key metrics in near real-time.

Key Areas to Monitor:

- Performance Metrics: Keep a constant eye on core metrics like precision and recall to catch any dips in performance the moment they happen.

- Data Drift: Monitor the data coming into the model. Is it consistent with the data it was trained on? A sudden change in patient demographics, for instance, is a major red flag.

- Bias Audits: Don't just check for bias once. Regularly re-evaluate how the model performs across different demographic groups to spot any new biases that might creep in over time.

- User Feedback: Give your clinicians a simple, direct way to report weird results, incorrect outputs, or workflow snags. Their on-the-ground insights are invaluable.
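For the data drift item in particular, one widely used check is the Population Stability Index (PSI), which compares the distribution of a feature at training time with what the model sees in production. The sketch below is a minimal version for a categorical feature. The age bands are made up, and the 0.1 and 0.25 cut-offs in the comment are a common rule of thumb from industry practice, not a clinical standard.

```python
import math
from collections import Counter


def population_stability_index(baseline, current, eps=1e-6):
    """PSI between two samples of a categorical feature.
    Rule of thumb (an assumption, tune for your setting):
    < 0.1 stable, 0.1 to 0.25 worth a look, > 0.25 significant shift."""
    categories = set(baseline) | set(current)
    b_counts, c_counts = Counter(baseline), Counter(current)
    psi = 0.0
    for cat in categories:
        # eps avoids log(0) when a category is missing from one sample.
        b = max(b_counts[cat] / len(baseline), eps)
        c = max(c_counts[cat] / len(current), eps)
        psi += (c - b) * math.log(c / b)
    return psi


# Training-time age mix vs. a hypothetical month of production traffic.
training_ages = ["<40"] * 50 + ["40-65"] * 30 + [">65"] * 20
live_ages = ["<40"] * 20 + ["40-65"] * 30 + [">65"] * 50

psi = population_stability_index(training_ages, live_ages)
needs_review = psi > 0.25
```

Here the patient mix has shifted sharply toward older patients, so the check trips. The same function can be run on a schedule for each monitored input, with a tripped threshold routed to the review team.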

This continuous oversight is what turns a one-off project into a sustainable solution. It's an active, ongoing commitment that builds deep, lasting trust with your clinical teams.

Planning for When Things Go Wrong

Let’s be realistic: no AI is perfect. Sooner or later, a tool is going to produce a wrong or confusing result. How you respond in that moment is what truly defines a responsible AI strategy. Having a well-defined incident response plan isn't just a good idea; it's non-negotiable.

An incident response plan isn't about pointing fingers. It’s about fast, transparent problem-solving. It ensures a single error becomes a learning opportunity that strengthens the entire system and protects future patients.

Your plan needs to be clear, actionable, and understood by everyone involved, from the frontline nurse to the data science team. It should lay out immediate steps for clinicians, like how to override an AI recommendation and easily flag the event. That flag must trigger an automatic alert to a dedicated review team—a mix of clinical and technical experts—who can perform a rapid root cause analysis.
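The override-and-flag step described above can be sketched as a small event log that notifies a review team whenever a clinician flags an AI recommendation. Everything here is hypothetical: the class names, the fields, and the `notify` callback stand in for whatever paging or ticketing system your organization actually uses.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class Incident:
    model_id: str
    encounter_id: str
    ai_recommendation: str
    clinician_action: str
    note: str
    reported_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    status: str = "open"  # later moved to e.g. "under_review" or "resolved"


class IncidentLog:
    def __init__(self, notify: Callable[[Incident], None]):
        self._notify = notify            # stand-in for a real alerting channel
        self.incidents: List[Incident] = []

    def flag_override(self, model_id, encounter_id, ai_recommendation,
                      clinician_action, note=""):
        """Record the override and alert the review team right away."""
        incident = Incident(model_id, encounter_id, ai_recommendation,
                            clinician_action, note)
        self.incidents.append(incident)
        self._notify(incident)
        return incident

    def open_count(self, model_id):
        """Number of unresolved incidents for one model."""
        return sum(1 for i in self.incidents
                   if i.model_id == model_id and i.status == "open")


# Usage: collect alerts in a list instead of paging anyone.
alerts = []
log = IncidentLog(notify=alerts.append)
incident = log.flag_override(
    model_id="sepsis-risk-v2",           # made-up model name
    encounter_id="enc-123",
    ai_recommendation="low risk",
    clinician_action="escalated to ICU",
    note="Vitals inconsistent with score",
)
```

Counting open incidents per model gives the review team a simple trigger for the heavier options, such as retraining or taking the tool offline.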

What happens next? It could be anything from a simple software patch to a more intensive model retraining, or even pulling the tool offline temporarily. This proactive approach to managing failures is absolutely central to maintaining clinician trust and ensuring long-term patient safety. We embed this level of lifecycle management into every system we build, especially for crucial internal tooling, to ensure they are resilient and reliable. By planning for imperfection, you build a much stronger AI ecosystem.

Your AI Deployment and Vendor Evaluation Checklist

Choosing the right partners is a make-or-break decision for any clinical AI initiative. Whether you're bringing in third-party AI tools for business or building your own internal tooling, a tough, thorough evaluation process is your best line of defense. It's what keeps unsafe, ineffective, or non-compliant solutions out of your hospital.

Think of this as vetting a long-term partner who will have a direct hand in patient outcomes, not just making a one-off purchase. Your job is to cut through the marketing fluff and get to the hard evidence of a product's safety, real-world effectiveness, and ethical grounding.

Key Areas for Vendor Due Diligence

When you sit down with a potential vendor, you need a structured approach. I've found it's best to organize the conversation around a few core areas. Probing these domains gives you a clear picture of their technology's maturity and their genuine commitment to doing things the right way. A quick, surface-level review here is a recipe for clinical and financial pain later.

Here’s what I always focus on:

- Regulatory & Compliance: First things first. Is the solution cleared for clinical use? You need to see the official documentation, like an FDA 510(k) clearance or CE mark. If a vendor is cagey about their regulatory status, that's a huge red flag.

- Clinical Validation: Don't just accept the glossy marketing summary. Ask for the full, peer-reviewed clinical validation studies. The real question is: was this tool proven effective in a setting that actually looks like yours? The patient demographics and the clinical environment in the study matter immensely.

- Data Security & Privacy: This is non-negotiable. How are they going to protect your patients' data? The vendor must provide crystal-clear documentation on their security architecture, data encryption methods, and ironclad compliance with regulations like HIPAA.

- Transparency & Explainability: Can the vendor explain how the algorithm arrives at its conclusions in a way your doctors can actually understand and trust? "Black box" models that provide answers with no reasoning are a massive risk in a clinical setting.

- Integration & Support: How much of a headache will it be to get this tool to work with your EMR and existing clinical workflows? You need to know exactly what kind of technical support and training they'll provide, both during the rollout and for the long haul.

The Vendor Evaluation Checklist for Clinical AI

To make this process more concrete, I've put together a checklist. Use this table as a guide during vendor demos and follow-up conversations to ensure you cover all your bases.

| Evaluation Criteria | Key Questions to Ask | Red Flags to Watch For |
|---|---|---|
| Clinical Evidence | Can you share the full, peer-reviewed validation studies? In what patient populations was this validated? | Citing only internal white papers; studies in irrelevant patient populations. |
| Regulatory Status | What is your specific FDA clearance or CE mark classification? Can we see the documentation? | Vague answers like "FDA compliant" instead of "FDA cleared"; reluctance to share documents. |
| Data Handling | Where will our data be stored? Is it encrypted at rest and in transit? Who has access? | Unclear data governance policies; refusal to sign a Business Associate Agreement (BAA). |
| Model Explainability | How does the model work? Can clinicians understand the 'why' behind its recommendations? | Describing the tool as a "black box"; inability to explain influential features. |
| Integration & Workflow | Can you provide a live demo of the integration with our EMR system? What is the average go-live time? | Generic demo environments; lack of experience with your specific EMR vendor. |
| Post-Launch Support | What does your support model look like? What are the defined SLAs for uptime and issue response? | No dedicated support team; vague or missing performance guarantees in the SLA. |
| Ethical Framework | How was the model tested for bias across different demographic groups (race, sex, age)? | No data or analysis on fairness and bias; training data from a single, homogenous source. |

This checklist isn't just about ticking boxes. It's about sparking the right conversations that reveal a vendor's true capabilities and their commitment to being a responsible partner.

Don't Skim the Fine Print: Contracts and SLAs

The legal agreements are where promises get turned into binding commitments. Your contract and Service Level Agreement (SLA) must spell out who is responsible for what, with no room for ambiguity.

The contract should clearly define the vendor’s duties for ongoing model monitoring, performance guarantees, and, crucially, the protocol for what happens if there’s an AI-related adverse event.

A vendor's willingness to put transparent performance metrics and clear lines of accountability into a legally binding contract tells you everything you need to know. It's the ultimate sign of confidence in their own product and a commitment to a real partnership.

Finally, remember that responsible deployment is a continuous effort, not a one-time project. To see how this fits into the bigger picture, from initial idea to clinical reality, check out our in-depth guide on the AI Product Development Workflow. If you have more questions, don't hesitate to reach out to our team of experts, who are always ready to help you navigate your AI journey. You can learn more about them on our team page.

Frequently Asked Questions

What is the first step in responsible clinical AI deployment?

The very first, most critical step is building a solid governance framework. This means putting together an interdisciplinary committee—think clinicians, ethicists, data scientists, and administrators—to define your organization's ethical AI principles, set clear policies for data and model transparency, and establish a formal process for approving any new AI projects before they get off the ground.

How can we measure the ROI of a responsible AI initiative?

Measuring the ROI here is about more than just dollars and cents. You need to track a broader set of metrics: 1) Clinical Outcomes (like fewer diagnostic errors or faster patient recovery), 2) Operational Efficiency (such as less administrative work for staff or quicker report generation), and 3) Compliance & Risk Reduction (meaning a lower chance of litigation and better adherence to regulations). Tracking these KPIs gives you the full picture of an AI's value.

How do we handle a situation where a clinical AI tool provides an incorrect recommendation?

This is where your pre-defined incident response plan kicks in. The plan must outline how to: 1) Let clinicians easily override the AI and report the error, 2) Instantly alert a dedicated review team of clinical and technical experts, 3) Dive in and figure out the root cause (was it data drift? a model flaw?), and 4) Roll out corrective actions, whether that’s retraining the model or taking it offline for a bit. Being transparent with both staff and any affected patients is also key.